This repository contains the code to estimate and analyze component attributions, as introduced in our paper:

Decomposing and Editing Predictions by Modeling Model Computation

Harshay Shah, Andrew Ilyas, Aleksander Madry

Paper: https://arxiv.org/abs/2404.11534

Blog post: gradientscience.org/modelcomponents


How does the internal computation of deep neural networks transform examples into predictions?

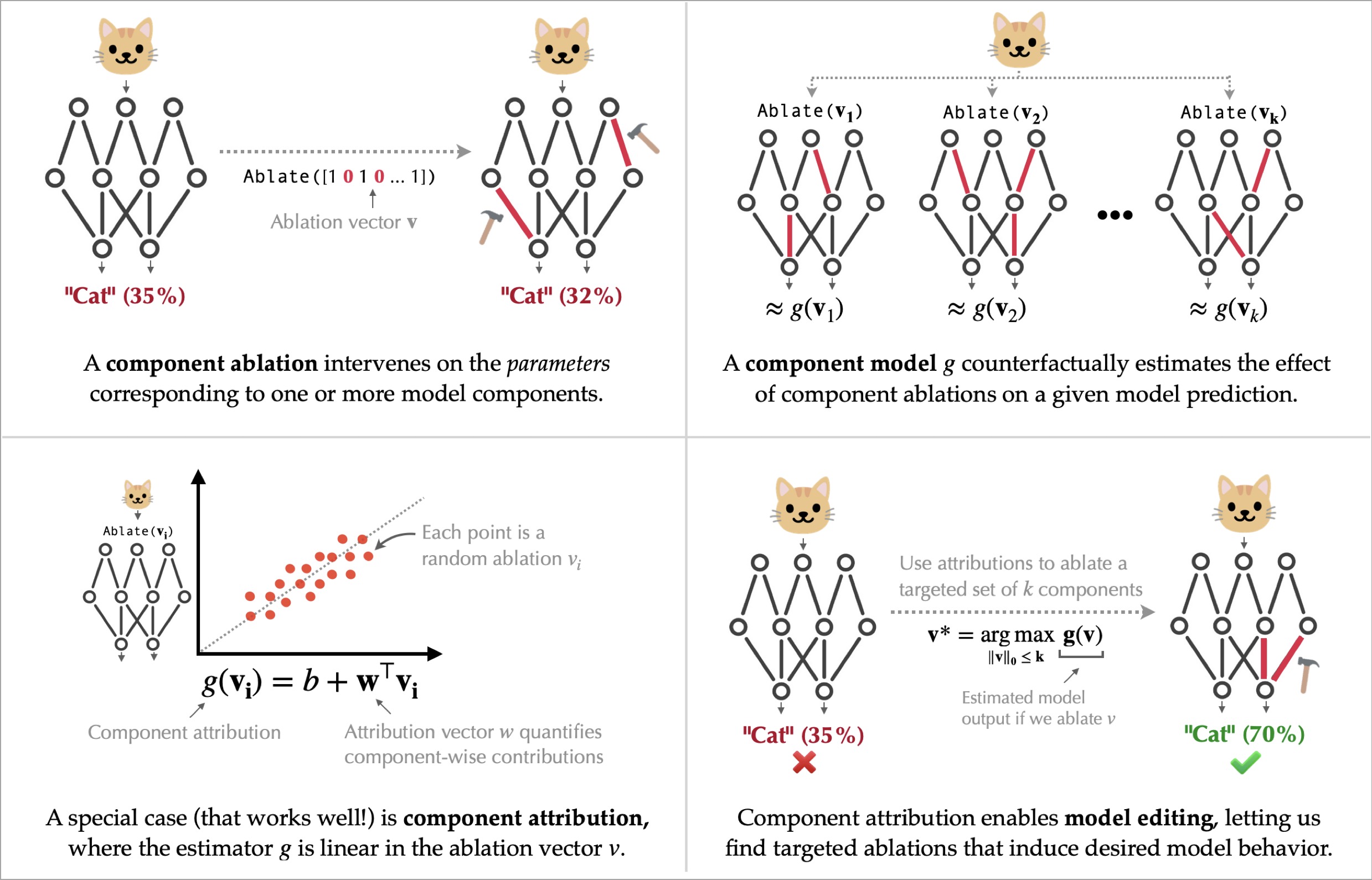

In our paper, we attempt to better characterize this computation by decomposing predictions in terms of model components, i.e., architectural "building blocks" such as convolution filters or attention heads that carry out a model's computation. We formalize our high-level goal of understanding how model components shape a given prediction into a concrete task called component modeling, described below.

Component models. The goal of a component model is to answer counterfactual questions like "what would happen to my image classifier on a given example if I ablated a specific set of convolution filters?" without intervening on the model computation. Given an example, a component attribution (a linear component model) is a linear counterfactual estimator that (a) assigns a "score" to each model component and (b) estimates the counterfactual effect of ablating a set of components on the example's prediction as a sum over component-wise scores.
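To make the additive form concrete, here is a minimal Python sketch of how a linear component model turns per-component scores into a counterfactual estimate. The function name, the NumPy representation, and the example scores are hypothetical; the paper's exact parameterization (for instance, how a bias term and the sign convention are handled) may differ.

```python
import numpy as np


def estimate_ablation_effect(scores, ablated):
    """Hypothetical linear component model: the estimated effect of ablating
    the components in `ablated` is the sum of their component-wise scores."""
    scores = np.asarray(scores, dtype=float)
    idx = np.asarray(list(ablated), dtype=int)  # indices of ablated components
    return float(scores[idx].sum())


scores = [0.5, -1.0, 2.0, 0.25]  # hypothetical scores for four components
print(estimate_ablation_effect(scores, {1, 2}))  # 1.0
```

Because the estimate is a plain sum, evaluating it for a new subset costs one indexed sum rather than a forward pass through the ablated model.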

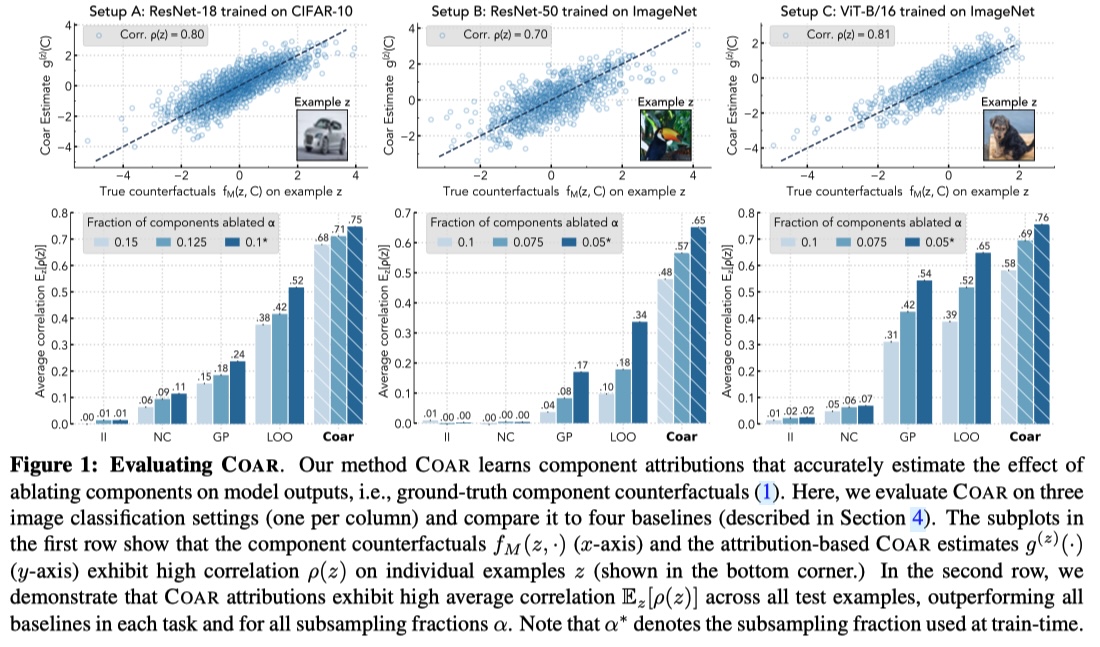

Estimating component attributions with Coar. We develop a scalable method for estimating component attributions called Coar. In Section 4 of our paper, we show that Coar yields accurate component attributions on large-scale vision and language models such as ImageNet ViTs and Phi-2. In this repository, we provide pre-computed component attributions as well as code for applying Coar from scratch and evaluating the resulting attributions.

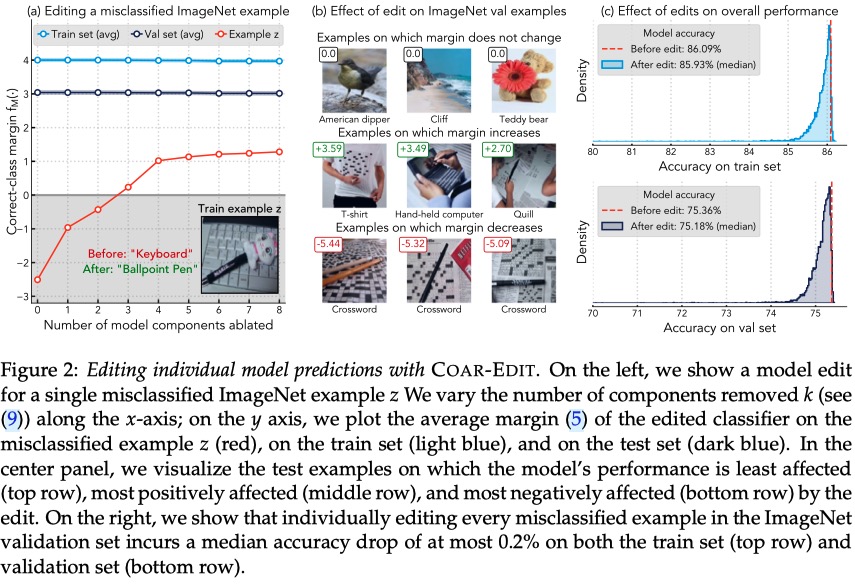

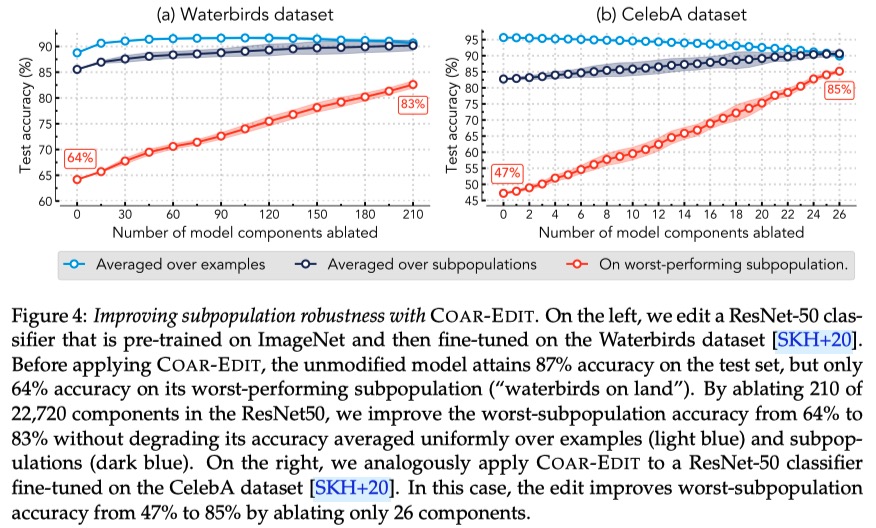

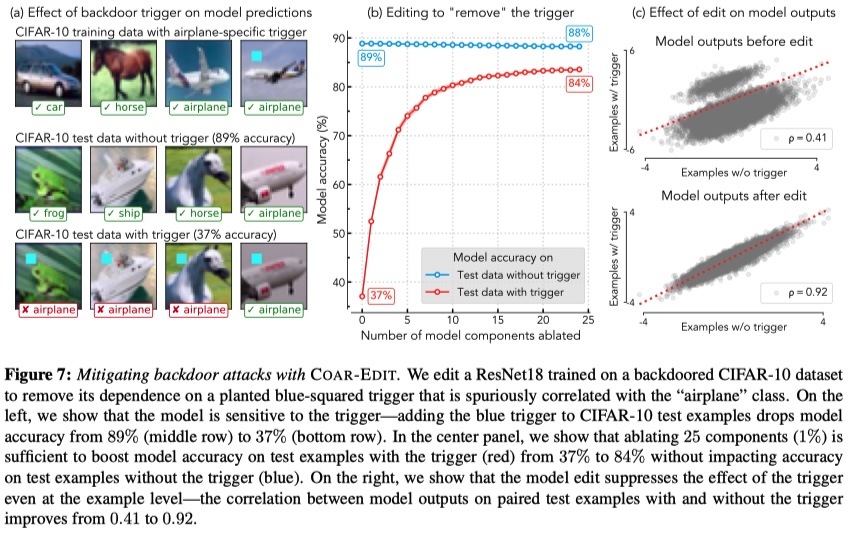

Editing models with component attributions. We also develop Coar-Edit, a simple three-step procedure for model editing using Coar attributions. Section 5 of our paper applies Coar-Edit to editing tasks such as fixing model errors, improving subpopulation robustness, and improving robustness to backdoor attacks. Here, we provide notebooks to reproduce (and extend) these experiments.

Our code relies on the FFCV Library. To install this library along with other dependencies including PyTorch, follow the instructions below:

```bash
conda create -n <ENVNAME> python=3.9 cupy pkg-config libjpeg-turbo opencv pytorch torchvision cudatoolkit=11.6 numba tqdm matplotlib seaborn jupyterlab ipywidgets cudatoolkit-dev scikit-learn scikit-image -c conda-forge -c pytorch
conda activate <ENVNAME>
conda update ffmpeg
git clone git@github.com:madrylab/model_components.git
cd model_components
pip install -r requirements.txt
```

Estimating Coar attributions.

As shown above, we evaluate Coar, our method for estimating component attributions, on deep neural networks trained on image classification tasks, as well as on language models.

We provide code in coar/ to compute Coar attributions from scratch for each of the setups below:

| 🧪 Experiment Setup | 🧩 Code for estimating Coar attributions |
|---|---|
| ResNet18 trained on CIFAR-10 (Setup A in Section 4) | coar/estimate/cifar_resnet/ |
| ResNet50 trained on ImageNet (Setup B in Section 4) | coar/estimate/imagenet_resnet/ |
| ViT-B/16 trained on ImageNet (Setup C in Section 4) | coar/estimate/imagenet_vit/ |
| GPT-2 evaluated on TinyStories (Appendix I.1) | coar/estimate/gpt2_tinystories/ |

For each setup, we compute Coar attributions (one per test example) in three stages:

- Initialization: We first use a user-defined JSON specification file to initialize a directory of data stores, i.e., memory-mapped numpy arrays.
- Dataset construction: We then use the data stores from above to incrementally build a "component dataset" for every example. Specifically, we use a Python script to construct component datasets containing tuples of (a) $\alpha$-sized random subsets of model components $C'$ and (b) the outputs of the model after ablating the components in subset $C'$.
- Regression: We use the datasets described above to estimate component attributions (one per example) using fast_l1, a SAGA-based GPU solver for linear regression.
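The three stages can be sketched end to end on a toy problem. This is a minimal illustration under several assumptions, not the code in coar/: the JSON schema and store names are hypothetical, `toy_model` is an exactly linear stand-in for running a network with the components in $C'$ ablated, and NumPy's ordinary least squares (`np.linalg.lstsq`) replaces the fast_l1 solver.

```python
import json
import os
import tempfile

import numpy as np

# Hypothetical spec: the real JSON schema used by coar/ is not shown in this README.
SPEC = json.loads("""
{
  "num_samples": 500,
  "num_components": 20,
  "alpha": 0.1,
  "stores": {
    "masks": {"dtype": "bool", "shape": ["num_samples", "num_components"]},
    "outputs": {"dtype": "float64", "shape": ["num_samples"]}
  }
}
""")


def init_stores(spec, root):
    """Stage 1: create one memory-mapped .npy array per store listed in the spec."""
    os.makedirs(root, exist_ok=True)
    stores = {}
    for name, cfg in spec["stores"].items():
        shape = tuple(spec[dim] for dim in cfg["shape"])
        path = os.path.join(root, f"{name}.npy")
        stores[name] = np.lib.format.open_memmap(
            path, mode="w+", dtype=cfg["dtype"], shape=shape)
    return stores


def fill_stores(stores, model_output, alpha, rng):
    """Stage 2: ablate random alpha-fraction subsets C' and record the model output."""
    masks, outputs = stores["masks"], stores["outputs"]
    n_samples, n_components = masks.shape
    k = max(1, round(alpha * n_components))
    for i in range(n_samples):
        ablated = rng.choice(n_components, size=k, replace=False)
        masks[i] = False
        masks[i, ablated] = True  # True marks a component in C'
        outputs[i] = model_output(masks[i])
    masks.flush()
    outputs.flush()


def fit_attributions(masks, outputs):
    """Stage 3: least-squares fit of output ~ mask @ scores + bias (stand-in for fast_l1)."""
    X = np.asarray(masks, dtype=np.float64)
    X = np.hstack([X, np.ones((X.shape[0], 1))])  # append an intercept column
    coef, *_ = np.linalg.lstsq(X, np.asarray(outputs, dtype=np.float64), rcond=None)
    return coef[:-1], coef[-1]  # per-component scores, bias


rng = np.random.default_rng(0)
true_scores = rng.normal(size=SPEC["num_components"])


def toy_model(ablated_mask):
    """Hypothetical, exactly linear stand-in for running the ablated network."""
    return 3.0 + float(true_scores[ablated_mask].sum())


with tempfile.TemporaryDirectory() as root:
    stores = init_stores(SPEC, root)
    fill_stores(stores, toy_model, SPEC["alpha"], rng)
    scores, bias = fit_attributions(stores["masks"], stores["outputs"])
    del stores  # release the memory maps before the directory is removed
```

Because every sampled subset has the same size $k$, the bias and a uniform shift of all scores cannot be told apart from these data alone. Predictions for other subsets of size $k$ are unaffected, so it is safer to compare predicted and actual outputs on held-out subsets than to compare raw coefficients.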

For a more detailed description of each stage, check out our mini-tutorial here.

Evaluating Coar attributions:

We provide code for evaluating Coar attributions in coar/evaluate.

Specifically, our evaluation quantifies the extent to which Coar attributions can accurately predict the counterfactual effect of ablating random subsets of model components.
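One way to score such predictions, assumed here rather than taken from coar/evaluate, is the Pearson correlation between the outputs predicted by an attribution and the outputs actually observed across held-out ablations. The function name and the mask convention (1 marks an ablated component) are hypothetical.

```python
import numpy as np


def attribution_correlation(scores, bias, heldout_masks, heldout_outputs):
    """Pearson correlation between attribution-predicted and observed outputs."""
    masks = np.asarray(heldout_masks, dtype=float)  # (n_subsets, n_components), 1 = ablated
    preds = masks @ np.asarray(scores, dtype=float) + bias
    observed = np.asarray(heldout_outputs, dtype=float)
    return float(np.corrcoef(preds, observed)[0, 1])


masks = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 0]]
observed = [3.0, 5.0, 7.0, 7.0]  # hypothetical outputs measured after each ablation
print(attribution_correlation([1.0, 2.0, 3.0], 0.0, masks, observed))
```

Correlation rewards getting the ranking and relative size of counterfactual effects right even when the predictions are off by a constant offset or scale, which is why it is a convenient summary here.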

🚧 Coming soon 🚧: A pip-installable package for estimating and evaluating Coar attributions on any model, dataset, and task in a streamlined manner.

We also open-source a set of Coar-estimated component attributions that we use in our paper:

| 💾 Dataset | 🔗 Model | 🧮 Component attributions using Coar |
|---|---|---|
| CIFAR-10 [url] | ResNet model trained from scratch [module] [.pt] | Components: 2,304 conv filters; Attributions: [test.pt] |
| ImageNet [url] | torchvision.models.ResNet50_Weights.IMAGENET1K_V1 [url] | Components: 22,720 conv filters; Attributions: [val.pt] |
| ImageNet [url] | torchvision.models.ViT_B_16_Weights.IMAGENET1K_V1 [url] | Components: 82,944 neurons; Attributions: [val.pt] |
| CelebA [url] | Fine-tuned ImageNet ResNet50 torchvision model [.pt] | Components: 22,720 conv filters; Attributions: [train.pt] [test.pt] |
| Waterbirds [url] | Fine-tuned ImageNet ResNet50 torchvision model [.pt] | Components: 22,720 conv filters; Attributions: [train.pt] [test.pt] |
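The 22,720 conv filters listed for ResNet-50 agree with a tally of the torchvision ResNet-50 architecture that leaves out the 1×1 projection convolutions on the shortcut (downsample) paths. That exclusion is our inference from the arithmetic below, not something stated in this README; the constants describe the standard ResNet-50 layout.

```python
# Standard torchvision ResNet-50 layout: a stem conv plus four stages of bottleneck blocks.
STEM_FILTERS = 64
STAGES = [(3, 64), (4, 128), (6, 256), (3, 512)]  # (num_blocks, bottleneck width)
EXPANSION = 4  # bottleneck output channels = EXPANSION * width


def resnet50_conv_filters(include_shortcut_convs):
    """Count output channels (filters) over the conv layers of ResNet-50."""
    total = STEM_FILTERS
    for num_blocks, width in STAGES:
        # each block: 1x1 conv (width) -> 3x3 conv (width) -> 1x1 conv (EXPANSION * width)
        total += num_blocks * (width + width + EXPANSION * width)
        if include_shortcut_convs:
            # the first block of every stage has a 1x1 projection on its shortcut
            total += EXPANSION * width
    return total


print(resnet50_conv_filters(include_shortcut_convs=False))  # 22720
print(resnet50_conv_filters(include_shortcut_convs=True))   # 26560
```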

Alternatively, to download all models and component attributions in one go, run:

```bash
REPO_DIR=/path/to/local/repo
mkdir -p "$REPO_DIR/data"
wget 'https://www.dropbox.com/scl/fo/8ylssq64bbutqb8jzzs7p/h?rlkey=9ekfyo9xzxclvga4hw5iedqx0&dl=1' -O "$REPO_DIR/data/data.zip"
unzip -o "$REPO_DIR/data/data.zip" -d "$REPO_DIR/data" 2>/dev/null
rm -f "$REPO_DIR/data/data.zip"
```

In our paper, we show that Coar-estimated attributions directly enable model editing. Specifically, we outline a simple three-step procedure for model editing called Coar-Edit and demonstrate its effectiveness on six editing tasks ranging from fixing model errors to mitigating backdoor attacks.

In this repository, we provide Jupyter notebooks in coar/edit/ to reproduce our model editing experiments using the pre-computed component attributions from above.

Specifically, we provide notebooks for the following experiments:

| 🧪 Experiment | 📓 Jupyter Notebook |
|---|---|
| Fixing a model error (Section 5.1) | coar/edit/model_errors.ipynb |
| Improving subpopulation robustness (Section 5.3) | coar/edit/subpop_robustness.ipynb |
| Mitigating backdoor attacks (Section 5.4) | coar/edit/backdoor_attack.ipynb |

🚧 Coming soon 🚧: Notebooks for reproducing two more experiments from our paper: Selectively forgetting classes (Section 5.2); Improving CLIP robustness to typographic attacks (Section 5.5).

If you use this code in your work, please cite using the following BibTeX entry:

```bibtex
@article{shah2024decomposing,
  title={Decomposing and Editing Predictions by Modeling Model Computation},
  author={Shah, Harshay and Ilyas, Andrew and Madry, Aleksander},
  journal={arXiv preprint arXiv:2404.11534},
  year={2024}
}
```