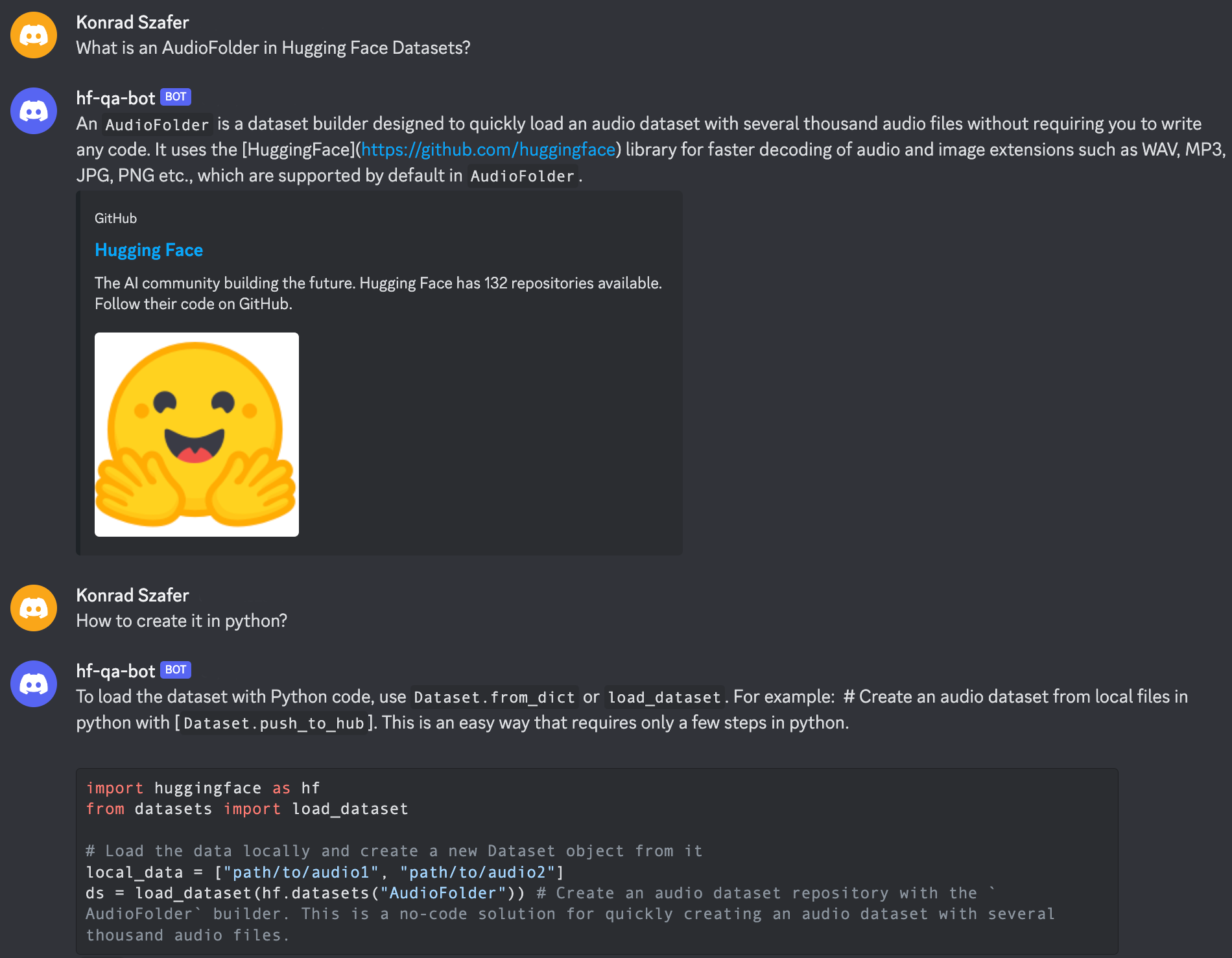

A multi-interface Q&A system that uses Hugging Face's LLM and Retrieval Augmented Generation (RAG) to deliver answers based on Hugging Face documentation. Operable as an API, Discord bot, or Gradio app, it also provides links to the documentation used to formulate each answer.

To execute any of the available interfaces, specify the required parameters in the .env file based on the .env.example located in the config/ directory. Alternatively, you can set these as environment variables:

- `QUESTION_ANSWERING_MODEL_ID` - (str) Either the model ID from the Hugging Face Hub or the directory containing the model weights
- `EMBEDDING_MODEL_ID` - (str) Embedding model ID from the Hugging Face Hub. We recommend using `hkunlp/instructor-large`
- `INDEX_REPO_ID` - (str) Repository ID from the Hugging Face Hub where the index is stored. The most current indexes are listed in the Indexes section
- `PROMPT_TEMPLATE_NAME` - (str) Name of the model prompt template used for question answering. Templates are stored in the `config/api/prompt_templates` directory
- `USE_DOCS_FOR_CONTEXT` - (bool) Use retrieved documents as context for a given query
- `NUM_RELEVANT_DOCS` - (int) Number of retrieved documents to use as context when `USE_DOCS_FOR_CONTEXT` is enabled
- `ADD_SOURCES_TO_RESPONSE` - (bool) Include the sources of the retrieved documents used as context for a given query
- `USE_MESSAGES_IN_CONTEXT` - (bool) Use chat history for a conversational experience
- `DEBUG` - (bool) Provides additional logging
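As an illustration of how the typed parameters above might be read, here is a minimal sketch that parses them from a mapping of environment variables. The helper names `parse_bool` and `load_settings`, the accepted truthy spellings, and every default value are assumptions made for this example, not the project's actual configuration code.

```python
import os


def parse_bool(value: str) -> bool:
    """Treat common truthy spellings as True; anything else is False (assumed convention)."""
    return value.strip().lower() in {"1", "true", "yes", "on"}


def load_settings(env=None) -> dict:
    """Read the question-answering settings from a mapping (os.environ by default)."""
    env = os.environ if env is None else env
    return {
        # Required string parameters: a missing one raises KeyError.
        "question_answering_model_id": env["QUESTION_ANSWERING_MODEL_ID"],
        "index_repo_id": env["INDEX_REPO_ID"],
        "prompt_template_name": env["PROMPT_TEMPLATE_NAME"],
        # The README recommends this embedding model; using it as a default is an assumption.
        "embedding_model_id": env.get("EMBEDDING_MODEL_ID", "hkunlp/instructor-large"),
        # All defaults below are illustrative assumptions.
        "use_docs_for_context": parse_bool(env.get("USE_DOCS_FOR_CONTEXT", "true")),
        "num_relevant_docs": int(env.get("NUM_RELEVANT_DOCS", "3")),
        "add_sources_to_response": parse_bool(env.get("ADD_SOURCES_TO_RESPONSE", "true")),
        "use_messages_in_context": parse_bool(env.get("USE_MESSAGES_IN_CONTEXT", "false")),
        "debug": parse_bool(env.get("DEBUG", "false")),
    }


# Placeholder values, for demonstration only.
example_env = {
    "QUESTION_ANSWERING_MODEL_ID": "some-org/some-model",
    "INDEX_REPO_ID": "some-org/some-index",
    "PROMPT_TEMPLATE_NAME": "example_template",
    "NUM_RELEVANT_DOCS": "4",
    "DEBUG": "True",
}
settings = load_settings(example_env)
print(settings["num_relevant_docs"], settings["debug"])
```

Passing the mapping explicitly keeps the sketch testable without touching the real environment; calling `load_settings()` with no argument reads `os.environ` instead.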

Install the necessary dependencies from the requirements file:

    pip install -r requirements.txt

After completing all steps described in the Setting up section, set the APP_MODE environment variable to gradio and run the following command:

    python3 app.py

By default, the API is served at http://0.0.0.0:8000. To launch it, complete all the steps outlined in the Setting up section, then execute the following command:

    python3 -m api

To interact with the system as a Discord bot, add the additional required environment variables from the Discord bot section of the .env.example file in the config/ directory:

- `DISCORD_TOKEN` - (str) API key for the bot application
- `QA_SERVICE_URL` - (str) URL of the API service. We recommend using `http://0.0.0.0:8000`
- `NUM_LAST_MESSAGES` - (int) Number of messages used for context in conversations
- `USE_NAMES_IN_CONTEXT` - (bool) Include usernames in the conversation context
- `ENABLE_COMMANDS` - (bool) Allow commands, e.g., channel cleanup
- `DEBUG` - (bool) Provides additional logging
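To make the effect of `NUM_LAST_MESSAGES` and `USE_NAMES_IN_CONTEXT` concrete, the following sketch builds a conversation context from a message history. The function `build_context`, the `(author, text)` tuple format, and the `author: text` line layout are hypothetical choices for illustration; the bot's real context format may differ.

```python
from typing import List, Tuple


def build_context(
    messages: List[Tuple[str, str]],
    num_last_messages: int,
    use_names: bool,
) -> str:
    """Join the most recent messages into one context string.

    messages: (author, text) pairs, oldest first (assumed format).
    num_last_messages: how many trailing messages to keep.
    use_names: prefix each line with the author's name when True.
    """
    # Guard against zero or negative values, since messages[-0:] would keep everything.
    recent = messages[-num_last_messages:] if num_last_messages > 0 else []
    lines = [f"{author}: {text}" if use_names else text for author, text in recent]
    return "\n".join(lines)


history = [
    ("alice", "Hi"),
    ("bot", "Hello"),
    ("alice", "What is RAG?"),
]
print(build_context(history, num_last_messages=2, use_names=True))
```

With `use_names=False` the same call keeps only the message texts, which corresponds to disabling `USE_NAMES_IN_CONTEXT`.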

After completing all steps, run:

    python3 -m bot

To host the bot on Hugging Face Spaces, set the APP_MODE environment variable to discord; the bot is then started automatically from the app.py file.
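The APP_MODE switch can be pictured with a small sketch like the one below. It only validates the two mode values this README mentions (gradio and discord); the names `SUPPORTED_MODES` and `resolve_app_mode` are hypothetical, and the real app.py may select the interface differently.

```python
# Hypothetical mapping from APP_MODE values to the interface each one starts.
SUPPORTED_MODES = {
    "gradio": "Gradio app",
    "discord": "Discord bot",
}


def resolve_app_mode(raw_mode) -> str:
    """Normalize an APP_MODE value and reject anything unsupported."""
    mode = (raw_mode or "").strip().lower()
    if mode not in SUPPORTED_MODES:
        raise ValueError(
            f"APP_MODE must be one of {sorted(SUPPORTED_MODES)}, got {raw_mode!r}"
        )
    return mode


# In a real entry point the value would come from os.environ.get("APP_MODE").
print(SUPPORTED_MODES[resolve_app_mode("discord")])
```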

The following list contains the most current indexes that can be used for the system:

- All Hugging Face repositories over 50 Stars - 512-Character Chunks

- All Hugging Face repositories over 50 Stars - 812-Character Chunks
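The chunk sizes in the index names refer to the length, in characters, of each indexed text fragment. As a rough sketch of what fixed-size character chunking means, assuming plain non-overlapping slices (the splitter actually used to build these indexes is not described here and may differ):

```python
from typing import List


def chunk_text(text: str, chunk_size: int = 512) -> List[str]:
    """Split text into consecutive, non-overlapping chunks of at most chunk_size characters."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]


document = "a" * 1200
chunks = chunk_text(document, chunk_size=512)
print([len(chunk) for chunk in chunks])
```

Smaller chunks tend to give more focused retrieval results, while larger chunks carry more surrounding context per retrieved document.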

We use Python 3.10.

To install all necessary Python packages, run the following command:

    pip install -r requirements.txt

We use pipreqsnb to generate the requirements.txt file. To install pipreqsnb, run the following command:

    pip install pipreqsnb

To generate the requirements.txt file, run the following command:

    pipreqsnb --force .

To run unit tests, you can use the following command:

    pytest -o "testpaths=tests" --noconftest