This is a university project made to learn about machine learning and facial expression recognition.

The objective of this project was to build software that uses machine learning to recognize facial expressions in a real-time video stream (webcam).

We used the DeXpression CNN model from this paper. We trained the model on the MMI Facial Expression Database, which is a database of videos of people expressing emotions (link to the paper).

We also used an already trained implementation of the VGGFace model to compare its results with those of our model.

You can read the full report of the project here.

- tensorflow

- keras

- opencv

- numpy

Clone the repository and install the requirements:

git clone

cd FER_DeXpression

pip install -r requirements.txt

You can launch the program with the following command:

python main.py --model [dex|vgg]

If you want to train the model, we already have a file with the features extracted from the MMI database. You can use it with the following command:

cd data

python keras_deXpression.py

You can also create your own dataset and train the model with it.
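As an illustration of the `--model [dex|vgg]` option used to launch the program, here is a minimal sketch of how such a switch could be parsed with `argparse`. It is not the actual content of `main.py`: the function name `parse_args`, the help texts, and the default value are assumptions made for the example.

```python
import argparse


def parse_args(argv=None):
    """Parse the command line (hypothetical helper, not taken from main.py)."""
    parser = argparse.ArgumentParser(
        description="Real-time facial expression recognition"
    )
    parser.add_argument(
        "--model",
        choices=["dex", "vgg"],  # the two values documented in this README
        default="dex",           # assumption: the README does not state a default
        help="dex = DeXpression model, vgg = pretrained VGGFace model",
    )
    return parser.parse_args(argv)


# Passing an explicit list instead of reading sys.argv keeps the example reproducible.
args = parse_args(["--model", "vgg"])
print(f"Selected model: {args.model}")
```

Restricting the argument with `choices` makes `argparse` reject any other value with a usage message, so the rest of the program only has to handle the two known models.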

We trained the model on the MMI database and we got the following results:

| Log Loss | Accuracy |
|---|---|
| 0.2 | 0.97 |

We cannot properly measure the accuracy of the model because we do not have a separate test set. The model fits the training set very well, and in real time it seems to work well, but only for some emotions.
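One way to address the missing test set would be to hold out part of the data before training and compute the same two metrics on it. The sketch below uses only NumPy and shows the idea; it is not part of this repository, and the function names (`train_test_split_indices`, `log_loss`, `accuracy`), the 20% split, and the toy data are assumptions made for illustration.

```python
import numpy as np


def train_test_split_indices(n_samples, test_fraction=0.2, seed=0):
    """Return (train_idx, test_idx) as a random, non-overlapping split."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_samples)
    n_test = int(round(n_samples * test_fraction))
    return order[n_test:], order[:n_test]


def log_loss(y_true, probs, eps=1e-12):
    """Mean categorical cross-entropy; y_true holds integer class labels."""
    probs = np.clip(probs, eps, 1.0)  # avoid log(0)
    picked = probs[np.arange(len(y_true)), y_true]  # probability of the true class
    return float(-np.mean(np.log(picked)))


def accuracy(y_true, probs):
    """Fraction of samples whose most probable class is the true class."""
    return float(np.mean(np.argmax(probs, axis=1) == y_true))


# Toy example: 4 samples, 3 classes (made-up numbers, not project results).
y = np.array([0, 1, 2, 1])
p = np.array([
    [0.8, 0.1, 0.1],
    [0.2, 0.7, 0.1],
    [0.1, 0.2, 0.7],
    [0.6, 0.3, 0.1],
])
print(accuracy(y, p))  # 0.75
print(log_loss(y, p))

train_idx, test_idx = train_test_split_indices(n_samples=10)
print(len(train_idx), len(test_idx))  # 8 2
```

Because the MMI database consists of videos, frames of the same person are strongly correlated. A split by subject rather than by individual frame would probably give a more honest estimate than the purely random split shown here.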

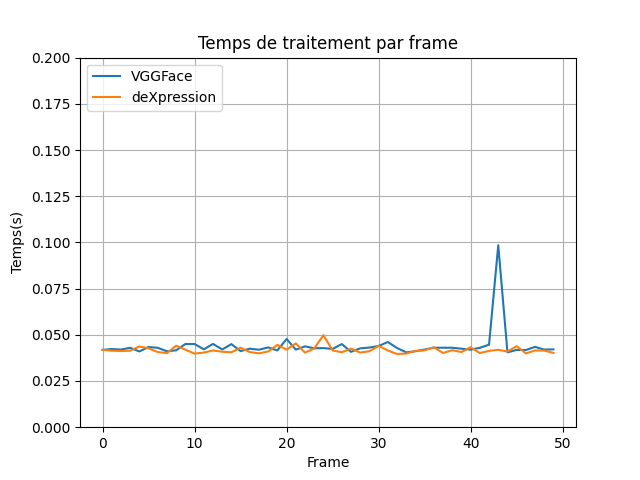

We also made a graph of the model's inference time: