TensorFlow implementation of some common GAN techniques, including losses, regularizations, and normalizations.

- Losses:
    - GAN
    - LSGAN
    - WGAN
    - Hinge
- Gradient Regularizations:
    - WGAN-GP
    - DRAGAN
- Weight Normalizations/Regularizations:
    - SpectralNorm
    - Weight Clipping
    - WeightNorm
    - Orthonormal Regularization
- Generator Regularizations:
- Normalizations:
    - BatchNorm, InstanceNorm, LayerNorm
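The four losses listed above can all be written as functions of the discriminator's raw outputs (logits) on a real batch and a fake batch. The pure-Python sketch below only illustrates the math; it is not this repository's TensorFlow code, and the function names are hypothetical. It assumes the common non-saturating generator loss for `gan` and targets 1 (real) / 0 (fake) without a 0.5 factor for `lsgan`.

```python
import math


def _mean(xs):
    xs = list(xs)
    return sum(xs) / float(len(xs))


def _softplus(x):
    # Numerically stable log(1 + exp(x)).
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def gan_losses(r, f):
    # Sigmoid cross-entropy (real -> 1, fake -> 0); non-saturating G loss (assumed).
    d = _mean(_softplus(-x) for x in r) + _mean(_softplus(x) for x in f)
    g = _mean(_softplus(-x) for x in f)
    return d, g


def lsgan_losses(r, f):
    # Least squares with targets 1 (real) and 0 (fake).
    d = _mean((x - 1.0) ** 2 for x in r) + _mean(x ** 2 for x in f)
    g = _mean((x - 1.0) ** 2 for x in f)
    return d, g


def wgan_losses(r, f):
    # The critic maximizes E[D(real)] - E[D(fake)]; no sigmoid is applied.
    d = _mean(f) - _mean(r)
    g = -_mean(f)
    return d, g


def hinge_losses(r, f):
    # D is penalized only when real logits < 1 or fake logits > -1.
    d = _mean(max(0.0, 1.0 - x) for x in r) + _mean(max(0.0, 1.0 + x) for x in f)
    g = -_mean(f)
    return d, g


LOSSES = {
    "gan": gan_losses,
    "lsgan": lsgan_losses,
    "wgan": wgan_losses,
    "hinge": hinge_losses,
}


def get_losses(loss_mode, real_logits, fake_logits):
    """Return (d_loss, g_loss) for one of the loss modes listed above."""
    return LOSSES[loss_mode](real_logits, fake_logits)
```

For example, `get_losses("hinge", [2.0, 4.0], [-1.0, 1.0])` gives a discriminator loss of 1.0, coming entirely from the fake logit 1.0. Note that the `wgan` loss is only meaningful when a Lipschitz constraint (weight clipping or a gradient penalty) is enforced separately.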


Prerequisites

- TensorFlow 1.8

- Python 2.7 or 3.6


Training

- Important arguments (see `train.py` for the others):
    - `--n_d`: number of discriminator (D) steps in each iteration (default: 1)
    - `--n_g`: number of generator (G) steps in each iteration (default: 1)
    - `--loss_mode`: GAN loss (choices: [gan, lsgan, wgan, hinge], default: gan)
    - `--gp_mode`: type of gradient penalty (choices: [none, dragan, wgan-gp], default: none)
    - `--norm`: normalization (choices: [batch_norm, instance_norm, layer_norm, none], default: batch_norm)
    - `--weights_norm`: weight normalization (choices: [none, spectral_norm, weight_clip], default: none)
    - `--vgan`: enable VGAN regularization (store_true flag)
    - `--model`: model (choices: [conv_mnist, conv_64], default: conv_mnist)
    - `--dataset`: dataset (choices: [mnist, celeba], default: mnist)
    - `--experiment_name`: name of the current experiment (default: default)

- Examples (see `examples.md` for more):
    - vgan: `CUDA_VISIBLE_DEVICES=0 python train.py --n_d 1 --n_g 1 --loss_mode gan --gp_mode none --norm batch_norm --vgan --model conv_64 --dataset celeba --experiment_name conv64_celeba_loss{gan}_gp{none}_norm{batch_norm}_wnorm{none}_vgan`
    - gan + dragan: `CUDA_VISIBLE_DEVICES=0 python train.py --n_d 1 --n_g 1 --loss_mode gan --gp_mode dragan --norm layer_norm --model conv_mnist --dataset mnist --experiment_name conv_mnist_loss{gan}_gp{dragan}_norm{layer_norm}_wnorm{none}`
    - hinge + spectral_norm: `CUDA_VISIBLE_DEVICES=0 python train.py --n_d 1 --n_g 1 --loss_mode hinge --gp_mode none --norm none --weights_norm spectral_norm --model conv_64 --dataset celeba --experiment_name conv64_celeba_loss{hinge}_gp{none}_norm{none}_wnorm{spectral_norm}`
    - hinge + dragan + instance_norm + spectral_norm: `CUDA_VISIBLE_DEVICES=0 python train.py --n_d 1 --n_g 1 --loss_mode hinge --gp_mode dragan --norm instance_norm --weights_norm spectral_norm --model conv_64 --dataset celeba --experiment_name conv64_celeba_loss{hinge}_gp{dragan}_norm{instance_norm}_wnorm{spectral_norm}`
    - wgan + wgan-gp: `CUDA_VISIBLE_DEVICES=0 python train.py --n_d 5 --n_g 1 --loss_mode wgan --gp_mode wgan-gp --norm instance_norm --model conv_64 --dataset celeba --experiment_name conv64_celeba_loss{wgan}_gp{wgan-gp}_norm{instance_norm}_wnorm{none}`
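The two non-default `--weights_norm` choices used in these examples, `spectral_norm` and `weight_clip`, both constrain the discriminator's weights. The pure-Python sketch below shows the underlying math on a plain 2-D weight matrix; it is not the repository's implementation, and the function names, the fixed seed, and the iteration count are assumptions. Spectral normalization as described in the SN-GAN paper reshapes a conv kernel to 2-D, keeps the power-iteration vector `u` as a persistent variable, and runs one iteration per training step; here several iterations start from scratch so the estimate converges on its own. The clip value 0.01 is the default from the WGAN paper.

```python
import math
import random


def _matvec(W, x):
    # W @ x, with W given as a list of rows.
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def _rmatvec(W, y):
    # W^T @ y.
    return [sum(W[i][j] * y[i] for i in range(len(W))) for j in range(len(W[0]))]


def _normalize(x, eps=1e-12):
    n = math.sqrt(sum(v * v for v in x))
    return [v / (n + eps) for v in x]


def spectral_normalize(W, n_iters=20, seed=0):
    """Return (sigma, W / sigma), where sigma estimates W's largest singular value."""
    if n_iters < 1:
        raise ValueError("n_iters must be >= 1")
    rng = random.Random(seed)
    u = _normalize([rng.gauss(0.0, 1.0) for _ in range(len(W))])
    for _ in range(n_iters):
        # Power iteration: alternate between right (v) and left (u) singular vectors.
        v = _normalize(_rmatvec(W, u))
        u = _normalize(_matvec(W, v))
    sigma = sum(ui * wvi for ui, wvi in zip(u, _matvec(W, v)))
    return sigma, [[w / sigma for w in row] for row in W]


def weight_clip(W, c=0.01):
    """Clip every weight into [-c, c], the WGAN-style Lipschitz constraint."""
    return [[min(max(w, -c), c) for w in row] for row in W]
```

After `spectral_normalize`, the largest singular value of the returned matrix is approximately 1, which bounds the layer's Lipschitz constant; weight clipping enforces a much cruder bound by limiting each entry independently.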


- CelebA must be prepared manually, with the images placed at `./data/celeba/img_align_celeba/*.jpg`
    - Download the dataset: https://www.dropbox.com/sh/8oqt9vytwxb3s4r/AAB06FXaQRUNtjW9ntaoPGvCa?dl=0
        - The link above might be inaccessible; the alternatives are:
- MNIST will be downloaded automatically
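Before training on CelebA, it can help to confirm that the images are where the list above expects them. A small standard-library sketch; `find_celeba_images` and `check_celeba` are hypothetical helpers, not part of `train.py`, and the default directory is the path given above. It avoids f-strings so it also runs under Python 2.7.

```python
import glob
import os

# Directory layout stated in this README.
CELEBA_DIR = "./data/celeba/img_align_celeba"


def find_celeba_images(root=CELEBA_DIR):
    """Return the sorted list of .jpg files directly inside `root` (empty if none)."""
    return sorted(glob.glob(os.path.join(root, "*.jpg")))


def check_celeba(root=CELEBA_DIR):
    """Raise a readable error if no CelebA images are found; otherwise return the count."""
    paths = find_celeba_images(root)
    if not paths:
        raise IOError("No .jpg files found in {}; download and extract CelebA first.".format(root))
    return len(paths)
```

Calling `check_celeba()` from the repository root either returns the number of images found or fails with a message naming the expected directory.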

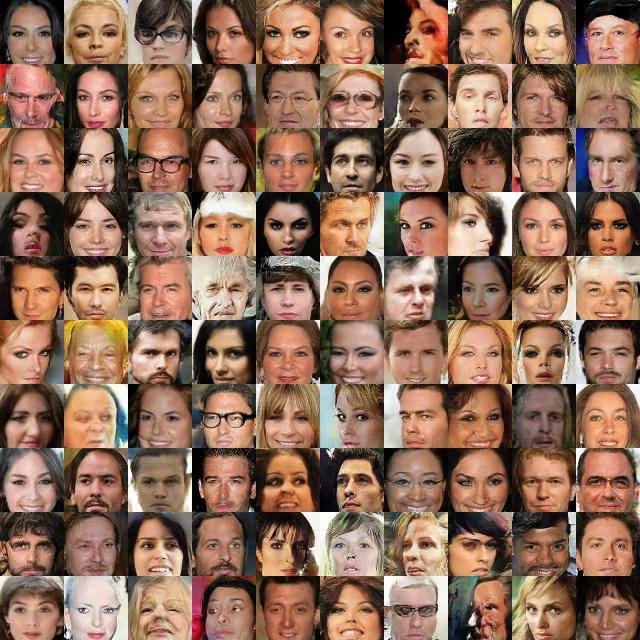

- Hinge + DRAGAN + InstanceNorm + SpectralNorm