Welcome to the official repository for the NeurIPS 2023 paper, "Chatting Makes Perfect: Chat-based Image Retrieval"!

Most image retrieval methods rely on a single caption. ChatIR takes this a step further: it chats with the user to pinpoint the intended image.

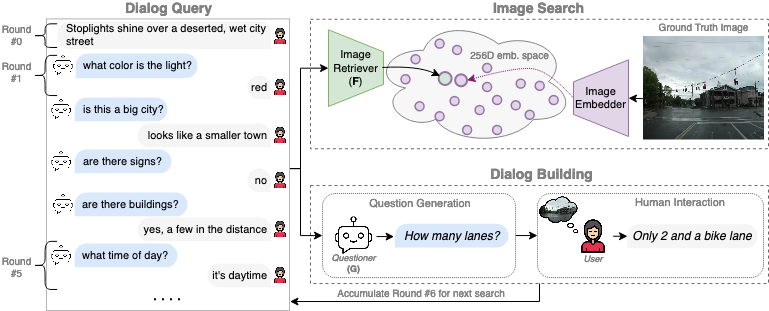

Chats emerge as an effective user-friendly approach for information retrieval, and are successfully employed in many domains, such as customer service, healthcare, and finance. However, existing image retrieval approaches typically address the case of a single query-to-image round, and the use of chats for image retrieval has been mostly overlooked. In this work, we introduce ChatIR: a chat-based image retrieval system that engages in a conversation with the user to elicit information, in addition to an initial query, in order to clarify the user's search intent. Motivated by the capabilities of today's foundation models, we leverage Large Language Models to generate follow-up questions to an initial image description. These questions form a dialog with the user in order to retrieve the desired image from a large corpus. In this study, we explore the capabilities of such a system tested on a large dataset and reveal that engaging in a dialog yields significant gains in image retrieval. We start by building an evaluation pipeline from an existing manually generated dataset and explore different modules and training strategies for ChatIR. Our comparison includes strong baselines derived from related applications trained with Reinforcement Learning. Our system is capable of retrieving the target image from a pool of 50K images with over 78% success rate after 5 dialogue rounds, compared to 75% when questions are asked by humans, and 64% for a single shot text-to-image retrieval. Extensive evaluations reveal the strong capabilities and examine the limitations of ChatIR under different settings.
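The dialogue loop described above can be sketched as follows. This is a minimal illustration, not the repository's implementation: `ask`, `answer`, and `rank_of_target` are hypothetical callables standing in for the question generator (an LLM), the answerer (the user or a VQA model such as BLIP2), and the Image Retriever. Joining the rounds with `", "` is also our assumption about how the dialogue is flattened into a single text query.

```python
from typing import Callable, List, Sequence, Tuple

QA = Tuple[str, str]  # one (question, answer) round


def dialogue_to_text(caption: str, qa: Sequence[QA]) -> str:
    """Flatten the caption and Q&A rounds into one query (the ", " separator is an assumption)."""
    return ", ".join([caption] + [f"{q} {a}" for q, a in qa])


def chat_retrieve(
    caption: str,
    ask: Callable[[str, List[QA]], str],  # hypothetical questioner, e.g. an LLM prompt
    answer: Callable[[str], str],  # hypothetical answerer: the user or a VQA model
    rank_of_target: Callable[[str], int],  # hypothetical retriever: 1-based rank of the target image
    rounds: int = 10,
) -> List[int]:
    """Return the target's rank after each round; index 0 is the caption-only query."""
    qa: List[QA] = []
    ranks = [rank_of_target(dialogue_to_text(caption, qa))]
    for _ in range(rounds):
        question = ask(caption, qa)  # generate a follow-up question from the dialogue so far
        qa.append((question, answer(question)))
        ranks.append(rank_of_target(dialogue_to_text(caption, qa)))
    return ranks


# Toy stubs: longer queries get a better (smaller) rank, just to exercise the loop.
demo_ranks = chat_retrieve(
    "a dog on a beach",
    ask=lambda cap, history: f"question {len(history) + 1}?",
    answer=lambda q: "yes",
    rank_of_target=lambda text: max(1, 20 - len(text.split())),
    rounds=3,
)
print(demo_ranks)  # [15, 12, 9, 6]
```

Keeping the retriever behind a single text-to-rank callable means the same loop can be driven by human, BLIP2, or LLM answers without changes.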

Want to test your model on the ChatIR task? Use the script below:

```bash
python eval.py
```

You can run this script with two different baselines (by changing the `baseline` variable):

1. CLIP (zero-shot). Remember:
   - CLIP was trained on captions, not dialogues, so this is a zero-shot evaluation.
   - Dialogues longer than 77 tokens are truncated to fit CLIP's text context length, which can reduce retrieval accuracy.
2. BLIP fine-tuned on dialogues: the Image Retriever (F) that was fine-tuned on dialogues, as described in the paper. To run this baseline, install the BLIP repository as a subfolder and download the model weights (see below).
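To see how the 77-token limit affects long dialogues, the sketch below flattens a caption plus 10 Q&A rounds and keeps only what fits. It splits on whitespace as a stand-in for CLIP's BPE tokenizer, so real token counts will differ (BPE typically yields more tokens than words); in the OpenAI `clip` package, `clip.tokenize(text, context_length=77, truncate=True)` performs the actual tokenization and truncation. The dialogue content and the `", "` separator are illustrative assumptions.

```python
from typing import List, Sequence, Tuple

CONTEXT_LENGTH = 77  # CLIP's text context length, including start and end tokens


def flatten_dialogue(caption: str, qa: Sequence[Tuple[str, str]]) -> str:
    """Join the caption and Q&A rounds into one string (separator is an assumption)."""
    return ", ".join([caption] + [f"{q} {a}" for q, a in qa])


def clip_style_truncate(text: str, context_length: int = CONTEXT_LENGTH) -> str:
    """Keep the tokens that fit; two slots are reserved for the start and end tokens."""
    tokens: List[str] = text.split()  # whitespace split: a stand-in for CLIP's BPE tokenizer
    return " ".join(tokens[: context_length - 2])


caption = "a man riding a wave on top of a surfboard"  # 10 tokens
qa = [(f"question {i} about the scene?", f"answer {i} is here") for i in range(1, 11)]  # 9 tokens per round
kept = clip_style_truncate(flatten_dialogue(caption, qa))
print(len(flatten_dialogue(caption, qa).split()), len(kept.split()))  # 100 75
print(kept.endswith("question 8"), "answer 8" in kept)  # True False: round 8 is cut mid-question, rounds 9-10 are dropped
```

Because truncation keeps the beginning of the text, the caption and early rounds always survive while the latest answers, often the most discriminative ones, are the first to be lost.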

Here you can download the weights of the BLIP model (referred to as the Image Retriever) that was fine-tuned on ChatIR. This is a simple BLIP text encoder that we further trained on dialogues from the Visual Dialog (VisDial) dataset.

In this section, we provide dialogues based on the VisDial1.0 validation images. Each dialogue consists of 11 rounds: the image's caption followed by 10 rounds of Q&A. The images are taken from COCO. For more details, please refer to the VisDial data page.

| Questioner | Answerer | File (.json) | Notes |
|---|---|---|---|
| Human | Human | Download | This is the VisDial1.0 val. set. |
| Human | BLIP2 | Download | Uses VisDial1.0 val. questions. |
| ChatGPT | BLIP2 | Download | |
| ChatGPT (Unanswered) | BLIP2 | Download | Question generation with no answers (see paper). |
| Flan-Alpaca-XXL | BLIP2 | Download | |
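A minimal sketch for turning one of these files into per-round text queries. The JSON schema here is an assumption: we take each file to be a list of entries, each holding an image path under `"img"` and a list `"dialog"` whose first element is the caption and whose remaining elements are the 10 Q&A turns. Check the downloaded files and adjust the keys if they differ.

```python
import json
from typing import Dict, List


def load_dialogues(path: str) -> List[Dict]:
    """Load a dialogue file; assumed to be a JSON list of {"img": ..., "dialog": [...]} entries."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def query_at_round(entry: Dict, round_idx: int) -> str:
    """Caption plus the first `round_idx` Q&A turns (round 0 = caption only)."""
    turns: List[str] = entry["dialog"]  # assumed layout: [caption, turn_1, ..., turn_10]
    if not 0 <= round_idx < len(turns):
        raise ValueError(f"round_idx must be in [0, {len(turns) - 1}], got {round_idx}")
    return ", ".join(turns[: round_idx + 1])


# Toy entry in the assumed format (not taken from the real files).
entry = {
    "img": "example.jpg",
    "dialog": ["two cats on a sofa", "what color are they? grey", "is it daytime? yes"],
}
print(query_at_round(entry, 0))  # two cats on a sofa
print(query_at_round(entry, 2))  # two cats on a sofa, what color are they? grey, is it daytime? yes
```

If the assumed layout holds, each real entry has 11 turns, so `round_idx` runs from 0 (caption only) to 10 (full dialogue).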

For detailed results, plots, and analysis, refer to the results/ directory.
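A success rate like the ones quoted above (e.g. 78% after 5 rounds) can be computed from the rank of the target image after each round. The sketch below assumes a search counts as successful when the target appears in the top `k` results; `k=10` is an illustrative default, so see the paper for the exact protocol. The `cumulative` flag is a hypothetical option that counts a dialogue as solved from the first round at which the target reaches the top `k`.

```python
from typing import List, Sequence


def success_rate_per_round(
    ranks: Sequence[Sequence[int]], k: int = 10, cumulative: bool = False
) -> List[float]:
    """Fraction of dialogues whose target is in the top-k after each round.

    ranks[i][r] is the 1-based rank of dialogue i's target image after round r
    (r = 0 is the caption-only query). With cumulative=True, a dialogue counts as a
    success at round r if the target reached the top-k at any round <= r.
    """
    n_dialogues = len(ranks)
    n_rounds = len(ranks[0])
    rates = []
    for r in range(n_rounds):
        if cumulative:
            hits = sum(any(x <= k for x in row[: r + 1]) for row in ranks)
        else:
            hits = sum(row[r] <= k for row in ranks)
        rates.append(hits / n_dialogues)
    return rates


# Toy ranks for 4 dialogues over 3 rounds (illustrative numbers, not results).
toy_ranks = [[12, 5, 11], [30, 15, 8], [3, 2, 2], [50, 40, 11]]
print(success_rate_per_round(toy_ranks))                   # [0.25, 0.5, 0.5]
print(success_rate_per_round(toy_ranks, cumulative=True))  # [0.25, 0.5, 0.75]
```

The two modes differ only when a target enters the top `k` and later drops out, as in the first toy dialogue.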

If you find our work useful in your research, please consider citing:

```bibtex
@inproceedings{levy2023chatting,
  title={Chatting Makes Perfect: Chat-based Image Retrieval},
  author={Matan Levy and Rami Ben-Ari and Nir Darshan and Dani Lischinski},
  booktitle={Thirty-seventh Conference on Neural Information Processing Systems},
  year={2023}
}
```

This project is licensed under the MIT License - see the LICENSE.md file for details.