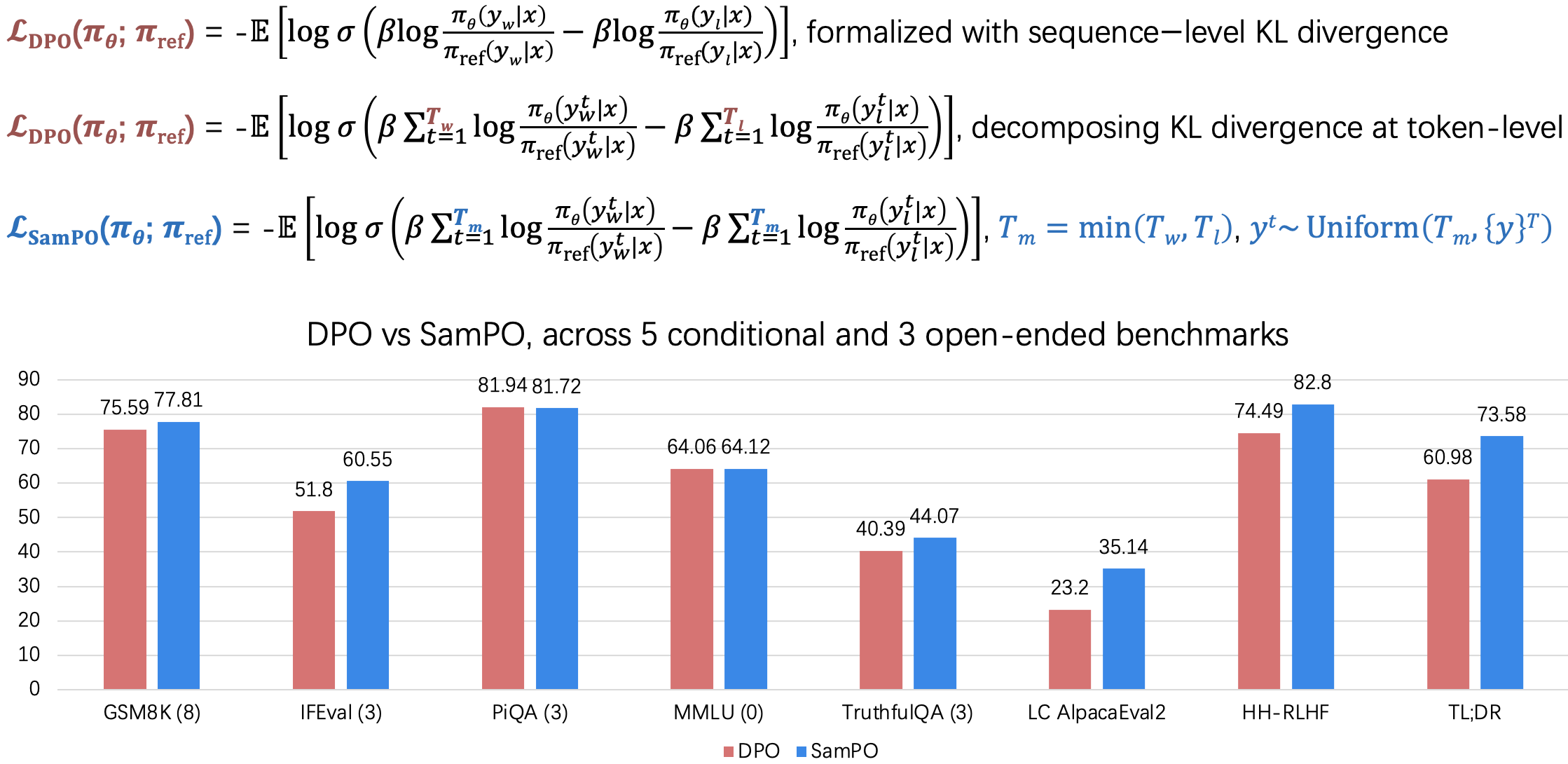

We provide code and models for SamPO in this repository. Please refer to our paper for details: *Eliminating Biased Length Reliance of Direct Preference Optimization via Down-Sampled KL Divergence*. In short, we argue that in DPO the sequence-level KL divergences of the chosen and rejected sequences are computed over different numbers of tokens. This length discrepancy leads to overestimated or underestimated rewards and, in turn, to the verbosity issue. We then introduce an effective down-sampling regularization approach, named SamPO.
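As a reading aid, the standard DPO objective and a down-sampled variant can be written as below. This is a sketch: the index sets $\mathcal{S}_w$, $\mathcal{S}_l$ and the length $T_m$ are our own notation, and we assume SamPO samples $T_m = \min(T_w, T_l)$ token positions from each sequence; see the paper for the exact formulation.

$$
\mathcal{L}_{\text{DPO}} = -\mathbb{E}\left[\log\sigma\left(\beta\sum_{t=1}^{T_w}\log\frac{\pi_\theta(y_w^t\mid x, y_w^{<t})}{\pi_{\text{ref}}(y_w^t\mid x, y_w^{<t})} - \beta\sum_{t=1}^{T_l}\log\frac{\pi_\theta(y_l^t\mid x, y_l^{<t})}{\pi_{\text{ref}}(y_l^t\mid x, y_l^{<t})}\right)\right]
$$

$$
\mathcal{L}_{\text{SamPO}} = -\mathbb{E}\left[\log\sigma\left(\beta\sum_{t\in\mathcal{S}_w}\log\frac{\pi_\theta(y_w^t\mid x, y_w^{<t})}{\pi_{\text{ref}}(y_w^t\mid x, y_w^{<t})} - \beta\sum_{t\in\mathcal{S}_l}\log\frac{\pi_\theta(y_l^t\mid x, y_l^{<t})}{\pi_{\text{ref}}(y_l^t\mid x, y_l^{<t})}\right)\right],\quad |\mathcal{S}_w| = |\mathcal{S}_l| = T_m = \min(T_w, T_l)
$$

Both reward terms are then sums over the same number of tokens, so a longer response no longer receives a larger-magnitude reward simply because it contributes more terms.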

We provide a `requirements.txt` for your convenience.

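A quick check of the key difference from DPO: SamPO computes the sequence-level rewards of the chosen and rejected responses over the same number of randomly down-sampled tokens.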
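To make the key difference concrete, here is a minimal pure-Python sketch for a single preference pair. It is not the repository's implementation. We assume the inputs are per-token log-ratios $\log(\pi_\theta/\pi_{\text{ref}})$ that have already been computed, and that tokens are sampled uniformly without replacement. The function names `dpo_loss` and `sampo_loss` are hypothetical.

```python
import math
import random
from typing import Optional, Sequence


def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def dpo_loss(chosen: Sequence[float], rejected: Sequence[float], beta: float = 0.1) -> float:
    """Standard DPO loss: sums per-token log-ratios over every token of each response."""
    margin = beta * (sum(chosen) - sum(rejected))
    return -log_sigmoid(margin)


def sampo_loss(
    chosen: Sequence[float],
    rejected: Sequence[float],
    beta: float = 0.1,
    rng: Optional[random.Random] = None,
) -> float:
    """Down-sampled variant (hypothetical sketch): both rewards use min(T_w, T_l) tokens.

    Assumption: positions are drawn uniformly without replacement, independently
    for the chosen and the rejected response.
    """
    rng = rng or random.Random()
    t_m = min(len(chosen), len(rejected))
    chosen_sampled = rng.sample(list(chosen), t_m)
    rejected_sampled = rng.sample(list(rejected), t_m)
    margin = beta * (sum(chosen_sampled) - sum(rejected_sampled))
    return -log_sigmoid(margin)


if __name__ == "__main__":
    # A long chosen response (10 tokens) versus a short rejected one (2 tokens).
    chosen = [0.2] * 10
    rejected = [-0.1] * 2
    print("DPO  :", dpo_loss(chosen, rejected))
    print("SamPO:", sampo_loss(chosen, rejected, rng=random.Random(0)))
```

In this toy pair, DPO's margin (0.22) is inflated by the extra eight chosen tokens. The down-sampled margin (0.06) compares the two responses on equal footing, so the loss is higher. When both responses have the same length, every token is kept and the two losses coincide.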

Run `bash tasks.sh` to run DPO and all of its variants, including our SamPO.

For the five conditional benchmarks, we use lm-evaluation-harness:

- GSM8K: 8-shot, report strict match

- IFEval: 3-shot, report instruction-level strict accuracy

- PiQA: 3-shot, report accuracy

- MMLU: 0-shot, report normalized accuracy

- TruthfulQA: 3-shot, report accuracy in the single-true (MC1) setting
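The shot settings above can be kept in one place and turned into `lm_eval` command lines, as in the sketch below. The task names (`gsm8k`, `ifeval`, `piqa`, `mmlu`, `truthfulqa_mc1`) and the batch size are assumptions based on lm-evaluation-harness v0.4 and may differ from the exact setup we used. `BENCHMARKS` and `build_command` are hypothetical helpers.

```python
import shlex

# Hypothetical mapping: benchmark -> (lm-evaluation-harness task name, num_fewshot).
# Task names are assumptions based on lm-evaluation-harness v0.4.
BENCHMARKS = {
    "GSM8K": ("gsm8k", 8),
    "IFEval": ("ifeval", 3),
    "PiQA": ("piqa", 3),
    "MMLU": ("mmlu", 0),
    "TruthfulQA": ("truthfulqa_mc1", 3),
}


def build_command(model_path: str, benchmark: str, batch_size: int = 8) -> list[str]:
    """Build an lm_eval command line for a Hugging Face checkpoint (sketch only)."""
    task, shots = BENCHMARKS[benchmark]
    return [
        "lm_eval",
        "--model", "hf",
        "--model_args", f"pretrained={model_path}",
        "--tasks", task,
        "--num_fewshot", str(shots),
        "--batch_size", str(batch_size),
    ]


if __name__ == "__main__":
    # Print one shell command per benchmark; "path/to/checkpoint" is a placeholder.
    for name in BENCHMARKS:
        print(shlex.join(build_command("path/to/checkpoint", name)))
```

Keeping the shot counts in a single table makes it harder for runs across checkpoints to silently use different few-shot settings.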

For AlpacaEval2, we use the official alpaca_eval:

- AlpacaEval2: win rate (%)

- LC AlpacaEval2: length-controlled (length-debiased) win rate (%) of AlpacaEval2

For HH-RLHF & TL;DR, we use the same GPT-4 win-rate prompt template proposed in the DPO paper:

- Win rate (%): the win rate of the fine-tuned model against the SFT base model
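The sketch below shows how per-prompt judgments can be aggregated into a win rate percentage. It assumes each judgment is recorded as `"win"`, `"tie"`, or `"loss"` from the fine-tuned model's perspective, and that a tie counts as half a win. Both conventions are our assumptions, and the `win_rate` helper is hypothetical.

```python
from collections import Counter
from typing import Iterable

VALID = {"win", "tie", "loss"}


def win_rate(judgments: Iterable[str]) -> float:
    """Win rate (%) of the fine-tuned model over the SFT baseline.

    Each judgment is "win", "tie", or "loss" for the fine-tuned model.
    Assumption: a tie counts as half a win.
    """
    counts = Counter(judgments)
    unknown = set(counts) - VALID
    if unknown:
        raise ValueError(f"unexpected judgment labels: {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no judgments given")
    return 100.0 * (counts["win"] + 0.5 * counts["tie"]) / total


if __name__ == "__main__":
    print(win_rate(["win", "win", "loss", "tie"]))  # 62.5
```

With ties counted as half a win, a model that is indistinguishable from the SFT baseline scores about 50%.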

| Name | Share Link |
|---|---|
| Pythia-2.8B-HH-RLHF-Iterative-SamPO | HF Link |
| Pythia-2.8B-TLDR-Iterative-SamPO | HF Link |
| Llama-3-8B-Instruct-Iterative-SamPO | HF Link |

Note: the test sets of HH-RLHF and TL;DR are also released at the links above.

This code is built upon the TRL repository.

@article{LUandLI2024SamPO,
  title={Eliminating Biased Length Reliance of Direct Preference Optimization via Down-Sampled KL Divergence},
  author={Lu, Junru and Li, Jiazheng and An, Siyu and Zhao, Meng and He, Yulan and Yin, Di and Sun, Xing},
  journal={arXiv preprint arXiv:2406.10957},
  year={2024}
}