Abstract

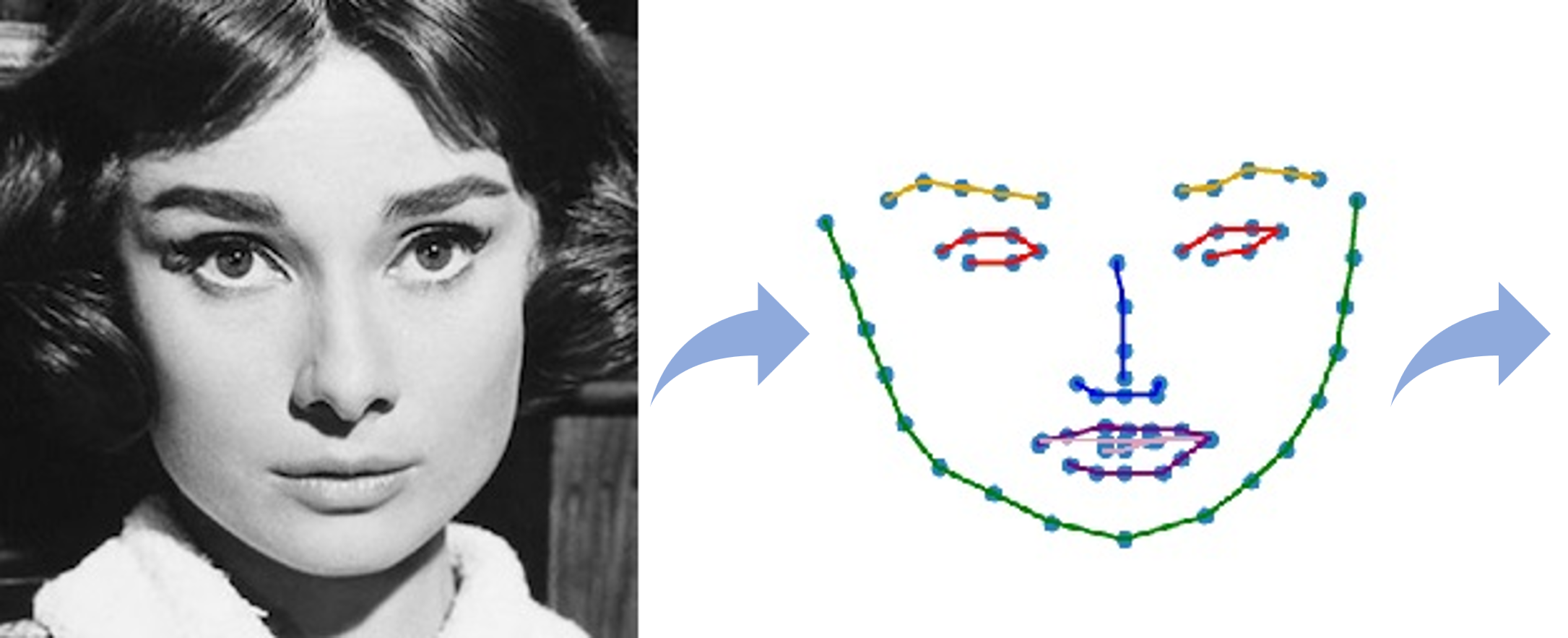

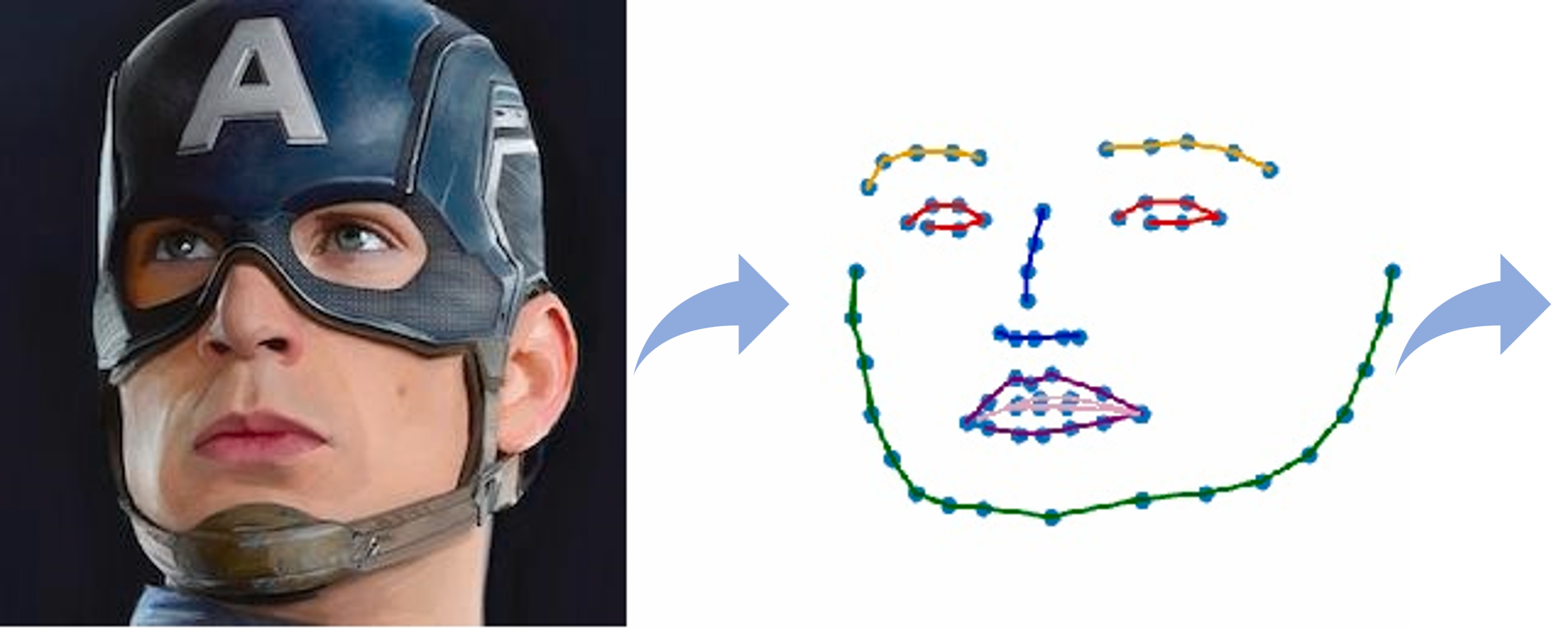

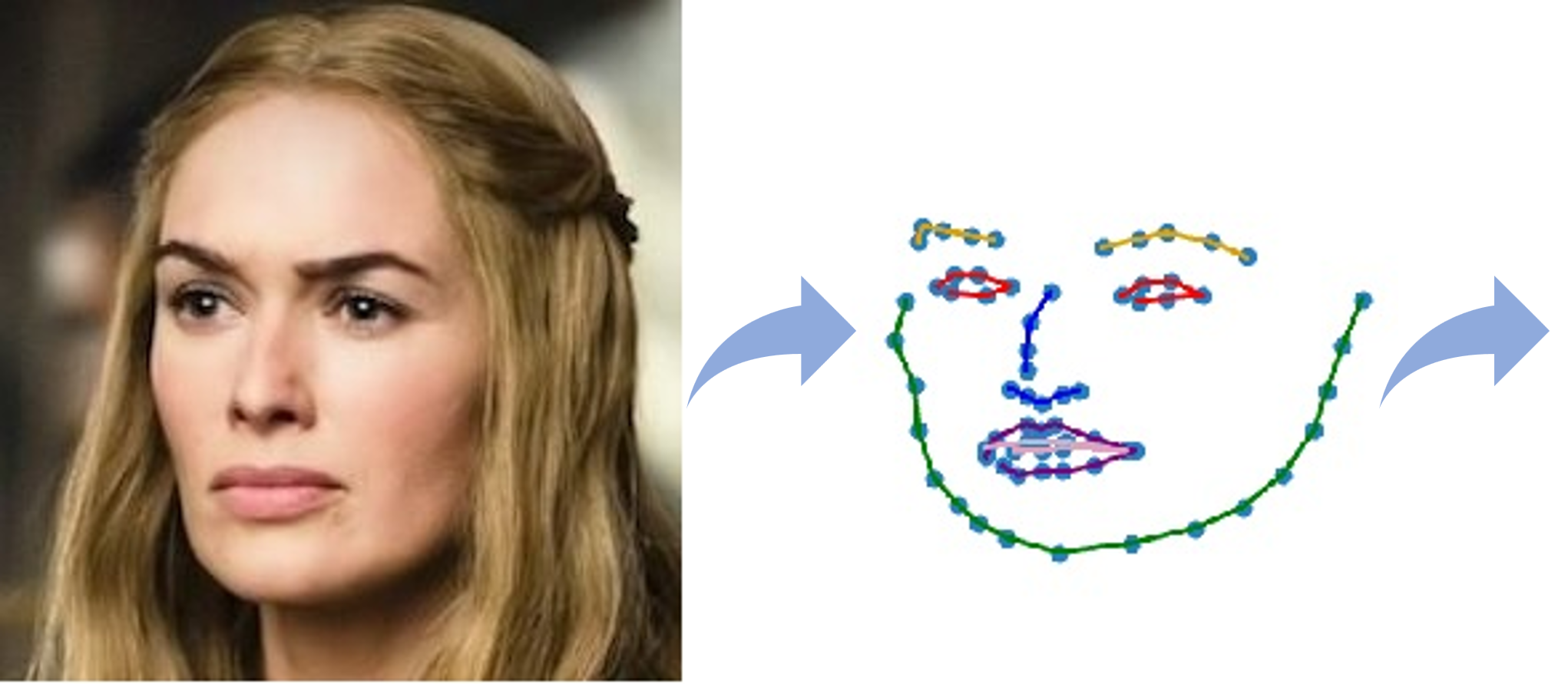

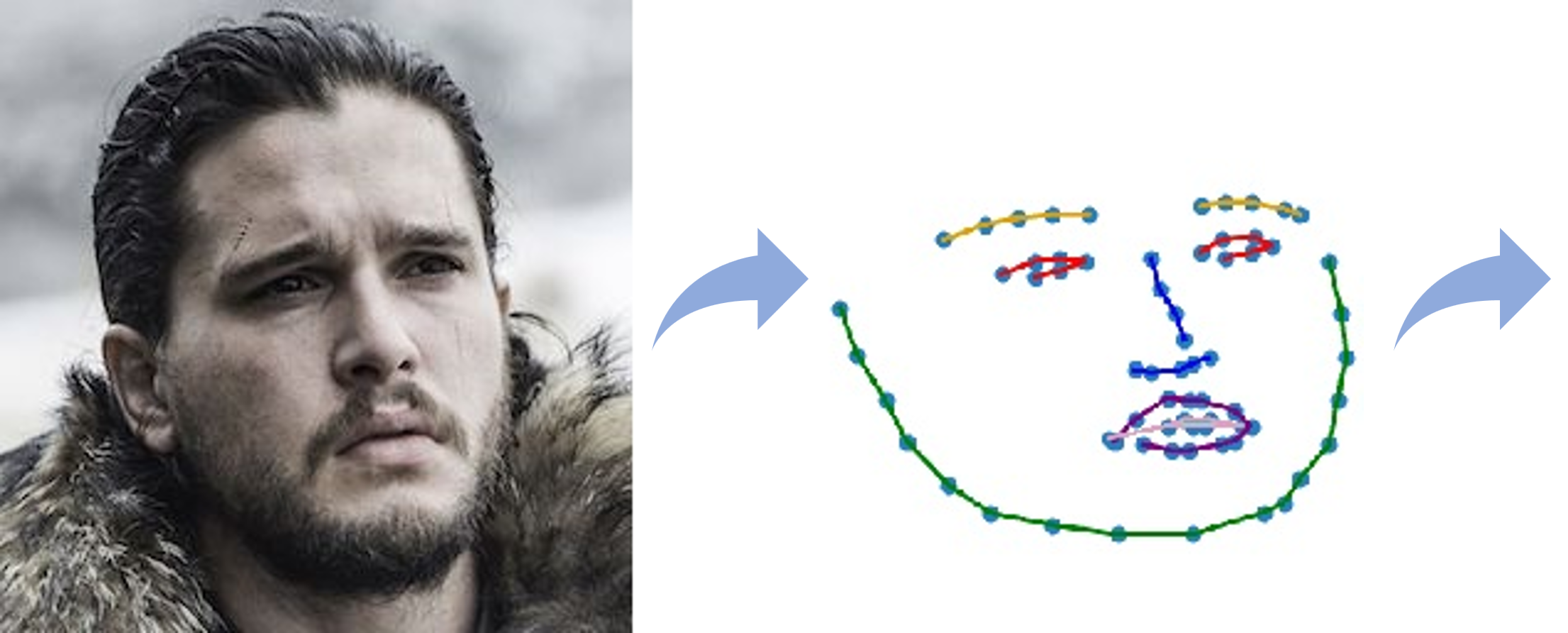

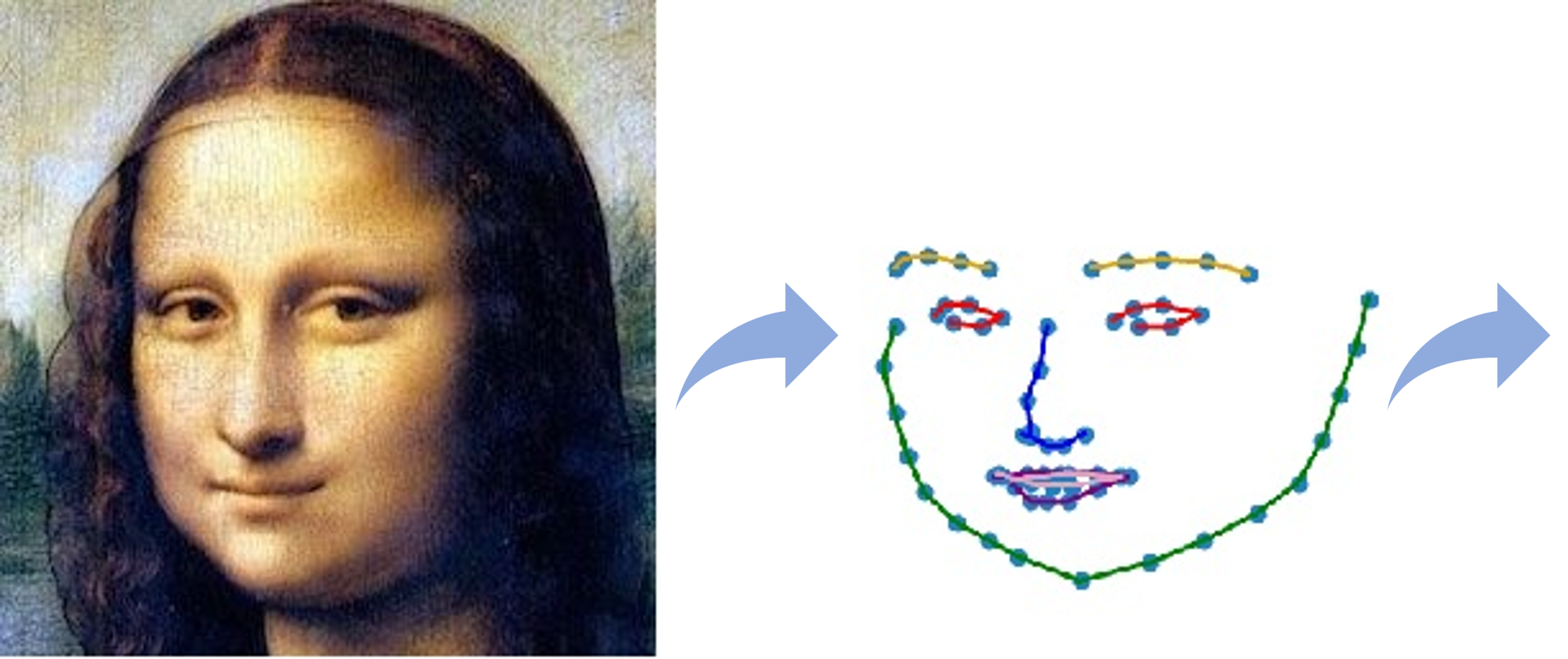

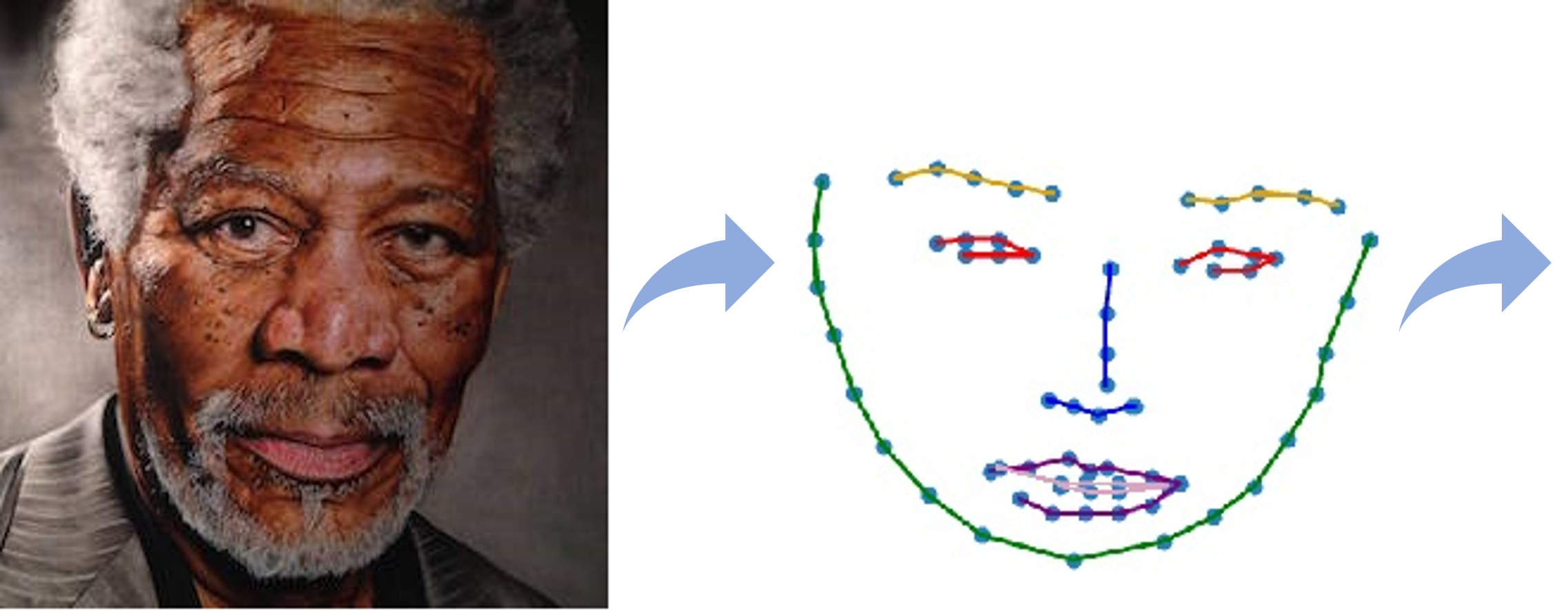

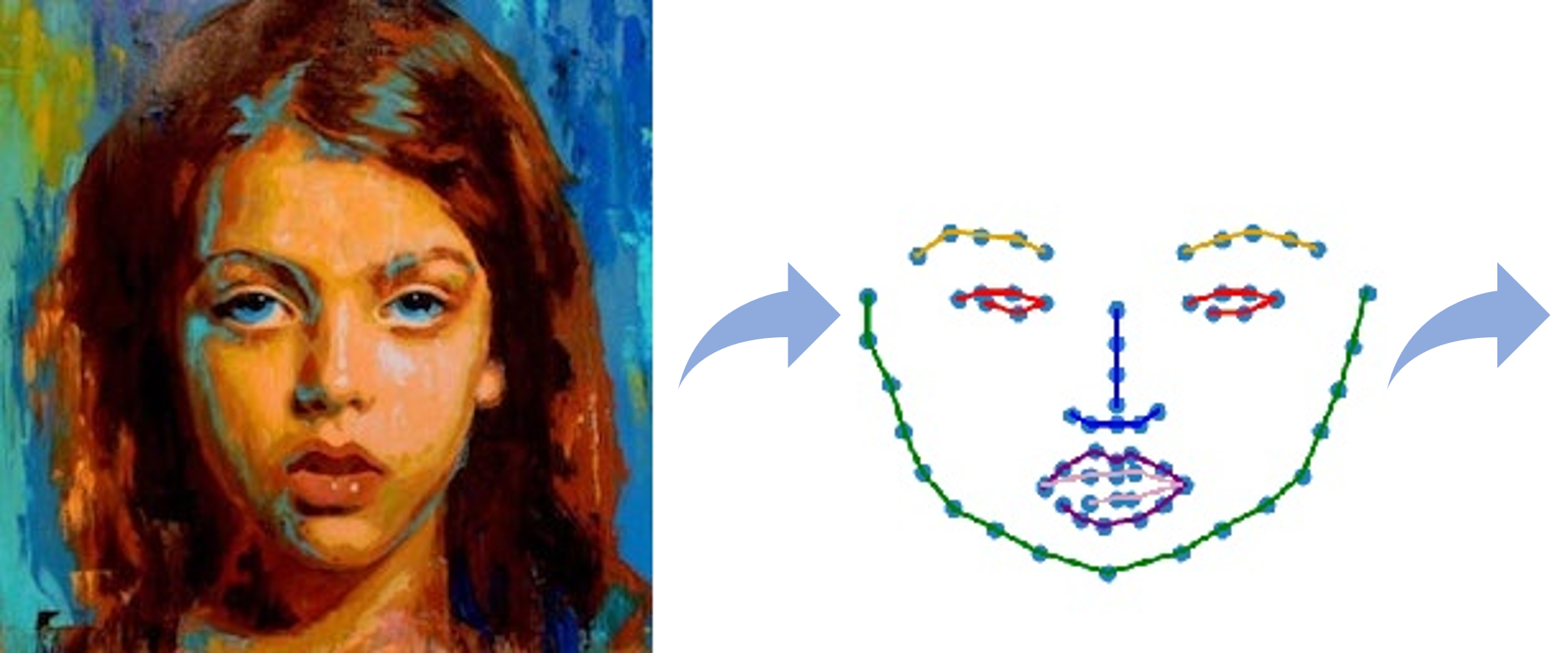

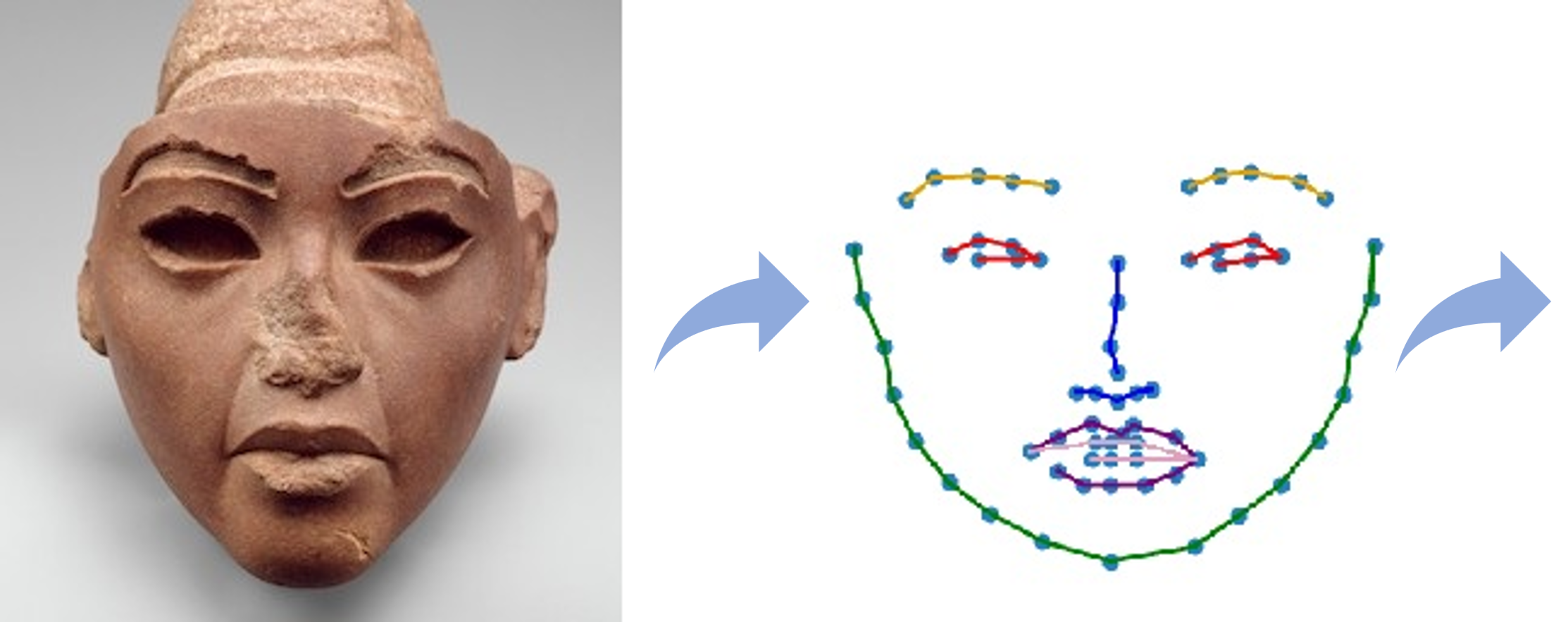

We address the task of unconditional head motion generation to animate still human faces in a low-dimensional semantic space. Unlike audio-conditioned talking head generation, which seldom emphasizes realistic head motion, we devise a GAN-based architecture that produces rich head motion sequences while avoiding known caveats of GANs. Specifically, the autoregressive generation of incremental outputs ensures smooth trajectories, while a multi-scale discriminator on input pairs drives the generator toward better handling of both high- and low-frequency signals and reduces mode collapse. We experimentally demonstrate the relevance of the proposed architecture and compare it with models that achieved state-of-the-art performance on similar tasks.
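The abstract does not spell out what the discriminator's "input pairs" are. The Python sketch below illustrates one plausible reading, which is an assumption and not necessarily the paper's design: each temporal scale pairs a frame with the frame a fixed stride later, and a per-scale critic scores those pairs, so short strides expose high-frequency motion and long strides expose low-frequency drift. The names `pairs_at_stride`, `multiscale_score` and `displacement`, the strides (1, 2, 4), and the flat-vector frame representation are all hypothetical; in a GAN the critics would be learned networks.

```python
from statistics import mean
from typing import Callable, Dict, List, Sequence, Tuple

# Assumption: a frame is a flat vector in a low-dimensional semantic space,
# for instance flattened 2D facial landmarks.
Frame = Tuple[float, ...]
Pair = Tuple[Frame, Frame]
Critic = Callable[[Frame, Frame], float]


def pairs_at_stride(seq: Sequence[Frame], stride: int) -> List[Pair]:
    """Pair each frame with the frame `stride` steps later.

    Small strides expose fast (high-frequency) changes; large strides expose
    slow (low-frequency) drifts of the head.
    """
    if stride < 1 or stride >= len(seq):
        raise ValueError("stride must be in [1, len(seq) - 1]")
    return [(seq[t], seq[t + stride]) for t in range(len(seq) - stride)]


def multiscale_score(seq: Sequence[Frame], critics: Dict[int, Critic]) -> float:
    """Average per-scale critic scores; `critics` maps a stride to a pair scorer.

    Hypothetical: in a GAN each critic would be a small learned network,
    here any plain callable works.
    """
    per_scale = [
        mean(critic(a, b) for a, b in pairs_at_stride(seq, stride))
        for stride, critic in critics.items()
    ]
    return mean(per_scale)


def displacement(a: Frame, b: Frame) -> float:
    """Toy critic: L1 distance between two frames."""
    return sum(abs(x - y) for x, y in zip(a, b))


if __name__ == "__main__":
    # A head drifting at constant speed along one coordinate.
    seq = [(float(t), 0.0) for t in range(8)]
    score = multiscale_score(seq, {s: displacement for s in (1, 2, 4)})
    print(round(score, 3))  # prints 2.333
```

With this toy critic, the three scales score 1, 2 and 4 for a constant-speed drift, showing how each stride sees the same motion at a different temporal resolution.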

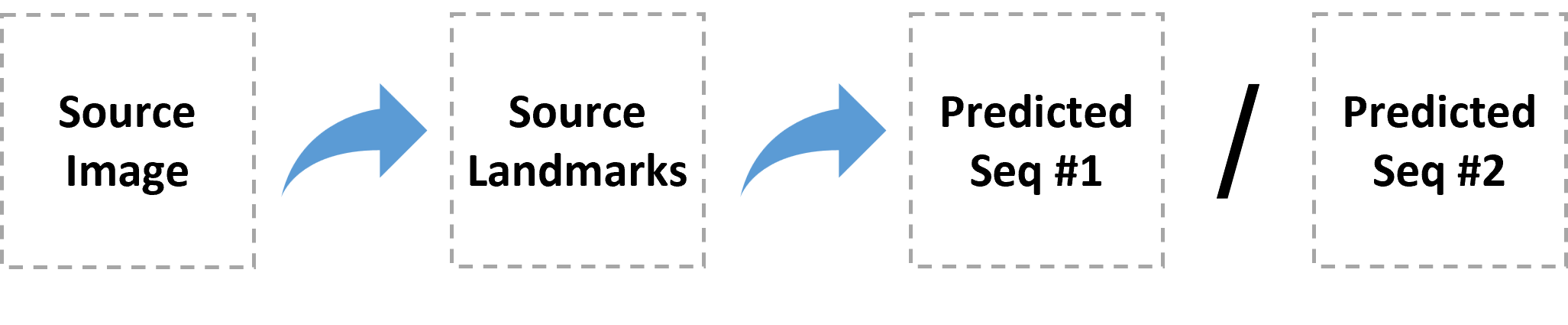

In the results presented below, 120 frames are generated from a single reference image.
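The abstract attributes smooth trajectories to the autoregressive generation of incremental outputs. The Python sketch below illustrates that principle for 120 frames starting from a single reference frame. It is not the SUHMo generator: `toy_step`, `rollout`, the momentum (0.9) and noise (0.05) coefficients, and the 136-dimensional frame (assumed here to be 68 landmarks × 2 coordinates) are hypothetical stand-ins for the learned LSTM or Transformer.

```python
import random
from typing import Callable, List

# Assumption: a frame is a flat vector in a low-dimensional semantic space.
Frame = List[float]
# A step maps (previous frame, previous increment, noise) to a new increment.
StepFn = Callable[[Frame, Frame, Frame], Frame]


def toy_step(prev: Frame, prev_delta: Frame, noise: Frame) -> Frame:
    """Hypothetical stand-in for the learned generator (LSTM or Transformer).

    Keeps most of the previous increment and adds a small noise-driven change,
    so consecutive increments stay close and the motion varies slowly.
    This toy version ignores `prev`; a learned model would condition on it.
    """
    return [0.9 * d + 0.05 * z for d, z in zip(prev_delta, noise)]


def rollout(reference: Frame, n_frames: int, step: StepFn, seed: int) -> List[Frame]:
    """Autoregressively generate `n_frames` frames, the first being `reference`.

    Each step predicts an increment that is added to the last frame, so the
    trajectory is a running sum of small changes rather than a series of
    independently sampled poses.
    """
    if n_frames < 1:
        raise ValueError("n_frames must be at least 1")
    rng = random.Random(seed)
    frames = [list(reference)]
    delta = [0.0] * len(reference)
    for _ in range(n_frames - 1):
        noise = [rng.gauss(0.0, 1.0) for _ in reference]
        delta = step(frames[-1], delta, noise)
        frames.append([x + d for x, d in zip(frames[-1], delta)])
    return frames


if __name__ == "__main__":
    reference = [0.0] * 136  # e.g. 68 landmarks x 2 coordinates, flattened
    a = rollout(reference, 120, toy_step, seed=0)
    b = rollout(reference, 120, toy_step, seed=1)
    print(len(a), a[0] == b[0], a[1] == b[1])  # prints: 120 True False
```

In this sketch, drawing a different seed for the same reference yields a different trajectory that still starts from the reference frame; treating the noise as the source of output diversity is an assumption of the sketch.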

Note: in the VoxCeleb2 (Vox2) preprocessing, faces are centered, hence the absence of head translation.

Several outputs can be obtained from the same reference image. See below for an illustration with SUHMo-RNN trained on the CONFER database.

SUHMo is a framework that can be implemented in several forms. Below are the proposed LSTM and Transformer variants of our model.

Coming soon.

    @misc{https://doi.org/10.48550/arxiv.2211.00987,
      doi = {10.48550/ARXIV.2211.00987},
      url = {https://arxiv.org/abs/2211.00987},
      author = {Airale, Louis and Alameda-Pineda, Xavier and Lathuilière, Stéphane and Vaufreydaz, Dominique},
      keywords = {Computer Vision and Pattern Recognition (cs.CV), FOS: Computer and information sciences},
      title = {Autoregressive GAN for Semantic Unconditional Head Motion Generation},
      publisher = {arXiv},
      year = {2022},
      copyright = {arXiv.org perpetual, non-exclusive license}
    }

A. Bulat and G. Tzimiropoulos, “How far are we from solving the 2D & 3D face alignment problem? (and a dataset of 230,000 3D facial landmarks),” in ICCV, 2017.

C. Georgakis, Y. Panagakis, S. Zafeiriou, and M. Pantic, “The conflict escalation resolution (CONFER) database,” Image and Vision Computing, vol. 65, 2017.

J. S. Chung, A. Nagrani, and A. Zisserman, “VoxCeleb2: Deep speaker recognition,” in INTERSPEECH, 2018.