CalliRewrite: Recovering Handwriting Behaviors from Calligraphy Images without Supervision

ICRA 2024

This is the repository of CalliRewrite: Recovering Handwriting Behaviors from Calligraphy Images without Supervision.

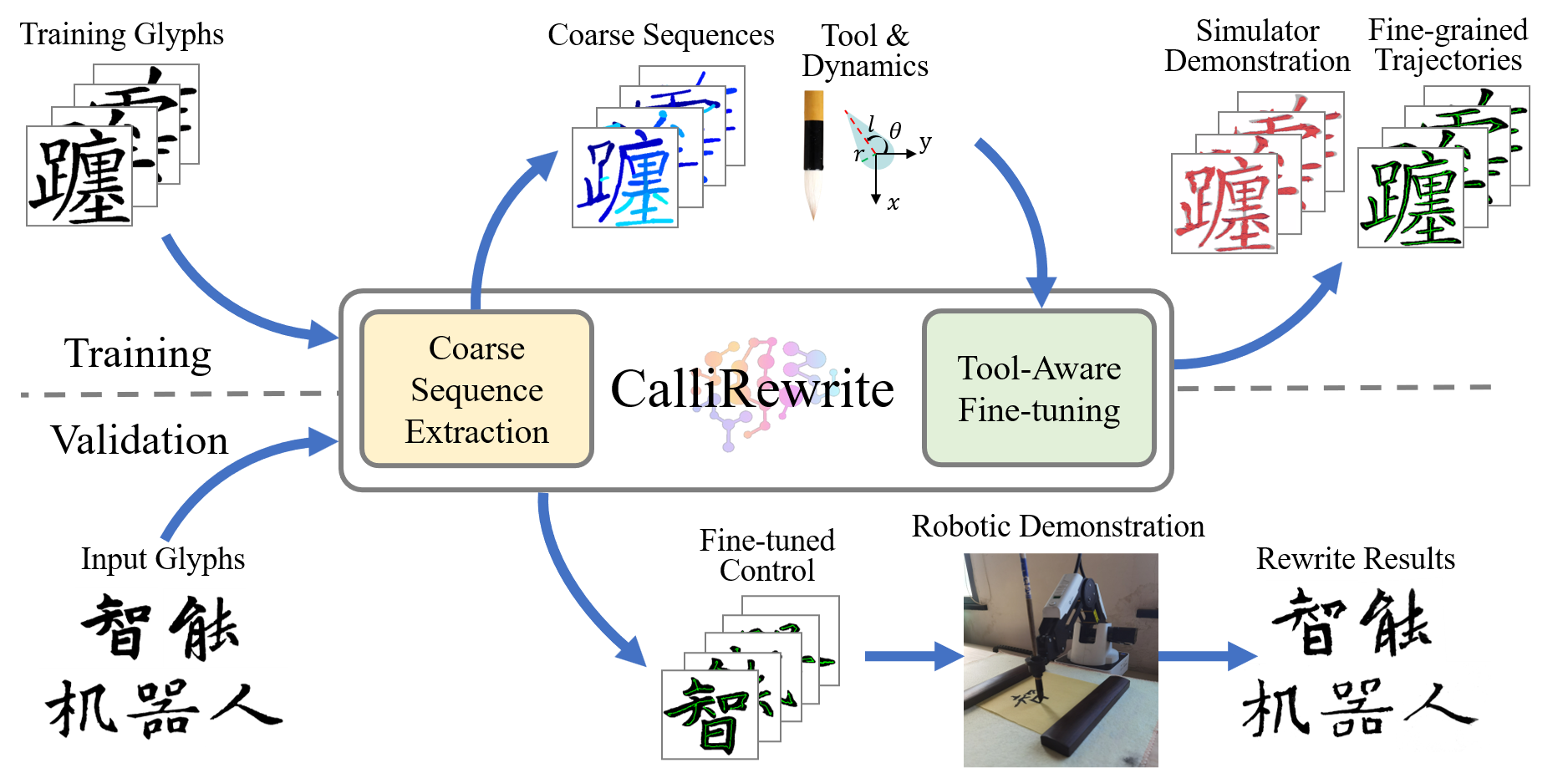

CalliRewrite is an unsupervised approach that enables low-cost robotic arms to replicate diverse calligraphic glyphs by manipulating different writing tools. We use a fine-tuned unsupervised LSTM to perform coarse stroke segmentation, then refine the strokes with reinforcement learning to produce fine-grained control.

For more information, please visit our project page.

- 2024.4.25 Coarse Sequence Extraction checkpoints released.

- 2024.4.6 CalliRewrite has been selected as a finalist for the IEEE ICRA Best Paper Award in Service Robotics

- 2024.2.27 Version 1.0 uploaded

We are releasing our network and checkpoints. The weights of the coarse sequence module are stored in this repository; download them and place them in the folders ./outputs/snapshot/new_train_phase_1 and ./outputs/snapshot/new_train_phase_2. The parameters of the SAC (Soft Actor-Critic) model are learned from the provided text images, so they vary with the writing tool and the input text.
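To confirm the checkpoints are in place before testing, a small check like the following can help. It is a minimal sketch, assuming the paths are resolved relative to the directory you pass as `root`; because this README does not list the checkpoint file names, it only verifies that each phase folder exists and is non-empty. The function name `missing_checkpoints` is our own, not part of the repository.

```python
from pathlib import Path

# Phase folder names taken from this README.
PHASE_DIRS = ("new_train_phase_1", "new_train_phase_2")


def missing_checkpoints(root="."):
    """Return the phase folders under outputs/snapshot that are absent or empty.

    Hypothetical helper: it does not know the checkpoint file names, so any
    non-empty folder is treated as present.
    """
    snapshot = Path(root) / "outputs" / "snapshot"
    missing = []
    for name in PHASE_DIRS:
        folder = snapshot / name
        # A folder counts as missing if it does not exist or contains nothing.
        if not folder.is_dir() or not any(folder.iterdir()):
            missing.append(name)
    return missing
```

For example, `print(missing_checkpoints() or "All checkpoints in place.")` reports any folder that still needs the downloaded weights.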

You can set up the pipeline by following the guidance below.

Due to package version dependencies, we need to set up two separate environments for coarse sequence extraction and tool-aware finetuning. To do this, you can follow these steps:

- Navigate to the directory for coarse sequence extraction:

  cd seq_extract

- Create a new conda environment from the provided environment file:

  conda env create -f environment.yml

- Activate the newly created environment (optional):

  conda activate calli_ext

- Navigate to the directory for sequence fine-tuning:

  cd ../rl_finetune

- Create a second conda environment from the fine-tuning environment file:

  conda env create -f environment.yml

- Activate the second environment:

  conda activate calli_rl

- Follow modify_env.md to fix some flaws in the installed packages (required!).

By following these steps, you will have two separate conda environments configured for coarse sequence extraction and sequence fine-tuning, ensuring that the correct dependencies are installed for each task.
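If you want to verify that both environments were created, the sketch below parses the JSON printed by the standard `conda env list --json` command and reports which environment names are missing. It assumes each environment.yml creates an environment whose name matches the `conda activate` commands in this README (`calli_ext` and `calli_rl`); the helper names are our own.

```python
import json
import subprocess
from pathlib import PurePath

# Names taken from the `conda activate` commands in this README; we assume
# each environment.yml creates an environment with exactly this name.
REQUIRED_ENVS = ("calli_ext", "calli_rl")


def conda_env_list_json():
    """Run `conda env list --json` and return its stdout (requires conda on PATH)."""
    result = subprocess.run(
        ["conda", "env", "list", "--json"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout


def missing_envs(env_list_json, required=REQUIRED_ENVS):
    """Return the required environment names absent from the given JSON output."""
    prefixes = json.loads(env_list_json).get("envs", [])
    # Named environments live in <conda_root>/envs/<name>, so the final
    # path component of each prefix is the environment name.
    names = {PurePath(prefix).name for prefix in prefixes}
    return [name for name in required if name not in names]
```

Usage: `print(missing_envs(conda_env_list_json()) or "Both environments are installed.")`. Parsing is kept separate from the subprocess call so the check can be tested without conda installed.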

We provide three simple writing tools modeled in the reinforcement learning environment: a calligraphy brush, a fude pen, and a flat-tip marker. Their geometric and dynamic properties are defined in ./rl_finetune/Callienv/envs/tools.py and in the folder ./rl_finetune/tool_property/. You can also easily define your own utensil.
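To illustrate the kind of information a tool definition carries, here is a deliberately simplified, hypothetical model in which a tool's footprint width grows linearly with pressing depth and then saturates. The class `ToolSpec`, its fields, and all numeric values are assumptions for illustration only; they are not the interface of ./rl_finetune/Callienv/envs/tools.py or the contents of ./rl_finetune/tool_property/, which remain the authoritative definitions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolSpec:
    """Hypothetical, simplified writing-tool model (not the repository's API).

    Footprint width grows linearly with pressing depth until it saturates.
    """
    name: str
    min_width: float  # footprint width at zero pressing depth (mm)
    max_width: float  # widest possible footprint (mm)
    gain: float       # extra width per mm of pressing depth

    def stroke_width(self, depth):
        """Return the footprint width (mm) for a pressing depth (mm), clamped to the tool's range."""
        depth = max(depth, 0.0)  # negative depth means the tip is lifted
        return min(self.min_width + self.gain * depth, self.max_width)


# Illustrative values only, chosen to show the qualitative differences.
BRUSH = ToolSpec("calligraphy_brush", min_width=0.5, max_width=12.0, gain=2.0)
FUDE_PEN = ToolSpec("fude_pen", min_width=0.3, max_width=3.0, gain=1.0)
MARKER = ToolSpec("flat_tip_marker", min_width=4.0, max_width=4.0, gain=0.0)
```

A real flat-tip marker's stroke width depends mainly on its orientation rather than on pressure; this sketch ignores orientation and simply gives the marker a constant width.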

For the robotic demonstration, we provide a Jupyter notebook at ./callibrate/callibrate.ipynb. It clearly demonstrates the whole process to find out the

We use two-phase progressive training; the first phase trains on the QuickDraw dataset. To begin, run the following shell commands:

conda activate calli_ext

cd seq_extract

Then you can train the two-phase model, using your own collected data for fine-tuning:

bash ./train.sh

To test a trained model, pass the input folder and the model name. For example, to test the model saved in "outputs/snapshot/new_train_phase_2" on the images in the "imgs" folder:

python ./test.py --input imgs --model new_train_phase_2

You can then move the inferred sequences, together with their images, to ./rl_finetune/data/train_data and ./rl_finetune/data/test_data using the commands below. Note that the number of train/test environments must evenly divide the number of train/test samples.
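Before moving the data, you can check the divisor constraint with a couple of lines of Python. This is a minimal sketch: it takes the sample count and environment count as plain integers, because this README does not say how the data files are counted or where the environment counts are configured; the function names are our own. The constraint presumably exists so that samples are split evenly across parallel environments.

```python
def check_env_split(num_samples, num_envs):
    """Return samples per environment, or raise ValueError if the split is uneven."""
    if num_envs <= 0:
        raise ValueError("num_envs must be positive")
    if num_samples % num_envs != 0:
        raise ValueError(
            f"{num_envs} environments do not evenly divide {num_samples} samples"
        )
    return num_samples // num_envs


def valid_env_counts(num_samples):
    """List every environment count that evenly divides num_samples."""
    return [n for n in range(1, num_samples + 1) if num_samples % n == 0]
```

For example, with 12 training images you could use 1, 2, 3, 4, 6, or 12 training environments, and `check_env_split(12, 4)` returns 3 samples per environment.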

conda activate calli_rl

cd ..

python move_data

cd rl_finetune

Then you can get started easily with:

bash ./scripts/train_brush.sh

@article{luo2024callirewrite,
  title={CalliRewrite: Recovering Handwriting Behaviors from Calligraphy Images without Supervision},
  author={Luo, Yuxuan and Wu, Zekun and Lian, Zhouhui},
  journal={arXiv preprint arXiv:2405.15776},
  year={2024}
}