Dizhan Xue, Shengsheng Qian, and Changsheng Xu.

MAIS, Institute of Automation, Chinese Academy of Sciences

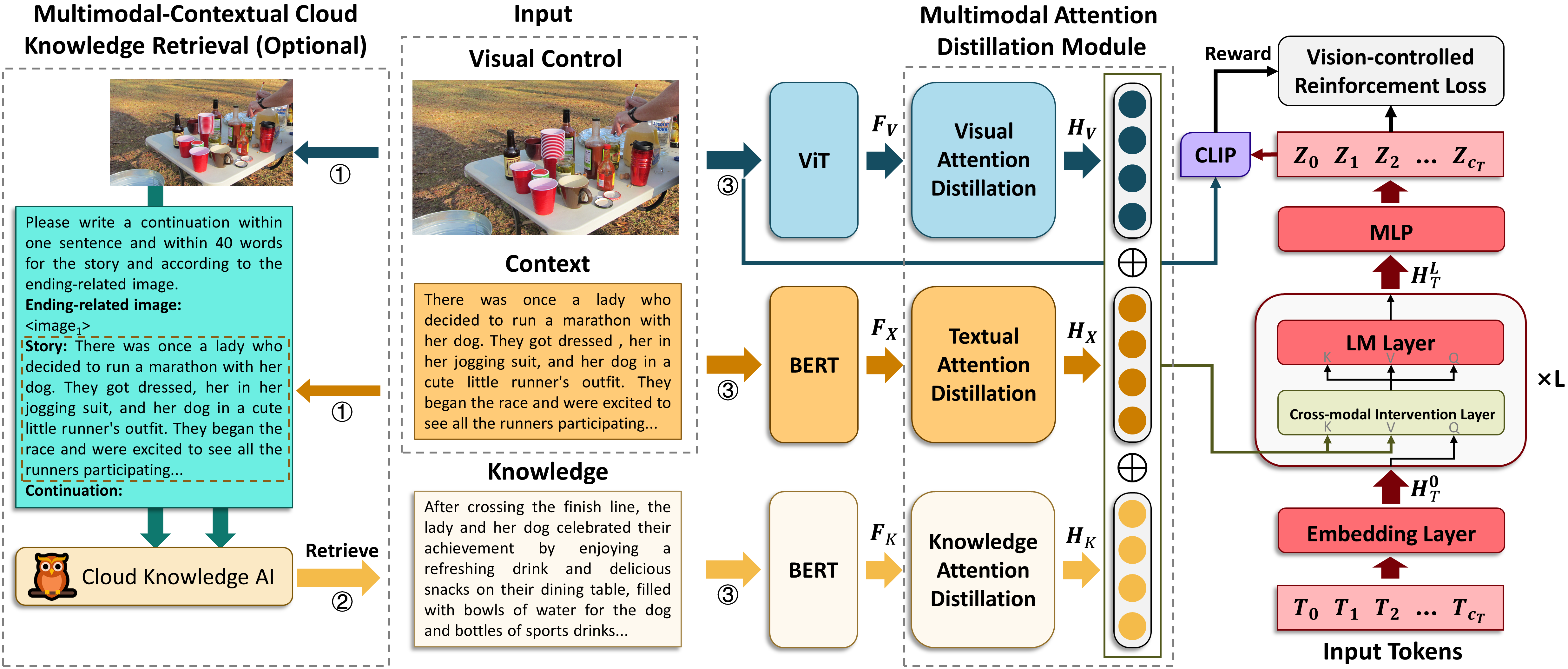

- Vision-Controllable Natural Language Generation (VCNLG) aims to continue natural language generation (NLG) following a perceived visual control.

- Vision-Controllable Language Model (VCLM) aligns a frozen visual encoder from BLIP, a frozen textual encoder BERT, and a trained-from-scratch or pretrained generative language model (LM).

- VCLM adopts an optional multimodal-contextual cloud knowledge retrieval step to improve edge computing AI when additional knowledge is needed.

- VCLM adopts vision-controlled reinforcement learning to constrain the trained model to follow visual controls.

1. Prepare the code and the environment

Clone our repository, create a Python environment, and activate it with the following commands:

git clone https://github.com/LivXue/VCNLG.git

cd VCNLG

conda env create -f environment.yml

conda activate vcnlg

We adopt the ViT pretrained by BLIP to extract visual features. Download the weights of BLIP w/ ViT-L and save the file to visual_feature_extraction/checkpoints/model_large.pth.

2. Prepare the datasets

VIST-E [Link]

Download SIS-with-labels.tar.gz, train_split.(0-12).tar.gz, val_images.tar.gz, test_images.tar.gz and unzip them into data/VIST-E.
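The unpacking step can also be scripted. The following is a minimal sketch, assuming all downloaded archives sit in one directory; the function name `extract_archives` and the `downloads` directory are hypothetical and not part of the repository. Depending on how the archives are laid out internally, the images may still need to be moved into data/VIST-E/images/ afterwards.

```python
import tarfile
from pathlib import Path


def extract_archives(archive_dir, dest_dir):
    """Extract every *.tar.gz archive found in archive_dir into dest_dir.

    Returns the sorted list of archive names that were extracted.
    """
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    extracted = []
    for archive in sorted(Path(archive_dir).glob("*.tar.gz")):
        # "r:gz" opens a gzip-compressed tar archive for reading.
        with tarfile.open(archive, "r:gz") as tar:
            tar.extractall(dest)
        extracted.append(archive.name)
    return extracted


if __name__ == "__main__":
    # "downloads" is a hypothetical location for the downloaded archives.
    if Path("downloads").is_dir():
        print(extract_archives("downloads", "data/VIST-E"))
```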

NOTE: There should be train.story-in-sequence.json, val.story-in-sequence.json, test.story-in-sequence.json in data/VIST-E/ and image_id.jpg/png in data/VIST-E/images/.
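Before running the feature extraction, it may help to confirm that the layout described in the note is in place. The sketch below only checks that the listed entries exist; the helper name `missing_paths` is hypothetical and not part of the repository.

```python
from pathlib import Path

# File names taken from the note above.
EXPECTED_VIST_FILES = [
    "train.story-in-sequence.json",
    "val.story-in-sequence.json",
    "test.story-in-sequence.json",
]


def missing_paths(root, names):
    """Return the entries of names that do not exist under root."""
    root = Path(root)
    return [name for name in names if not (root / name).exists()]


if __name__ == "__main__":
    # "images" is the sub-directory that should hold the image files.
    missing = missing_paths("data/VIST-E", EXPECTED_VIST_FILES + ["images"])
    print("missing:", missing if missing else "nothing")
```

The same helper can be reused for other directories by passing a different list of names.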

Then, run

python visual_feature_extraction/extract_fea_img.py --input_dir data/VIST-E/images --output_dir data/VIST-E/ViT_features --device <your device>

to extract the ViT features of images.

Then, run

python data/VIST-E/prepare_data.py --images_directory data/VIST-E/ViT_features --device <your device>

to generate the story files.

Finally, run

python data/VIST-E/extract_clip_feature.py --input_dir data/VIST-E/images --output_dir data/VIST-E/clip_features

to generate CLIP features.

NOTE: There should be story_train.json, story_val.json, story_test.json in data/VIST-E/, <image_id>.npy in data/VIST-E/ViT_features/, and <image_id>.npy in data/VIST-E/clip_features/.
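To spot images that were skipped during feature extraction, the image ids can be compared against the saved .npy files. This is a rough sketch, assuming each feature file is named after the image file stem as the note suggests; `ids_without_features` is a hypothetical helper, not a script shipped with the repository.

```python
from pathlib import Path


def ids_without_features(image_dir, feature_dir):
    """Return the sorted image ids that have no <image_id>.npy in feature_dir."""
    # Only .jpg and .png files are treated as images, matching the note above.
    image_ids = {
        p.stem
        for p in Path(image_dir).iterdir()
        if p.suffix.lower() in {".jpg", ".png"}
    }
    feature_ids = {p.stem for p in Path(feature_dir).glob("*.npy")}
    return sorted(image_ids - feature_ids)


if __name__ == "__main__":
    for name in ("ViT_features", "clip_features"):
        feature_dir = Path("data/VIST-E") / name
        if feature_dir.is_dir() and Path("data/VIST-E/images").is_dir():
            missing = ids_without_features("data/VIST-E/images", feature_dir)
            print(name, "is missing", len(missing), "feature files")
```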

LSMDC-E [Link]

Download LSMDC 2021 version (task1_2021.zip, MPIIMD_downloadLinks.txt, MVADaligned_downloadLinks.txt) and unzip them into data/LSMDC-E.

NOTE: Due to the LSMDC agreement, we cannot share the data with any third party.

NOTE: There should be LSMDC16_annos_training_someone.csv, LSMDC16_annos_val_someone.csv, LSMDC16_annos_test_someone.csv, MPIIMD_downloadLinks.txt, MVADaligned_downloadLinks.txt in data/LSMDC-E/.

Then, merge MPIIMD_downloadLinks.txt and MVADaligned_downloadLinks.txt into a single download_video_urls.txt file, set your LSMDC user name and password in data/LSMDC-E/generate_clips.py, and run

python data/LSMDC-E/generate_clips.py --output_path data/LSMDC-E/videos --user_name <your user name to LSMDC> --password <your password to LSMDC>

to download videos and save resampled frames into data/LSMDC-E/videos.
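The merge of the two link files, which has to happen before the command above is run, can be sketched as follows. This assumes both files list one URL per line and that a plain concatenation is what generate_clips.py expects; the helper name `merge_link_files` and the target location inside data/LSMDC-E/ are assumptions, so check the script for the path it actually reads.

```python
from pathlib import Path


def merge_link_files(sources, target):
    """Concatenate the non-empty lines of sources into target, one per line.

    Returns the number of lines written.
    """
    lines = []
    for source in sources:
        for line in Path(source).read_text().splitlines():
            line = line.strip()
            if line:  # skip blank lines
                lines.append(line)
    Path(target).write_text("\n".join(lines) + "\n")
    return len(lines)


if __name__ == "__main__":
    root = Path("data/LSMDC-E")
    sources = [root / "MPIIMD_downloadLinks.txt",
               root / "MVADaligned_downloadLinks.txt"]
    if all(s.is_file() for s in sources):
        # The target location is an assumption, see the text above.
        print(merge_link_files(sources, root / "download_video_urls.txt"))
```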

Then, run

python visual_feature_extraction/extract_fea_video.py --input_dir data/LSMDC-E/videos --output_dir data/LSMDC-E/ViT_features --device <your device>

to extract the ViT features of video frames.

Then, run

python data/LSMDC-E/prepare_data.py --input_path data/LSMDC-E

to generate the story files.

Finally, run

python data/LSMDC-E/extract_clip_feature_video.py --input_dir data/LSMDC-E/videos --output_dir data/LSMDC-E/clip_features

to generate CLIP features.

NOTE: There should be story_train.json, story_val.json, story_test.json in data/LSMDC-E/, <video_id>.npy in data/LSMDC-E/ViT_features/, and <video_id>.npy in data/LSMDC-E/clip_features/.

3. (Optional) Fetch Textual Knowledge

Download the code and pretrained checkpoints of mPLUG-Owl.

Then, run our script

python mPLUG-Owl/test_onshot.py

to retrieve knowledge for the datasets.

Check the configs in utils/opts.py and run

python train.py --dataset <dataset>

to train the model.

Then, run

python eval.py --dataset <dataset>

to test the model.
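Training and evaluation can be chained so that evaluation only starts if training finishes without error. The sketch below is a hypothetical wrapper, not part of the repository; it uses only the `--dataset` flag shown above, and the dataset name must be supplied by the caller because the accepted values are defined in utils/opts.py.

```python
import subprocess
import sys


def build_commands(dataset):
    """Return the train and eval command lines for the given dataset name."""
    return [
        [sys.executable, "train.py", "--dataset", dataset],
        [sys.executable, "eval.py", "--dataset", dataset],
    ]


def train_then_eval(dataset):
    """Run training, then evaluation; stop at the first failing command."""
    for command in build_commands(dataset):
        # check=True raises CalledProcessError on a non-zero exit code.
        subprocess.run(command, check=True)


if __name__ == "__main__":
    # Expects the dataset name as the only command-line argument.
    if len(sys.argv) == 2:
        train_then_eval(sys.argv[1])
```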

Coming soon...

We provide the results generated by VCLM on the VIST-E and LSMDC-E test sets in results/.

This repository is under BSD 3-Clause License.