Xinyu Liu, Beiwen Tian, Zhen Wang, Rui Wang, Kehua Sheng, Bo Zhang, Hao Zhao, Guyue Zhou

Paper Accepted to CVPR 2023.

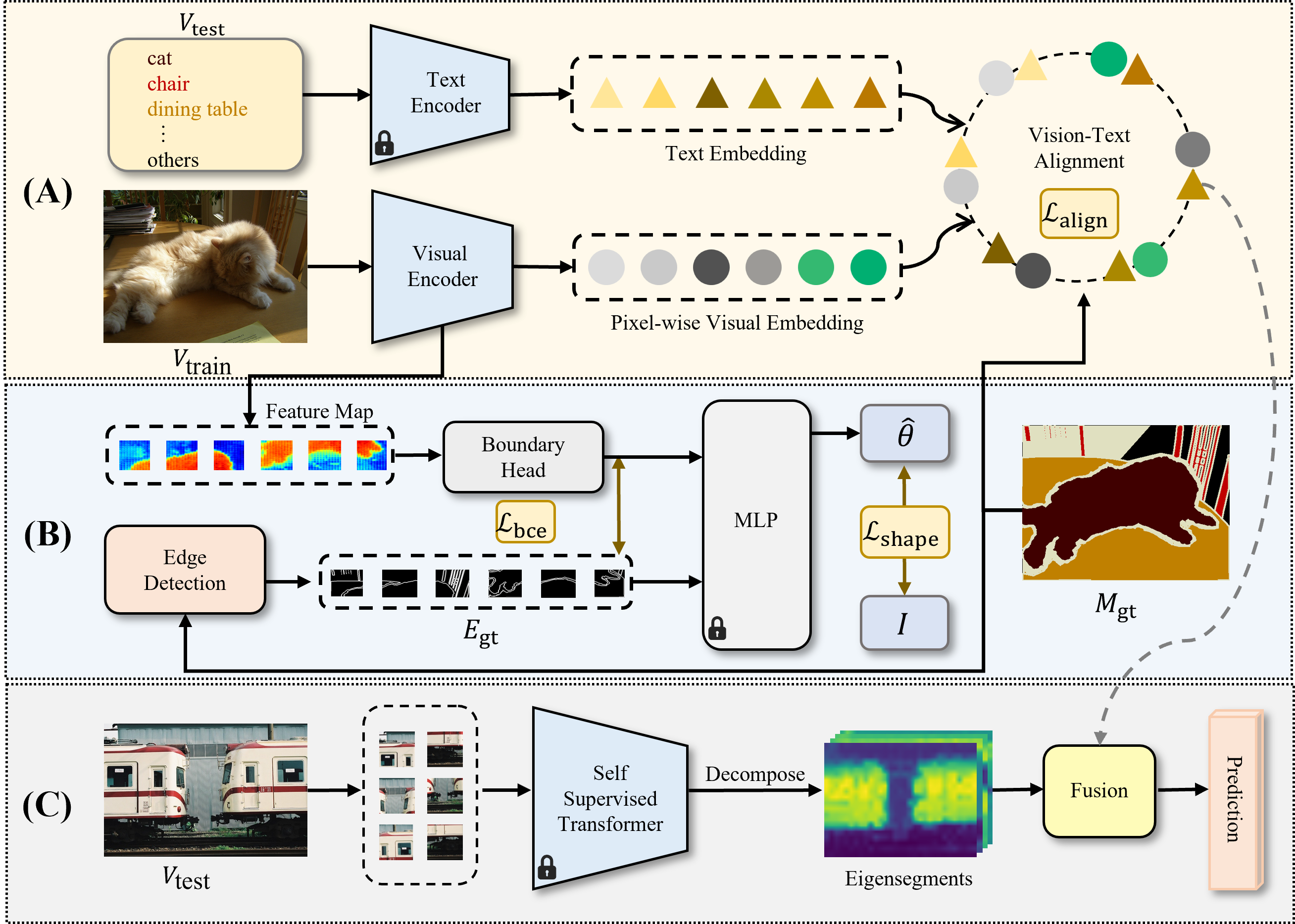

Thanks to the impressive progress of large-scale vision-language pretraining, recent recognition models can classify arbitrary objects in a zero-shot and open-set manner, with surprisingly high accuracy. However, translating this success to semantic segmentation is not trivial: this dense prediction task requires not only accurate semantic understanding but also fine shape delineation, whereas existing vision-language models are trained with image-level language descriptions. To bridge this gap, we pursue shape-aware zero-shot semantic segmentation in this study. Inspired by classical spectral methods in the image segmentation literature, we propose to leverage the eigenvectors of Laplacian matrices constructed with self-supervised pixel-wise features to promote shape-awareness. Although this simple and effective technique does not make use of the masks of seen classes at all, we demonstrate that it outperforms a state-of-the-art shape-aware formulation that aligns ground-truth and predicted edges during training. We also delve into the performance gains achieved on different datasets using different backbones and draw several interesting and conclusive observations: the benefits of promoting shape-awareness are highly related to mask compactness and language embedding locality. Finally, our method sets new state-of-the-art performance for zero-shot semantic segmentation on both Pascal and COCO, with significant margins.

Requirements:

- torch=1.7.1
- torchvision=0.8.2
- clip=1.0
- timm=0.4.12

Download the PASCAL-5i and COCO-20i datasets by following the instructions HERE.
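The "Fold" used throughout this README refers to the seen/unseen class split. Under the standard PASCAL-5i convention, the 20 VOC classes are divided into 4 folds of 5 consecutive classes, and fold i holds out classes 5i+1 to 5i+5. The sketch below assumes this repository follows that convention; the helper name pascal5i_split is hypothetical and not part of the codebase.

```python
from typing import List, Tuple

# Standard PASCAL VOC class order (background excluded).
# Assumption: this repo splits them into PASCAL-5i folds the usual way.
VOC_CLASSES = [
    "aeroplane", "bicycle", "bird", "boat", "bottle",
    "bus", "car", "cat", "chair", "cow",
    "diningtable", "dog", "horse", "motorbike", "person",
    "pottedplant", "sheep", "sofa", "train", "tvmonitor",
]


def pascal5i_split(fold: int) -> Tuple[List[str], List[str]]:
    """Hypothetical helper: return (seen, unseen) class names for fold 0-3."""
    if fold not in range(4):
        raise ValueError(f"fold must be 0, 1, 2 or 3, got {fold}")
    # Fold i holds out 5 consecutive classes as unseen.
    unseen = VOC_CLASSES[5 * fold: 5 * fold + 5]
    seen = [c for c in VOC_CLASSES if c not in unseen]
    return seen, unseen


if __name__ == "__main__":
    for f in range(4):
        _, unseen = pascal5i_split(f)
        print(f"fold {f}: unseen = {unseen}")
```

COCO-20i is split analogously into 4 folds of 20 classes, but more than one class ordering is in use across papers, so check the dataset instructions before assuming a particular assignment.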

The ./datasets/ folder should have the following hierarchy:

datasets/
├── VOC2012/                    # PASCAL VOC2012 devkit
│   ├── Annotations/
│   ├── ImageSets/
│   ├── ...
│   ├── SegmentationClassAug/
│   └── pascal_k5/
└── COCO2014/
    ├── annotations/
    │   ├── train2014/          # (dir.) training masks
    │   ├── val2014/            # (dir.) validation masks
    │   └── ..some json files..
    ├── train2014/
    ├── val2014/
    └── coco_k5/
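Before launching training, it can help to confirm that the hierarchy above is in place. The following is a minimal sketch with a hypothetical helper (missing_dirs) that checks only the directories named in the tree; the pascal_k5/ and coco_k5/ folders will be reported as missing until the eigenvector download step has been completed.

```python
from pathlib import Path
from typing import List

# Directories taken from the hierarchy above (the "..." entry and the
# JSON files are not checked).
EXPECTED_DIRS = [
    "VOC2012/Annotations",
    "VOC2012/ImageSets",
    "VOC2012/SegmentationClassAug",
    "VOC2012/pascal_k5",
    "COCO2014/annotations/train2014",
    "COCO2014/annotations/val2014",
    "COCO2014/train2014",
    "COCO2014/val2014",
    "COCO2014/coco_k5",
]


def missing_dirs(root: str = "./datasets") -> List[str]:
    """Hypothetical helper: return expected sub-directories absent under root."""
    base = Path(root)
    return [d for d in EXPECTED_DIRS if not (base / d).is_dir()]


if __name__ == "__main__":
    missing = missing_dirs()
    if missing:
        print("Missing directories:")
        for d in missing:
            print("  " + d)
    else:
        print("Dataset layout looks complete.")
```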

Download the top K=5 eigenvectors of the Laplacian matrix of image features from HERE. Unzip the archive and merge its contents into the ./datasets/VOC2012/pascal_k5/ and ./datasets/COCO2014/coco_k5/ folders shown in the hierarchy above.
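The downloaded files hold precomputed Laplacian eigenvectors of self-supervised image features, the shape cue described in the abstract. The sketch below illustrates the basic spectral step under stated assumptions: a cosine-similarity affinity with negative values clipped to zero, the unnormalized Laplacian L = D - W (D being the diagonal degree matrix), and the k eigenvectors with the smallest eigenvalues. The function name and these choices are illustrative only; the exact affinity, normalization, and feature extractor used to produce the released files are not specified in this README, nor is whether the trivial constant eigenvector is kept.

```python
import numpy as np


def laplacian_topk_eigvecs(feats: np.ndarray, k: int = 5) -> np.ndarray:
    """Illustrative sketch: k Laplacian eigenvectors with smallest eigenvalues.

    feats: (N, D) array, one feature vector per pixel or patch.
    Returns an (N, k) array whose columns are orthonormal eigenvectors.
    """
    # Cosine-similarity affinity; clipping negatives to 0 is an assumed choice.
    normed = feats / (np.linalg.norm(feats, axis=1, keepdims=True) + 1e-8)
    W = np.clip(normed @ normed.T, 0.0, None)
    # Unnormalized graph Laplacian L = D - W.
    L = np.diag(W.sum(axis=1)) - W
    # L is symmetric, so eigh returns real eigenvalues in ascending order.
    _, eigvecs = np.linalg.eigh(L)
    return eigvecs[:, :k]


if __name__ == "__main__":
    h, w = 16, 16  # hypothetical patch grid
    rng = np.random.default_rng(0)
    feats = rng.standard_normal((h * w, 64))  # stand-in for real features
    V = laplacian_topk_eigvecs(feats, k=5)
    maps = V.T.reshape(5, h, w)  # one spatial map per eigenvector
    print(maps.shape)  # (5, 16, 16)
```

Reshaping each eigenvector back onto the patch grid gives a soft map whose piecewise structure tends to follow object boundaries, which is why such maps can serve as a shape prior. Because the affinity is a dense N x N matrix, this direct approach is only practical for a coarse grid.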

To train on PASCAL-5i and COCO-20i, respectively (set --fold to one of 0, 1, 2, 3):

CUDA_VISIBLE_DEVICES=0,1,2,3 python pascal_vit.py train --arch vitl16_384 --fold {0, 1, 2, 3} --batch_size 6 --random-scale 2 --random-rotate 10 --lr 0.001 --lr-mode poly --benchmark pascal

CUDA_VISIBLE_DEVICES=0,1,2,3 python coco_vit.py train --arch vitl16_384 --fold {0, 1, 2, 3} --batch_size 6 --random-scale 2 --random-rotate 10 --lr 0.0002 --lr-mode poly --benchmark coco
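Both training commands use --lr-mode poly. A common form of the polynomial ("poly") schedule decays the learning rate as lr_t = lr_0 * (1 - t / T)^p. The sketch below assumes power p = 0.9, a frequent default in segmentation code; the power, the step unit, and the total number of steps used by this repository are assumptions, not values taken from the code.

```python
def poly_lr(base_lr: float, step: int, max_steps: int, power: float = 0.9) -> float:
    """Polynomial decay: base_lr * (1 - step / max_steps) ** power.

    power=0.9 is an assumed default, not read from this repository.
    """
    if not 0 <= step <= max_steps:
        raise ValueError("step must lie in [0, max_steps]")
    return base_lr * (1.0 - step / max_steps) ** power


if __name__ == "__main__":
    # --lr 0.001 as in the PASCAL command; max_steps=10000 is hypothetical.
    for step in (0, 2500, 5000, 7500, 10000):
        print(step, f"{poly_lr(0.001, step, 10000):.6f}")
```

In PyTorch, a function like this could be wrapped in torch.optim.lr_scheduler.LambdaLR by returning the multiplicative factor (1 - step / max_steps) ** power instead of the absolute rate.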

To test the trained model with its checkpoint:

CUDA_VISIBLE_DEVICES=0 python pascal_vit.py test --arch vitl16_384 --fold {0, 1, 2, 3} --batch_size 1 --benchmark pascal --eig_dir ./datasets/VOC2012/pascal_k5/ --resume "path_to_trained_model/best_model.pt"

CUDA_VISIBLE_DEVICES=0 python coco_vit.py test --arch vitl16_384 --fold {0, 1, 2, 3} --batch_size 1 --benchmark coco --eig_dir ./datasets/COCO2014/coco_k5/ --resume "path_to_trained_model/best_model.pt"
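The {0, 1, 2, 3} placeholder presumably means one fold per run, so a full evaluation takes four invocations per benchmark. The hypothetical helper below builds those commands using only the flags shown above; the per-fold checkpoint paths (checkpoints/pascal_fold0/best_model.pt and so on) are made-up placeholders to be replaced with the actual locations of your trained models.

```python
import shlex
from typing import List

# Script names and eigenvector folders copied from the commands above.
SCRIPTS = {"pascal": "pascal_vit.py", "coco": "coco_vit.py"}
EIG_DIRS = {
    "pascal": "./datasets/VOC2012/pascal_k5/",
    "coco": "./datasets/COCO2014/coco_k5/",
}


def build_test_cmd(fold: int, benchmark: str, checkpoint: str) -> List[str]:
    """Hypothetical helper: argument list for evaluating one fold."""
    return [
        "python", SCRIPTS[benchmark], "test",
        "--arch", "vitl16_384",
        "--fold", str(fold),
        "--batch_size", "1",
        "--benchmark", benchmark,
        "--eig_dir", EIG_DIRS[benchmark],
        "--resume", checkpoint,
    ]


if __name__ == "__main__":
    for fold in range(4):
        # Placeholder checkpoint layout; adjust to your own paths.
        ckpt = f"checkpoints/pascal_fold{fold}/best_model.pt"
        cmd = build_test_cmd(fold, "pascal", ckpt)
        print("CUDA_VISIBLE_DEVICES=0 " + " ".join(shlex.quote(a) for a in cmd))
```

Each printed line can be pasted into a shell; alternatively, the argument list can be passed to subprocess.run with CUDA_VISIBLE_DEVICES set in its env argument.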

Our pre-trained models can be downloaded from the tables below:

| Dataset | Fold | Backbone | Text Encoder | mIoU (%) | URL |
|---|---|---|---|---|---|
| PASCAL | 0 | ViT-L/16 | ViT-B/32 | 62.7 | download |
| PASCAL | 1 | ViT-L/16 | ViT-B/32 | 64.3 | download |
| PASCAL | 2 | ViT-L/16 | ViT-B/32 | 60.6 | download |
| PASCAL | 3 | ViT-L/16 | ViT-B/32 | 50.2 | download |

| Dataset | Fold | Backbone | Text Encoder | mIoU (%) | URL |
|---|---|---|---|---|---|
| COCO | 0 | ViT-L/16 | ViT-B/32 | 33.8 | download |
| COCO | 1 | ViT-L/16 | ViT-B/32 | 38.1 | download |
| COCO | 2 | ViT-L/16 | ViT-B/32 | 34.4 | download |
| COCO | 3 | ViT-L/16 | ViT-B/32 | 35.0 | download |

| Dataset | Fold | Backbone | Text Encoder | mIoU (%) | URL |
|---|---|---|---|---|---|
| COCO | 0 | DRN | ViT-B/32 | 34.2 | download |
| COCO | 1 | DRN | ViT-B/32 | 36.5 | download |
| COCO | 2 | DRN | ViT-B/32 | 34.6 | download |
| COCO | 3 | DRN | ViT-B/32 | 35.6 | download |
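The tables report mIoU, the mean over classes of intersection-over-union, IoU = TP / (TP + FP + FN). The sketch below shows one standard way to compute it by accumulating a confusion matrix over the whole evaluation set before dividing. It assumes label 255 marks ignored pixels (the usual VOC convention); which classes this repository averages over for each fold is not stated in this README. The last lines simply average the four PASCAL ViT-L/16 fold scores from the first table.

```python
import numpy as np


def confusion_matrix(pred, gt, num_classes, ignore_index=255):
    """Count pixels into a (C, C) matrix: rows = ground truth, cols = prediction.

    Assumes pred values lie in [0, num_classes) and ignored gt pixels use 255.
    """
    valid = gt != ignore_index
    idx = num_classes * gt[valid].astype(np.int64) + pred[valid].astype(np.int64)
    return np.bincount(idx, minlength=num_classes ** 2).reshape(num_classes, num_classes)


def mean_iou(conf):
    """Mean per-class IoU, skipping classes absent from both gt and prediction."""
    tp = np.diag(conf).astype(np.float64)
    union = conf.sum(axis=0) + conf.sum(axis=1) - tp  # TP + FP + FN
    present = union > 0
    return float((tp[present] / union[present]).mean())


if __name__ == "__main__":
    gt = np.array([[0, 0], [1, 1]])
    pred = np.array([[0, 1], [1, 1]])
    print(round(mean_iou(confusion_matrix(pred, gt, 2)), 4))  # 0.5833

    fold_miou = [62.7, 64.3, 60.6, 50.2]  # PASCAL, ViT-L/16, folds 0-3
    print(f"{sum(fold_miou) / len(fold_miou):.2f}")  # 59.45
```

Summing confusion matrices across all images and computing IoU once at the end weights every pixel equally, which differs from averaging per-image IoU scores.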