We use MMHuman3D to estimate coarse human poses.

- Prepare MMHuman3D:

  - Follow the official instructions for installation and body model preparation.

  - Choose a pre-trained model and download the related resources. We use HMR for our cases. The file structure should look like this:

        mmhuman3d
        ├── mmhuman3d
        ├── docs
        ├── demo
        ├── tests
        ├── tools
        ├── configs
        └── data
            └── body_models
                ├── J_regressor_extra.npy
                ├── J_regressor_h36m.npy
                ├── smpl_mean_params.npz
                └── smpl
                    ├── SMPL_FEMALE.pkl
                    ├── SMPL_MALE.pkl
                    └── SMPL_NEUTRAL.pkl

- Prepare the h^2^tc data: download the h^2^tc data and fetch the `rgbd0` image folder.

- Extract the human body pose:

      cd mmhuman3d
      python demo/estimate_smpl.py \
          configs/hmr/resnet50_hmr_pw3d.py \
          data/checkpoints/resnet50_hmr_pw3d.pth \
          --multi_person_demo \
          --tracking_config demo/mmtracking_cfg/deepsort_faster-rcnn_fpn_4e_mot17-private-half.py \
          --input_path ${DATASET_PATH}/${TAKE_ID}/processed/rgbd0 \
          --show_path ${RESULT_OUT_PATH}/${TAKE_ID}.mp4 \
          --smooth_type savgol \
          --speed_up_type deciwatch \
          --draw_bbox \
          --output ${RESULT_OUT_PATH}

  - `--input_path` is the input image folder. Please specify `${DATASET_PATH}/${TAKE_ID}`.
  - `--show_path` saves the visualized results in `.mp4` format. Please specify `${RESULT_OUT_PATH}/${TAKE_ID}.mp4`.
  - `--output` is the output folder path. The estimated human body poses are saved in `${RESULT_OUT_PATH}/inference_result.npz`.
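When several takes need to be processed, the same command can be assembled programmatically. The following is a minimal sketch, not part of MMHuman3D or of this repository: the helper name `build_estimate_cmd`, the example paths and the take ID list are hypothetical, while the script, config, checkpoint and flags are copied from the command above.

```python
import shlex
from pathlib import Path


def build_estimate_cmd(dataset_path, take_id, result_out_path):
    """Assemble the demo/estimate_smpl.py call from this section for one take.

    Hypothetical helper: it only mirrors the arguments documented above.
    """
    out = Path(result_out_path)
    return [
        "python", "demo/estimate_smpl.py",
        "configs/hmr/resnet50_hmr_pw3d.py",
        "data/checkpoints/resnet50_hmr_pw3d.pth",
        "--multi_person_demo",
        "--tracking_config",
        "demo/mmtracking_cfg/deepsort_faster-rcnn_fpn_4e_mot17-private-half.py",
        "--input_path", str(Path(dataset_path) / take_id / "processed" / "rgbd0"),
        "--show_path", str(out / f"{take_id}.mp4"),
        "--smooth_type", "savgol",
        "--speed_up_type", "deciwatch",
        "--draw_bbox",
        "--output", str(out),
    ]


if __name__ == "__main__":
    # Hypothetical dataset location and take IDs; replace with your own.
    for take in ["002870"]:
        cmd = build_estimate_cmd("/data/h2tc", take, "/data/vis_results")
        # Print the command; to execute it, one could call
        # subprocess.run(cmd, cwd="mmhuman3d", check=True) instead.
        print(" ".join(shlex.quote(arg) for arg in cmd))
```

Note that every take writes `inference_result.npz` into the `--output` folder, so a separate output folder per take may be needed to avoid overwriting earlier results.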

More detailed configs and explanations can be found here.

Because of visual occlusion, the human poses estimated by MMHuman3D can be coarse, particularly in the arms and hands. However, our dataset also provides multi-modal data streams, such as OptiTrack, SMP glove poses and RGB-D images, which users can exploit to refine the estimated human poses. Here is an example pipeline that shows how the OptiTrack streams and SMP glove poses can be used to enhance the estimated human poses.

- Prepare the h^2^tc data.

  - Download the h^2^tc data and process it.

  - Follow the manual calibration instructions to calibrate the camera's extrinsic parameters.

  - Place the extrinsic parameters file `CamExtr.txt` in the folder `${TAKE_ID}`, like:

        002870
        ├── processed
        └── CamExtr.txt

- Prepare the environment

      conda create -n pose python=3.7
      conda activate pose
      conda install pytorch==1.6.0 torchvision==0.7.0 cudatoolkit=10.1 -c pytorch
      pip install matplotlib opencv-python scikit-learn trimesh \
          Pillow pyrender pyglet==1.5.15 tensorboard \
          git+https://github.com/nghorbani/configer \
          torchgeometry==0.1.2 smplx==0.1.28

- Prepare SMPL+H model

  (Note: In the previous coarse human pose estimation stage, MMHuman3D estimates human body poses only. In this stage, we want to recover both body and hand poses, so we use the SMPL+H model.)

  - Create an account on the project page.

  - Go to the `Downloads` page and download the `Extended SMPL+H model (used in AMASS)`.

  - Unzip `smplh.tar.xz` to the folder `body_models`.

- Prepare Pose Prior VPoser

  (VPoser is used to regularize the optimized body poses and avoid impossible poses.)

  - Create an account on the project page.

  - Go to the `Download` page and, under `VPoser: Variational Human Pose Prior`, click on `Download VPoser v1.0 - CVPR'19`. (Note: it is important to download v1.0 and not v2.0, which is not supported and will not work.)

  - Unzip `vposer_v1_0.zip` to the folder `checkpoints`.

- File structure:

      pose_reconstruction_frommm
      ├── body_models
      │   ├── neutral
      │   │   └── model.npz
      │   ├── female
      │   │   └── model.npz
      │   └── male
      │       └── model.npz
      ├── checkpoints
      │   └── vposer_v1_0
      ├── motion_modeling
      ├── pose_fitting
      ├── utils
      ├── config.py
      ├── fit_h2tc_mm.cfg          # config file
      ├── h2tc_fit_dataset_mm.py
      ├── motion_optimizer.py
      └── run_fitting_mm.py

- Run the multi-modal optimizer to optimize the human poses with the OptiTrack data and the SMP glove hand data.

      python pose_reconstruction_frommm/run_fitting_mm.py \
          @./pose_reconstruction_frommm/fit_h2tc_mm.cfg \
          --smplh ./pose_reconstruction_frommm/body_models/male/model.npz \
          --data-path ${DATASET_PATH}/${TAKE_ID}/processed/rgbd0 \
          --mmhuman ${MMHUMAN3D_OUTPATH}/inference_result.npz \
          --out ${OUTPATH}

  - `fit_h2tc_mm.cfg` is the configuration file.
  - `--smplh` specifies the SMPL+H human model path. We use the male model by default. You can change the path to specify the female (`female/model.npz`) or neutral (`neutral/model.npz`) human model.
  - `--data-path` specifies the processed h^2^tc image folder, e.g., `root/002870/processed/rgbd0`.
  - `--mmhuman` specifies the coarse body pose file produced by the coarse human pose estimation, e.g., `root/vis_results/inference_result.npz`.
  - `--out` specifies the folder in which the optimized human pose results are saved. The visualized meshes are saved in `${OUTPATH}/body_meshes_humor`, and the results are saved in `${OUTPATH}/results_out/stage2_results.npz`.
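Since the optimizer depends on files prepared in several earlier steps, it can help to check that they are all in place before launching it. The sketch below is an illustration only and is not shipped with the repository: the function name `missing_inputs` and the example paths are hypothetical, and the list of required paths is simply read off the file structure and command arguments described above.

```python
from pathlib import Path


def missing_inputs(repo_root, take_dir, mmhuman_npz, gender="male"):
    """Return the inputs named in this section that do not exist on disk.

    Hypothetical pre-flight check; it does not validate file contents.
    """
    repo = Path(repo_root) / "pose_reconstruction_frommm"
    take = Path(take_dir)
    required = [
        repo / "fit_h2tc_mm.cfg",                    # configuration file
        repo / "body_models" / gender / "model.npz", # SMPL+H body model
        repo / "checkpoints" / "vposer_v1_0",        # VPoser v1.0 checkpoint folder
        take / "processed" / "rgbd0",                # processed image folder
        take / "CamExtr.txt",                        # camera extrinsic parameters
        Path(mmhuman_npz),                           # coarse poses from MMHuman3D
    ]
    return [str(path) for path in required if not path.exists()]


if __name__ == "__main__":
    # Hypothetical locations; replace with your own.
    problems = missing_inputs(
        repo_root=".",
        take_dir="/data/h2tc/002870",
        mmhuman_npz="/data/vis_results/inference_result.npz",
    )
    if problems:
        print("Missing inputs:")
        for path in problems:
            print("  " + path)
    else:
        print("All inputs found.")
```

Pass `gender="female"` or `gender="neutral"` when a different body model is given to `--smplh`.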

Given the coarse MMHuman3D pose estimation

The OptiTrack term, denoted as

The wrist cost, denoted as

where

Independent frame-by-frame pose estimation often leads to temporal inconsistency in the estimated poses. To address this issue, we introduce a regularization term, denoted as

For the hand poses, we have already obtained the ground truth values

To animate the human model and retarget the motion to robots, we first convert the human model motion to a general animation format (.fbx). We then retarget the animation to new rigged models. The operations below require professional animation skills, including rigging and skinning. Please go through the rigging and skinning tutorial first to get the know-how.

- Prepare the environment

  - Install Python FBX.

  - Run the following commands.

        cd smplh_to_fbx_animation
        pip install -r requirements.txt

- Prepare the SMPL+H FBX model

  - Follow the SMPL-X Blender Add-On Tutorial to export the T-pose SMPL+H skinned mesh as a rigged model. (For convenience, you can export and use the SMPL-X skinned mesh. In this step, the SMPL+H and SMPL-X meshes are equivalent, as we only aim to transfer human motion to an .fbx animation.) Save the female skinned mesh `smplx-female.fbx` and the male skinned mesh `smplx-male.fbx` to the folder `./FBX_model`.

  - File structure:

        SMPL-to-FBX-main
        ├── FBX_model
        │   ├── smplx-female.fbx
        │   └── smplx-male.fbx
        ├── Convert.py
        ├── FbxReadWriter.py
        ├── SMPLXObject.py
        ├── SmplObject.py
        └── PathFilter.py

- Run the following command to start converting.

      python smplh_to_fbx_animation/Convert.py \
          --input_motion_base ${SMPLH_POSE} \
          --fbx_source_path FBX_model/smplx-male.fbx \
          --output_base ${OUT_PATH}

  - `--input_motion_base`: specifies the optimized pose path.
  - `--fbx_source_path`: specifies the skinned mesh. We use the male mesh by default.
  - `--output_base`: the animation file will be saved in `${OUT_PATH}\fbx_animation`. You can open it via Blender or Unity 3D.
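To switch between the male and female skinned meshes without editing the command by hand, the call can be built from a small helper. This is a sketch under stated assumptions rather than part of the conversion tool: `build_convert_cmd` and the example paths are hypothetical, and only the script path, the three flags and the two mesh file names are taken from the text above.

```python
def build_convert_cmd(smplh_pose, out_path, gender="male"):
    """Assemble the Convert.py call from this section for one pose input.

    Hypothetical helper: only the male and female meshes are mentioned in
    the text, so any other value is rejected.
    """
    if gender not in ("male", "female"):
        raise ValueError("gender must be 'male' or 'female', got %r" % (gender,))
    return [
        "python", "smplh_to_fbx_animation/Convert.py",
        "--input_motion_base", str(smplh_pose),
        "--fbx_source_path", "FBX_model/smplx-%s.fbx" % gender,
        "--output_base", str(out_path),
    ]


if __name__ == "__main__":
    # Hypothetical input and output locations; replace with your own.
    cmd = build_convert_cmd("/data/optimized_poses", "/data/fbx_out", gender="female")
    print(" ".join(cmd))
```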

We use Unity 3D (2022.3.17) to demonstrate the retargeting process. Please refer to the tutorial video for step-by-step instructions. Here is a brief overview of the steps involved:

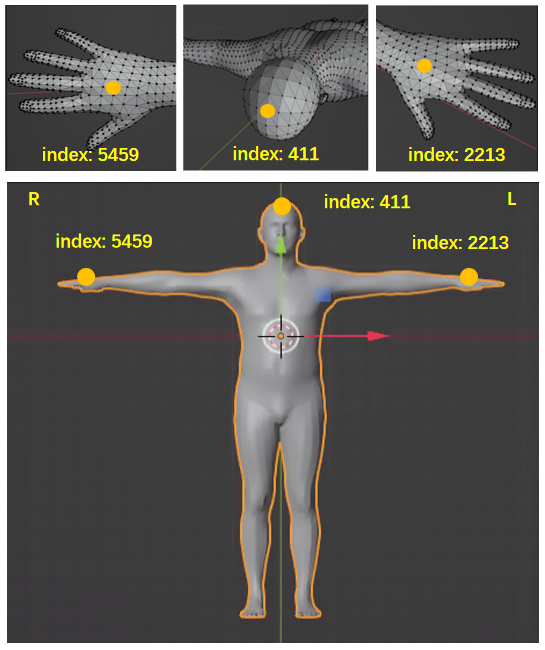

- Rigging the mesh model: Start with a mesh model and bind the mesh vertices to bones, so that we can animate the mesh model by animating its bones.

- Specify corresponding skeleton joints: In Unity 3D, after setting the rigged models as `humanoid` in the `animation type`, the software automatically solves the corresponding skeleton joints between the rigged models A and B.

- Animation: Follow the instructions provided in the tutorial video. Unity 3D employs the Linear Blend Skinning (LBS) algorithm for animation.
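For readers unfamiliar with Linear Blend Skinning, the idea is that each deformed vertex is a weighted sum of that vertex transformed by every bone it is bound to. The sketch below implements the textbook formula in plain Python for illustration; it is not Unity's implementation. All names are hypothetical, and each bone transform is assumed to be a 4x4 matrix that already maps rest-pose coordinates to posed coordinates (that is, it already includes the inverse bind matrix).

```python
def mat_vec(matrix, vector):
    """Multiply a 4x4 matrix (nested lists) by a homogeneous 4-vector."""
    return [sum(matrix[r][c] * vector[c] for c in range(4)) for r in range(4)]


def translation(tx, ty, tz):
    """Build a 4x4 homogeneous translation matrix."""
    return [[1, 0, 0, tx], [0, 1, 0, ty], [0, 0, 1, tz], [0, 0, 0, 1]]


IDENTITY = translation(0, 0, 0)


def linear_blend_skinning(vertices, weights, bone_transforms):
    """Deform rest-pose vertices with v_i' = sum_j w_ij * (T_j v_i).

    vertices:        list of [x, y, z] rest-pose positions
    weights:         per-vertex list of skinning weights, one per bone,
                     assumed to sum to 1
    bone_transforms: list of 4x4 matrices, one per bone
    """
    skinned = []
    for vertex, vertex_weights in zip(vertices, weights):
        homogeneous = [vertex[0], vertex[1], vertex[2], 1.0]
        blended = [0.0, 0.0, 0.0]
        for weight, transform in zip(vertex_weights, bone_transforms):
            moved = mat_vec(transform, homogeneous)
            for axis in range(3):
                blended[axis] += weight * moved[axis]
        skinned.append(blended)
    return skinned


if __name__ == "__main__":
    # Two bones: the first stays in place, the second moves 2 units along x.
    bones = [IDENTITY, translation(2, 0, 0)]
    rest_vertices = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]]
    # The first vertex follows only bone 0; the second is shared equally.
    skin_weights = [[1.0, 0.0], [0.5, 0.5]]
    print(linear_blend_skinning(rest_vertices, skin_weights, bones))
    # prints [[0.0, 0.0, 0.0], [2.0, 0.0, 0.0]]
```

The vertex shared equally between the two bones moves by half of the second bone's translation, which is what makes the mesh bend smoothly around joints.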

The video below shows a collection of retargeting results: our pose reconstruction results and the retargeting results on the JVRC, Atlas, iCub and Pepper robots. You can find the rigged models of these four robots in the folder `assets\robot_models` (T-pose, .fbx format).