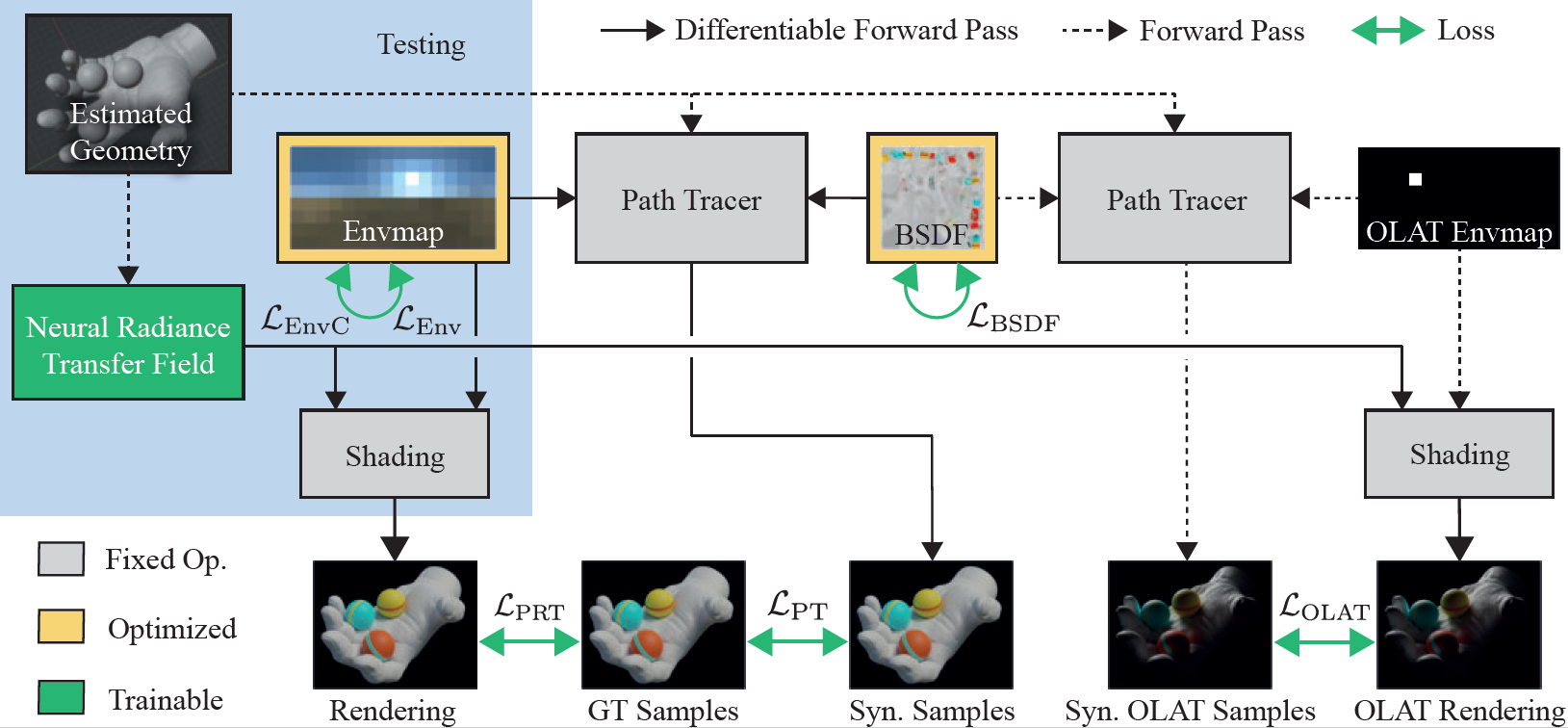

PyTorch implementation of Neural Radiance Transfer Fields for Relightable Novel-view Synthesis with Global Illumination.

Linjie Lyu¹, Ayush Tewari², Thomas Leimkühler¹, Marc Habermann¹, Christian Theobalt¹

¹Max Planck Institute for Informatics, Saarland Informatics Campus, ²MIT

- Install Mitsuba2 with a gpu_autodiff variant enabled.

- Requirements

  - python>=3.6 (tested on python=3.8.5)

  - pytorch>=1.6.0

  pip install tqdm scikit-image opencv-python pandas tensorboard addict imageio imageio-ffmpeg pyquaternion scikit-learn pyyaml seaborn PyMCubes trimesh plyfile redner-gpu

- Install Blender (2.9.2 tested).

The main requirements are camera poses and, optionally, segmentation masks. The input images can be captured under one or multiple environment maps. The dataset must then be arranged in the following file structure:

- image/envmap0/
  - 000.exr
  - 001.exr
  - ...
- image/envmap1/
  - 000.exr
  - 001.exr
  - ...
- mask/
  - 000.png
  - 001.png
  - ...
- envmap/
  - test.exr
- mitsuba/
  - envmap.png (32×16)
  - texture.png (512×512)
- mesh/
  - mesh.obj (after the geometry reconstruction)
- cameras.npz
- olat_cameras.npz
- test_cameras.npz
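Before running the later steps, it can help to confirm that a scene folder follows this layout. The snippet below is a minimal sketch, not part of the repository: the function name `missing_entries` and the choice of which entries count as required are assumptions, and only the first image directory (`image/envmap0`) is checked.

```python
from pathlib import Path

# Entries taken from the layout listed above. Only the first image directory
# is checked; extend the list for captures under several environment maps.
REQUIRED = [
    "image/envmap0",
    "envmap/test.exr",
    "mitsuba/envmap.png",
    "mitsuba/texture.png",
    "cameras.npz",
    "olat_cameras.npz",
    "test_cameras.npz",
]
# mask/ is optional, and mesh/mesh.obj only exists after geometry reconstruction.
OPTIONAL = ["mask", "mesh/mesh.obj"]


def missing_entries(scene_dir, entries=REQUIRED):
    """Return the entries from `entries` that do not exist under scene_dir."""
    root = Path(scene_dir)
    return [e for e in entries if not (root / e).exists()]
```

Calling `missing_entries(path)` returns an empty list when every required entry is present; pass `OPTIONAL` as the second argument to see which optional entries are absent.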

cameras.npz should match the poses of the input (ground truth) images. olat_cameras.npz includes the sampled (usually 200-300) camera parameters for OLAT synthesis.

Please refer to blender/camera_poses for more details on how to export the camera parameters from your Blender camera.
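The array names stored in the camera files are not listed here, so a quick way to see what a given `.npz` file contains is to print the name, shape, and dtype of each array. This is a generic NumPy sketch; the helper name `summarize_npz` is an assumption, and no particular key names are implied.

```python
import numpy as np


def summarize_npz(path):
    """Map each array name in an .npz archive to its (shape, dtype string)."""
    with np.load(path) as data:
        return {
            name: (data[name].shape, str(data[name].dtype))
            for name in data.files
        }
```

For example, `summarize_npz("cameras.npz")` can be compared against `summarize_npz("olat_cameras.npz")` to check that both files use the same array names and per-camera shapes.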

- Geometry Reconstruction

We use this implementation of NeuS.

- Clone the repository. Prepare your data following their Readme.

- The camera parameter format of NeuS is slightly different from ours. You can replace their /dataio/DTU.py with our /NeuS/DTU.py to run their script with our camera parameter format.

- Use neus.yaml as the config when running their script, and remember to normalize the mesh so that it fits inside the unit sphere.

- Run NeuS with your data, and get the reconstructed mesh.

- Note that you can raise the marching cubes resolution to extract a mesh with finer geometric detail from the SDF.
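The unit-sphere normalization mentioned above can be sketched as follows. This is one common convention (center on the bounding-box center, then scale by the largest vertex distance) and is an assumption, not necessarily the exact normalization used by NeuS or by this repository. The function operates on a plain vertex array; loading `mesh.obj` (for example with trimesh) is left out.

```python
import numpy as np


def normalize_to_unit_sphere(vertices, radius=1.0):
    """Center vertices and scale them so that all lie within `radius`.

    Returns (normalized_vertices, center, scale) so that the same transform
    can be applied elsewhere if the rest of the scene must stay consistent.
    """
    v = np.asarray(vertices, dtype=np.float64)
    center = 0.5 * (v.min(axis=0) + v.max(axis=0))  # bounding-box center
    max_dist = np.linalg.norm(v - center, axis=1).max()
    if max_dist == 0:
        raise ValueError("degenerate mesh: all vertices coincide")
    scale = radius / max_dist
    return (v - center) * scale, center, scale
```

Choosing a `radius` slightly below 1 leaves a small margin between the surface and the sphere boundary.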

- Material Estimation

Before running Mitsuba2 for the initial material estimation, check the UV mapping in /blender. Put the post-processed mesh, an example texture image, and an example environment map under the data folder.

cd src

python mitsuba_material.py --data_dir $data_root --scene $scene --output_dir $output_root

- Render Buffers for OLAT views

Store position and view direction buffers for each view.
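To illustrate what such buffers hold, the sketch below derives a per-pixel view direction buffer from a position buffer and a camera center. It is not the code in render_buffer.py: the array layout `(H, W, 3)`, the direction convention (from the surface point toward the camera), and the function name are all assumptions.

```python
import numpy as np


def view_direction_buffer(position, cam_origin, mask=None):
    """Per-pixel unit vectors pointing from the surface toward the camera.

    position:   (H, W, 3) world-space surface points (assumed layout)
    cam_origin: (3,) camera center in world space
    mask:       optional (H, W) bool array, True where a surface was hit
    """
    position = np.asarray(position, dtype=np.float64)
    d = np.asarray(cam_origin, dtype=np.float64) - position
    norm = np.linalg.norm(d, axis=-1, keepdims=True)
    d = d / np.maximum(norm, 1e-12)  # guard against division by zero
    if mask is not None:
        # Zero out background pixels that have no surface point.
        d = np.where(np.asarray(mask, dtype=bool)[..., None], d, 0.0)
    return d
```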

python render_buffer.py --data_dir $data_root --scene $scene --output_dir $output_root

- OLAT synthesis

Please refer to OLAT synthesis in blender
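For background, OLAT (one-light-at-a-time) images are useful because light transport is linear: an image under an arbitrary environment map is a weighted sum of the images lit by each environment map pixel alone. The sketch below shows only that principle. It is not the Blender pipeline or the network in this repository, and it assumes each OLAT image was rendered with a white light of unit intensity.

```python
import numpy as np


def relight_from_olat(olat_images, envmap):
    """Relight by linearly combining OLAT images.

    olat_images: (N, H, W, 3), image i lit only by envmap pixel i
    envmap:      (h, w, 3) with h * w == N, RGB radiance per envmap pixel
    """
    olat = np.asarray(olat_images, dtype=np.float64)
    weights = np.asarray(envmap, dtype=np.float64).reshape(-1, 3)  # (N, 3)
    if weights.shape[0] != olat.shape[0]:
        raise ValueError("need one OLAT image per environment map pixel")
    # Sum over lights; each light scales its image per color channel.
    return np.einsum("nhwc,nc->hwc", olat, weights)
```

With a 32×16 environment map this corresponds to 512 light directions per view.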

- Train Network (overfitting to OLAT synthesis)

python train_olat.py --data_dir $data_root --scene $scene --output_dir $output_root

- Train Network (jointly train with real images)

python train_joint.py --data_dir $data_root --scene $scene --output_dir $output_root

- Test and relight

We use an environment map resolution of 32×16.
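If a target environment map has a higher resolution, it needs to be reduced to this size first. The following is a minimal sketch using a plain box filter. It assumes that 32×16 means width × height of an equirectangular map and that the input size is an integer multiple of the output size. It also ignores the variation in solid angle across rows within each block, so it is an approximation. Reading the `.exr` file into an array is not shown.

```python
import numpy as np


def downsample_envmap(envmap, out_h=16, out_w=32):
    """Box-filter an (H, W, C) environment map down to (out_h, out_w, C)."""
    env = np.asarray(envmap, dtype=np.float64)
    h, w, c = env.shape
    if h % out_h or w % out_w:
        raise ValueError("input size must be an integer multiple of the output size")
    fh, fw = h // out_h, w // out_w
    # Split each axis into (output index, position within block), then average
    # over the two within-block axes.
    return env.reshape(out_h, fh, out_w, fw, c).mean(axis=(1, 3))
```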

python relight.py --data_dir $data_root --scene $scene --output_dir $output_root

@inproceedings{lyu2022nrtf,
  title = {Neural Radiance Transfer Fields for Relightable Novel-view Synthesis with Global Illumination},
  author = {Lyu, Linjie and Tewari, Ayush and Leimkuehler, Thomas and Habermann, Marc and Theobalt, Christian},
  year = {2022},
  booktitle = {ECCV},
}