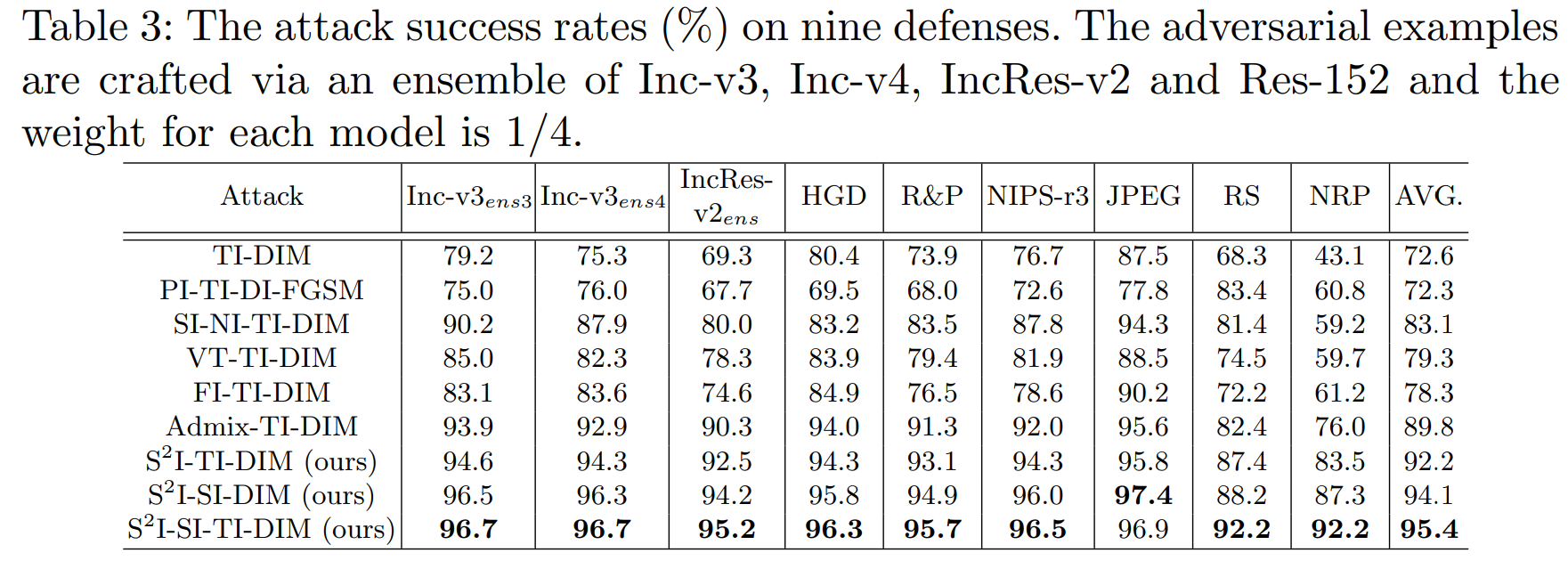

This repository is the official PyTorch implementation of our paper *Frequency Domain Model Augmentation for Adversarial Attack*. In this paper, we propose a novel spectrum simulation attack to craft more transferable adversarial examples against both normally trained and defense models. Extensive experiments on the ImageNet dataset demonstrate the effectiveness of our method, e.g., attacking nine state-of-the-art defense models with an average success rate of 95.4%.

- All existing model augmentation methods investigate the relationships among different models in the spatial domain, which may overlook the essential differences between them.

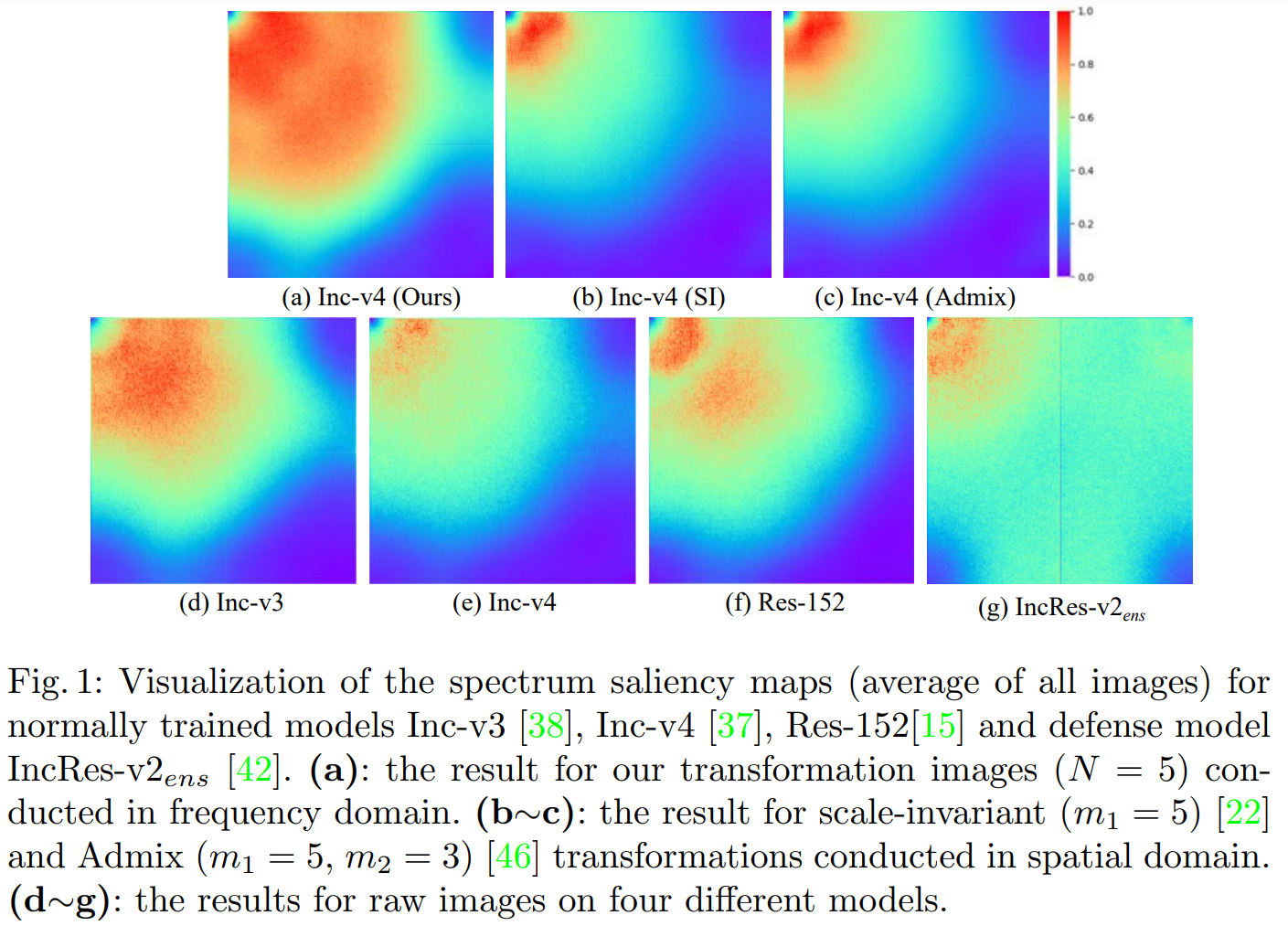

- To better uncover the differences among models, we introduce the spectrum saliency map (see Sec. 3.2) from a frequency-domain perspective, since the representation of images in this domain follows a fixed pattern, e.g., the low-frequency components of an image correspond to its contour.

- As illustrated in Figure 1 (d)-(g), the spectrum saliency maps of different models vary significantly from one another, which reveals that each model attends differently to the same frequency components.
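To make the spectrum saliency map concrete, here is a minimal NumPy sketch, assuming it is defined as the gradient of the loss with respect to the DCT coefficients of the input, with the image reconstructed by the inverse DCT before the forward pass. It handles a single-channel image and writes the orthonormal 2-D DCT-II out as matrices; `dct_matrix`, `dct_2d`, `idct_2d`, and `spectrum_saliency` are hypothetical helper names, not the repository's API. Because the orthonormal DCT is an orthogonal linear map, the chain rule reduces the saliency map to the DCT of the ordinary input gradient.

```python
import numpy as np


def dct_matrix(n: int) -> np.ndarray:
    """Orthonormal DCT-II matrix D: D @ v is the 1-D DCT of v, and D.T inverts it."""
    k = np.arange(n)[:, None]  # frequency index (rows)
    i = np.arange(n)[None, :]  # spatial index (columns)
    d = np.cos(np.pi * (2 * i + 1) * k / (2 * n)) * np.sqrt(2.0 / n)
    d[0, :] /= np.sqrt(2.0)  # rescale the DC row so that D is orthonormal
    return d


def dct_2d(x: np.ndarray) -> np.ndarray:
    """Separable 2-D DCT of a single-channel image (rows, then columns)."""
    d_h, d_w = dct_matrix(x.shape[0]), dct_matrix(x.shape[1])
    return d_h @ x @ d_w.T


def idct_2d(s: np.ndarray) -> np.ndarray:
    """Inverse of dct_2d, using the transpose of each orthonormal matrix."""
    d_h, d_w = dct_matrix(s.shape[0]), dct_matrix(s.shape[1])
    return d_h.T @ s @ d_w


def spectrum_saliency(grad_wrt_image: np.ndarray) -> np.ndarray:
    """dJ/dDCT(x) given dJ/dx, for x = IDCT(DCT(x)).

    With x = D_h^T S D_w, the chain rule gives dJ/dS = D_h (dJ/dx) D_w^T,
    i.e. the 2-D DCT of the input gradient.
    """
    return dct_2d(grad_wrt_image)
```

In practice the input gradient would come from a classifier's backward pass, applied per color channel; comparing `np.abs(spectrum_saliency(g))` across models gives maps in the spirit of Figure 1 (d)-(g).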

==> Motivated by these observations, we propose tuning the spectrum saliency map to simulate more diverse substitute models, and thus generate more transferable adversarial examples.
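One way to simulate such substitute models is a random spectrum transformation. The sketch below assumes the form T(x) = IDCT(DCT(x + ξ) ⊙ M), with Gaussian noise ξ ~ N(0, σ²I) added in the spatial domain and a random mask M ~ U(1 - ρ, 1 + ρ) that rescales every frequency coefficient independently. `spectrum_transform`, `sigma`, and `rho` are illustrative names; the repository's own implementation works on batched PyTorch tensors rather than a single NumPy image.

```python
import numpy as np


def dct_matrix(n: int) -> np.ndarray:
    """Orthonormal DCT-II matrix D: D @ v is the 1-D DCT of v, and D.T inverts it."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    d = np.cos(np.pi * (2 * i + 1) * k / (2 * n)) * np.sqrt(2.0 / n)
    d[0, :] /= np.sqrt(2.0)
    return d


def spectrum_transform(x: np.ndarray, sigma: float, rho: float,
                       rng: np.random.Generator) -> np.ndarray:
    """One random spectrum transformation of a single-channel image (hypothetical helper).

    sigma: std of the spatial Gaussian noise xi.
    rho:   half-width of the uniform mask M, drawn from [1 - rho, 1 + rho).
    """
    d_h, d_w = dct_matrix(x.shape[0]), dct_matrix(x.shape[1])
    xi = sigma * rng.standard_normal(x.shape)               # xi ~ N(0, sigma^2 I)
    spectrum = d_h @ (x + xi) @ d_w.T                        # DCT(x + xi)
    mask = 1.0 + rho * rng.uniform(-1.0, 1.0, size=x.shape)  # M ~ U(1 - rho, 1 + rho)
    return d_h.T @ (spectrum * mask) @ d_w                   # IDCT(DCT(x + xi) * M)
```

Each call draws a fresh ξ and M, so repeated calls on the same image behave like querying slightly different substitute models; with `sigma=0` and `rho=0` the transformation reduces to the identity.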

Requirements

- Python 3.8

- torch 1.8

- pretrainedmodels 0.7

- numpy 1.19

- pandas 1.2


Prepare models

Download the pretrained PyTorch models here, which are converted from widely used TensorFlow models. Then put these models into `./models/`.

Generate adversarial examples

Use `attack.py` to run our S²I-FGSM attack as follows:

`CUDA_VISIBLE_DEVICES=gpuid python attack.py --output_dir outputs`

where `gpuid` can be set to any free GPU ID on your machine. The adversarial examples will be generated in the directory `./outputs`.
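The loop behind an S²I-FGSM-style attack can be sketched as I-FGSM whose gradient is averaged over several spectrum-transformed copies of the current adversarial image. This is a simplified NumPy sketch under stated assumptions: `grad_fn` (the substitute model's loss gradient) and `transform` (one random spectrum transformation) are hypothetical callbacks, the gradient is taken at the transformed image, and the step size is `eps / num_iter`. The actual `attack.py` may use different defaults or combine the attack with other techniques.

```python
from typing import Callable

import numpy as np


def s2i_fgsm_sketch(x: np.ndarray,
                    grad_fn: Callable[[np.ndarray], np.ndarray],
                    eps: float,
                    num_iter: int,
                    n_spectrum: int,
                    transform: Callable[[np.ndarray, np.random.Generator], np.ndarray],
                    rng: np.random.Generator) -> np.ndarray:
    """Untargeted L_inf attack with gradients averaged over spectrum transformations.

    grad_fn(img)        -> dLoss/dimg (hypothetical; a model's backward pass in practice)
    transform(img, rng) -> one randomly spectrum-transformed copy of img (hypothetical)
    Pixel values are assumed to lie in [0, 1].
    """
    alpha = eps / num_iter  # num_iter sign steps of size alpha can reach the eps boundary
    x_adv = x.copy()
    for _ in range(num_iter):
        g = np.zeros_like(x)
        for _ in range(n_spectrum):
            g += grad_fn(transform(x_adv, rng))  # gradient at a simulated "model view"
        g /= n_spectrum
        x_adv = x_adv + alpha * np.sign(g)        # ascend the loss
        x_adv = np.clip(x_adv, x - eps, x + eps)  # project back into the L_inf ball
        x_adv = np.clip(x_adv, 0.0, 1.0)          # keep a valid image
    return x_adv
```

Averaging over `n_spectrum` transformed copies is what distinguishes this loop from plain I-FGSM: each copy stands in for a different substitute model, so the sign step follows directions that several simulated models agree on.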

Evaluations on normally trained models

Run `verify.py` to evaluate the attack success rate:

`CUDA_VISIBLE_DEVICES=gpuid python verify.py`
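For an untargeted attack, the attack success rate on a model is the fraction of adversarial examples that it misclassifies. A minimal sketch of that computation, assuming predicted and ground-truth labels are already available as integer sequences (`attack_success_rate` is a hypothetical name; `verify.py` may organize this differently):

```python
import numpy as np


def attack_success_rate(pred_labels, true_labels) -> float:
    """Fraction of adversarial examples whose prediction differs from the true label."""
    pred = np.asarray(pred_labels)
    true = np.asarray(true_labels)
    if pred.shape != true.shape:
        raise ValueError("pred_labels and true_labels must have the same shape")
    return float(np.mean(pred != true))
```

For example, `attack_success_rate([3, 7, 7, 1], [3, 5, 2, 1])` returns `0.5`, since two of the four examples are misclassified.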

Evaluations on defenses

To evaluate the attack success rates on defense models, we test nine defense models: three adversarially trained models (Inc-v3ens3, Inc-v3ens4, IncRes-v2ens) and six more advanced defenses (HGD, R&P, NIPS-r3, RS, JPEG, NRP).

- Inc-v3ens3, Inc-v3ens4, IncRes-v2ens: you can directly run `verify.py` to test these models.

- HGD, R&P, NIPS-r3: we directly run the code from the corresponding official repos.

- RS: noise=0.25, N=100, skip=100. Download it from the corresponding official repo.

- JPEG: see here.

- NRP: purifier=NRP, dynamic=True, base_model=Inc-v3ens3. Download it from the corresponding official repo.

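The RS setting above (noise=0.25, N=100) refers to randomized smoothing, which classifies an input by a majority vote of a base classifier over N Gaussian-noised copies of it. A simplified NumPy sketch, assuming a hypothetical `classify` callback that maps a batch of images to integer labels; unlike the official RS prediction procedure, it omits the hypothesis test and abstention, and it does not model `skip`:

```python
from typing import Callable, Optional

import numpy as np


def smoothed_predict(x: np.ndarray,
                     classify: Callable[[np.ndarray], np.ndarray],
                     sigma: float = 0.25,
                     n: int = 100,
                     rng: Optional[np.random.Generator] = None) -> int:
    """Majority-vote label of `classify` over n copies of x with N(0, sigma^2) noise."""
    rng = np.random.default_rng() if rng is None else rng
    noisy = x[None, ...] + sigma * rng.standard_normal((n,) + x.shape)  # shape (n, *x.shape)
    labels = np.asarray(classify(noisy))                                # shape (n,), ints >= 0
    return int(np.bincount(labels).argmax())                            # most frequent label
```

An adversarial example only "succeeds" against this defense if it survives the added noise often enough to win the vote, which is why smoothing is a harder target than a single undefended model.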

If you find this work useful for your research, please consider citing our paper:

@inproceedings{Long2022ssa,
  author    = {Yuyang Long and Qilong Zhang and Boheng Zeng and Lianli Gao and Xianglong Liu and Jian Zhang and Jingkuan Song},
  title     = {Frequency Domain Model Augmentation for Adversarial Attack},
  booktitle = {European Conference on Computer Vision},
  year      = {2022}
}