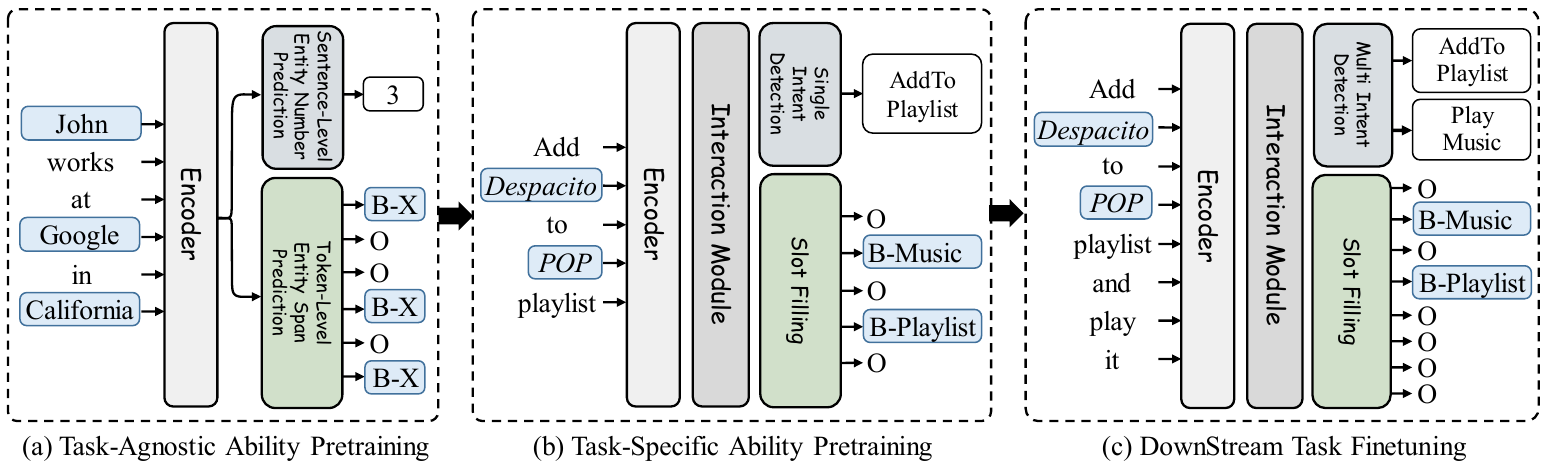

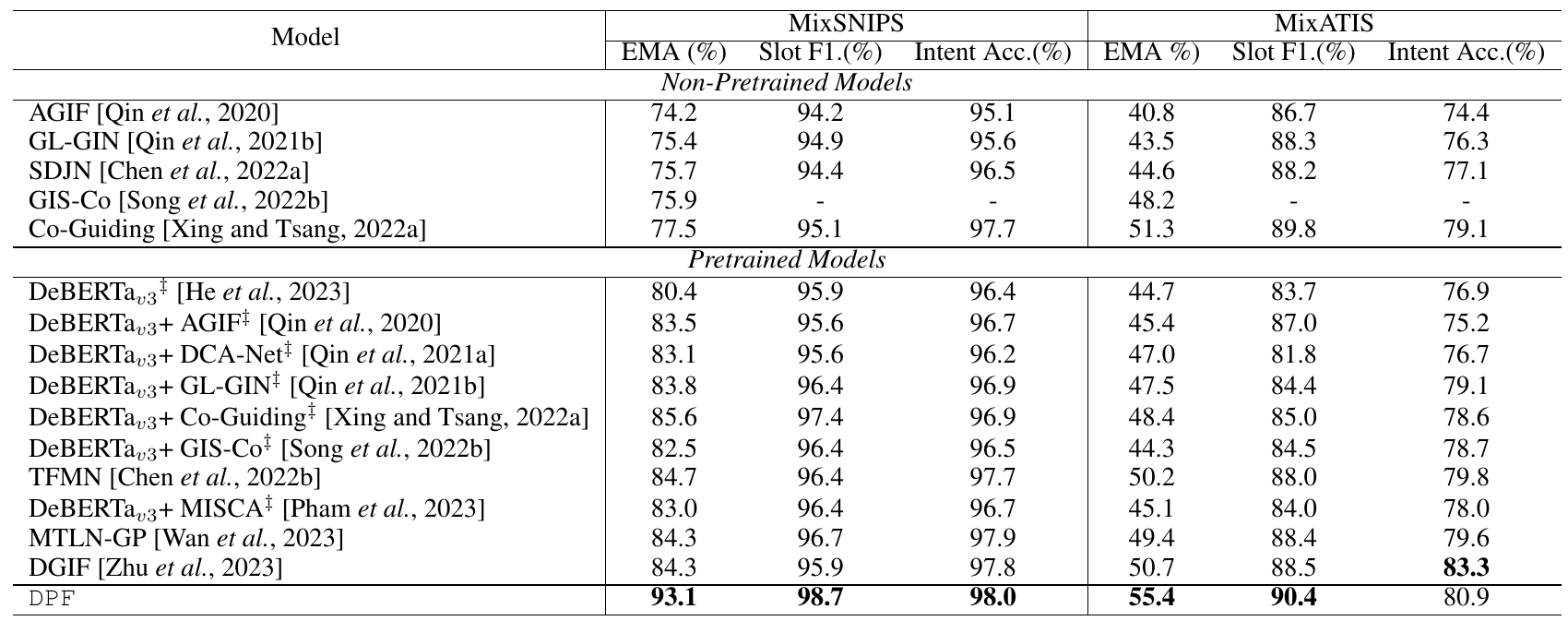

Decoupling breaks data barriers: A Decoupled Pre-training Framework for Multi-Intent Spoken Language Understanding

🔥 Official implementation for "Decoupling breaks data barriers: A Decoupled Pre-training Framework for Multi-Intent Spoken Language Understanding".

If you find this project useful for your research, please consider citing the following paper:

@inproceedings{qin-etal-2024-dpf,
  title = "Decoupling breaks data barriers: A Decoupled Pre-training Framework for Multi-Intent Spoken Language Understanding",
  author = "Qin, Libo and
    Chen, Qiguang and
    Zhou, Jingxuan and
    Li, Qinzheng and
    Lu, Chunlin and
    Che, Wanxiang",
  booktitle = "Proc. of IJCAI 2024",
}

DPF requires Python>=3.8 and torch>=1.12.0.
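If you want to confirm these minimums before running anything, the following is a minimal sketch; it is not part of the DPF repository, and the helper names (`parse_version`, `meets_minimum`, `check_environment`) are hypothetical. The version parser is deliberately simple: it compares only the first three numeric components and ignores suffixes such as `+cu113`, which is enough to avoid the classic string-comparison bug where `"3.10" < "3.8"`.

```python
import sys


def parse_version(version: str) -> tuple:
    """Turn e.g. '1.12.0+cu113' into (1, 12, 0), dropping local/pre-release suffixes."""
    core = version.split("+")[0]
    parts = []
    for piece in core.split(".")[:3]:
        digits = ""
        for ch in piece:  # keep the leading digits only ('0a0' -> '0')
            if not ch.isdigit():
                break
            digits += ch
        parts.append(int(digits) if digits else 0)
    while len(parts) < 3:  # pad '3.8' to (3, 8, 0)
        parts.append(0)
    return tuple(parts)


def meets_minimum(installed: str, required: str) -> bool:
    """Numeric (not lexical) comparison of two version strings."""
    return parse_version(installed) >= parse_version(required)


def check_environment() -> list:
    """Return a list of human-readable problems; an empty list means OK."""
    problems = []
    py = ".".join(str(p) for p in sys.version_info[:3])
    if not meets_minimum(py, "3.8"):
        problems.append(f"Python {py} < 3.8")
    try:
        import torch
    except ImportError:
        problems.append("torch is not installed")
    else:
        if not meets_minimum(str(torch.__version__), "1.12.0"):
            problems.append(f"torch {torch.__version__} < 1.12.0")
    return problems


if __name__ == "__main__":
    issues = check_environment()
    print("OK" if not issues else "\n".join(issues))
```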

`git clone https://github.com/LightChen233/DPF.git && cd DPF/`

`pip install -r requirements.txt`

Due to the restrictions of the open-source agreement, we cannot release the pre-training data. However, you can download our pre-trained models from the different stages at TAAP and TSAP.

Please put the TAAP and TSAP models in the `save/dpf/taap/ckpt-155000` and `save/dpf/tsap/ckpt-45000` directories, respectively.
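A quick way to confirm that the downloaded checkpoints landed in the expected places is sketched below. It is a hypothetical helper, not shipped with DPF; since the exact files inside a checkpoint directory are not specified here, it only checks that each directory exists and is non-empty.

```python
from pathlib import Path

# Checkpoint locations as given in this README.
EXPECTED_CHECKPOINTS = {
    "taap": Path("save/dpf/taap/ckpt-155000"),
    "tsap": Path("save/dpf/tsap/ckpt-45000"),
}


def missing_checkpoints(root: Path = Path(".")) -> list:
    """Return the stage names whose checkpoint directory is absent or empty."""
    missing = []
    for stage, rel_path in EXPECTED_CHECKPOINTS.items():
        ckpt_dir = root / rel_path
        # iterdir() is only reached when the directory exists (short-circuit).
        if not ckpt_dir.is_dir() or not any(ckpt_dir.iterdir()):
            missing.append(stage)
    return missing


if __name__ == "__main__":
    print(missing_checkpoints() or "All checkpoints in place")
```

Run it from the repository root so the relative paths resolve.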

Download: Due to the restrictions of the open-source agreement, we cannot distribute the integrated results. Please download each dataset from the URL provided in the original paper.

Process: Please convert the data into the OpenSLU format.

Position:

- Please put TAAP data in the following paths: `dataset/taap/train.jsonl` and `dataset/taap/dev.jsonl`.
- Please put TSAP data in the following paths: `dataset/tsap/train.jsonl` and `dataset/tsap/dev.jsonl`.
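Before pre-training, it can save time to sanity-check the converted files. The sketch below is a hypothetical validator, not part of DPF or OpenSLU. Its schema is an **assumption**: each JSONL line is a JSON object whose `text` and `slot` fields are equal-length token and slot-label lists and whose `intent` field is a non-empty string. Please verify these field names against OpenSLU's own data documentation before relying on it.

```python
import json
from pathlib import Path

# Data layout as given in this README.
DATA_FILES = [
    Path("dataset/taap/train.jsonl"),
    Path("dataset/taap/dev.jsonl"),
    Path("dataset/tsap/train.jsonl"),
    Path("dataset/tsap/dev.jsonl"),
]


def validate_record(record) -> list:
    """Check one example against the ASSUMED schema (text/slot/intent)."""
    if not isinstance(record, dict):
        return ["record must be a JSON object"]
    errors = []
    text, slot, intent = record.get("text"), record.get("slot"), record.get("intent")
    if not isinstance(text, list) or not isinstance(slot, list):
        errors.append("'text' and 'slot' must be lists")
    elif len(text) != len(slot):
        errors.append(f"{len(text)} tokens but {len(slot)} slot labels")
    if not isinstance(intent, str) or not intent:
        errors.append("'intent' must be a non-empty string")
    return errors


def validate_file(path: Path) -> list:
    """Return (line number, message) pairs for every problem in a JSONL file."""
    problems = []
    with path.open(encoding="utf-8") as f:
        for lineno, line in enumerate(f, start=1):
            if not line.strip():  # tolerate blank lines
                continue
            try:
                record = json.loads(line)
            except json.JSONDecodeError as exc:
                problems.append((lineno, f"invalid JSON: {exc.msg}"))
                continue
            for msg in validate_record(record):
                problems.append((lineno, msg))
    return problems


if __name__ == "__main__":
    for path in DATA_FILES:
        if not path.exists():
            print(f"missing: {path}")
        else:
            print(path, validate_file(path) or "OK")
```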

First, you need to pre-train on the TAAP data:

`python run.py -cp config/reproduction/dpf/run_taap.yaml`

The model will be saved in the `save/taap` path.
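The next step refers to a specific checkpoint (`ckpt-155000`). If your run stops at a different step, the hypothetical helper below picks the newest one. It assumes, based only on the paths in this README, that checkpoints are saved as `ckpt-<step>` subdirectories and that the highest step is the one you want; point it at whatever save directory your `run_taap.yaml` actually uses. Steps are compared numerically, because lexically `ckpt-5000` would sort after `ckpt-155000`.

```python
import re
from pathlib import Path
from typing import Optional

CKPT_PATTERN = re.compile(r"^ckpt-(\d+)$")


def latest_checkpoint(save_dir: Path) -> Optional[Path]:
    """Return the ckpt-<step> subdirectory with the largest step, or None."""
    if not save_dir.is_dir():
        return None
    best_step, best_path = -1, None
    for child in save_dir.iterdir():
        match = CKPT_PATTERN.match(child.name)
        if child.is_dir() and match and int(match.group(1)) > best_step:
            best_step, best_path = int(match.group(1)), child
    return best_path


if __name__ == "__main__":
    print(latest_checkpoint(Path("save/dpf/taap")))
```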

Second, you need to clean the classification head of the TAAP model:

`python tools/clean_classifier.py --load_dir save/dpf/taap/ckpt-155000 --save_dir save/dpf/taap/ckpt-155000`

Then, pre-train on the TSAP data:

`python run.py -cp config/reproduction/dpf/run_tsap.yaml`

Finally, train on MixATIS and MixSNIPS:

`python run.py -cp config/reproduction/dpf/run_mix-atis.yaml`

`python run.py -cp config/reproduction/dpf/run_mix-snips.yaml`

You can also find our checkpoints at `LightChen2333/deberta-v3-dpf-mix-atis` and `LightChen2333/deberta-v3-dpf-mix-snips`.
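To illustrate what "cleaning the classification head" generally means, here is a minimal sketch that drops head parameters from a state dict so the next stage can initialize its own head. This is **not** the code in `tools/clean_classifier.py`; the function name and the key prefixes (`classifier.`, `intent_classifier.`, `slot_classifier.`) are hypothetical, so inspect your checkpoint's keys to find the real ones.

```python
from typing import Dict, Iterable, TypeVar

T = TypeVar("T")

# Hypothetical prefixes; replace them with the head names found in your checkpoint.
DEFAULT_HEAD_PREFIXES = ("classifier.", "intent_classifier.", "slot_classifier.")


def drop_classifier_weights(
    state_dict: Dict[str, T],
    prefixes: Iterable[str] = DEFAULT_HEAD_PREFIXES,
) -> Dict[str, T]:
    """Return a copy of state_dict without parameters under the given prefixes.

    The input is not modified; encoder weights are kept as-is.
    """
    prefixes = tuple(prefixes)  # str.startswith accepts a tuple of prefixes
    return {k: v for k, v in state_dict.items() if not k.startswith(prefixes)}


if __name__ == "__main__":
    toy = {"encoder.layer.0.weight": 1, "classifier.weight": 2, "classifier.bias": 3}
    print(drop_classifier_weights(toy))  # {'encoder.layer.0.weight': 1}
```

With PyTorch, the same function would sit between `torch.load(path, map_location="cpu")` and `torch.save(...)` on the checkpoint's weight file; which file that is depends on how the checkpoint was saved.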

* NOTE: Due to stochastic factors (e.g., GPU and environment), you may need to slightly tune the hyper-parameters with a grid search to obtain better results.
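A grid search simply tries every combination of candidate values and keeps the best-scoring one. The sketch below shows the idea under stated assumptions: `evaluate` is a function you supply (for example, one that writes a modified copy of a YAML config, runs `python run.py -cp <that config>`, and returns a dev-set score), and the hyper-parameter names in the example grid are hypothetical, since the real keys live in the config files.

```python
import itertools
from typing import Callable, Dict, List, Optional, Tuple


def grid_search(
    grid: Dict[str, List],
    evaluate: Callable[[Dict], float],
) -> Tuple[Optional[Dict], float]:
    """Evaluate every combination in `grid`; return (best config, best score).

    Higher scores are treated as better; ties keep the first config seen.
    """
    keys = list(grid)
    best_config, best_score = None, float("-inf")
    for values in itertools.product(*(grid[k] for k in keys)):
        config = dict(zip(keys, values))
        score = evaluate(config)
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score


def toy_evaluate(config: Dict) -> float:
    """Stand-in for a real training run so the sketch runs without a GPU."""
    return -abs(config["learning_rate"] - 2e-5)


if __name__ == "__main__":
    # Hypothetical search space; seeds are included because results vary run to run.
    grid = {"learning_rate": [1e-5, 2e-5, 3e-5], "seed": [42, 43]}
    print(grid_search(grid, toy_evaluate))
```

The number of runs is the product of the list lengths (six here), so keep the grid small when each evaluation is a full training run.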

Please create GitHub issues in this repository or email Qiguang Chen or Libo Qin if you have any questions or suggestions.

Our code is adapted from OpenSLU.