This is the official PyTorch implementation of the paper:

AdaptDiffuser: Diffusion Models as Adaptive Self-evolving Planners

Zhixuan Liang, Yao Mu, Mingyu Ding, Fei Ni, Masayoshi Tomizuka, Ping Luo

ICML 2023 (Oral Presentation)

Code is tested on CUDA 11.1 with torch 1.9.1
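To check whether a local install matches the tested configuration, a small sketch along these lines may help. The helper name `matches_tested_config` is hypothetical and not part of this repo. It assumes the reported torch version may carry a local build tag such as `+cu111`, which is dropped before comparing.

```python
TESTED_TORCH = "1.9.1"
TESTED_CUDA = "11.1"


def matches_tested_config(torch_version, cuda_version):
    """Return True if the versions equal the tested torch/CUDA pair.

    torch_version may include a local build tag (e.g. "1.9.1+cu111"),
    which is dropped before comparing. cuda_version is None on CPU-only builds.
    """
    base = torch_version.split("+", 1)[0]
    return base == TESTED_TORCH and cuda_version == TESTED_CUDA


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        print("torch is not installed")
    else:
        ok = matches_tested_config(torch.__version__, torch.version.cuda)
        print(f"torch {torch.__version__}, CUDA {torch.version.cuda}, tested config: {ok}")
```

Other versions may well work. A mismatch only means the setup differs from the one the code was tested on.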

You should install mujoco200 first and get your licence here.

conda env create -f environment.yml

conda activate diffuser_kuka

pip install -e .

Specify the project root path.

export ADAPTDIFF_ROOT=$(pwd)

Download the checkpoint of the guided model and the dataset for model pretraining from this URL, then extract the archive and link its contents into the project root:

tar -xzvf metainfo.tar.gz

ln -s metainfo/kuka_dataset ${ADAPTDIFF_ROOT}/kuka_dataset

ln -s metainfo/logs ${ADAPTDIFF_ROOT}/logs

This part is the same as in the diffuser repo. To train the unconditional diffusion model on the block stacking task, use the following command:

python scripts/kuka.py --suffix <suffix>

Model checkpoints are saved in "logs/multiple_cube_kuka_{suffix}_conv_new_real2_128".
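As a rough illustration of the naming pattern above, the checkpoint directory for a given `--suffix` can be computed as follows. The function `checkpoint_dir` is a hypothetical helper, not part of the repo, and the fallback to the current directory when `ADAPTDIFF_ROOT` is unset is an assumption of this sketch.

```python
import os
from pathlib import Path


def checkpoint_dir(suffix, root=None):
    """Build the training log directory for a given --suffix.

    root defaults to $ADAPTDIFF_ROOT, falling back to the current directory
    (the fallback is an assumption of this sketch).
    """
    root = Path(root or os.environ.get("ADAPTDIFF_ROOT", "."))
    return root / "logs" / f"multiple_cube_kuka_{suffix}_conv_new_real2_128"
```

For instance, `checkpoint_dir("demo").is_dir()` can be used to confirm that a training run with `--suffix demo` has written its output.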

You may evaluate the diffusion model on unconditional stacking with

python scripts/unconditional_kuka_planning_eval.py

or conditional stacking with

python scripts/conditional_kuka_planning_eval.py

Generated samples and videos will be logged to ./results periodically.

With the trained kuka model, you can generate KUKA Stacking data (bootstrapping) or KUKA Pick and Place data (adaptation).

python scripts/conditional_kuka_planning_eval.py --env_name 'multiple_cube_kuka_temporal_convnew_real2_128' --diffusion_epoch 650 --save_render --do_generate --suffix ${SAVE_DIR_SUFFIX} --eval_times 1000

# You can specify the directory name to save data with --suffix

You can set a different dataset by specifying --data_path.

python scripts/pick_kuka_planning_eval.py --env_name 'multiple_cube_kuka_temporal_convnew_real2_128' --diffusion_epoch 650 --save_render --do_generate --suffix ${SAVE_DIR_SUFFIX} --eval_times 1000

# You can specify the directory name to save data with --suffix

The generated data is saved to 'logs/{env_name}/plans_weighted5.0_128_1000/{suffix}/gen_dataset'.

Note: There are two directories under the {suffix} directory: "cond_samples", which contains all the generated data, and "gen_dataset", which contains the selected high-quality data. We use "gen_dataset" to fine-tune our model.
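The directory layout described above can be sketched as follows. Both helper names are hypothetical. The `plans_weighted5.0_128_1000` component is copied from the path given in this README and may differ if the generation settings change. How directory entries map to individual trajectories is not specified here, so the count is only a coarse indicator.

```python
from pathlib import Path


def generated_data_dirs(env_name, suffix, logs_root="logs"):
    """Return (cond_samples, gen_dataset) paths for one generation run.

    The "plans_weighted5.0_128_1000" component is copied from the path given
    in this README; it may differ if the generation settings change.
    """
    run_dir = Path(logs_root) / env_name / "plans_weighted5.0_128_1000" / suffix
    return run_dir / "cond_samples", run_dir / "gen_dataset"


def count_entries(directory):
    """Count the entries directly inside a directory (0 if it does not exist)."""
    directory = Path(directory)
    return sum(1 for _ in directory.iterdir()) if directory.is_dir() else 0
```

Comparing `count_entries` on the two returned paths gives a quick impression of how much generated data survived the selection step.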

For example, if your generated data is stored in ./pick2put_dataset/gen_0:

python scripts/kuka_fine.py --data_path "./pick2put_dataset/gen_0" --suffix adapt1 --train_step 401000 --pretrain_path <pretrain_diffusion_ckpt> --visualization

# Set --visualization only when a graphical (X) display is available; omitting it does not affect the result.

Model checkpoints are saved in 'logs/multiple_cube_kuka_pick_conv_new_real2_128/{suffix}'.
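A minimal sketch of assembling the fine-tuning command from a script, adding `--visualization` only when a display appears to be available. The helper `build_finetune_cmd` is hypothetical, and treating a non-empty `DISPLAY` environment variable as evidence of a usable X display is an assumption of this sketch, not something the repo checks.

```python
import os
import shlex


def build_finetune_cmd(data_path, suffix, pretrain_path, train_step=401000, environ=None):
    """Assemble the kuka_fine.py command as an argument list.

    --visualization is appended only when $DISPLAY is set, which this sketch
    treats as a proxy for an available X display (an assumption).
    """
    environ = os.environ if environ is None else environ
    cmd = [
        "python", "scripts/kuka_fine.py",
        "--data_path", data_path,
        "--suffix", suffix,
        "--train_step", str(train_step),
        "--pretrain_path", pretrain_path,
    ]
    if environ.get("DISPLAY"):
        cmd.append("--visualization")
    return cmd


if __name__ == "__main__":
    # "path/to/pretrained_ckpt" is a placeholder, not a real checkpoint path.
    cmd = build_finetune_cmd("./pick2put_dataset/gen_0", "adapt1", "path/to/pretrained_ckpt")
    print(shlex.join(cmd))
```

The returned list can be passed directly to `subprocess.run(cmd, check=True)` from the project root.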

You can still test the bootstrapping performance of AdaptDiffuser on unconditional stacking with

python scripts/unconditional_kuka_planning_eval.py --env_name <fine_tuned_model_dir>

or conditional stacking with

python scripts/conditional_kuka_planning_eval.py --env_name <fine_tuned_model_dir>

or test the adaptation performance on KUKA Pick and Place with

python scripts/pick_kuka_planning_eval.py --env_name <fine_tuned_model_dir>

You can also set --env_name to the pretrained Diffuser model to measure its performance on the KUKA Pick and Place task.
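To compare several models on the same tasks, the evaluation commands above can be generated in one place. This is only a sketch: `EVAL_SCRIPTS` and `eval_commands` are hypothetical names, and the task labels are chosen here for illustration.

```python
EVAL_SCRIPTS = {
    "unconditional_stacking": "scripts/unconditional_kuka_planning_eval.py",
    "conditional_stacking": "scripts/conditional_kuka_planning_eval.py",
    "pick_and_place": "scripts/pick_kuka_planning_eval.py",
}


def eval_commands(env_name):
    """Map each task name to the argument list that evaluates env_name on it."""
    return {
        task: ["python", script, "--env_name", env_name]
        for task, script in EVAL_SCRIPTS.items()
    }
```

Calling `eval_commands` once with the fine-tuned model directory and once with the pretrained model directory yields matching command sets, each of which can be run with `subprocess.run(cmd, check=True)`.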

If you find this code useful for your research, please use the following BibTeX entry.

@inproceedings{liang2023adaptdiffuser,

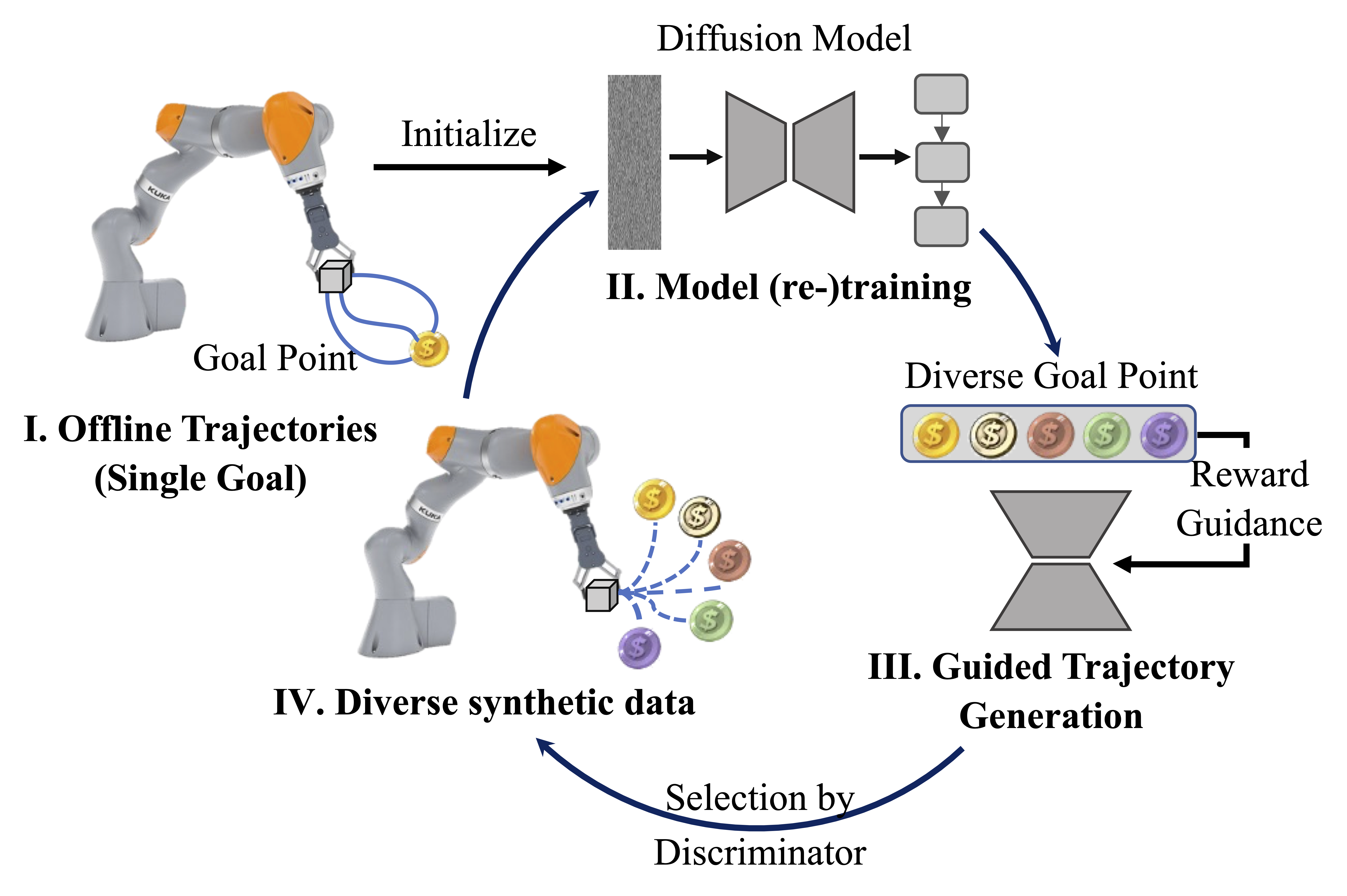

title={AdaptDiffuser: Diffusion Models as Adaptive Self-evolving Planners},

author={Zhixuan Liang and Yao Mu and Mingyu Ding and Fei Ni and Masayoshi Tomizuka and Ping Luo},

booktitle = {International Conference on Machine Learning},

year={2023},

}

The diffusion model implementation is based on Michael Janner's diffuser repo. The organization of this repo and the remote launcher is based on the trajectory-transformer repo. We thank the authors for their great work, and we also thank the members of MMLAB-HKU.