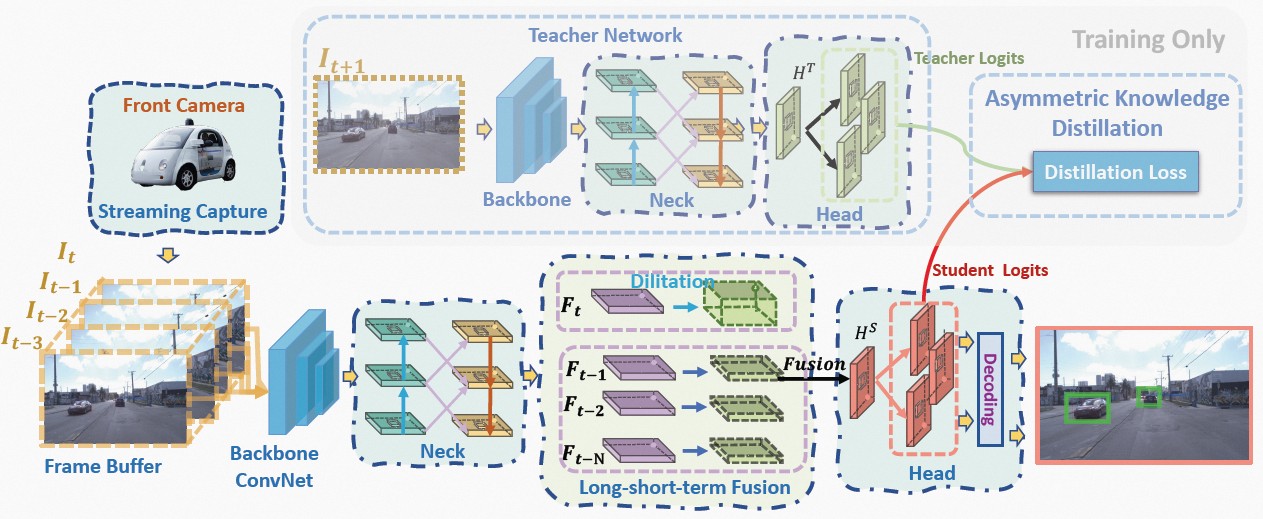

Real-time perception, or streaming perception, is a crucial aspect of autonomous driving that has yet to be thoroughly explored in existing research. To address this gap, we present DAMO-StreamNet, an optimized framework that combines recent advances from the YOLO series with a comprehensive analysis of spatial and temporal perception mechanisms, delivering a cutting-edge solution. The key innovations of DAMO-StreamNet are: (1) A robust neck structure incorporating deformable convolution, enhancing the receptive field and feature alignment capabilities. (2) A dual-branch structure that integrates short-path semantic features and long-path temporal features, improving motion state prediction accuracy. (3) Logits-level distillation for efficient optimization, aligning the logits of teacher and student networks in semantic space. (4) A real-time forecasting mechanism that updates support frame features with the current frame, ensuring seamless streaming perception during inference. Our experiments demonstrate that DAMO-StreamNet surpasses existing state-of-the-art methods, achieving 37.8% (normal size (600, 960)) and 43.3% (large size (1200, 1920)) sAP without using extra data. This work not only sets a new benchmark for real-time perception but also provides valuable insights for future research. Additionally, DAMO-StreamNet can be applied to various autonomous systems, such as drones and robots, paving the way for real-time perception.

| Model | size | velocity | sAP 0.5:0.95 | sAP50 | sAP75 | coco pretrained models | weights |
|---|---|---|---|---|---|---|---|
| DAMO-StreamNet-S | 600×960 | 1x | 31.8 | 52.3 | 31.0 | link | link |
| DAMO-StreamNet-M | 600×960 | 1x | 35.5 | 57.0 | 36.2 | link | link |
| DAMO-StreamNet-L | 600×960 | 1x | 37.8 | 59.1 | 38.6 | link | link |
| DAMO-StreamNet-L | 1200×1920 | 1x | 43.3 | 66.1 | 44.6 | link | link |

Please find the teacher model here.

Refer to StreamYOLO/LongShortNet to set up the environment.

Refer to here to prepare the Argoverse-HD dataset. Put the dataset into ./data, or create a symbolic link to the dataset in ./data.
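If the dataset already lives elsewhere on disk, the symbolic link can be created from Python. The following is only a sketch: the helper `link_dataset` and the default link name `Argoverse-HD` are hypothetical, so check the dataset preparation instructions for the directory names the code actually expects.

```python
import os
from pathlib import Path


def link_dataset(dataset_dir, data_root="./data", link_name="Argoverse-HD"):
    """Create data_root/link_name as a symlink pointing at dataset_dir.

    link_name is an assumed default, not a name confirmed by this repository.
    """
    target = Path(dataset_dir).resolve()
    if not target.is_dir():
        raise FileNotFoundError(f"dataset directory not found: {target}")

    root = Path(data_root)
    root.mkdir(parents=True, exist_ok=True)

    link = root / link_name
    # Leave an existing file, directory, or link untouched.
    if link.is_symlink() or link.exists():
        return link

    os.symlink(target, link, target_is_directory=True)
    return link
```

Call it as `link_dataset("/path/to/dataset")`, replacing the placeholder path with the real location of the downloaded data.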

Please download the models provided above into ./models and organize them as:

    ./models
    ├── checkpoints
    │   ├── streamnet_l_1200x1920.pth
    │   ├── streamnet_l.pth
    │   ├── streamnet_m.pth
    │   └── streamnet_s.pth
    ├── coco_pretrained_models
    │   ├── yolox_l_drfpn.pth
    │   ├── yolox_m_drfpn.pth
    │   └── yolox_s_drfpn.pth
    └── teacher_models
        └── l_s50_still_dfp_flip_ep8_4_gpus_bs_8
            └── best_ckpt.pth
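Before training or evaluation, it can help to confirm that every file is in place. The following is a minimal sketch, not part of the repository: the helper `find_missing_models` is hypothetical, and the file list is copied from the layout above.

```python
from pathlib import Path

# Relative paths copied from the ./models layout in this README.
EXPECTED_FILES = [
    "checkpoints/streamnet_l_1200x1920.pth",
    "checkpoints/streamnet_l.pth",
    "checkpoints/streamnet_m.pth",
    "checkpoints/streamnet_s.pth",
    "coco_pretrained_models/yolox_l_drfpn.pth",
    "coco_pretrained_models/yolox_m_drfpn.pth",
    "coco_pretrained_models/yolox_s_drfpn.pth",
    "teacher_models/l_s50_still_dfp_flip_ep8_4_gpus_bs_8/best_ckpt.pth",
]


def find_missing_models(models_root="./models"):
    """Return the expected files that are not present under models_root."""
    root = Path(models_root)
    return [rel for rel in EXPECTED_FILES if not (root / rel).is_file()]


if __name__ == "__main__":
    missing = find_missing_models()
    if missing:
        print("Missing model files:")
        for rel in missing:
            print("  " + rel)
    else:
        print("All expected model files are present.")
```

Only the checkpoints for the model sizes you plan to run are needed, so a partial list of missing files is not necessarily an error.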

To train:

    bash run_train.sh

To evaluate:

    bash run_eval.sh

Our implementation is mainly based on StreamYOLO and LongShortNet. We gratefully thank the authors for their wonderful work.