- 2023/02/28 Initial code.

- 2023/02/28 Paper released on arXiv.

- 2023/02/17 Demo release.

In this paper, we propose OccDepth, the first stereo Semantic Scene Completion (SSC) method, which fully exploits implicit depth information from stereo images to help recover 3D geometric structures. The Stereo Soft Feature Assignment (Stereo-SFA) module better fuses 3D depth-aware features by implicitly learning the correlation between stereo images. In addition, the Occupancy Aware Depth (OAD) module obtains geometry-aware 3D features through knowledge distillation from pre-trained depth models. Finally, a reformed TartanAir benchmark, named SemanticTartanAir, is provided for further testing OccDepth on the SSC task.
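To make the soft-assignment idea more concrete, here is a minimal sketch. It is not the implementation shipped in this repository. It assumes (for illustration only) that the correlation between the two views is measured as the cosine similarity of the left and right 2D features sampled for the same voxel, and that this similarity weights the averaged feature. The names `cosine_similarity` and `soft_fuse` are hypothetical.

```python
import math
from typing import List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float], eps: float = 1e-8) -> float:
    """Cosine similarity between two feature vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / max(norm_a * norm_b, eps)


def soft_fuse(left_feats: List[Sequence[float]],
              right_feats: List[Sequence[float]]) -> List[List[float]]:
    """Fuse per-voxel left/right features (assumed already sampled per voxel).

    Each voxel gets the mean of its two view features, scaled by how well
    the views agree. Views that disagree contribute a weaker feature.
    """
    fused = []
    for left, right in zip(left_feats, right_feats):
        weight = cosine_similarity(left, right)  # assumption: correlation = cosine similarity
        fused.append([weight * 0.5 * (x + y) for x, y in zip(left, right)])
    return fused
```

In this sketch a voxel whose two views agree keeps its averaged feature, while a voxel with orthogonal view features is suppressed to zero.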

Mesh results compared with ground truth on KITTI-08:

Voxel results compared with ground truth on KITTI-08:

Full demo videos can be downloaded via `git lfs pull`; they are saved as `assets/demo.mp4` and `assets/demo_voxel.mp4`.

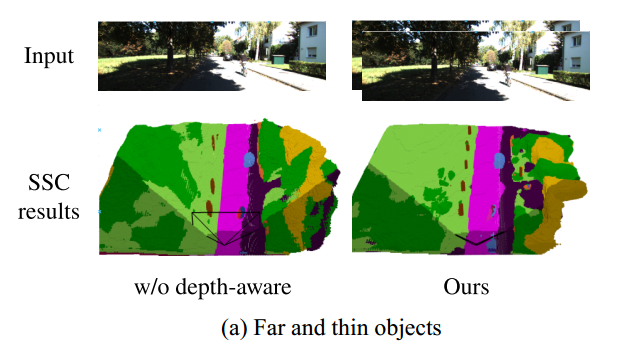

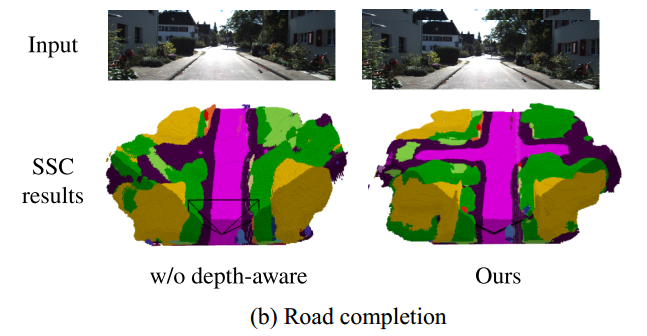

Fig. 1: RGB-based Semantic Scene Completion with and without depth awareness. (a) Our proposed OccDepth method detects smaller and more distant objects. (b) Our proposed OccDepth method completes the road better.

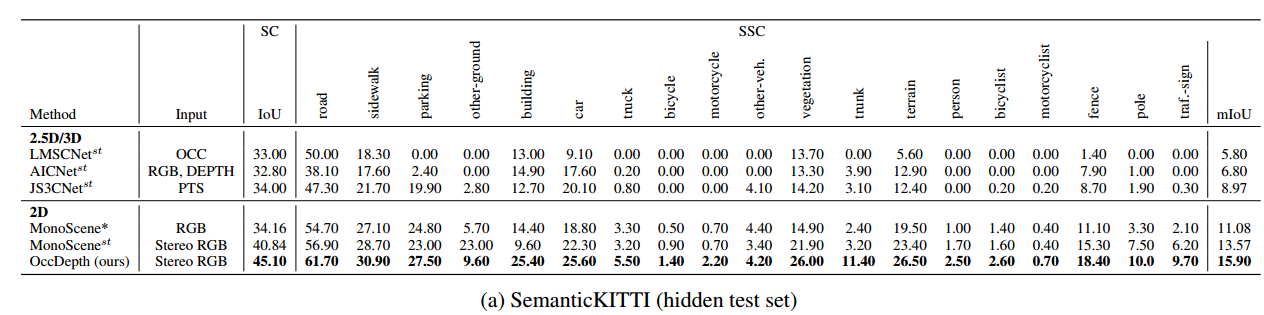

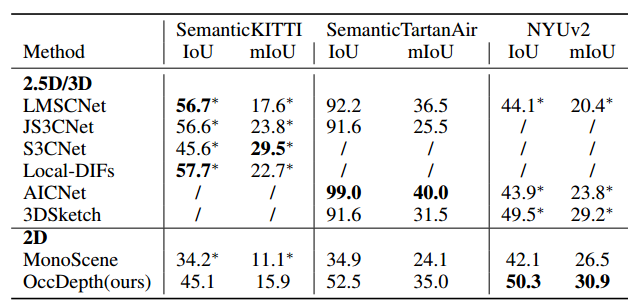

| Method | Input | SC IoU | SSC mIoU |
|---|---|---|---|
| 2.5D/3D | | | |
| LMSCNet(st) | OCC | 33.00 | 5.80 |
| AICNet(st) | RGB, DEPTH | 32.8 | 6.80 |
| JS3CNet(st) | PTS | 39.30 | 9.10 |
| 2D | | | |
| MonoScene | RGB | 34.16 | 11.08 |
| MonoScene(st) | Stereo RGB | 40.84 | 13.57 |
| OccDepth (ours) | Stereo RGB | 45.10 | 15.90 |
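For readers unfamiliar with the two metrics in the table, the sketch below shows how SC IoU and SSC mIoU are commonly computed from flattened voxel labels. It is an illustration, not the evaluation code of this repository. The label conventions (`0` for empty, `255` for ignored voxels) and the choice to skip classes absent from both prediction and ground truth are assumptions of this sketch. The functions return fractions; multiply by 100 to compare with the table.

```python
from typing import Sequence


def sc_iou(pred: Sequence[int], gt: Sequence[int], empty: int = 0, ignore: int = 255) -> float:
    """Scene completion IoU: occupied vs. empty, regardless of semantic class."""
    tp = fp = fn = 0
    for p, g in zip(pred, gt):
        if g == ignore:
            continue
        p_occ, g_occ = p != empty, g != empty
        if p_occ and g_occ:
            tp += 1
        elif p_occ:
            fp += 1
        elif g_occ:
            fn += 1
    denom = tp + fp + fn
    return tp / denom if denom else 0.0


def ssc_miou(pred: Sequence[int], gt: Sequence[int], num_classes: int,
             empty: int = 0, ignore: int = 255) -> float:
    """Mean IoU over semantic classes, excluding the empty class."""
    ious = []
    for c in range(num_classes):
        if c == empty:
            continue
        tp = fp = fn = 0
        for p, g in zip(pred, gt):
            if g == ignore:
                continue
            if p == c and g == c:
                tp += 1
            elif p == c:
                fp += 1
            elif g == c:
                fn += 1
        denom = tp + fp + fn
        if denom:  # assumption: classes absent from both pred and gt are skipped
            ious.append(tp / denom)
    return sum(ious) / len(ious) if ious else 0.0
```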

- Create conda environment:

  `conda create -y -n occdepth python=3.7`

  `conda activate occdepth`

  `conda install pytorch==1.13.1 torchvision==0.14.1 torchaudio==0.13.1 pytorch-cuda=11.7 -c pytorch -c nvidia`

- Install dependencies:

  `pip install -r requirements.txt`

  `conda install -c bioconda tbb=2020.2`
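After installation, a quick sanity check of the pinned versions can save debugging time later. The helper below is a hypothetical sketch, not a script that ships with this repository. It imports each package, reads its `__version__` attribute, and compares it with the pinned version, ignoring local build suffixes such as `+cu117`.

```python
import importlib
from typing import Dict


def check_versions(expected: Dict[str, str]) -> Dict[str, str]:
    """Return a status string per package: 'ok', 'missing', or 'mismatch: ...'."""
    report = {}
    for name, wanted in expected.items():
        try:
            module = importlib.import_module(name)
        except ImportError:
            report[name] = "missing"
            continue
        found = str(getattr(module, "__version__", "unknown"))
        base = found.split("+")[0]  # drop a local build suffix such as "+cu117"
        report[name] = "ok" if base == wanted else "mismatch: found {}".format(found)
    return report


if __name__ == "__main__":
    pinned = {"torch": "1.13.1", "torchvision": "0.14.1", "torchaudio": "0.13.1"}
    for package, status in check_versions(pinned).items():
        print(package, status)
```

Run it inside the activated `occdepth` environment; any line that does not end in `ok` points to a package worth reinstalling.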

- Download KITTI odometry and semantic dataset

- Download preprocessed depth

- Preprocess KITTI semantic data

  `cd OccDepth/`

  `python occdepth/data/semantic_kitti/preprocess.py data_root="/path/to/semantic_kitti" data_preprocess_root="/path/to/kitti/preprocess/folder"`

- Download NYUv2 dataset

- Preprocess NYUv2 data

  `cd OccDepth/`

  `python occdepth/data/NYU/preprocess.py data_root="/path/to/NYU/depthbin" data_preprocess_root="/path/to/NYU/preprocess/folder"`

- Set `DATA_LOG` and `DATA_CONFIG` in `env_{dataset}.sh`, for example:

  `export DATA_LOG=$workdir/logdir/semanticKITTI`

  `export DATA_CONFIG=$workdir/occdepth/config/semantic_kitti/multicam_flospdepth_crp_stereodepth_cascadecls_2080ti.yaml`

- Set `data_root`, `data_preprocess_root` and `data_stereo_depth_root` in the config file (`occdepth/config/xxxx.yaml`), for example:

  `data_root: '/data/dataset/KITTI_Odometry_Semantic'`

  `data_preprocess_root: '/data/dataset/kitti_semantic_preprocess'`

  `data_stereo_depth_root: '/data/dataset/KITTI_Odometry_Stereo_Depth'`
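Misconfigured paths are a common cause of failures at start-up, so it can help to validate the settings above before launching a job. The sketch below is hypothetical and not part of this repository. It assumes the config file has already been loaded into a plain dictionary (for example with a YAML loader of your choice) and only checks that the two environment variables are set and that the three root directories exist.

```python
import os
from typing import Dict, List, Mapping

REQUIRED_ENV = ("DATA_LOG", "DATA_CONFIG")
REQUIRED_ROOTS = ("data_root", "data_preprocess_root", "data_stereo_depth_root")


def find_problems(env: Mapping[str, str], config: Dict[str, str]) -> List[str]:
    """Return human-readable problems; an empty list means the setup looks fine."""
    problems = []
    for name in REQUIRED_ENV:
        if not env.get(name):
            problems.append("environment variable {} is not set".format(name))
    config_path = env.get("DATA_CONFIG")
    if config_path and not os.path.isfile(config_path):
        problems.append("DATA_CONFIG does not point to a file: {}".format(config_path))
    for key in REQUIRED_ROOTS:
        path = config.get(key)
        if not path:
            problems.append("config key {} is missing".format(key))
        elif not os.path.isdir(path):
            problems.append("{} is not an existing directory: {}".format(key, path))
    return problems
```

A typical call would be `find_problems(os.environ, loaded_config)` after `source env_{dataset}.sh`, where `loaded_config` is the dictionary read from the YAML file.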

Generate outputs (4 GPUs, batch size 1 per GPU):

`cd OccDepth/`

`source env_{dataset}.sh`

`python occdepth/scripts/generate_output.py n_gpus=4 batch_size_per_gpu=1`

Evaluate (1 GPU, batch size 1 per GPU):

`cd OccDepth/`

`source env_{dataset}.sh`

`python occdepth/scripts/eval.py n_gpus=1 batch_size_per_gpu=1`

Train (4 GPUs, batch size 1 per GPU):

`cd OccDepth/`

`source env_{dataset}.sh`

`python occdepth/scripts/train.py logdir=${DATA_LOG} n_gpus=4 batch_size_per_gpu=1`

This repository is released under the Apache 2.0 license as found in the LICENSE file.

Our code is based on these excellent open source projects:

Many thanks to them!

- https://github.com/wzzheng/TPVFormer

- https://github.com/FANG-MING/occupancy-for-nuscenes

- https://github.com/nvlabs/voxformer

If you find this project useful in your research, please consider citing:

@article{miao2023occdepth,

Author = {Ruihang Miao and Weizhou Liu and Mingrui Chen and Zheng Gong and Weixin Xu and Chen Hu and Shuchang Zhou},

Title = {OccDepth: A Depth-Aware Method for 3D Semantic Scene Completion},

journal = {arXiv:2302.13540},

Year = {2023},

}

If you have any questions, feel free to open an issue or contact us at miaoruihang@megvii.com, huchen@megvii.com.