Code for the ACM Multimedia Asia 2023 paper "I2SRM: Intra- and Inter-Sample Relationship Modeling for Multimodal Information Extraction".

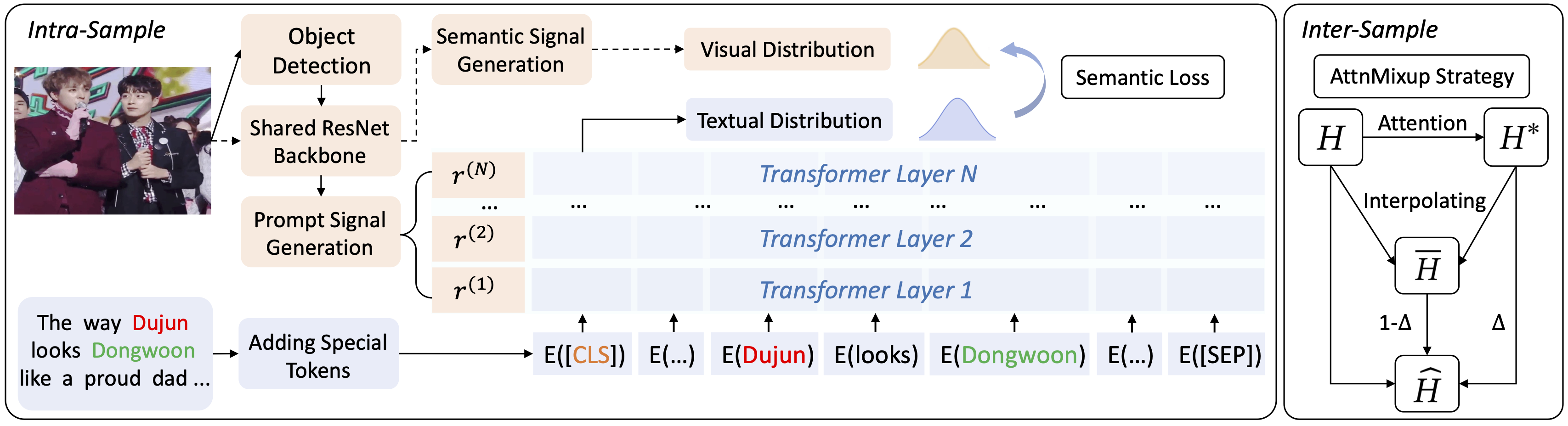

The approach consists of two modules: intra-sample and inter-sample relationship modeling. The intra-sample relationship modeling module rectifies the differing distributions of the modalities. The inter-sample relationship modeling module enhances representations across samples through the AttnMixup strategy.

Please install the following requirements:

transformers==4.11.3

torchvision==0.8.2

torch==1.7.1
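
Because the three packages are pinned to exact versions, it can help to confirm the active environment matches before training. The following is a minimal sketch, not part of this repository: the helper name `check_requirements` and the `PINNED` dictionary are hypothetical, and it assumes Python 3.8+ so that `importlib.metadata` is available. Local build suffixes such as `+cu110` are ignored when comparing.

```python
from importlib.metadata import PackageNotFoundError, version

# Versions copied from the requirements listed above.
PINNED = {"transformers": "4.11.3", "torchvision": "0.8.2", "torch": "1.7.1"}


def check_requirements(pinned=PINNED, get_version=version):
    """Return {package: (wanted, found, ok)} for each pinned package.

    `get_version` is injectable so the check can be exercised without
    the packages being installed (hypothetical convenience, not a repo API).
    """
    report = {}
    for name, wanted in pinned.items():
        try:
            found = get_version(name)
        except PackageNotFoundError:
            found = None  # package is not installed in this environment
        # Drop a local build tag such as "+cu110" before comparing.
        ok = found is not None and found.split("+")[0] == wanted
        report[name] = (wanted, found, ok)
    return report


if __name__ == "__main__":
    for name, (wanted, found, ok) in check_requirements().items():
        status = "ok" if ok else "MISMATCH"
        print(f"{name}: wanted {wanted}, found {found or 'not installed'} [{status}]")
```

Running the script prints one line per package; any line marked `MISMATCH` indicates a version that differs from the pinned one or a package that is missing.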

The multimodal relation extraction dataset MNRE can be downloaded here.

The multimodal named entity recognition datasets Twitter2015 and Twitter2017 can be downloaded here (twitter-2015 and twitter-2017).

To train the I2SRM model, please use the following bash command:

bash run.sh

For testing, delete the "--save_path" line in run.sh and load your checkpoint with "--load_path" instead.

If you find our paper inspiring, please cite:

@article{huang2023i2srm,
  title={I2SRM: Intra-and Inter-Sample Relationship Modeling for Multimodal Information Extraction},
  author={Huang, Yusheng and Lin, Zhouhan},
  journal={arXiv preprint arXiv:2310.06326},
  year={2023}
}