[Project] [Paper] [Related Work: A2RL (for Auto Image Cropping)]

Official Chainer implementation of GP-GAN: Towards Realistic High-Resolution Image Blending

*Example (images not included): source | destination | mask | composited | blended*

GP-GAN (a.k.a. Gaussian-Poisson GAN) is the authors' implementation of the high-resolution image blending algorithm described in:

"GP-GAN: Towards Realistic High-Resolution Image Blending"

Huikai Wu, Shuai Zheng, Junge Zhang, Kaiqi Huang

Given a source image, a destination image and a mask, our algorithm blends the two images according to the mask and generates high-resolution, realistic results. Our algorithm is based on deep generative models such as Wasserstein GAN.
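The "composited" image among the example columns is presumably the naive copy-and-paste input that the blending starts from. Below is a minimal NumPy sketch of that composite. It assumes float images in [0, 1] of equal shape and a single-channel mask in which nonzero marks the source region. The function name `composite` is hypothetical and not part of this repository.

```python
import numpy as np


def composite(src: np.ndarray, dst: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Copy-and-paste composite: take `src` where the mask is set, `dst` elsewhere.

    src, dst: float arrays of shape (H, W, C), values assumed to be in [0, 1].
    mask:     array of shape (H, W); any nonzero value marks the source region
              (so a 0/255 PNG mask works as well as a 0/1 mask).
    """
    if src.shape != dst.shape:
        raise ValueError("src and dst must have the same shape")
    # Binarise the mask and add a channel axis so it broadcasts over colours.
    m = (mask > 0).astype(src.dtype)[..., None]
    return m * src + (1.0 - m) * dst


if __name__ == "__main__":
    src = np.ones((4, 4, 3))    # white source
    dst = np.zeros((4, 4, 3))   # black destination
    mask = np.zeros((4, 4))
    mask[1:3, 1:3] = 1          # paste a 2x2 square in the centre
    print(composite(src, dst, mask)[..., 0])
```

Such a hard paste leaves visible seams and colour mismatches. Those artifacts are what the blending algorithm is meant to remove.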

Contact: Hui-Kai Wu (huikaiwu@icloud.com)

- Install the Python libraries (see Requirements).
- Download the code from GitHub:

  `git clone https://github.com/wuhuikai/GP-GAN.git`

  `cd GP-GAN`

- Download the pretrained models `blending_gan.npz` and `unsupervised_blending_gan.npz` from Google Drive, then put them in `models`.
- Run the Python script:

  `python run_gp_gan.py --src_image images/test_images/src.jpg --dst_image images/test_images/dst.jpg --mask_image images/test_images/mask.png --blended_image images/test_images/result.png`

*Comparison with baselines (images not included): Mask | Copy-and-Paste | Modified-Poisson | Multi-splines | Supervised GP-GAN | Unsupervised GP-GAN*

The code is written in Python 3.5 and requires the following third-party libraries:

- scipy
- numpy
- fuel: `pip install git+https://github.com/mila-udem/fuel.git` (see the official README for installing fuel for details)
- skimage: `pip install scikit-image` (see the official README for installing skimage for details)
- Chainer: `pip install chainer` (see the official README for installing Chainer for details)

NOTE: All experiments were tested with Chainer 1.22.0. The code should work with any Chainer 1.x release without changes.
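Before running any of the scripts, it can help to confirm that the libraries above are importable. The sketch below uses only the standard library. It assumes the usual top-level module names (`skimage` for scikit-image, `fuel`, `chainer`). The 1.x check only mirrors the NOTE above. The helper names are hypothetical.

```python
import importlib.util

# Top-level module names assumed for the packages listed above.
REQUIRED = ["scipy", "numpy", "fuel", "skimage", "chainer"]


def missing_modules(names=REQUIRED):
    """Return the names in `names` that cannot be found on the import path."""
    return [n for n in names if importlib.util.find_spec(n) is None]


def chainer_is_1x(version: str) -> bool:
    """True if a version string such as '1.22.0' belongs to the Chainer 1.x series."""
    return version.split(".")[0] == "1"


if __name__ == "__main__":
    missing = missing_modules()
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        import chainer
        tag = "1.x" if chainer_is_1x(chainer.__version__) else "not 1.x, untested"
        print("Chainer", chainer.__version__, f"({tag})")
```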

Type `python run_gp_gan.py --help` for a complete list of the arguments. Two useful ones:

- `--supervised`: use the unsupervised Blending GAN if set to False
- `--list_path`: process a batch of images according to the list
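The single-image `run_gp_gan.py` command can also be driven from Python, for example to blend several image triples in a loop. The sketch below only assembles the argument list from the four path flags used in the usage command and hands it to `subprocess.run`. The helper names `build_blend_command` and `blend` are hypothetical. `--supervised` and `--list_path` are deliberately left out, because their exact value syntax is not documented here (check `--help`).

```python
import subprocess
import sys
from pathlib import Path


def build_blend_command(src, dst, mask, out, script="run_gp_gan.py"):
    """Assemble the run_gp_gan.py invocation for one source/destination/mask triple."""
    return [
        sys.executable, script,
        "--src_image", str(src),
        "--dst_image", str(dst),
        "--mask_image", str(mask),
        "--blended_image", str(out),
    ]


def blend(src, dst, mask, out):
    """Run the script; raises CalledProcessError if it exits with a non-zero status."""
    subprocess.run(build_blend_command(src, dst, mask, out), check=True)


if __name__ == "__main__":
    test_dir = Path("images/test_images")
    cmd = build_blend_command(test_dir / "src.jpg", test_dir / "dst.jpg",
                              test_dir / "mask.png", test_dir / "result.png")
    print(" ".join(cmd))  # only prints the command; call blend(...) to actually run it
```

For many images, `--list_path` is likely the better choice, since the script would presumably load the model once instead of once per call.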

- Download the Transient Attributes Dataset; see the project website for more details.
- Crop the images in each subfolder:

  `python crop_aligned_images.py --data_root [Path for imageAlignedLD in Transient Attributes Dataset]`

- Train Blending GAN:

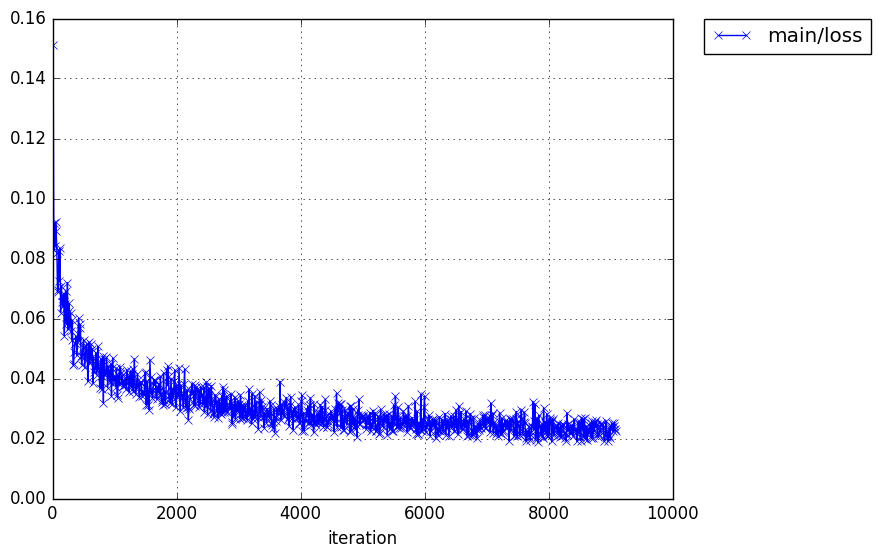

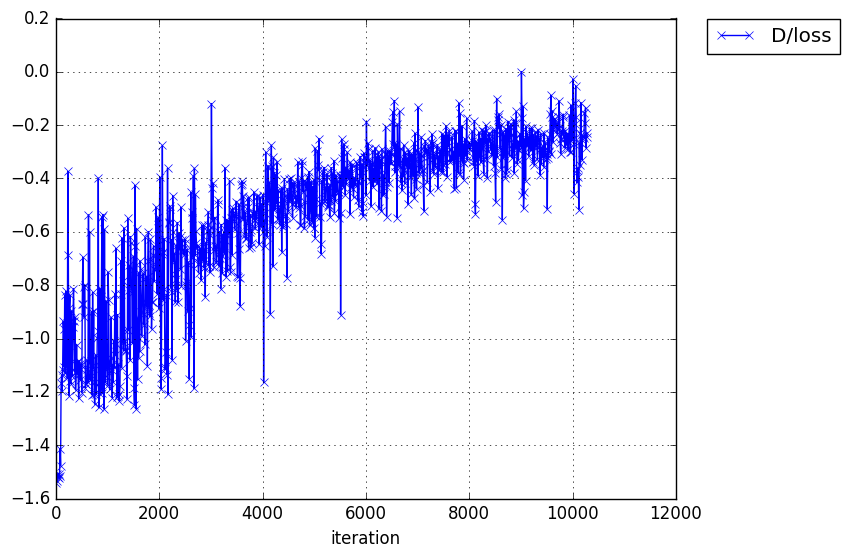

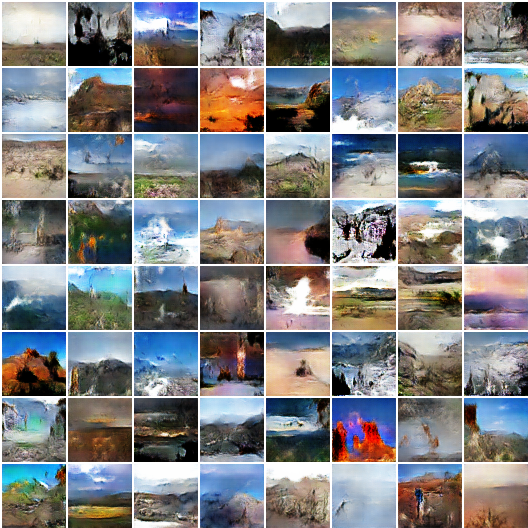

  `python train_blending_gan.py --data_root [Path for cropped aligned images of Transient Attributes Dataset]`

- Training Curve
- Result (images not included): Training Set | Validation Set

- Download the HDF5 dataset of outdoor natural images, `outdoor_64.hdf5` (1.4 GB), which contains 150K landscape images from the MIT Places dataset.
- Train the unsupervised Blending GAN:

  `python train_wasserstein_gan.py --data_root [Path for outdoor_64.hdf5]`

NOTE: Type `python [SCRIPT_NAME].py --help` for more details about the arguments.
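`train_wasserstein_gan.py` trains the unsupervised model as a Wasserstein GAN. For orientation, the sketch below writes out the standard WGAN objectives with weight clipping (Arjovsky et al., 2017) in NumPy. It illustrates the general technique and is not code from this repository. The clipping constant 0.01 is the WGAN paper's default; whether the script uses clipping, or this value, is an assumption.

```python
import numpy as np


def critic_loss(d_real: np.ndarray, d_fake: np.ndarray) -> float:
    """WGAN critic loss to minimise: -(E[D(x_real)] - E[D(x_fake)])."""
    return float(np.mean(d_fake) - np.mean(d_real))


def generator_loss(d_fake: np.ndarray) -> float:
    """WGAN generator loss to minimise: -E[D(G(z))]."""
    return float(-np.mean(d_fake))


def clip_weights(params, c=0.01):
    """Clip every critic parameter array in place to [-c, c] (crude Lipschitz constraint)."""
    for p in params:
        np.clip(p, -c, c, out=p)


if __name__ == "__main__":
    d_real = np.array([0.8, 1.2])   # critic scores on real images
    d_fake = np.array([0.1, -0.1])  # critic scores on generated images
    print("critic loss:", critic_loss(d_real, d_fake))
    print("generator loss:", generator_loss(d_fake))
```

Unlike a standard GAN discriminator, the critic outputs an unbounded score rather than a probability. The negated critic loss serves as an estimate of the Wasserstein distance, which is why WGAN training curves are commonly read as a progress signal.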

- The folder name on LabelMe is `/transient_attributes_101`.
- The processed masks are in the folder `mask` in this repository.
- The corresponding scripts for processing the raw XMLs from LabelMe are also in the folder `mask`.
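The scripts in `mask` are the reference for how the raw XMLs are actually processed. As an illustration only, the sketch below rasterises polygon annotations into a binary mask using the standard library and NumPy. It assumes the standard LabelMe layout: `<object>` elements, each holding a `<polygon>` of `<pt><x>X</x><y>Y</y></pt>` vertices. The function names are hypothetical. The even-odd point-in-polygon test at pixel centres is one simple choice, not necessarily the repository's.

```python
import xml.etree.ElementTree as ET

import numpy as np


def polygon_mask(points, height, width):
    """Rasterise one polygon [(x, y), ...] with the even-odd rule at pixel centres."""
    ys, xs = np.mgrid[0:height, 0:width]
    px, py = xs + 0.5, ys + 0.5  # assumption: vertex coordinates are in pixel units
    inside = np.zeros((height, width), dtype=bool)
    n = len(points)
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]
        # Pixel centres whose rightward horizontal ray straddles this edge.
        crosses = (y1 > py) != (y2 > py)
        with np.errstate(divide="ignore", invalid="ignore"):
            # x where the edge meets the ray; horizontal edges never count (crosses is False).
            x_at = x1 + (py - y1) * (x2 - x1) / (y2 - y1)
            inside ^= crosses & (px < x_at)
    return inside


def labelme_xml_to_mask(xml_text, height, width):
    """Union of all object polygons in a LabelMe annotation, as a uint8 mask (0 or 255)."""
    root = ET.fromstring(xml_text)
    mask = np.zeros((height, width), dtype=bool)
    for obj in root.iter("object"):
        poly = obj.find("polygon")
        if poly is None:
            continue
        pts = [(float(pt.findtext("x")), float(pt.findtext("y")))
               for pt in poly.findall("pt")]
        if len(pts) >= 3:  # fewer than 3 vertices cannot enclose an area
            mask |= polygon_mask(pts, height, width)
    return mask.astype(np.uint8) * 255


if __name__ == "__main__":
    xml = ("<annotation><object><name>sky</name><polygon>"
           "<pt><x>1</x><y>1</y></pt><pt><x>5</x><y>1</y></pt>"
           "<pt><x>5</x><y>4</y></pt><pt><x>1</x><y>4</y></pt>"
           "</polygon></object></annotation>")
    print((labelme_xml_to_mask(xml, height=6, width=8) > 0).astype(int))
```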

Download the pretrained Caffe model and convert it to a Chainer model:

`python load_caffe_model.py`

Or download the pretrained Chainer model directly.

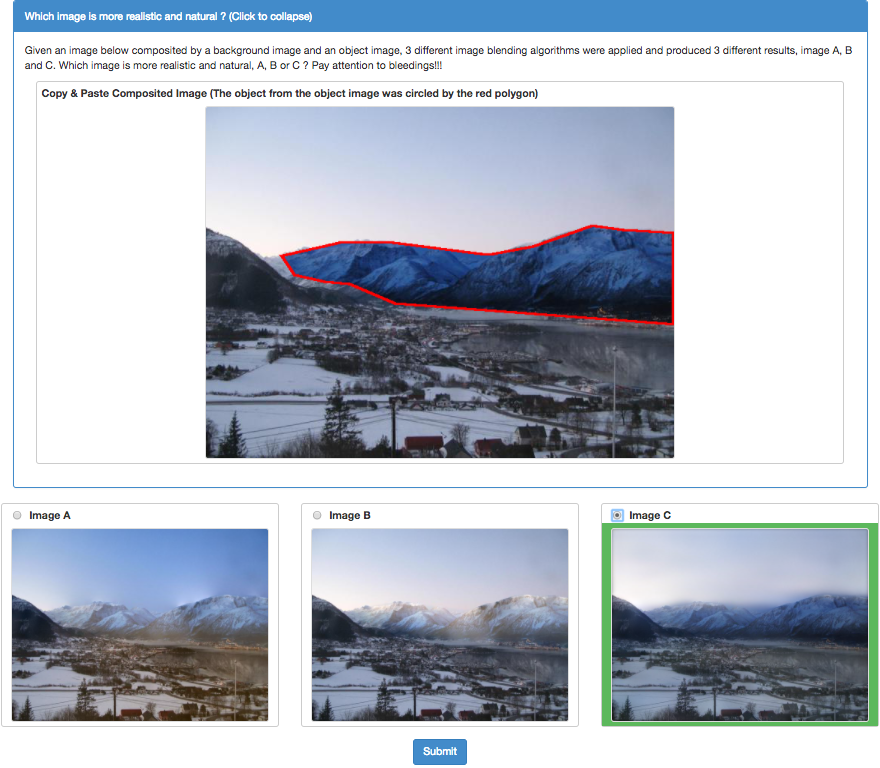

`python predict_realism.py --list_path [List File]`

- Install lighttpd:

  `sudo apt-get install lighttpd`

- Start the server by running the script in the folder `user_study`:

  `sh light_tpd_server.sh [Image Folder] [Port]`

See `user_study.html` in the folder `user_study` for details.

Code for baseline methods can be downloaded from here.

The modified code for the baseline methods is also in the folder `Baseline`.

- Experiment with more gradient operators like Sobel or edge detectors like Canny.
- Add more constraints for optimizing the `z` vector, like Perceptual Loss.
- Try different losses like CNN-MRF.
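Trying another gradient operator, as the first item suggests, amounts to swapping how image gradients are computed. The NumPy-only sketch below contrasts simple forward differences with the 3x3 Sobel operator. Both function names are hypothetical, and which operator the current code uses is not stated in this README.

```python
import numpy as np


def forward_diff(img):
    """Horizontal and vertical forward differences; zero on the last column/row."""
    img = np.asarray(img, dtype=float)  # avoid unsigned-integer wrap-around
    gx = np.zeros_like(img)
    gy = np.zeros_like(img)
    gx[:, :-1] = img[:, 1:] - img[:, :-1]
    gy[:-1, :] = img[1:, :] - img[:-1, :]
    return gx, gy


def sobel(img):
    """3x3 Sobel responses (x: left-to-right, y: top-to-bottom), edge-replicated border."""
    p = np.pad(np.asarray(img, dtype=float), 1, mode="edge")
    # Shifted views of the padded image, each aligned with the output pixel grid.
    tl, tc, tr = p[:-2, :-2], p[:-2, 1:-1], p[:-2, 2:]
    ml, mr = p[1:-1, :-2], p[1:-1, 2:]
    bl, bc, br = p[2:, :-2], p[2:, 1:-1], p[2:, 2:]
    gx = (tr + 2 * mr + br) - (tl + 2 * ml + bl)
    gy = (bl + 2 * bc + br) - (tl + 2 * tc + tr)
    return gx, gy


if __name__ == "__main__":
    step = np.zeros((5, 5))
    step[:, 3:] = 1.0  # vertical step edge between columns 2 and 3
    print(forward_diff(step)[0])
    print(sobel(step)[0])
```

On a vertical step edge, the forward difference responds in a single column. Sobel spreads a smoothed, larger response over the two columns around the edge, trading localisation for robustness to noise.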

@article{wu2017gp,
  title={GP-GAN: Towards Realistic High-Resolution Image Blending},
  author={Wu, Huikai and Zheng, Shuai and Zhang, Junge and Huang, Kaiqi},
  journal={arXiv preprint arXiv:1703.07195},
  year={2017}
}