Update: Permission is required for using Facebook Graph API to get information from public pages not owned by yourself

The Rfacebook package is authored and maintained by Pablo Barbera and Michael Piccirilli

This demo will provide a brief introduction in

- setting up and using the Rfacebook package to scrape public pages posts and comments.

- performing a simple lexicon-based sentiment analysis

- performing text frequency analysis with the use of word cloud will be applied

- plotting a simple trend analysis based on the page's "likes", "shares" and "comments"

- creating a heatmap of the page posts by day and time

- and the page's posting frequency.

- Installing the Rfacebook package

install.packages("Rfacebook")

library(Rfacebook)- Getting Temporary Access Token

- Get temporary (2hrs) FB access token via: https://developers.facebook.com/tools/explorer > click on Get Token

- Replace token <- "" with your token code

- You will need Graph API v2.6 and higher to get Reactions (love, haha, wow, sad, angry)

- Creating an App on Facebook Platform

- Create an app via: https://developers.facebook.com/

- Replace app_id="xxx" with your App ID code

- Replace app_secret="xxx" with your App Secret code

token <- "xxxxxxxxxx"

fb_oauth <- fbOAuth(app_id="xxx",app_secret="xxx",extended_permissions = TRUE)

save(fb_oauth, file="fb_oauth")

load("fb_oauth")- Get the latest 5000 posts their respective Reactions information from FB Page thatsmyfairprice

ntucPosts <- getPage("thatsmyfairprice", token, n = 5000, reactions = T)head(ntucPosts)| id | likes_count | from_id | from_name | message | created_time | type | link | story | comments_count | shares_count | love_count | haha_count | wow_count | sad_count | angry_count | post_datetime |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 124299741408_10150633686196409 | 5 | 124299741408 | NTUC FairPrice | NA | 1973-01-01T08:00:00+0000 | link | https://www.facebook.com/124299741408/posts/10150633686196409/ | NTUC FairPrice added a life event from 1973: Founded in 1973. | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1973-01-01 08:00:00 |

| 124299741408_10150856484056409 | 8 | 124299741408 | NTUC FairPrice | NA | 1983-01-01T08:00:00+0000 | photo | https://www.facebook.com/thatsmyfairprice/photos/p.10150856484011409/10150856484011409/?type=3 | NTUC FairPrice added a life event from 1983: NTUC FairPrice Co-operative Ltd Formed. | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1983-01-01 08:00:00 |

| 124299741408_10150856485356409 | 3 | 124299741408 | NTUC FairPrice | NA | 1983-06-01T07:00:00+0000 | link | https://www.facebook.com/124299741408/posts/10150856485356409/ | NTUC FairPrice added a life event from June 1983: Used Textbook Project Launched. | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1983-06-01 07:00:00 |

| 124299741408_10150856487136409 | 6 | 124299741408 | NTUC FairPrice | NA | 1985-01-01T08:00:00+0000 | photo | https://www.facebook.com/thatsmyfairprice/photos/p.10150856487101409/10150856487101409/?type=3 | NTUC FairPrice added a life event from 1985: FairPrice Housebrand Products Introduced. | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1985-01-01 08:00:00 |

| 124299741408_10150856489041409 | 1 | 124299741408 | NTUC FairPrice | NA | 1991-01-01T08:00:00+0000 | photo | https://www.facebook.com/thatsmyfairprice/photos/p.10150856489011409/10150856489011409/?type=3 | NTUC FairPrice added a life event from 1991: First Retailer to Implement Bar-Coding at Check-Out Counters. | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1991-01-01 08:00:00 |

| 124299741408_10150856491601409 | 3 | 124299741408 | NTUC FairPrice | NA | 1994-01-01T08:00:00+0000 | photo | https://www.facebook.com/thatsmyfairprice/photos/p.10150856491566409/10150856491566409/?type=3 | NTUC FairPrice added a life event from 1994: Everyday Low Price (EDLP) Essential Items Introduced. | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1994-01-01 08:00:00 |

Check how many posts are scraped from the FB page

nrow(ntucPosts)2473

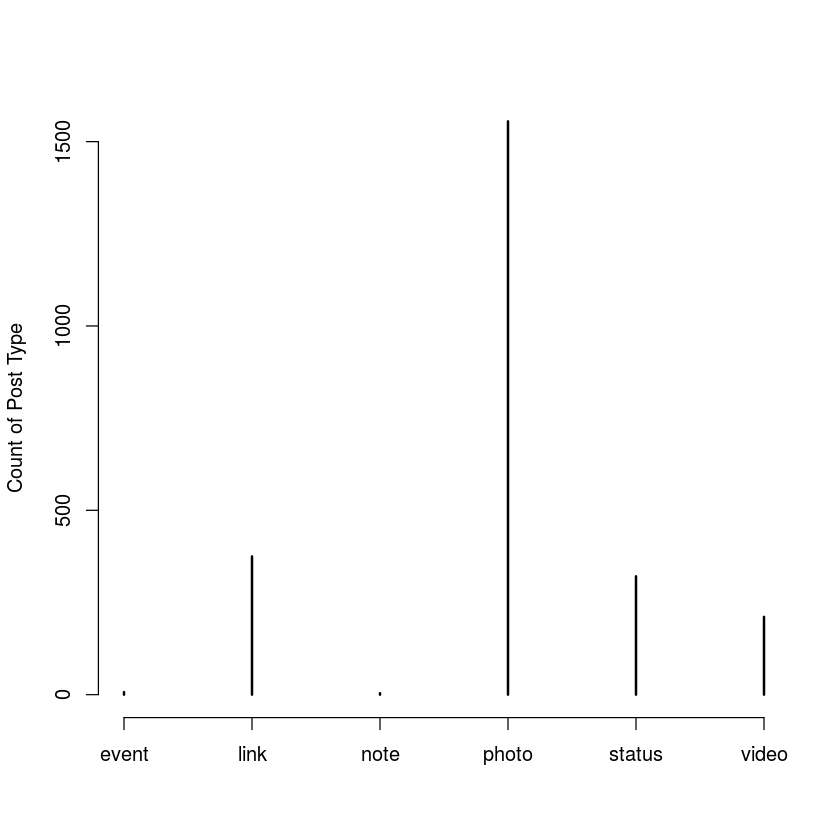

- Checking the distribution of post type of this FB page

table(ntucPosts$type) event link note photo status video

7 375 4 1555 321 211

plot(table(ntucPosts$type),ylab="Count of Post Type")- Let's take a look at the number of Likes & Reactions in each Post Type

install.packages("sqldf")

library(sqldf)postType <- sqldf("select type, count(type) as total_type_count,

sum(shares_count) as total_shares,

sum(likes_count) as total_likes,

sum(love_count) as total_love,

sum(haha_count) as total_haha,

sum(wow_count) as total_wow,

sum(sad_count) as total_sad,

sum(angry_count) as total_angry

from ntucPosts group by type")postType| type | total_type_count | total_shares | total_likes | total_love | total_haha | total_wow | total_sad | total_angry |

|---|---|---|---|---|---|---|---|---|

| event | 7 | 2 | 2786 | 10 | 1 | 0 | 0 | 0 |

| link | 375 | 9710 | 83335 | 560 | 93 | 484 | 12 | 18 |

| note | 4 | 4 | 26 | 0 | 0 | 0 | 0 | 0 |

| photo | 1555 | 34243 | 424959 | 3014 | 209 | 1419 | 172 | 138 |

| status | 321 | 2016 | 17255 | 6 | 47 | 20 | 8 | 192 |

| video | 211 | 38630 | 82989 | 1593 | 295 | 392 | 55 | 65 |

- Loop through all the posts and get their respective comments

ntucComment <- list()

for (i in 1:length(ntucPosts$id)){

ntucComment[[i]] <- getPost(ntucPosts$id[i], token, likes=F, comments=T)

ntucComment[[i]][['reactions']] <- getReactions(post=ntucPosts$id[i], token)

if (nrow(ntucComment[[i]][["comments"]]) == 0)

ntucComment[[i]][['comments']][1,] <- c(NA,NA,NA,NA,NA,NA,NA)

}

ntucComments <- do.call(rbind, lapply(ntucComment, data.frame, stringsAsFactors=FALSE))head(ntucComments)| post.from_id | post.from_name | post.message | post.created_time | post.type | post.link | post.id | post.likes_count | post.comments_count | post.shares_count | ... | comments.likes_count | comments.comments_count | comments.id | reactions.id | reactions.likes_count | reactions.love_count | reactions.haha_count | reactions.wow_count | reactions.sad_count | reactions.angry_count |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 124299741408 | NTUC FairPrice | NA | 1973-01-01T08:00:00+0000 | link | https://www.facebook.com/124299741408/posts/10150633686196409/ | 124299741408_10150633686196409 | 5 | 0 | 0 | ... | NA | NA | NA | 124299741408_10150633686196409 | 5 | 0 | 0 | 0 | 0 | 0 |

| 124299741408 | NTUC FairPrice | NA | 1983-01-01T08:00:00+0000 | photo | https://www.facebook.com/thatsmyfairprice/photos/p.10150856484011409/10150856484011409/?type=3 | 124299741408_10150856484056409 | 8 | 0 | 1 | ... | NA | NA | NA | 124299741408_10150856484056409 | 8 | 0 | 0 | 0 | 0 | 0 |

| 124299741408 | NTUC FairPrice | NA | 1983-06-01T07:00:00+0000 | link | https://www.facebook.com/124299741408/posts/10150856485356409/ | 124299741408_10150856485356409 | 3 | 0 | 0 | ... | NA | NA | NA | 124299741408_10150856485356409 | 3 | 0 | 0 | 0 | 0 | 0 |

| 124299741408 | NTUC FairPrice | NA | 1985-01-01T08:00:00+0000 | photo | https://www.facebook.com/thatsmyfairprice/photos/p.10150856487101409/10150856487101409/?type=3 | 124299741408_10150856487136409 | 6 | 0 | 0 | ... | NA | NA | NA | 124299741408_10150856487136409 | 6 | 0 | 0 | 0 | 0 | 0 |

| 124299741408 | NTUC FairPrice | NA | 1991-01-01T08:00:00+0000 | photo | https://www.facebook.com/thatsmyfairprice/photos/p.10150856489011409/10150856489011409/?type=3 | 124299741408_10150856489041409 | 1 | 0 | 0 | ... | NA | NA | NA | 124299741408_10150856489041409 | 1 | 0 | 0 | 0 | 0 | 0 |

| 124299741408 | NTUC FairPrice | NA | 1994-01-01T08:00:00+0000 | photo | https://www.facebook.com/thatsmyfairprice/photos/p.10150856491566409/10150856491566409/?type=3 | 124299741408_10150856491601409 | 3 | 0 | 0 | ... | NA | NA | NA | 124299741408_10150856491601409 | 3 | 0 | 0 | 0 | 0 | 0 |

nrow(ntucComments)19518

There are 19518 rows of comments scraped but there are not actually 19518 comments being made. This is because some of the posts do not have any comments made and their respective comments columns will show NA.

First, let's proceed to standardize the dataframe variable names

names(ntucComments)- 'post.from_id'

- 'post.from_name'

- 'post.message'

- 'post.created_time'

- 'post.type'

- 'post.link'

- 'post.id'

- 'post.likes_count'

- 'post.comments_count'

- 'post.shares_count'

- 'comments.from_id'

- 'comments.from_name'

- 'comments.message'

- 'comments.created_time'

- 'comments.likes_count'

- 'comments.comments_count'

- 'comments.id'

- 'reactions.id'

- 'reactions.likes_count'

- 'reactions.love_count'

- 'reactions.haha_count'

- 'reactions.wow_count'

- 'reactions.sad_count'

- 'reactions.angry_count'

- We will replace all . with _ instead

names(ntucComments) <- gsub("\\.", "_", names(ntucComments))

names(ntucComments)- 'post_from_id'

- 'post_from_name'

- 'post_message'

- 'post_created_time'

- 'post_type'

- 'post_link'

- 'post_id'

- 'post_likes_count'

- 'post_comments_count'

- 'post_shares_count'

- 'comments_from_id'

- 'comments_from_name'

- 'comments_message'

- 'comments_created_time'

- 'comments_likes_count'

- 'comments_comments_count'

- 'comments_id'

- 'reactions_id'

- 'reactions_likes_count'

- 'reactions_love_count'

- 'reactions_haha_count'

- 'reactions_wow_count'

- 'reactions_sad_count'

- 'reactions_angry_count'

- Create a function that coverts FB date format to GMT

format.facebook.date <- function(datestring) {

date <- as.POSIXct(datestring, format = "%Y-%m-%dT%H:%M:%S+0000", tz="GMT")

}- Adding two new variable post_datetime & comments_datetime to ntucComments dataframe

This two variable will use the format.facebook.date function to convert the original FB date format to GMT format

ntucComments$comments_datetime <- format.facebook.date(ntucComments$comments_created_time)

ntucComments$post_datetime <- format.facebook.date(ntucComments$post_created_time)- Convert the comments variable column to ASCII

ntucComments$comments_message <- iconv(ntucComments$comments_message, "ASCII", "UTF-8", sub="")- Remove comments made by the organisation itself

ntucCommentsClean <- subset(ntucComments, comments_from_name != "NTUC FairPrice")- Substituting emoticons with text/descriptions

ntucCommentsClean$comments_message <- gsub(":-)", " happy ", ntucCommentsClean$comments_message)

ntucCommentsClean$comments_message <- gsub(";-)", " happy ", ntucCommentsClean$comments_message)

ntucCommentsClean$comments_message <- gsub(":)", " happy ", ntucCommentsClean$comments_message)

ntucCommentsClean$comments_message <- gsub(";)", " happy ", ntucCommentsClean$comments_message)

ntucCommentsClean$comments_message <- gsub("=p", " happy ", ntucCommentsClean$comments_message)

ntucCommentsClean$comments_message <- gsub(":p", " happy ", ntucCommentsClean$comments_message)

ntucCommentsClean$comments_message <- gsub(":P", " happy ", ntucCommentsClean$comments_message)

ntucCommentsClean$comments_message <- gsub("=P", " happy ", ntucCommentsClean$comments_message)

ntucCommentsClean$comments_message <- gsub("=)", " happy ", ntucCommentsClean$comments_message)

ntucCommentsClean$comments_message <- gsub(":-)", " happy ", ntucCommentsClean$comments_message)

ntucCommentsClean$comments_message <- gsub("<3", " love ", ntucCommentsClean$comments_message)

ntucCommentsClean$comments_message <- gsub(":\\(", " sad ", ntucCommentsClean$comments_message)

ntucCommentsClean$comments_message <- gsub(":-\\(", " sad ", ntucCommentsClean$comments_message)

ntucCommentsClean$comments_message <- gsub(":x", " oops ", ntucCommentsClean$comments_message)

ntucCommentsClean$comments_message <- gsub(":X", " oops ", ntucCommentsClean$comments_message)- Substituting line breaks, tabs, digits, url and punctuations with empty space

ntucCommentsClean$comments_message <- gsub("\n", " ", ntucCommentsClean$comments_message)

ntucCommentsClean$comments_message <- gsub("\t", " ", ntucCommentsClean$comments_message)

ntucCommentsClean$comments_message <- gsub("\\d", "", ntucCommentsClean$comments_message)

ntucCommentsClean$comments_message <- gsub("http[^[:blank:]]+", "", ntucCommentsClean$comments_message)

ntucCommentsClean$comments_message <- gsub("[[:punct:]]", "", ntucCommentsClean$comments_message)- Removing rows with less than 3 characters (I deemed to be not useful at all for text analysis)

ntucCommentsClean <- subset(ntucCommentsClean, nchar(comments_message) > 2)- load positive and negative lexicons

positives = readLines("positive-words.txt")

negatives = readLines("negative-words.txt")- Adding custom abbreviation / internet slangs to lexicon

positives = c(positives, 'thx', 'congrats', 'luv')

negatives = c(negatives, 'wtf', 'cancellation')- Installing required packages

install.packages("stringr")

install.packages("dplyr")

install.packages("plyr")

library(stringr)

library(dplyr)

library(plyr)Installing package into '/home/nbcommon/R'

(as 'lib' is unspecified)

Installing package into '/home/nbcommon/R'

(as 'lib' is unspecified)

Installing package into '/home/nbcommon/R'

(as 'lib' is unspecified)

Attaching package: 'dplyr'

The following objects are masked from 'package:stats':

filter, lag

The following objects are masked from 'package:base':

intersect, setdiff, setequal, union

------------------------------------------------------------------------------

You have loaded plyr after dplyr - this is likely to cause problems.

If you need functions from both plyr and dplyr, please load plyr first, then dplyr:

library(plyr); library(dplyr)

------------------------------------------------------------------------------

Attaching package: 'plyr'

The following objects are masked from 'package:dplyr':

arrange, count, desc, failwith, id, mutate, rename, summarise,

summarize

- Creating a simple scoring sentiment analysis function

score.sentiment = function(sentences, pos.words, neg.words, .progress='none')

{

require(plyr)

require(stringr)

scores = laply(sentences, function(sentence, pos.words, neg.words) {

sentence = gsub('[[:punct:]]', '', sentence)

sentence = gsub('[[:cntrl:]]', '', sentence)

sentence = gsub('\\d+', '', sentence)

sentence = tolower(sentence)

word.list = str_split(sentence, '\\s+')

words = unlist(word.list)

pos.matches = match(words, pos.words)

neg.matches = match(words, neg.words)

pos.matches = !is.na(pos.matches)

neg.matches = !is.na(neg.matches)

score = sum(pos.matches) - sum(neg.matches)

return(score)

}, pos.words, neg.words, .progress=.progress )

scores.df = data.frame(score=scores, text=sentences)

return(scores.df)

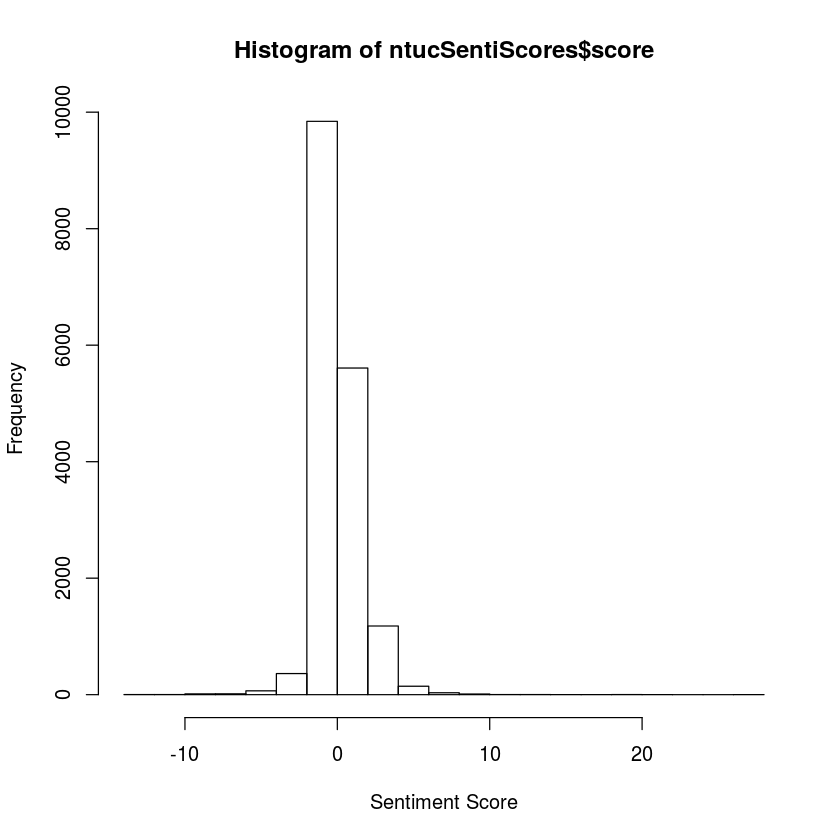

}ntucSentiScores <- score.sentiment(ntucCommentsClean$comments_message,positives,negatives,.progress = "text") |======================================================================| 100%

hist(ntucSentiScores$score,xlab="Sentiment Score")he histogram shows that most of the comments have a score hovering around the 0 score mark. The histogram depicts a normal distribution indicating that most of the comments are neutral with some positive and negative comments

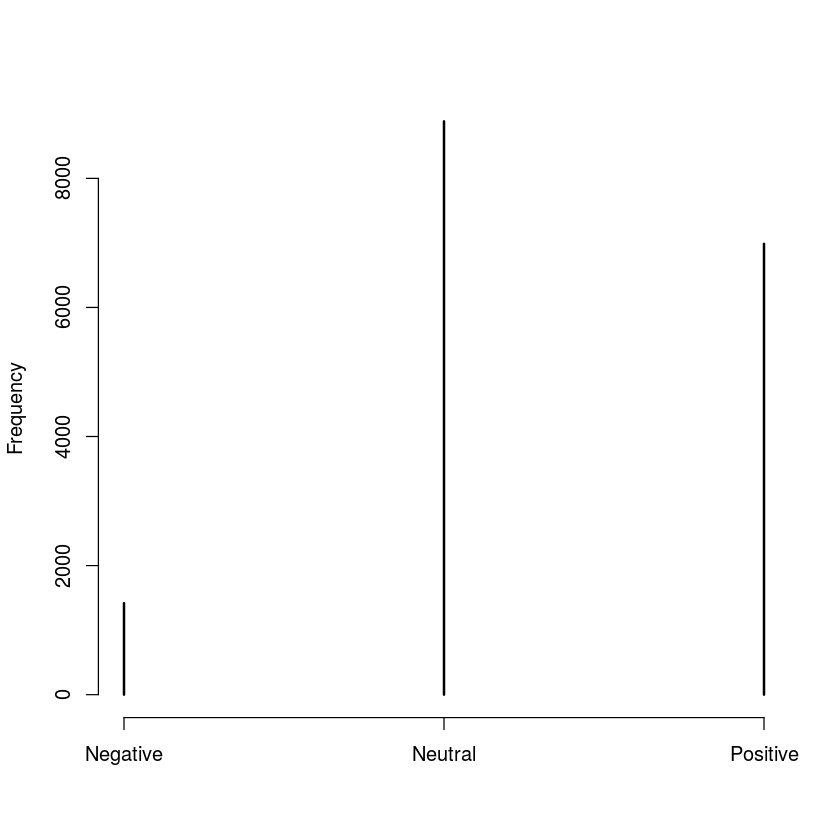

- Now, let's add a new variable sentiment_polar to determine the sentiment of the comment

ntucCommentsClean$sentiment <- ntucSentiScores$score

ntucCommentsClean$sentiment_polar <- ifelse(ntucCommentsClean$sentiment == 0, "Neutral", ifelse(ntucCommentsClean$sentiment > 0, "Positive", "Negative"))head(ntucCommentsClean, n=2)| post_from_id | post_from_name | post_message | post_created_time | post_type | post_link | post_id | post_likes_count | post_comments_count | post_shares_count | ... | reactions_likes_count | reactions_love_count | reactions_haha_count | reactions_wow_count | reactions_sad_count | reactions_angry_count | comments_datetime | post_datetime | sentiment | sentiment_polar | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 13 | 124299741408 | NTUC FairPrice | NA | 2004-01-01T08:00:00+0000 | photo | https://www.facebook.com/thatsmyfairprice/photos/p.10150858221106409/10150858221106409/?type=3 | 124299741408_10150858221126409 | 1 | 3 | 0 | ... | 1 | 0 | 0 | 0 | 0 | 0 | 2012-06-08 09:23:48 | 2004-01-01 08:00:00 | 0 | Neutral |

| 15 | 124299741408 | NTUC FairPrice | NA | 2004-01-01T08:00:00+0000 | photo | https://www.facebook.com/thatsmyfairprice/photos/p.10150858221106409/10150858221106409/?type=3 | 124299741408_10150858221126409 | 1 | 3 | 0 | ... | 1 | 0 | 0 | 0 | 0 | 0 | 2015-03-04 19:44:11 | 2004-01-01 08:00:00 | -1 | Negative |

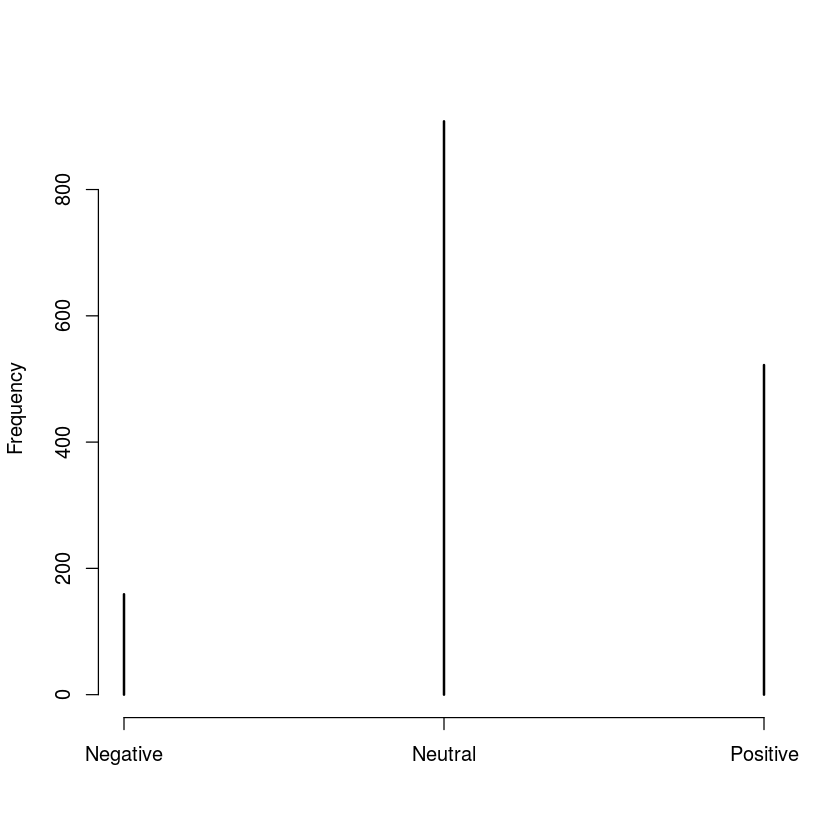

table(ntucCommentsClean$sentiment_polar) Negative Neutral Positive

1418 8884 6986

plot(table(ntucCommentsClean$sentiment_polar),ylab="Frequency")mean(ntucCommentsClean$sentiment)0.56461129106895

mean sentiment score of 0.56 indicates a mostly neutral and positive comments

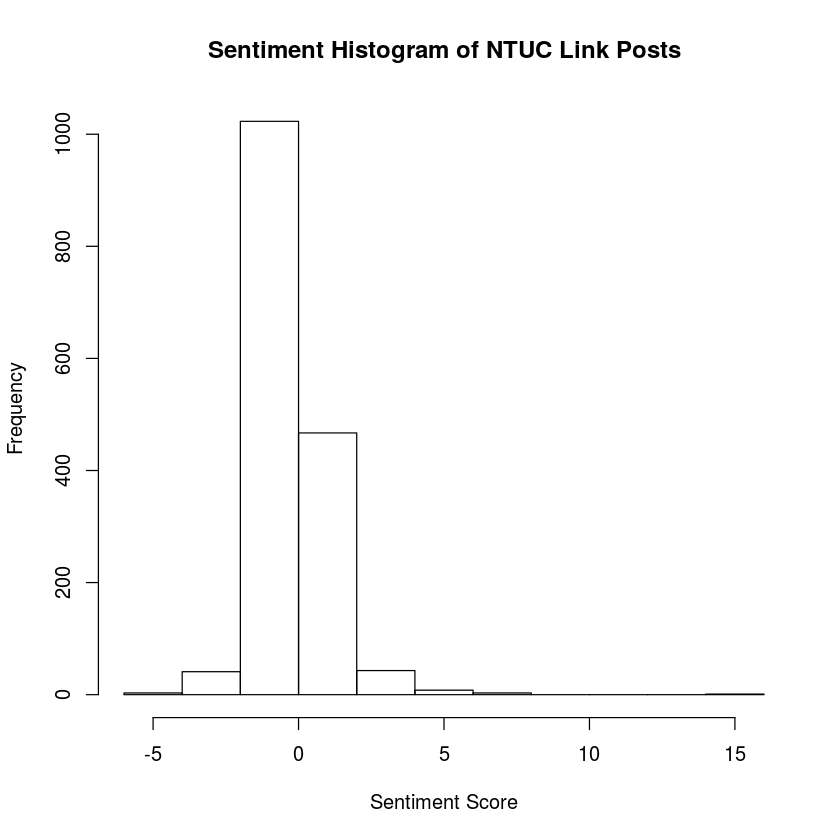

You can continue to check the comments sentiments based on the post type as well

For e.g. sentiment for post_type = "link"

ntucLink <- subset(ntucCommentsClean, post_type == "link")

hist(ntucLink$sentiment, xlab = "Sentiment Score", main = "Sentiment Histogram of NTUC Link Posts")

table(ntucLink$sentiment_polar)

mean(ntucLink$sentiment)

plot(table(ntucLink$sentiment_polar),ylab="Frequency")Negative Neutral Positive

159 908 522

0.351793580868471

- Installing required packages for Text Analysis

Needed = c("tm", "SnowballC", "RColorBrewer", "wordcloud")

install.packages(Needed, dependencies=TRUE)Installing packages into '/home/nbcommon/R'

(as 'lib' is unspecified)

Warning message:

"dependencies 'Rcampdf', 'Rgraphviz', 'tm.lexicon.GeneralInquirer' are not available"also installing the dependency 'Rpoppler'

Warning message in install.packages(Needed, dependencies = TRUE):

"installation of package 'Rpoppler' had non-zero exit status"

- Creating corpus, lower case transformation, removing of punctuation / numbers and stopwords

library(tm)

# create corpus

corpus = Corpus(VectorSource(ntucCommentsClean$comments_message))

# Conversion to lower case

corpus = tm_map(corpus, content_transformer(tolower))

# Removal of punctuation

corpus = tm_map(corpus, removePunctuation)

# Removal of numbers

corpus = tm_map(corpus, removeNumbers)

# Removal of stopwords

corpus = tm_map(corpus, removeWords, stopwords("english"))Loading required package: NLP

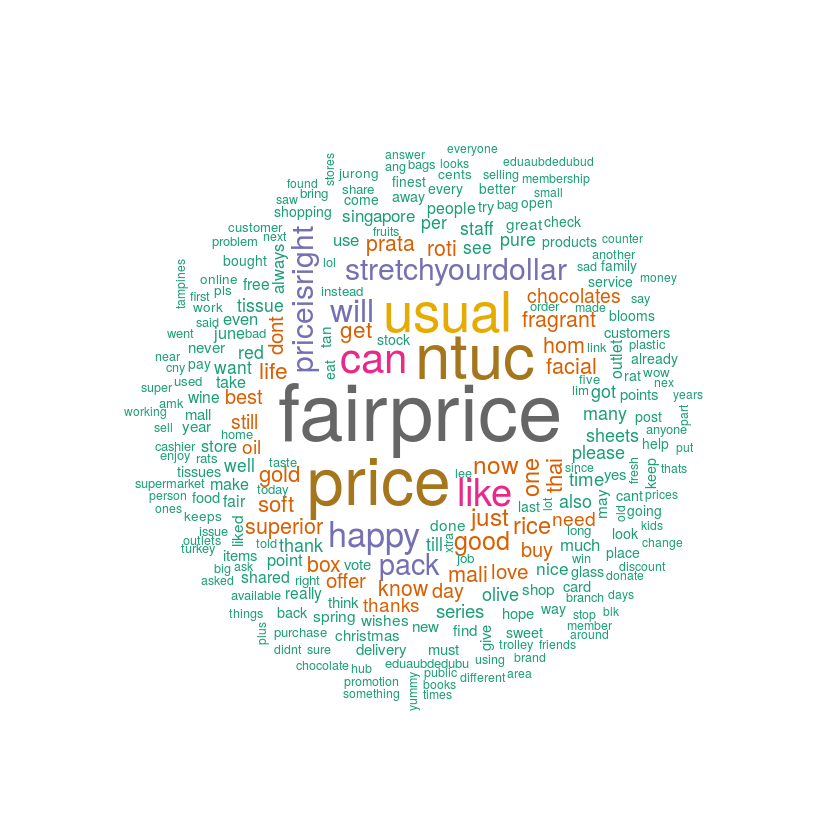

- Generating wordcloud

library(wordcloud)

wordcloud(corpus, random.order = F, min.freq=2, max.words=250,

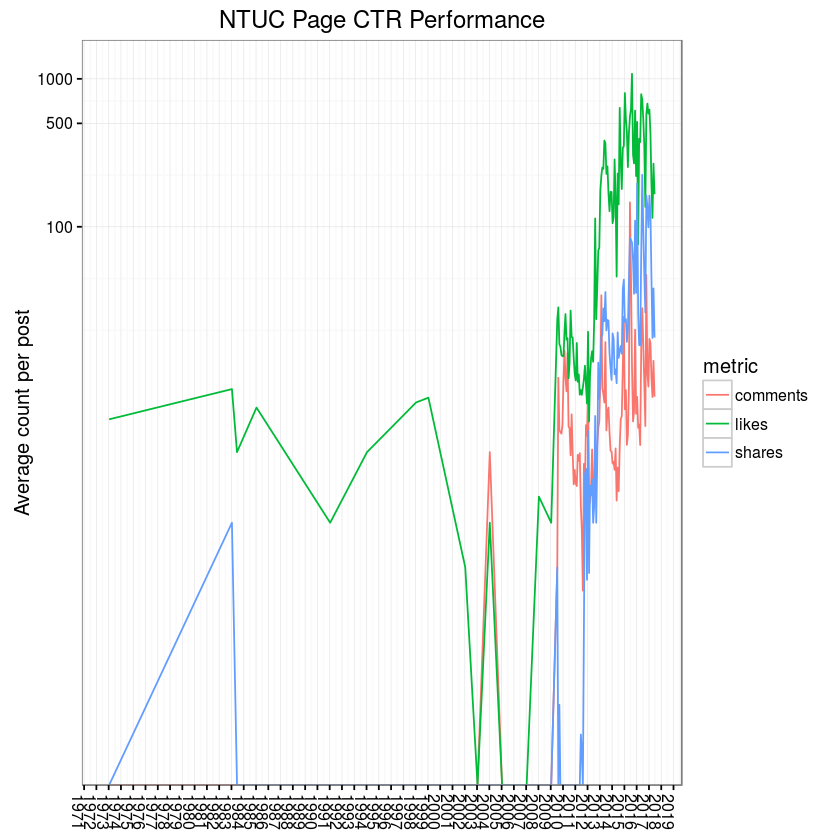

colors = brewer.pal(8, "Dark2"))- Creating function for aggregation matric

aggregate.matric <- function(metric){

m <- aggregate(ntucPosts[[paste0(metric, "_count")]],

list(month = ntucPosts$month),

mean)

m$month <- as.Date(paste0(m$month, "-15"))

m$metric <- metric

return(m)

}ntucPosts$timestamp <- format.facebook.date(ntucPosts$created_time)

ntucPosts$month <- format(ntucPosts$timestamp, "%Y-%m")df.list <- lapply(c("likes", "comments", "shares"), aggregate.matric)

df <- do.call(rbind, df.list)- Installing ggplot2 package

install.packages("ggplot2")

install.packages("scales")

library(ggplot2)

library(scales)Installing package into '/home/nbcommon/R'

(as 'lib' is unspecified)

Installing package into '/home/nbcommon/R'

(as 'lib' is unspecified)

Attaching package: 'ggplot2'

The following object is masked from 'package:NLP':

annotate

- Creating the trend plot

ggplot(df, aes(x = month, y = x, group = metric)) +

geom_line(aes(color = metric)) +

scale_x_date(date_breaks = "years", labels = date_format("%Y")) +

scale_y_log10("Average count per post", breaks = c(100, 500, 1000)) +

theme_bw() +

theme(axis.title.x = element_blank(), axis.text.x=element_text(angle = -90, hjust = 0)) +

ggtitle("NTUC Page CTR Performance") - Installing lubridate package to work with Date and Time

install.packages("lubridate")

library(lubridate)Installing package into '/home/nbcommon/R'

(as 'lib' is unspecified)

Attaching package: 'lubridate'

The following object is masked from 'package:base':

date

- Creating the relevant date and time variables

ntucPosts$datetime <- format.facebook.date(ntucPosts$created_time)

ntucPosts$dayweek <- wday(ntucPosts$datetime, label=T)

ntucPosts$dayint <- wday(ntucPosts$datetime)

ntucPosts$sghour <- with_tz(ntucPosts$datetime, "Asia/Singapore")

ntucPosts$hour <- hour(ntucPosts$sghour)- Installing heatmap package

install.packages("d3heatmap")

library(d3heatmap)Installing package into '/home/nbcommon/R'

(as 'lib' is unspecified)

also installing the dependency 'htmlwidgets'

- Creating heatmap of posts by day and time

heatmapFrame <- matrix(0,nrow=24,ncol=7);

rownames(heatmapFrame) <- 0:23

colnames(heatmapFrame) <- c("Sun", "Mon", "Tue", "Wed", "Thu", "Fri", "Sat")

for (i in 1:24) {

for (j in 1:7) {

heatmapFrame[i,j] <- nrow(subset(ntucPosts,dayint==j & hour==i-1))

}

}

d3heatmap(heatmapFrame, scale = "column",dendrogram = "none", color = scales::col_quantile("Blues", NULL, 5))You can amend the codes to create and show the day and time heatmap for customers' comments using the above provided code

- Finally, let's look at the posts frequency by the organisation

time.interval <- min(ntucPosts$datetime) %--% max(ntucPosts$datetime)

sampledays <- round(time.interval / ddays(1))

ntucPostFreq <- sampledays / nrow(ntucPosts)ntucPostFreq6.57096643752527

It seems that the organisation would create a new post every 6.5 days

Further analysis such as:

- comparision of organisation's post timing and customers' active timing can be performed to determine if the organisation is posting contents at the right time

- topic analysis (via the use of LDA) on customers' contents can be perform to determine what topics/subjects customers are talking about or are interested in