- CNCF Cloud Native Interactive Landscape

- Development Containers

- Turnkey * Containers

- k8s-for-docker-desktop

- There are many computing orchestrators

- They make decisions about when and where to "do work"

- We've done this since the dawn of computing: mainframe schedulers, Puppet, Terraform, AWS, Mesos, Hadoop, etc.

- Since 2014 we've had a resurgence of new orchestration projects because:

- Popularity of distributed computing

- Docker containers as an app package and isolated runtime

- We needed "many servers to act like one, and run many containers"

- And the Container Orchestrator was born

- Many open source projects have been created in the last 5 years to:

- Schedule running of containers on servers

- Dispatch them across many nodes

- Monitor and react to container and server health

- Provide storage, networking, proxy, security, and logging features

- Do all this in a declarative way, rather than imperative

- Provide APIs to allow extensibility and management

- Kubernetes, aka K8s

- Docker Swarm (and Swarm classic)

- Apache Mesos/Marathon

- Cloud Foundry

- Amazon ECS (not OSS, AWS-only)

- HashiCorp Nomad

- Many of these tools run on top of Docker Engine

- Kubernetes is the one orchestrator with many distributions

- Kubernetes "vanilla upstream" (not a distribution)

- Cloud-Managed distros: AKS, GKE, EKS, DOK...

- Self-Managed distros: RedHat OpenShift, Docker Enterprise, Rancher, Canonical Charmed, openSUSE Kubic...

- Vanilla installers: Kubeadm, kops, kubicorn...

- Local dev/test: Docker Desktop, minikube, microK8s

- CI testing: kind

- Special builds: Rancher K3s

- And many, many more... (86 as of June 2019)

- Kubernetes is a container management system

- It runs and manages containerized applications on a cluster (one or more servers)

- Often this is simply called "container orchestration"

- Sometimes shortened to Kube or K8s ("Kay-eights" or "Kates")

- Start 5 containers using image atseashop/api:v1.3

- Place an internal load balancer in front of these containers

- Start 10 containers using image atseashop/webfront:v1.3

- Place a public load balancer in front of these containers

- It's Black Friday (or Christmas), traffic spikes, grow our cluster and add containers

- New release! Replace my containers with the new image atseashop/webfront:v1.4

- Keep processing requests during the upgrade; update my containers one at a time

- Basic autoscaling

- Blue/green deployment, canary deployment

- Long running services, but also batch (one-off) and CRON-like jobs

- Overcommit our cluster and evict low-priority jobs

- Run services with stateful data (databases etc.)

- Fine-grained access control defining what can be done by whom on which resources

- Integrating third party services (service catalog)

- Automating complex tasks (operators)

- Ha ha ha ha

- OK, I was trying to scare you, it's much simpler than that ❤️

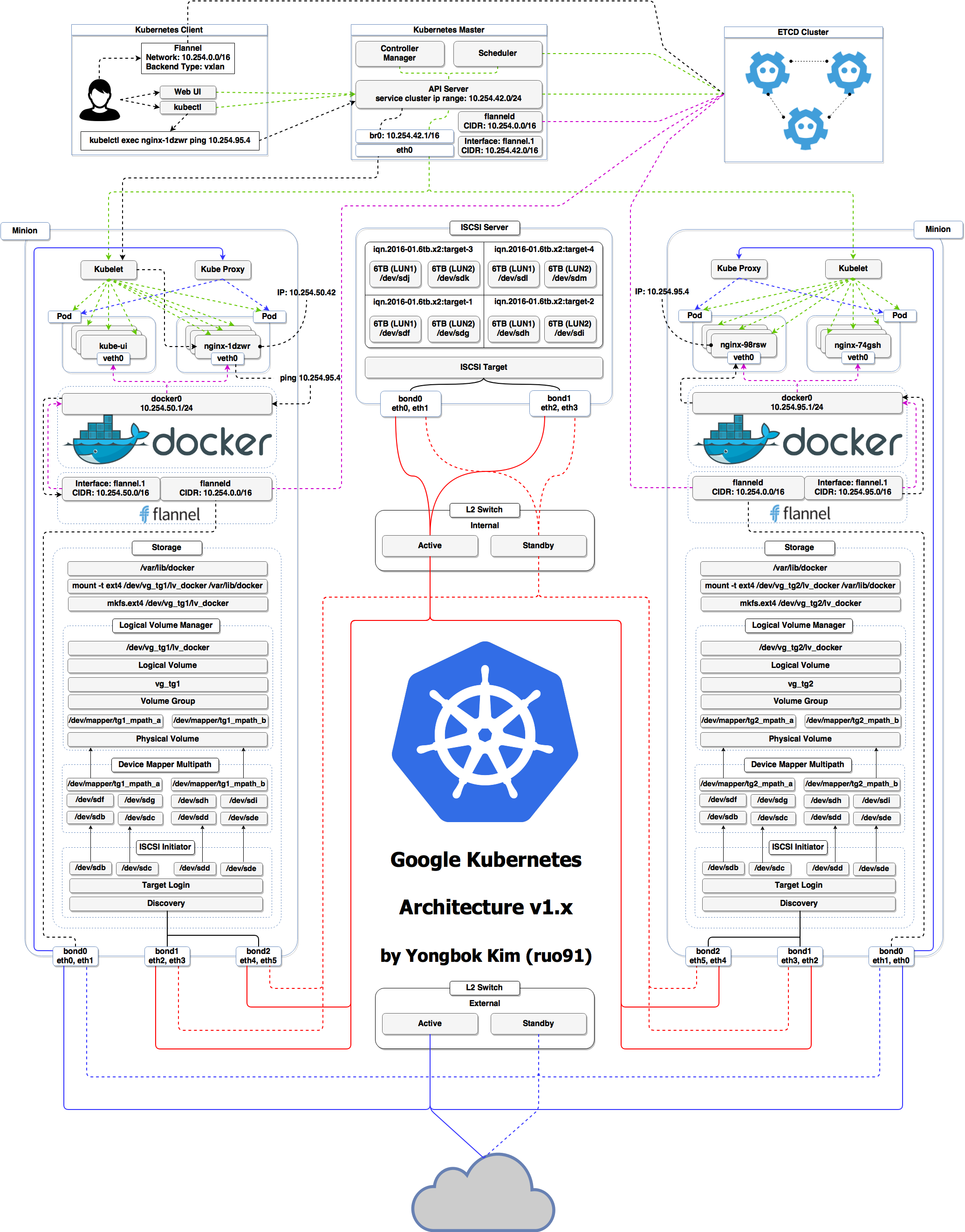

- The nodes executing our containers run a collection of services:

- a container engine (typically Docker)

- kubelet (the "node agent")

- kube-proxy (a necessary but not sufficient network component)

- Nodes were formerly called "minions"

- (You might see that word in older articles or documentation)

- The Kubernetes logic(its "brains") is a collection of services:

- the API server(our point of entry to everything!)

- core services like the scheduler and controller manager

- etcd (a highly available key/value store; the "database" of Kubernetes)

- Together, these services form the control plane of our cluster

- The control plane is also called the "master"

- It is common to reserve a dedicated node for the control plane

- (Except for single-node development clusters, like when using minikube)

- This node is then called a "master"

- (Yes, this is ambiguous: is the "master" a node, or the whole control plane?)

- Normal applications are restricted from running on this node

- (By using a mechanism called "taints")

- When high availability is required, each service of the control plane must be resilient

- The control plane is then replicated on multiple nodes

- (This is sometimes called a "multi-master" setup)

- The services of the control plane can run in or out of containers

- For instance: since etcd is a critical service, some people deploy it directly on a dedicated cluster (without containers)

- (This is illustrated on the first "super complicated" schema)

- In some hosted Kubernetes offerings (e.g. AKS, GKE, EKS), the control plane is invisible

- (We only "see" a Kubernetes API endpoint)

- In that case, there is no "master node"

For this reason, it is more accurate to say "control plane" rather than "master"

No!

- By default, Kubernetes uses the Docker Engine to run containers

- Or leverage other pluggable runtimes through the Container Runtime Interface

- We could also use rkt ("Rocket") from CoreOS (deprecated)

- containerd: maintained by Docker, IBM, and community

- Used by Docker Engine, microK8s, K3s, GKE, and standalone; has the ctr CLI

- CRI-O: maintained by Red Hat, SUSE, and community; a CRI runtime built specifically for Kubernetes (it does not use containerd)

- Used by OpenShift and Kubic, version matched to Kubernetes

Yes!

- In this course, we'll run our apps on a single node first

- We may need to build images and ship them around

- We can do these things without Docker

- (and get diagnosed with NIH syndrome)

- Docker is still the most stable container engine today

- (but other options are maturing very quickly)

NIH: Not Invented Here

- On your development environments, CI pipelines...:

- Yes, almost certainly

- On our production servers:

- Yes (today)

- Probably not (in the future)

More information about CRI on the Kubernetes blog

- We will interact with our Kubernetes cluster through the Kubernetes API

- The Kubernetes API is (mostly) RESTful

- It allows us to create, read, update, delete resources

- A few common resource types are:

- node (a machine, physical or virtual, in our cluster)

- pod (group of containers running together on a node)

- service (stable network endpoint to connect to one or multiple containers)
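Because the API is RESTful, every resource type listed above lives at a predictable URL path. The sketch below builds such paths. The helper name k8s_path is hypothetical. It assumes the usual layout: core resources (nodes, pods, services, configmaps) sit under /api/v1, while resources from named API groups (such as deployments in apps/v1) sit under /apis/<group>/<version>.

```shell
#!/usr/bin/env bash
# k8s_path GROUP_VERSION PLURAL [NAMESPACE] [NAME]
# Hypothetical helper: prints the REST path of a resource or collection.
#   GROUP_VERSION: "v1" for the core group, or e.g. "apps/v1" for a named group
#   NAMESPACE:     leave empty for cluster-scoped resources such as nodes
k8s_path() {
  local gv=$1 plural=$2 ns=${3:-} name=${4:-} path
  if [[ $gv == */* ]]; then
    path="/apis/$gv"        # named API group, e.g. /apis/apps/v1
  else
    path="/api/$gv"         # core ("legacy") group, e.g. /api/v1
  fi
  if [[ -n $ns ]]; then
    path+="/namespaces/$ns"
  fi
  path+="/$plural"
  if [[ -n $name ]]; then
    path+="/$name"
  fi
  printf '%s\n' "$path"
}

k8s_path v1 nodes                                 # /api/v1/nodes
k8s_path v1 configmaps kube-public cluster-info   # /api/v1/namespaces/kube-public/configmaps/cluster-info
k8s_path apps/v1 deployments default pingpong     # /apis/apps/v1/namespaces/default/deployments/pingpong
```

A path built this way can be fetched through kubectl, which handles authentication for us. For example: kubectl get --raw "$(k8s_path v1 nodes)"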

- Pods are a new abstraction!

- A pod can have multiple containers working together

- (But you usually only have one container per pod)

- Pod is our smallest deployable unit; Kubernetes can't manage containers directly

- IP addresses are associated with pods, not with individual containers

- Containers in a pod share localhost, and can share volumes

- Multiple containers in a pod are deployed together

- In reality, Docker doesn't know about pods, only containers/namespaces/volumes

- Best: Get an environment locally

- Docker Desktop (Win/macOS), minikube (Win Home), or microk8s (Linux)

- Small setup effort; free; flexible environments

- Requires 2GB+ of memory

- Good: Set up a cloud Linux host to run microk8s

- Great if you don't have the local resources to run Kubernetes

- Small setup effort; only free for a while

- My $50 DigitalOcean coupon lets you run Kubernetes free for a month

- Last choice: Use a browser-based solution

- Low setup effort; but host is short-lived and has limited resources

- Not all hands-on examples will work in the browser sandbox

- Docker Desktop (DD) is great for a local dev/test setup

- Requires modern macOS or Windows 10 Pro/Ent/Edu (no Home)

- Requires Hyper-V, and disables VirtualBox

Exercise

- Download Windows or macOS versions and install

- For Windows, ensure you pick "Linux Containers" mode

- Once running, enable Kubernetes in Settings/Preferences

kubectl get nodes

- A good local install option if you can't run Docker Desktop

- Inspired by Docker Toolbox

- Will create a local VM and configure latest Kubernetes

- Has lots of other features with its minikube CLI

- But, requires separate install of VirtualBox and kubectl

- May not work with older Windows versions (YMMV)

Exercise

- Download and install VirtualBox

- Download kubectl, and add to $PATH

- Download and install minikube

- Run minikube start to create and run a Kubernetes VM

- Run minikube stop when you're done

- Easy install and management of local Kubernetes

- Made by Canonical (Ubuntu). Installs using snap. Works nearly everywhere

- Has lots of other features with its microk8s CLI

- But, requires you to install snap if not on Ubuntu

- Runs on containerd rather than Docker, no biggie

- Needs alias setup for microk8s.kubectl

Exercise

- Install microk8s, change group permissions, then set alias in bashrc

sudo apt install snapd

sudo snap install microk8s --classic

microk8s.kubectl

sudo usermod -a -G microk8s <username>

echo "alias kubectl='microk8s.kubectl'" >> ~/.bashrc

Last choice: Use a browser-based solution

- Low setup effort; but host is short-lived and has limited resources

- Services are not always working right, and may not be up to date

- Not all hands-on examples will work in the browser sandbox

Exercise

- Use a prebuilt Kubernetes server at Katacoda

- Or set up a Kubernetes node at play-with-k8s.com

- Maybe try the latest OpenShift at learn.openshift.com

- See if instruqt works for a Kubernetes playground

- You can use shpod for examples

- shpod provides a shell running in a pod on the cluster

- It comes with many tools pre-installed (helm, stern, curl, jq...)

- These tools are used in many exercises in these slides

- shpod also gives you shell completion and a fancy prompt

- Create it with:

kubectl apply -f https://k8smastery.com/shpod.yaml

- Attach to shell with:

kubectl attach --namespace=shpod -ti shpod

- After finishing course:

kubectl delete -f https://k8smastery.com/shpod.yaml

- kubectl is (almost) the only tool we'll need to talk to Kubernetes

- It is a rich CLI tool around the Kubernetes API

- (Everything you can do with kubectl, you can do directly with the API)


- On our machines, there is a ~/.kube/config file with:

- the Kubernetes API address

- the path to our TLS certificates used to authenticate

- You can also use the --kubeconfig flag to pass a config file

- Or directly --server, --user, etc.

- kubectl can be pronounced "Cube C T L", "Cube cuttle", "Cube cuddle"...

- I'll be using the official name "Cube Control"

- Let's look at our Node resources with kubectl get!

Exercise

- Look at the composition of our cluster:

kubectl get node

- These commands are equivalent:

kubectl get no

kubectl get node

kubectl get nodes

- kubectl get can output JSON, YAML, or be directly formatted

Exercise

- Give us more info about the nodes:

kubectl get nodes -o wide

- Let's have some YAML:

kubectl get no -o yaml

See that kind: List at the end? It's the type of our result!

- It's super easy to build custom reports

Exercise

- Show the capacity of all our nodes as a stream of JSON objects:

kubectl get nodes -o json | jq ".items[] | {name:.metadata.name} + .status.capacity"

- kubectl describe needs a resource type and (optionally) a resource name

- It is possible to provide a resource name prefix (all matching objects will be displayed)

- kubectl describe will retrieve some extra information about the resource

Exercise

- Look at the information available for your node name with one of the following:

kubectl describe node/<node>

kubectl describe node <node>

kubectl describe node/docker-desktop

(We should notice a bunch of control plane pods.)

- We can list all available resource types by running

kubectl api-resources

- (in Kubernetes 1.10 and prior, this command used to be kubectl get)


- We can view the definition for a resource type with:

kubectl explain type

(e.g. kubectl explain node)

- We can view the definition of a field in a resource, for instance:

kubectl explain node.spec

- Or get the list of all fields and sub-fields:

kubectl explain node --recursive

- We can access the same information by reading the API documentation

- The API documentation is usually easier to read, but:

- it won't show custom types (like Custom Resource Definitions)

- We need to make sure that we look at the correct version

- kubectl api-resources and kubectl explain perform introspection

- (they communicate with the API server and obtain the exact type definitions)

- The most common resource names have three forms:

- singular (e.g. node, service, deployment)

- plural (e.g. nodes, services, deployments)

- short (e.g. no, svc, deploy)


- Some resources do not have a short name

- Endpoints only have a plural form

- (because even a single Endpoints resource is actually a list of endpoints)


- A service is a stable endpoint to connect to "something"

- (in the initial proposal, they were called "portals")

Exercise

- List the services on our cluster with one of these commands:

kubectl get services

kubectl get svc

There is already one service on our cluster: the Kubernetes API itself.

- Containers are manipulated through pods

- A pod is a group of containers:

- running together (on the same node)

- sharing resources (RAM, CPU; but also network, volumes)

Exercise

- List pods on our cluster:

kubectl get pods

- Namespaces allow us to segregate resources

Exercise

- List the namespaces on our cluster with one of these commands:

kubectl get namespaces

kubectl get namespace

kubectl get ns

You know what... This kube-system thing looks suspicious

In fact, I'm pretty sure it showed up earlier, when we did:

kubectl describe node <node-name>

- By default, kubectl uses the default namespace

- We can see resources in all namespaces with --all-namespaces

Exercise

- List the pods in all namespaces:

kubectl get pods --all-namespaces

- Since Kubernetes 1.14, we can also use -A as a shorter version:

kubectl get pods -A

Here are our system pods!

- etcd is our etcd server

- kube-apiserver is the API server

- kube-controller-manager and kube-scheduler are other control plane components

- coredns provides DNS-based service discovery (replacing kube-dns as of 1.11)

- kube-proxy is the (per-node) component managing port mappings and such

- <net name> is the optional (per-node) component managing the network overlay

- the READY column shows how many containers in each pod are ready, out of the total (e.g. 1/1)

- Note: this only shows containers, you won't see host services (e.g. microk8s)

- Also Note: you may see different namespaces depending on setup

- We can also look at a different namespace (other than default)

Exercise

- List only the pods in the kube-system namespace:

kubectl get pods --namespace=kube-system

kubectl get pods -n kube-system

- We can use -n/--namespace with almost every kubectl command

- Example: kubectl create --namespace=X to create something in namespace X

- We can use -A/--all-namespaces with most commands that manipulate multiple objects

- Examples:

- kubectl delete can delete resources across multiple namespaces

- kubectl label can add/remove/update labels across multiple namespaces

Exercise

- List the pods in the kube-public namespace:

kubectl -n kube-public get pods

Nothing!

kube-public is created by our installer & used for security bootstrapping.

- The only interesting object in kube-public is a ConfigMap named cluster-info

Exercise

- List ConfigMap objects:

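kubectl -n kube-public get configmaps

- Inspect cluster-info:

kubectl -n kube-public get configmap cluster-info -o yaml

Note the selfLink URI: /api/v1/namespaces/kube-public/configmaps/cluster-info (recent Kubernetes versions no longer populate selfLink, but the object is still served at that path)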

We can use that (later in kubectl context lectures)!


- Starting with Kubernetes 1.14, there is a kube-node-lease namespace

- (or in Kubernetes 1.13 if the NodeLease feature gate is enabled)

- That namespace contains one Lease object per node

- Node leases are a new way to implement node heartbeats

- (i.e. node regularly pinging the control plane to say "I'm alive!")

- For more details, see KEP-0009 or the node controller documentation

- First things first: we cannot run a container

- We are going to run a pod, and in that pod there will be a single container

- In that container in the pod, we are going to run a simple ping command

- Then we are going to start additional copies of the pod

- We need to specify at least a name and the image we want to use

Exercise

- Let's ping 1.1.1.1, Cloudflare's public DNS resolver:

kubectl run pingpong --image alpine ping 1.1.1.1

(Starting with Kubernetes 1.12, we get a message telling us that kubectl run is deprecated. Let's ignore it for now.)

- Let's look at the resources that were created by kubectl run

Exercise

- List most resource types:

kubectl get all

We should see the following things:

- deployment.apps/pingpong (the deployment that we just created)

- replicaset.apps/pingpong-xxxxxxxxx (a replica set created by the deployment)

- pod/pingpong-xxxxxxxx-yyyyyyy (a pod created by the replica set)

Note: as of 1.10.1, resource types are displayed in more detail.

- A deployment is a high-level construct

- allows scaling, rolling updates, rollbacks

- multiple deployments can be used together to implement a canary deployment

- delegates pod management to replica sets

- A replica set is a low-level construct

- makes sure that a given number of identical pods are running

- allows scaling

- rarely used directly

- Note: A replication controller is the (deprecated) predecessor of a replica set
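The replica set's core job, keeping "a given number of identical pods" running, is a reconciliation: compare the desired count with what is observed, then act on the difference. The sketch below models only that decision step. The name reconcile_action is hypothetical, and a real controller watches pod objects through the API server rather than comparing two numbers.

```shell
#!/usr/bin/env bash
# reconcile_action DESIRED CURRENT
# Hypothetical model of one replica set reconciliation step: prints the
# action that would bring the CURRENT number of pods back to DESIRED.
reconcile_action() {
  local desired=$1 current=$2
  if (( current < desired )); then
    echo "create $(( desired - current ))"
  elif (( current > desired )); then
    echo "delete $(( current - desired ))"
  else
    echo "none"
  fi
}

# One of 3 pods was deleted: the replica set creates a replacement.
reconcile_action 3 2    # create 1
```

The controller runs this comparison over and over, not just once, and that is what makes the system declarative. We state the desired count, and the controller keeps converging toward it.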

- kubectl run created a deployment, deployment.apps/pingpong

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deployment.apps/pingpong 1 1 1 1 10m

- That deployment created a replica set, replicaset.apps/pingpong-xxxxxxxxxxxxx

NAME DESIRED CURRENT READY AGE

replicaset.apps/pingpong-7c8bbcd9bc 1 1 1 10m

- That replica set created a pod, pod/pingpong-xxxxxxxxxxxxx-yyyy

NAME READY STATUS RESTARTS AGE

pod/pingpong-7c8bbcd9bc-6c9qz 1/1 Running 0 10m

- We'll see later how these folks play together for:

- scaling, high availability, rolling updates

- Let's use the kubectl logs command

- We will pass either a pod name, or a type/name

- (E.g. if we specify a deployment or replica set, it will get the first pod in it)

- Unless specified otherwise, it will only show logs of the first container in the pod

- (Good thing there's only one in ours!)

Exercise

- View the result of our ping command:

kubectl logs deploy/pingpong

- We can create additional copies of our container (I mean, our pod) with kubectl scale

Exercise

- Scale our pingpong deployment:

kubectl scale deploy/pingpong --replicas 3

- Note that this command does exactly the same thing:

kubectl scale deployment pingpong --replicas 3

Note: what if we tried to scale replicaset.apps/pingpong-xxxxxxx?

We could! But the deployment would notice it right away, and scale back to the ...

Streaming logs in real time

- Just like docker logs, kubectl logs supports convenient options:

- -f or --follow to stream logs in real time (à la tail -f)

- --tail to indicate how many lines you want to see (from the end)

- --since to get only logs newer than a relative duration (e.g. 5m), or --since-time to get only logs after a given timestamp

Exercise

- View the latest logs of our ping command:

kubectl logs deploy/pingpong --tail 1 --follow

- Leave that command running, so that we can keep an eye on these logs

- What happens if we restart kubectl logs?

Exercise

- Interrupt kubectl logs (with Ctrl-C)

- Restart it:

kubectl logs deploy/pingpong --tail 1 --follow

kubectl logs will warn us that multiple pods were found, and that it's showing us only one of them.

Let's leave kubectl logs running while we keep exploring.

- The deployment pingpong watches its replica set

- The replica set ensures that the right number of pods are running

- What happens if pods disappear?

Exercise

- In a separate window, watch the list of pods:

watch kubectl get pods

- Destroy the pod currently shown by kubectl logs:

kubectl delete pod pingpong-xxxxxxxxxxxxx-yyyyyy

- kubectl delete pod terminates the pod gracefully

- (sending it the TERM signal and waiting for it to shut down)

- As soon as the pod is in "Terminating" state, the Replica Set replaces it

- But we can still see the output of the "Terminating" pod in kubectl logs

- Until 30 seconds later, when the grace period expires

- The pod is then killed, and kubectl logs exits

- What if we wanted to start a "one-shot" container that doesn't get restarted?

- We could use kubectl run --restart=OnFailure or kubectl run --restart=Never

- These commands would create jobs or pods instead of deployments

- Under the hood, kubectl run invokes "generators" to create resource descriptions

- We could also write these resource descriptions ourselves (typically in YAML), and create them on the cluster with kubectl apply -f (discussed later)

- With kubectl run --schedule=..., we can also create cronjobs

- A Cron Job is a job that will be executed at specific intervals

- (the name comes from the traditional cronjobs executed by the UNIX crond)

- It requires a schedule, represented as five space-separated fields:

- minute [0,59]

- hour [0,23]

- day of the month [1,31]

- month of the year [1,12]

- day of the week ([0,6] with 0=Sunday)

- "*" means "all valid values"; "/N" means "every N"

- Example: "*/3 * * * *" means "every three minutes"
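To see how a single field of that schedule is read, here is a minimal sketch that handles only "*", "*/N" and a plain number. The function name cron_field_matches is hypothetical. Real cron syntax also accepts lists, ranges and steps over ranges, which this sketch ignores. A step is counted from the field's lowest value (0 for minutes, 1 for day of the month).

```shell
#!/usr/bin/env bash
# cron_field_matches SPEC VALUE [MIN]
# Hypothetical helper: succeeds if VALUE matches a single cron field SPEC.
# Only "*", "*/N" and a plain number are handled; real cron syntax also
# accepts lists (1,15), ranges (1-5) and steps over ranges (0-30/10).
# MIN is the field's lowest valid value (0 for minutes, 1 for day of month).
cron_field_matches() {
  local spec=$1 value=$2 min=${3:-0}
  case $spec in
    '*')   return 0 ;;
    '*/'*) (( (value - min) % ${spec#*/} == 0 )) ;;
    *)     (( value == spec )) ;;
  esac
}

# Which minutes of the hour does "*/3 * * * *" fire on?
for (( m = 0; m < 60; m++ )); do
  if cron_field_matches '*/3' "$m"; then printf '%s ' "$m"; fi
done
echo    # prints 0 3 6 9 ... 54 57 (twenty values)
```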

- Let's create a simple job to be executed every three minutes

- Cron Jobs need to terminate, otherwise they'd run forever

Exercise

- Create the Cron Job:

kubectl run --schedule="*/3 * * * *" --restart=OnFailure --image=alpine sleep 10

- Check the resource that was created:

kubectl get cronjobs

- At the specified schedule, the Cron Job will create a Job

- The Job will create a Pod

- The Job will make sure that the Pod completes

- (re-creating another one if it fails, for instance if its node fails)

Exercise

- Check the Jobs that are created:

kubectl get jobs

(It will take a few minutes before the first job is scheduled.)

- As we can see from the previous slide, kubectl run can do many things

- The exact type of resource created is not obvious

- To make things more explicit, it is better to use kubectl create:

- kubectl create deployment to create a deployment

- kubectl create job to create a job

- kubectl create cronjob to run a job periodically (since Kubernetes 1.14)

- Eventually, kubectl run will be used only to start one-shot pods

kubectl run

- easy way to get started

- versatile

kubectl create <resource>

- explicit, but lacks some features

- can't create a CronJob before Kubernetes 1.14

- can't pass command-line arguments to deployments

kubectl create -f foo.yaml or kubectl apply -f foo.yaml

- all features are available

- requires writing YAML

- Can we stream the logs of all our pingpong pods?

Exercise

- Combine -l and -f flags:

kubectl logs -l run=pingpong --tail 1 -f

Note: combining -l and -f is only possible since Kubernetes 1.14!

Let's try to understand why...

- Let's see what happens if we try to stream the logs for more than 5 pods

Exercise

- Scale up our deployment:

kubectl scale deployment pingpong --replicas=8

- Stream the logs:

kubectl logs -l run=pingpong --tail 1 -f

We see a message like the following one:

error: you are attempting to follow 8 log streams, but maximum allowed concurrency is 5, use --max-log-requests to increase the limit

- kubectl opens one connection to the API server per pod

- For each pod, the API server opens one extra connection to the corresponding kubelet

- If there are 1000 pods in our deployments, that's 1000 inbound + 1000 outbound connections on the API server

- This could easily put a lot of stress on the API server

- Prior to Kubernetes 1.14, it was decided not to allow multiple connections

- From Kubernetes 1.14, it is allowed, but limited to 5 connections

- (this can be changed with --max-log-requests)


- For more details about the rationale, see PR #67573

- We don't see which pod sent which log line

- If pods are restarted/replaced, the log stream stops

- If new pods are added, we don't see their logs

- To stream the logs of multiple pods, we need to write a selector

- There are external tools to address these shortcomings

- (e.g.: Stern)
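A selector such as run=pingpong or app=httpenv is matched against each pod's labels. The sketch below models only equality-based selectors: comma-separated key=value pairs that must all be present. The function name selector_matches is hypothetical. Real selectors also support forms such as key!=value and key in (a,b), which this sketch ignores. The sample labels below are assumed values for a pod created by kubectl run.

```shell
#!/usr/bin/env bash
# selector_matches SELECTOR LABELS
# Hypothetical model of an equality-based label selector: succeeds if every
# comma-separated key=value pair of SELECTOR also appears in LABELS.
selector_matches() {
  local selector=$1 labels=",$2," pair
  local IFS=','
  for pair in $selector; do
    # Surrounding commas prevent "run=ping" from matching "run=pingpong".
    [[ $labels == *",$pair,"* ]] || return 1
  done
  return 0
}

# Assumed labels of a pingpong pod (hash taken from the replica set shown earlier).
selector_matches "run=pingpong" "pod-template-hash=7c8bbcd9bc,run=pingpong" && echo match
```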

- The kubectl logs command has limitations:

- it cannot stream logs from multiple pods at a time

- when showing logs from multiple pods, it mixes them all together

- We are going to see how to do it better

- We could(if we were so inclined) write a program or script that would:

- take a selector as an argument

- enumerate all pods matching that selector (with kubectl get -l ...)

- fork one kubectl logs --follow ... command per container

- annotate the logs (the output of each kubectl logs ... process) with their origin

- preserve ordering by using kubectl logs --timestamps ... and merge the output

- We could do it, but thankfully, others did it for us already!
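The last two steps of that plan (annotate, then merge by timestamp) can be sketched in a few lines. This is a minimal sketch under stated assumptions, and annotate_and_merge is a hypothetical name. It works on logs already captured to files, so unlike Stern it does not follow live streams or pick up new pods. It also assumes every line starts with a fixed-width timestamp followed by a space, so that plain string order equals time order.

```shell
#!/usr/bin/env bash
# annotate_and_merge POD=FILE...
# Hypothetical helper. Each FILE holds one pod's `kubectl logs --timestamps`
# output: a timestamp, a space, then the message (fixed-width timestamps assumed).
# Every line is tagged with its pod name, then all lines are merged by time.
annotate_and_merge() {
  local spec pod file
  for spec in "$@"; do
    pod=${spec%%=*}
    file=${spec#*=}
    # Insert "[pod]" right after the timestamp, so field 1 stays the sort key.
    sed "s/^\([^ ]*\) /\1 [$pod] /" "$file"
  done | LC_ALL=C sort -s -k1,1
}

# Example capture (pod names are placeholders):
#   kubectl logs --timestamps pingpong-xxxxxxxxx-aaaaa > a.log
#   kubectl logs --timestamps pingpong-xxxxxxxxx-bbbbb > b.log
#   annotate_and_merge pingpong-a=a.log pingpong-b=b.log
```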

Stern is an open source project by Wercker

From the README:

Stern allows you to tail multiple pods on Kubernetes and multiple containers within the pod. Each result is color coded for quicker debugging.

The query is a regular expression so the pod name can easily be filtered and you don't need to specify the exact id (for instance omitting the deployment id). If a pod is deleted it gets removed from tail and if a new pod is added it automatically gets tailed.

Exactly what we need!

- Run stern (without arguments) to check if it's installed

$ stern

Tail multiple pods and containers from Kubernetes

Usage:

stern pod-query [flags]

- If it is not installed, the easiest method is to download a binary release

- The following commands will install Stern on a Linux Intel 64 bit machine:

sudo curl -L -o /usr/local/bin/stern \
https://github.com/wercker/stern/releases/download/1.11.0/stern_linux_amd64

sudo chmod +x /usr/local/bin/stern

- On OS X, just

brew install stern

- There are two ways to specify the pods whose logs we want to see:

- with -l followed by a selector expression (like with many kubectl commands)

- with a "pod query," i.e. a regex used to match pod names

- These two ways can be combined if necessary

Exercise

- View the logs for all the pingpong containers:

stern pingpong

- The --tail N flag shows the last N lines for each container

- (instead of showing the logs since the creation of the container)

- The -t/--timestamps flag shows timestamps

- The --all-namespaces flag is self-explanatory

Exercise

- View what's up with the pingpong containers:

stern --tail 1 --timestamps pingpong

Let's clean up before we start the next lecture!

Exercise

- remove our deployment and cronjob:

kubectl delete deployment/pingpong cronjob/sleep

- They say, "a picture is worth one thousand words."

- The following 19 slides show what really happens when we run:

kubectl run web --image=nginx --replicas=3

- kubectl expose creates a service for existing pods

- A service is a stable address for a pod (or a bunch of pods)

- If we want to connect to our pod(s), we need to create a service

- Once a service is created, CoreDNS will allow us to resolve it by name

- (i.e. after creating service hello, the name hello will resolve to something)


- There are different types of services, detailed on the following slides:

ClusterIP, NodePort, LoadBalancer, ExternalName

ClusterIP (default type)

- a virtual IP address is allocated for the service (in an internal, private range)

- this IP address is reachable only from within the cluster (nodes and pods)

- our code can connect to the service using the original port number

NodePort

- a port is allocated for the service (by default, in the 30000-32767 range)

- that port is made available on all our nodes and anybody can connect to it

- our code must be changed to connect to that new port number

These service types are always available.

Under the hood: kube-proxy is using a userland proxy and a bunch of iptables rules.

LoadBalancer

- an external load balancer is allocated for the service

- the load balancer is configured accordingly

- (e.g.: a NodePort service is created, and the load balancer sends traffic to that port)


- available only when the underlying infrastructure provides some "load balancer as a service"

- (e.g. AWS, Azure, GCE, OpenStack...)

ExternalName

- the DNS entry managed by CoreDNS will just be a CNAME to a provided record

- no port, no IP address, nothing else is allocated


- Since ping doesn't have anything to connect to, we'll have to run something else

- We could use the nginx official image, but...

- ...we wouldn't be able to tell the backends from each other!

- We are going to use bretfisher/httpenv, a tiny HTTP server written in Go

- bretfisher/httpenv listens on port 8888

- It serves its environment variables in JSON format

- The environment variables will include HOSTNAME, which will be the pod name

- (and therefore, will be different on each backend)

- We could do kubectl run httpenv --image=bretfisher/httpenv ...

- But since kubectl run is changing, let's see how to use kubectl create instead

Exercise

- In another window, watch the pods(to see when they are created)

kubectl get pods -w

- Create a deployment for this very lightweight HTTP server:

kubectl create deployment httpenv --image=bretfisher/httpenv

- Scale it to 10 replicas:

kubectl scale deployment httpenv --replicas=10

Exercise

# t1

kubectl get pods -w

# t2

kubectl create deployment httpenv --image=bretfisher/httpenv

# t1

ctrl+c

kubectl get pods

kubectl get all

clear

kubectl get pods -w

# t2

kubectl scale deployment httpenv --replicas=10

kubectl expose deployment httpenv --port 8888

kubectl get service

- You can assign IP addresses to services, but they are still layer 4

- (i.e. a service is not an IP address; it's an IP address + protocol + port)

- This is caused by the current implementation of kube-proxy

- (it relies on mechanisms that don't support layer 3)

- As a result: you have to indicate the port number for your service

- Running services with arbitrary port (or port ranges) requires hacks

- (e.g. host networking mode)

- We will now send a few HTTP requests to our pods

Exercise

- Run shpod if not on a Linux host, so we can access the internal ClusterIP

kubectl apply -f https://bret.run/shpod.yml

kubectl attach --namespace=shpod -it shpod

- Let's obtain the IP address that was allocated for our service, programmatically:

IP=$(kubectl get svc httpenv -o go-template --template '{{ .spec.clusterIP }}')

echo $IP

- Send a few requests:

curl http://$IP:8888/

- Too much output? Filter it with jq:

curl -s http://$IP:8888/ | jq .HOSTNAME

exit

kubectl delete -f https://bret.run/shpod.yml

kubectl delete deployment/httpenv

- Sometimes, we want to access our scaled services directly:

- if we want to save a tiny little bit of latency (typically less than 1ms)

- if we need to connect over arbitrary ports (instead of a few fixed ones)

- if we need to communicate over a protocol other than UDP or TCP

- if we want to decide how to balance the requests client-side

- ...

- In that case, we can use a headless service

- A headless service is obtained by setting the clusterIP field to None

- (Either with --cluster-ip=None, or by providing a custom YAML)


- As a result, the service doesn't have a virtual IP address

- Since there is no virtual IP address, there is no load balancer either

- CoreDNS will return the pods' IP addresses as multiple A records

- This gives us an easy way to discover all the replicas for a deployment
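With a headless service, there is no virtual IP. The client receives every pod address and decides for itself where each request goes. The sketch below shows a simple client-side round-robin over such a list. The helper pick_backend is hypothetical. The lookup in the comments assumes a headless service named httpenv-headless in the default namespace, the default cluster.local domain, and dig being available in the shell.

```shell
#!/usr/bin/env bash
# pick_backend N ADDRESS...
# Hypothetical client-side round-robin: prints the address that request
# number N (0, 1, 2, ...) should be sent to.
pick_backend() {
  local n=$1
  shift
  local backends=("$@")
  (( ${#backends[@]} > 0 )) || return 1
  printf '%s\n' "${backends[n % ${#backends[@]}]}"
}

# Inside the cluster, the addresses could come from the A records of a
# headless service (assumed name httpenv-headless, namespace default), e.g.:
#   kubectl expose deployment httpenv --name httpenv-headless --port 8888 --cluster-ip=None
#   addrs=$(dig +short httpenv-headless.default.svc.cluster.local)
addrs="10.1.0.5 10.1.0.6 10.1.0.7"   # sample pod IPs
for i in 0 1 2 3; do
  pick_backend "$i" $addrs          # .5, .6, .7, then back to .5
done
```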

- A service has a number of "endpoints"

- Each endpoint is a host + port where the service is available

- The endpoints are maintained and updated automatically by Kubernetes

Exercise

- Check the endpoints that Kubernetes has associated with our httpenv service:

kubectl describe service httpenv

- In the output, there will be a line starting with Endpoints:

- That line will list a bunch of addresses in host:port format

- When we have many endpoints, our display commands truncate the list

kubectl get endpoints

- If we want to see the full list, we can use a different output:

kubectl get endpoints httpenv -o yaml

- These IP addresses should match the addresses of the corresponding pods:

kubectl get pods -l app=httpenv -o wide

- endpoints is the only resource that cannot be singular

kubectl get endpoint

# error: the server doesn't have a resource type "endpoint"

- This is because the type itself is plural (unlike every other resource)

- There is no endpoint object: type Endpoints struct

- The type doesn't represent a single endpoint, but a list of endpoints

- The default type (ClusterIP) only works for internal traffic

- If we want to accept external traffic, we can use one of these:

- NodePort (expose a service on a TCP port between 30000-32767)

- LoadBalancer (provision a cloud load balancer for our service)

- ExternalIP (use one node's external IP address)

- Ingress (a special mechanism for HTTP services)

We'll see NodePorts and Ingresses more in detail later.

Let's clean up before we start the next lecture!

Exercise

- remove our httpenv resources:

kubectl delete deployment/httpenv service/httpenv

- TL;DR:

- Our cluster (nodes and pods) is one big flat IP network.

- In detail:

- all nodes must be able to reach each other, without NAT

- all pods must be able to reach each other, without NAT

- pods and nodes must be able to reach each other, without NAT

- each pod is aware of its IP address (no NAT)

- pod IP addresses are assigned by the network implementation

- Kubernetes doesn't mandate any particular implementation

- Everything can reach everything

- No address translation

- No port translation

- No new protocol

- The network implementation can decide how to allocate addresses

- IP addresses don't have to be "portable" from a node to another

- (For example, we can use a subnet per node and use a simple routed topology)

- The specification is simple enough to allow many different implementations
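The "subnet per node" idea can be sketched with Python's ipaddress module. First, carve a cluster-wide pod range into one /24 per node. Then find a pod's node from its IP alone, which is what makes a plain routed topology possible. The 10.244.0.0/16 range and the node names are example values; Kubernetes does not mandate them.

```python
import ipaddress


def per_node_subnets(cluster_cidr: str, nodes: list, prefix: int = 24) -> dict:
    """Give each node its own non-overlapping slice of the pod range."""
    subnets = ipaddress.ip_network(cluster_cidr).subnets(new_prefix=prefix)
    plan = {}
    for node in nodes:
        try:
            plan[node] = str(next(subnets))
        except StopIteration:
            raise ValueError(f"{cluster_cidr} has no /{prefix} left for {node}") from None
    return plan


def node_for_pod_ip(plan: dict, pod_ip: str):
    """In a routed topology, the subnet alone tells us which node hosts a pod."""
    addr = ipaddress.ip_address(pod_ip)
    for node, cidr in plan.items():
        if addr in ipaddress.ip_network(cidr):
            return node
    return None  # not a pod address in this cluster
```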

- Everything can reach everything

- if you want security, you need to add network policies

- the network implementation you use needs to support them

- There are literally dozens of implementations out there

- (15 are listed in the Kubernetes documentation)

- Pods have layer 3 (IP) connectivity, but services are layer 4 (TCP or UDP)

- (Services map to a single UDP or TCP port; no port ranges or arbitrary IP packets)

- kube-proxy is on the data path when connecting to a pod or container, and it's not particularly fast (relies on userland proxying or iptables)

- The nodes we are using have been set up to use kubenet, Calico, or something else

- Don't worry about the warning about kube-proxy performance, unless you:

- routinely saturate 10G network interfaces

- count packet rates in millions per second

- run high-traffic VOIP or gaming platforms

- do weird things that involve millions of simultaneous connections

- (in which case you're already familiar with kernel tuning)

- if necessary, there are alternatives to kube-proxy; e.g. kube-router

- Most Kubernetes clusters use CNI "plugins" to implement networking

- When a pod is created, Kubernetes delegates the network setup to these plugins

- (it can be a single plugin, or a combination of plugins, each doing one task)

- Typically, CNI plugins will:

- allocate an IP address (by calling an IPAM plugin)

- add a network interface into the pod's network namespace

- configure the interface as well as required routes, etc.
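The IPAM step can be pictured as a small allocator that hands out free addresses from the node's pod subnet. This is a toy, in-memory Python model, not the API of any real CNI plugin. Reserving the first host address for a gateway is an assumption that mirrors common bridge setups.

```python
import ipaddress


class SubnetIPAM:
    """Toy IPAM: allocate and release pod IPs from one node's subnet."""

    def __init__(self, cidr: str):
        hosts = list(ipaddress.ip_network(cidr).hosts())
        self.gateway = hosts[0]   # assumption: first host IP is the bridge/gateway
        self.free = hosts[1:]     # addresses still available for pods
        self.allocated = {}       # pod id -> address

    def allocate(self, pod_id: str) -> str:
        if pod_id in self.allocated:  # idempotent: same pod, same IP
            return str(self.allocated[pod_id])
        if not self.free:
            raise RuntimeError("subnet exhausted")
        addr = self.free.pop(0)
        self.allocated[pod_id] = addr
        return str(addr)

    def release(self, pod_id: str) -> None:
        addr = self.allocated.pop(pod_id, None)
        if addr is not None:
            self.free.append(addr)  # back in the pool for later pods
```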

- The "pod-to-pod network" or "pod network"

- provides communication between pods and nodes

- is generally implemented with CNI plugins

- The "pod-to-service network"

- provides internal communication and load balancing

- is generally implemented with kube-proxy (or maybe kube-router)

- Network policies:

- provide firewalling and isolation

- can be bundled with the "pod network" or provided by another component

- Inbound traffic can be handled by multiple components:

- something like kube-proxy or kube-router (for NodePort services)

- load balancers (ideally, connected to the pod network)

- It is possible to use multiple pod networks in parallel

- (with "meta-plugins" like CNI-Genie or Multus)

- Some solutions can fill multiple roles

- (e.g. kube-router can be set up to provide the pod network and/or network policies and/or replace kube-proxy)

- It is a DockerCoin miner!

- No, you can't buy coffee with DockerCoins

- How DockerCoins works:

- generate a few random bytes

- hash these bytes

- increment a counter (to keep track of speed)

- repeat forever!

- DockerCoins is not a cryptocurrency

- (the only common points are "randomness", "hashing" and "coins" in the name)

- DockerCoins is made of 5 services

- rng = web service generating random bytes

- hasher = web service computing hash of POSTed data

- worker = background process calling rng and hasher

- webui = web interface to watch progress

- redis = data store (holds a counter updated by worker)

- These 5 services are visible in the application's Compose file, dockercoins-compose.yml

- worker invokes web service rng to generate random bytes

- worker invokes web service hasher to hash these bytes

- worker does this in an infinite loop

- Every second, worker updates redis to indicate how many loops were done

- webui queries redis, and computes and exposes "hashing speed" in our browser

(See diagram on next slide!)

How does each service find out the address of the other ones?

- We do not hard-code IP addresses in the code

- We do not hard-code FQDNs in the code, either

- We just connect to a service name, and container-magic does the rest

- (And by container-magic, we mean "a crafty, dynamic, embedded DNS server")

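from redis import Redis  # redis-py client
import requests

redis = Redis("redis")  # "redis" is resolved by DNS to the redis service

def get_random_bytes():
    r = requests.get("http://rng/32")
    return r.content

def hash_bytes(data):
    r = requests.post("http://hasher/",
                      data=data,
                      headers={"Content-Type": "application/octet-stream"})
    return r.text  # hasher answers with the hash as text

(Full source code available here)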

- worker will log HTTP requests to rng and hasher

- rng and hasher will log incoming HTTP requests

- webui will give us a graph of coins mined per second

- Compose is (still) great for local development

- You can test this app if you have Docker and Compose installed

- If not, remember play-with-docker.com

Exercise

- Download the compose file somewhere and run it

curl -o docker-compose.yml https://k8smastery.com/dockercoins-compose.yml

docker-compose up

- View the webui on localhost:8000 or click the 8080 link in PWD

- The worker doesn't update the counter after every loop, but up to once per second

- The speed is computed by the browser, checking the counter about once per second

- Between two consecutive updates, the counter will increase either by 4, or by 0

- The perceived speed will therefore be 4 - 4 - 4 - 0 - 4 - 4 - 0 etc.

- What can we conclude from this?

- "I'm clearly incapable of writing good frontend code!" 😁

- If we interrupt Compose (with ^C), it will politely ask the Docker Engine to stop the app

- The Docker Engine will send a TERM signal to the containers

- If the containers do not exit in a timely manner, the Engine sends a KILL signal

Exercise

- Stop the application by hitting ^C

- For development, Docker has build, ship, and run features

- Now that we want to run on a cluster, things are different

- Kubernetes doesn't have a build feature built-in

- The way to ship (pull) images to Kubernetes is to use a registry
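A registry is addressed through the image name itself, so it helps to see how short names get expanded. The rules walked through in the next bullets can be sketched in Python. This is a simplification that follows the slides and uses index.docker.io as the default registry; recent Docker versions display the canonical docker.io name instead. It also skips the validation and edge cases that real image-reference parsers handle.

```python
DEFAULT_REGISTRY = "index.docker.io"  # default registry, as named in these slides


def expand_image_name(ref: str) -> str:
    """Expand a short image reference into registry/path:tag (simplified)."""
    first, sep, rest = ref.partition("/")
    # A first component containing "." or ":" (or equal to "localhost")
    # names a registry host; otherwise the default registry is implied.
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, path = first, rest
    else:
        registry, path = DEFAULT_REGISTRY, ref
    # Official images on the default registry live under "library/".
    if registry == DEFAULT_REGISTRY and "/" not in path:
        path = "library/" + path
    # Add the default tag unless a tag or digest is already present.
    last = path.rsplit("/", 1)[-1]
    if ":" not in last and "@" not in last:
        path += ":latest"
    return f"{registry}/{path}"
```

For example, expand_image_name("alpine") gives index.docker.io/library/alpine:latest. The names used in the docker pull/build/push examples come back unchanged, because they already carry a registry host and a tag.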

- What happens when we execute docker run alpine?

- If the Engine needs to pull the alpine image, it expands it into library/alpine

- library/alpine is expanded into index.docker.io/library/alpine

- The Engine communicates with index.docker.io to retrieve library/alpine:latest

- To use something other than index.docker.io, we specify it in the image name

- Examples:

docker pull gcr.io/google-containers/alpine-with-bash:1.0

docker build -t registry.mycompany.io:5000/myimage:awesome .

docker push registry.mycompany.io:5000/myimage:awesome

- There are many options!

- Manually:

- build locally (with docker build or otherwise)

- push to the registry

- Automatically:

- build and test locally

- when ready, commit and push to a code repository

- the code repository notifies an automated build system

- that system gets the code, builds it, pushes the image to the registry

- There are SAAS products like Docker Hub, Quay, GitLab...

- Each major cloud provider has an option as well

- (ACR on Azure, ECR on AWS, GCR on Google Cloud...)

- There are also commercial products to run our own registry

- (Docker Enterprise DTR, Quay, GitLab, JFrog Artifactory...)

- And open source options, too!

- (Quay, Portus, OpenShift OCR, Gitlab, Harbor, Kraken...)

- (I don't mention Docker Distribution here because it's too basic)

- When picking a registry, pay attention to:

- Its build system

- Multi-user auth and mgmt (RBAC)

- Storage features (replication, caching, garbage collection)

- Create one deployment for each component

- (hasher, redis, rng, webui, worker)

- Expose deployments that need to accept connections

- (hasher, redis, rng, webui)

- For redis, we can use the official redis image

- For the 4 others, we need to build images and push them to some registry

- For everyone's convenience, we took care of building DockerCoins images

- We pushed these images to Docker Hub, under the dockercoins user

- These images are tagged with a version number, v0.1

- The full image names are therefore:

- dockercoins/hasher:v0.1

- dockercoins/rng:v0.1

- dockercoins/webui:v0.1

- dockercoins/worker:v0.1

- We can now deploy our code (as well as a redis instance)

Exercise

- Deploy redis:

kubectl create deployment redis --image=redis

- Deploy everything else:

kubectl create deployment hasher --image=dockercoins/hasher:v0.1

kubectl create deployment rng --image=dockercoins/rng:v0.1

kubectl create deployment worker --image=dockercoins/worker:v0.1

kubectl create deployment webui --image=dockercoins/webui:v0.1

- After waiting for the deployment to complete, let's look at the logs!

- (Hint: use kubectl get deploy -w to watch deployment events)

Exercise

- Look at some logs:

kubectl logs deploy/rng

kubectl logs deploy/worker

kubectl logs deploy/worker --follow

🤔 rng is fine... But not worker.

Oh right! We forgot to expose

- Three deployments need to be reachable by others: hasher, redis, rng

- worker doesn't need to be exposed

- webui will be dealt with later

Exercise

- Expose each deployment, specifying the right port:

kubectl expose deployment redis --port 6379

kubectl expose deployment rng --port 80

kubectl expose deployment hasher --port 80

$ minikube start --vm-driver=hyperkit --registry-mirror=https://registry.docker-cn.com --image-mirror-country=cn --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers

$ minikube start --image-mirror-country=cn --iso-url=https://kubernetes.oss-cn-hangzhou.aliyuncs.com/minikube/iso/minikube-v1.6.0.iso --registry-mirror=https://dockerhub.azk8s.cn --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers --vm-driver="hyperv" --hyperv-virtual-switch="minikube v switch" --memory=4096

- Now we would like to access the Web UI

- We will expose it with a NodePort

- (just like we did for the registry)

Exercise

- Create a NodePort service for the Web UI:

kubectl expose deploy/webui --type=NodePort --port=80

- Check the port that was allocated:

kubectl get svc

webui NodePort 10.96.39.74 80:31900/TCP 9s

(e.g. http://localhost:31900)

- Our ultimate goal is to get more DockerCoins

- (i.e. increase the number of loops per second shown on the web UI)

- Let's look at the architecture again:

- We're at 4 hashes a second. Let's ramp this up!

- The loop is done in the worker; perhaps we could try adding more workers?

- All we have to do is scale the worker Deployment

Exercise

- Open two new terminals to check what's going on with pods and deployments:

kubectl get pods -w

kubectl get deployments -w

- Now, create more worker replicas:

kubectl scale deployment worker --replicas=2

- If 2 workers give us 2x speed, what about 3 workers?

Exercise

- Scale the worker Deployment further:

kubectl scale deployment worker --replicas=3

- The graph in the web UI should go up again.

- (This is looking great! We're gonna be RICH!)

- Let's see if 10 workers give us 10x speed!

Exercise

- Scale the worker Deployment to a bigger number:

kubectl scale deployment worker --replicas=10

# kubectl scale deployment rng --replicas=2

# kubectl scale deployment hasher --replicas=2

The graph will peak at 10 hashes/second. (We can add as many workers as we want: we will never go past 10 hashes/second.)

- If this was high-quality, production code, we would have instrumentation

- (Datadog, Honeycomb, New Relic, statsd, Sumologic,...)

- It's not

- Perhaps we could benchmark our web services?

- (with tools like ab, or even simpler, httping)

- We want to check hasher and rng

- We are going to use httping

- It's just like ping, but using HTTP GET requests

- (it measures how long it takes to perform one GET request)
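httping is a standalone tool. To make "how long it takes to perform one GET request" concrete, here is a rough Python approximation using urllib. It is not httping and does not reproduce its output. The function name is made up, and each timing includes connection setup and reading the body.

```python
import time
import urllib.request

# Direct connections only: going through an HTTP proxy would distort the timings.
_opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def http_ping(url: str, count: int = 3, timeout: float = 5.0) -> list:
    """Time `count` sequential GET requests to `url`, in milliseconds."""
    timings = []
    for _ in range(count):
        start = time.perf_counter()
        with _opener.open(url, timeout=timeout) as resp:
            resp.read()  # include the body transfer, not just the headers
        timings.append((time.perf_counter() - start) * 1000.0)
    return timings
```

Given a ClusterIP, a call like http_ping("http://<ClusterIP>/") roughly corresponds to running httping -c 3 against that address.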

- It's used like this:

httping [-c count] http://host:port/path

- Or even simpler:

httping ip.ad.dr.ess

- We will use httping on the ClusterIP addresses of our services

- We can simply check the output of kubectl get services

- Or do it programmatically, as in the example below

Exercise

- Retrieve the IP addresses:

HASHER=$(kubectl get svc hasher -o go-template={{.spec.clusterIP}})

RNG=$(kubectl get svc rng -o go-template={{.spec.clusterIP}})

Now we can access the IP addresses of our services through $HASHER and $RNG.

Exercise

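- Remember to use shpod on macOS and Windows:

kubectl apply -f https://bret.run/shpod.yml

kubectl attach --namespace=shpod -ti shpod

- (Shell variables don't carry over into shpod: once attached, run the two HASHER= and RNG= commands again inside it)

- Check the response times for both services: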

httping -c 3 $HASHER

httping -c 3 $RNG

- The bottleneck seems to be rng

- What if we don't have enough entropy and can't generate enough random numbers?

- We need to scale out the rng service on multiple machines!

Note: this is a fiction! We have enough entropy. But we need a pretext to scale out.

- Oops we only have one node for learning.🤔

- Let's pretend and I'll explain along the way.

(in fact, the code of rng uses /dev/urandom, which never runs out of entropy...

...and is just as good as /dev/random)

- So far, we created resources with the following commands:

- kubectl run

- kubectl create deployment

- kubectl expose

- We can also create resources directly with YAML manifests

kubectl create -f whatever.yaml

- creates resources if they don't exist

- if resources already exist, doesn't alter them (and displays an error message)

kubectl apply -f whatever.yaml

- creates resources if they don't exist

- if resources already exist, updates them (to match the definition provided by the YAML file)

- stores the manifest as an annotation in the resource

- The manifest can contain multiple resources separated by ---

kind: ...
apiVersion: ...
metadata:
  name: ...
  ...
spec:
  ...
---
kind: ...
apiVersion: ...
metadata:
  name: ...
  ...
spec:
  ...

- The manifest can also contain a list of resources

apiVersion: v1
kind: List
items:
- kind: ...
  apiVersion: ...
  ...
- kind: ...
  apiVersion: ...
  ...

- We provide a YAML manifest with all the resources for DockerCoins (Deployments and Services)

- We can use it if we need to deploy or redeploy DockerCoins
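The form where documents are separated by --- is easy to process with a script. Here is a naive Python splitter. It assumes separators are bare --- lines at column 0. A real YAML library (for example, PyYAML's safe_load_all) also copes with comments or tags after ---, explicit ... end markers and other edge cases.

```python
def split_manifests(text: str) -> list:
    """Split a multi-document YAML manifest on bare '---' separator lines."""
    docs, current = [], []
    for line in text.splitlines():
        if line.rstrip() == "---":  # separator must start at column 0
            docs.append("\n".join(current))
            current = []
        else:
            current.append(line)
    docs.append("\n".join(current))
    # Drop empty documents (a leading '---' produces one, for instance).
    return [d for d in docs if d.strip()]
```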

Exercise

- Deploy or redeploy DockerCoins:

kubectl apply -f https://k8smastery.com/dockercoins.yaml

- Note the warnings if you already had the resources created

- This is because we didn't use apply before

- This is OK for us learning, so ignore the warnings

- Generally in production you want to stick with one method or the other

- Kubernetes resources can also be viewed with an official web UI

- That dashboard is usually exposed over HTTPS (this requires obtaining a proper TLS certificate)

- Dashboard users need to authenticate

- We are going to take a dangerous shortcut

- We could (and should) use Let's Encrypt...

- ...but we don't want to deal with TLS certificates

- We could (and should) learn how authentication and authorization work...

- ...but we will use a guest account with admin access instead

Yes, this will open our cluster to all kinds of shenanigans. Don't do this at home.

- We are going to deploy that dashboard with one single command

- This command will create all the necessary resources (the dashboard itself, the HTTP wrapper, the admin/guest account)

- All these resources are defined in a YAML file

- All we have to do is load that YAML file with kubectl apply -f

Exercise

- Create all the dashboard resources, with the following command:

kubectl apply -f https://k8smastery.com/insecure-dashboard.yaml

kubectl get svc dashboard

- We have three authentication options at this point:

- token (associated with a role that has appropriate permissions)

- kubeconfig (e.g. using the ~/.kube/config file)

- "skip" (use the dashboard "service account")

- Let's use "skip": we're logged in!

By the way, we just added a backdoor to our Kubernetes cluster!

- The steps that we just showed you are for educational purpose only!

- If you do that on your production cluster, people can and will abuse it

- For an in-depth discussion about securing the dashboard

- check this excellent post on Heptio's blog

- On Minikube/microK8s, the dashboard can be enabled with easy commands:

minikube dashboard and microk8s.enable dashboard

- Kube Web View

- read-only dashboard

- optimized for "troubleshooting and incident response"

- see vision and goals for details

- Kube Ops View

- "provides a common operational picture for multiple Kubernetes clusters"

- Your Kubernetes distro comes with one!

- Cloud-provided control-planes often don't come with one.

- When we do kubectl apply -f <URL>, we create arbitrary resources

- Resources can be evil; imagine a deployment that...

- starts bitcoin miners on the whole cluster

- hides in a non-default namespace

- bind-mounts our nodes' filesystem

- inserts SSH keys in the root account (on the node)

- encrypts our data and ransoms it

- curl | sh is convenient

- It's safe if you use HTTPS URLs from trusted sources

- kubectl apply -f is convenient

- It's safe if you use HTTPS URLs from trusted sources

- Example: the official setup instructions for most pod networks

- We want to scale rng in a way that is different from how we scaled worker

- We want one (and exactly one) instance of rng per node

- We do not want two instances of rng on the same node

- We will do that with a daemon set

- Can't we just do kubectl scale deployment rng --replicas=...?

- Nothing guarantees that the rng containers will be distributed evenly

- If we add nodes later, they will not automatically run a copy of rng

- If we remove (or reboot) a node, one rng container will restart elsewhere (and we will end up with two instances of rng on the same node)

- Daemon sets are great for cluster-wide, per-node processes:

- kube-proxy

- CNI network plugins

- monitoring agents

- hardware management tools (e.g. SCSI/FC HBA agents)

- etc.

- They can also be restricted to run only on some nodes

- Unfortunately, as of Kubernetes 1.15, the CLI cannot create daemon sets

- More precisely: it doesn't have a subcommand to create a daemon set

- But any kind of resource can always be created by providing a YAML description:

kubectl apply -f foo.yaml- How do we create the YAML file for our daemon set?

- option 1: read the docs

- option 2:

viour way out of it

- Let's start with the YAML file for the current rng resource

Exercise

- Dump the rng resource in YAML:

kubectl get deploy/rng -o yaml > rng.yml

- Edit rng.yml

- What if we just changed the kind field? (It can't be that easy, right?)

Exercise

- Change kind: Deployment to kind: DaemonSet

- Save, quit

- Try to create our new resource:

kubectl apply -f rng.yml

- The core of the error is:

error validating data:

[ValidationError(DaemonSet.spec):

unknown field "replicas" in io.k8s.api.extensions.v1beta1.DaemonSetSpec,

...

- Obviously, it doesn't make sense to specify a number of replicas for a daemon set

- Workaround: fix the YAML

- remove the replicas field

- remove the strategy field (which defines the rollout mechanism for a deployment)

- remove the progressDeadlineSeconds field (also used by the rollout mechanism)

- remove the status: {} line at the end
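The same edit can be scripted. Here is a Python sketch that works on the JSON form of the manifest, e.g. saved from kubectl get deploy/rng -o json; kubectl apply -f accepts JSON too. It applies exactly the changes listed above and nothing more. The function and constant names are ours.

```python
import copy

# Fields from the list above that a DaemonSet spec does not accept.
DEPLOYMENT_ONLY_SPEC_FIELDS = ("replicas", "strategy", "progressDeadlineSeconds")


def deployment_to_daemonset(manifest: dict) -> dict:
    """Return a copy of a Deployment manifest rewritten as a DaemonSet."""
    ds = copy.deepcopy(manifest)  # leave the input untouched
    ds["kind"] = "DaemonSet"
    spec = ds.get("spec", {})
    for key in DEPLOYMENT_ONLY_SPEC_FIELDS:
        spec.pop(key, None)
    ds.pop("status", None)  # the 'status: {}' line at the end
    return ds
```

To use it, load the file with json.load, pass the result through deployment_to_daemonset, write it back with json.dump, and then run kubectl apply -f on the new file.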

- We could also tell Kubernetes to ignore these errors and try anyway

- The --force flag's actual name is --validate=false

Exercise

- Try to load our YAML file and ignore errors:

kubectl apply -f rng.yml --validate=false

Wait... Now, can it be that easy?

- Did we transform our deployment into a daemonset?

Exercise

- Look at the resources that we have now:

kubectl get all

- You can have different resource types with the same name

(i.e. a deployment and a daemon set both named rng)

- We still have the old rng deployment

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/rng 1/1 1 1 20h

- But now we have the new rng daemon set as well

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/rng 1 1 1 1 1 <none> 7s

- If we check with kubectl get pods, we see:

- one pod for the deployment (named rng-xxxxxxxxxxx-yyyyy)

- one pod per node for the daemon set (named rng-zzzzz)

NAME READY STATUS RESTARTS AGE

pod/rng-58596fdbd-lqlsp 1/1 Running 0 20h

pod/rng-qnq4n 1/1 Running 0 7s

The daemon set created one pod per node.

In a multi-node setup, masters usually have taints preventing pods from running there.

(To schedule a pod on those nodes anyway, the pod will require appropriate tolerations)
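Here is a simplified Python model of where daemon set pods land: one pod per node, skipping any node that has a NoSchedule taint the pod doesn't tolerate. Taints are reduced to (key, effect) pairs, and tolerations must match them exactly. Real toleration matching also supports operators such as Exists, values and other effects. The master taint key below is just an example.

```python
def daemonset_nodes(nodes: dict, tolerations: set) -> list:
    """Return the nodes that should each run exactly one daemon set pod.

    nodes maps a node name to its list of (key, effect) taints;
    tolerations is the set of (key, effect) pairs the pod template tolerates.
    """
    eligible = []
    for name, taints in nodes.items():
        blocking = [t for t in taints if t[1] == "NoSchedule" and t not in tolerations]
        if not blocking:
            eligible.append(name)
    return sorted(eligible)
```

Unlike kubectl scale, the result depends only on the node list. Adding a node adds exactly one pod, and no node ever gets two.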

- Look at the web UI

- The graph should now go above 10 hashes per second!

- It looks like the newly created pods are serving traffic correctly

- How and why did this happen?

(We didn't do anything special to add them to the

rngservice load balancer!)

- The rng service is load balancing requests to a set of pods

- That set of pods is defined by the selector of the rng service

Exercise

- Check the selector in the rng service definition:

kubectl describe service rng

- The selector is app=rng

- It means "all the pods having the label app=rng" (They can have additional labels as well, that's OK!)

- We can use selectors with many kubectl commands

- For instance, with kubectl get, kubectl logs, kubectl delete... and more

Exercise

- Get the list of pods matching selector app=rng:

kubectl get pods -l app=rng

kubectl get pods --selector app=rng

But... why do these pods (in particular, the new ones) have this app=rng label?

Where do labels come from?

- When we create a deployment with kubectl create deployment rng, this deployment gets the label app=rng

- The replica sets created by this deployment also get the label app=rng

- The pods created by these replica sets also get the label app=rng

- When we created the daemon set from the deployment, we re-used the same spec

- Therefore, the pods created by the daemon set get the same labels

- When we use kubectl run stuff, the label is run=stuff instead

- We would like to remove a pod from the load balancer

- What would happen if we removed that pod, with kubectl delete pod ...?

- It would be re-created immediately (by the replica set or the daemon set)

- What would happen if we removed the app=rng label from that pod?

- It would also be re-created immediately

- Why?!?

- The "mission" of a replica set is:

- "Make sure that there is the right number of pods matching this spec!"

- The "mission" of a daemon set is:

- "Make sure that there is a pod matching this spec on each node!"

- In fact, replica sets and daemon sets do not check pod specifications

- They merely have a selector, and they look for pods matching that selector

- Yes, we can fool them by manually creating pods with the "right" labels

- Bottom line: if we remove our app=rng label...

- ...The pod "disappears" for its parent, which re-creates another pod to replace it

- Since both the rng daemon set and the rng replica set use app=rng...

- ...Why don't they "find" each other's pods?

- Replica sets have a more specific selector, visible with kubectl describe

- (It looks like app=rng, pod-template-hash=abcd1234)

- Daemon sets also have a more specific selector, but it's invisible

- (It looks like app=rng, controller-revision-hash=abcd1234)

- As a result, each controller only "sees" the pods it manages
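A toy reconcile loop in Python shows why relabeling a pod triggers a replacement. The controller counts only the pods that match its selector and creates new ones until the count is right. The names and hash values are made up. Real controllers also track owner references and delete surplus pods.

```python
import itertools


def matches(selector: dict, labels: dict) -> bool:
    """Every key/value pair of the selector must be present on the pod."""
    return all(labels.get(k) == v for k, v in selector.items())


class ToyReplicaSet:
    def __init__(self, name: str, selector: dict, replicas: int):
        self.name = name
        self.selector = selector
        self.replicas = replicas
        self._ids = itertools.count()

    def reconcile(self, pods: dict) -> list:
        """Create pods (name -> labels) until enough match; return new names."""
        owned = [n for n, labels in pods.items() if matches(self.selector, labels)]
        created = []
        for _ in range(self.replicas - len(owned)):
            name = f"{self.name}-{next(self._ids)}"
            pods[name] = dict(self.selector)  # new pods carry the selector labels
            created.append(name)
        return created
```

If the app label is removed from the only matching pod, the next reconcile creates a replacement. A daemon set pod labelled app=rng, controller-revision-hash=... is never counted, because it lacks the pod-template-hash label.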

- Currently, the rng service is defined by the app=rng selector

- The only way to remove a pod is to remove or change the app label

- ...But that will cause another pod to be created instead!

- What's the solution?

- We need to change the selector of the rng service!

- Let's add another label to that selector (e.g. enabled=yes)

- If a selector specifies multiple labels, they are understood as a logical AND (In other words: the pods must match all the labels)

- Kubernetes has support for advanced, set-based selectors (But these cannot be used with services, at least not yet!)

1. Add the label enabled=yes to all our rng pods

2. Update the selector for the rng service to also include enabled=yes

3. Toggle traffic to a pod by manually adding/removing the enabled label

4. Profit!

Note: if we swap steps 1 and 2, it will cause a short service disruption, because there will be a period of time during which the service selector won't match any pod. During that time, requests to the service will time out. By doing things in the order above, we guarantee that there won't be any interruption.
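Here is a small Python sketch of why the order matters. It counts the pods matched by the service selector after each step, in both orders. The two pod names come from the sample output earlier; everything else is a simplification.

```python
def matched_after_each_step(label_first: bool) -> list:
    """Count the pods selected by the service after each step of the plan."""
    pods = {"rng-58596fdbd-lqlsp": {"app": "rng"}, "rng-qnq4n": {"app": "rng"}}
    selector = {"app": "rng"}

    def add_label():        # step 1: label all app=rng pods with enabled=yes
        for labels in pods.values():
            labels["enabled"] = "yes"

    def update_selector():  # step 2: add enabled: "yes" to the service selector
        selector["enabled"] = "yes"

    steps = [add_label, update_selector] if label_first else [update_selector, add_label]
    counts = []
    for step in steps:
        step()
        # a pod is selected only if it carries ALL the selector's labels (logical AND)
        counts.append(sum(
            all(labels.get(k) == v for k, v in selector.items())
            for labels in pods.values()
        ))
    return counts
```

In the recommended order the count never drops ([2, 2]). Swapping the steps leaves a window with zero matching pods ([0, 2]).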

- We want to add the label enabled=yes to all pods that have app=rng

- We could edit each pod one by one with kubectl edit...

- ... Or we could use kubectl label to label them all

- kubectl label can use selectors itself

Exercise

- Add enabled=yes to all pods that have app=rng:

kubectl label pods -l app=rng enabled=yes

- We need to edit the service specification

- Reminder: in the service definition, we will see app: rng in two places

- the label of the service itself (we don't need to touch that one)

- the selector of the service (that's the one we want to change)

Exercise

- Update the service to add enabled: yes to its selector:

kubectl edit service rng

- The change gets rejected. Why? YAML parsers try to help us:

- xyz is the string "xyz"

- 42 is the integer 42

- yes is the boolean value true

- If we want the string "42" or the string "yes", we have to quote them

- So we have to use enabled: "yes"

For a good laugh: if we had used "ja", "oui", "si"... as the value, it would have worked.
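The surprise comes from YAML 1.1's implicit typing of plain (unquoted) scalars. Here is a deliberately small Python model that covers only the cases on this slide:

- the boolean words follow the YAML 1.1 bool type
- integers are limited to plain decimals
- anything else stays a string

Real parsers resolve more forms (floats, nulls, octal, timestamps), and the word lists vary slightly between parsers.

```python
import re

# YAML 1.1 boolean words (plain, i.e. unquoted, scalars only).
YAML11_TRUE = {"y", "Y", "yes", "Yes", "YES", "true", "True", "TRUE", "on", "On", "ON"}
YAML11_FALSE = {"n", "N", "no", "No", "NO", "false", "False", "FALSE", "off", "Off", "OFF"}
DECIMAL_INT = re.compile(r"[-+]?(0|[1-9][0-9]*)")


def resolve_scalar(raw: str):
    """Resolve one scalar value roughly the way a YAML 1.1 parser would."""
    if len(raw) >= 2 and raw[0] == raw[-1] and raw[0] in "\"'":
        return raw[1:-1]  # quoted: always a string
    if raw in YAML11_TRUE:
        return True
    if raw in YAML11_FALSE:
        return False
    if DECIMAL_INT.fullmatch(raw):
        return int(raw)
    return raw  # anything else stays a string
```

A service selector only accepts string values, so the boolean produced by enabled: yes is rejected. enabled: "yes" stays a string, and so do "ja" or "oui" even without quotes.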

Exercise

- Update the service to add enabled: "yes" to its selector:

kubectl edit service rng

- This time it should work!

- If we did everything correctly, the web UI shouldn't show any change.

- We want to disable the pod that was created by the deployment

- All we have to do is remove the enabled label from that pod

- To identify that pod, we can use its name

- ... Or rely on the fact that it's the only one with a pod-template-hash label

- Good to know:

- kubectl label ... foo= doesn't remove a label (it sets it to an empty string)

- to remove label foo, use kubectl label ... foo-

- to change an existing label, we would need to add --overwrite
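The rules above can be modelled in a few lines of Python. This is a hypothetical helper that mimics the behaviour described on this slide; it is not kubectl's implementation.

```python
def apply_label_args(labels: dict, args: list, overwrite: bool = False) -> dict:
    """Return a new label dict after applying kubectl-label-style arguments."""
    result = dict(labels)
    for arg in args:
        if arg.endswith("-") and "=" not in arg:
            result.pop(arg[:-1], None)  # 'foo-' removes label foo
            continue
        key, sep, value = arg.partition("=")
        if not sep:
            raise ValueError(f"invalid label argument: {arg!r}")
        if key in result and result[key] != value and not overwrite:
            raise ValueError(f"{key!r} already has a value ({result[key]}), and overwrite is false")
        result[key] = value  # 'foo=' sets foo to the empty string
    return result
```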

Exercise

- In one window, check the logs of that pod:

POD=$(kubectl get pod -l app=rng,pod-template-hash -o name)

kubectl logs --tail 1 --follow $POD

(We should see a steady stream of HTTP logs)

- In another window, remove the label from the pod:

kubectl label pod -l app=rng,pod-template-hash enabled-

(The stream of HTTP logs should stop immediately)

There might be a slight change in the web UI (since we removed a bit of capacity from the rng service). If we remove more pods, the effect should be more visible.

- If we scale up our cluster by adding new nodes, the daemon set will create more pods

- These pods won't have the enabled=yes label

- If we want these pods to have that label, we need to edit the daemon set spec

- We can do that with e.g. kubectl edit daemonset rng

- When a pod is misbehaving, we can delete it: another one will be recreated

- But we can also change its labels

- It will be removed from the load balancer (it won't receive traffic anymore)

- Another pod will be recreated immediately

- But the problematic pod is still here, and we can inspect and debug it

- We can even re-add it to the rotation if necessary

- (Very useful to troubleshoot intermittent and elusive bugs)

- Conversely, we can add pods matching a service's selector

- These pods will then receive requests and serve traffic

- Examples:

- one-shot pod with all debug flags enabled, to collect logs

- pods created automatically, but added to rotation in a second step (by setting their label accordingly)

- This gives us building blocks for canary and blue/green deployments

Let's clean up before we start the next lecture!

Exercise

- remove our DockerCoins resources (for now):

kubectl delete -f https://k8smastery.com/dockercoins.yaml

kubectl delete daemonset/rng

- To use Kubernetes is to "live in YAML"!

- It's more important to learn the foundations than to memorize all YAML keys (hundreds+)

- There are various ways to generate YAML with Kubernetes, e.g.:

- kubectl run

- kubectl create deployment (and a few other kubectl create variants)

- kubectl expose

- These commands use "generators" because the API only accepts YAML (actually JSON)

- Pro: They are easy to use

- Con: They have limits

- When and why do we need to write our own YAML?

- How do we write YAML from scratch?

- And maybe, what is YAML?

- It's technically a superset of JSON, designed for humans

- JSON was good for machines, but not for humans

- Spaces set the structure. One space off and game over

- Remember: spaces, not tabs. Ever!

- Two spaces is standard, but four spaces works too

- You don't have to learn all YAML features, but key concepts you need:

- Key/Value Pairs

- Array/Lists

- Dictionary/Maps

- Good online tutorials exist here, here, here, and YouTube here

- Can be in YAML or JSON, but YAML is 100%.

- Each file contains one or more manifests

- Each manifest describes an API object(deployment, service, etc.)

- Each manifest needs four parts (root key:values in the file)

apiVersion:

kind:

metadata:

spec:

- This is a single manifest that creates one Pod

apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  containers:
  - name: nginx
    image: nginx:1.17.3

apiVersion: v1
kind: Service
metadata:
  name: mynginx
spec:
  type: NodePort
  ports:
  - port: 80
  selector:
    app: mynginx
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mynginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: mynginx
  template:
    metadata:
      labels:
        app: mynginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.17.3

- Advanced (and even not-so-advanced) features require us to write YAML:

- pods with multiple containers

- resource limits

- healthchecks

- many other resource options

- Other resource types don't have their own commands!

- DaemonSets

- StatefulSets

- and more!

- How do we access these features?

- Output YAML from existing resources

- Create a resource(e.g. Deployment)

- Dump its YAML with kubectl get -o yaml ...

- Edit the YAML

- Use kubectl apply -f ... with the YAML file to:

- update the resource (if it's the same kind)

- create a new resource (if it's a different kind)

- Or...we have the docs, with good starter YAML

- StatefulSet, DaemonSet, ConfigMap, and a ton more on Github

- Or... we can use -o yaml --dry-run

- We can use the -o yaml --dry-run option combo with run and create

Exercise

- Generate the YAML for a Deployment without creating it:

kubectl create deployment web --image nginx -o yaml --dry-run- Generate the YAML for a Namespace without creating it:

kubectl create namespace awesome-app -o yaml --dry-run- We can clean up the YAML even more if we want

- (for instance, we can remove the

creationTimestampand empty dicts)

- Available kubectl create subcommands (as listed by kubectl create --help):

clusterrole # Create a ClusterRole.

clusterrolebinding # Create a ClusterRoleBinding for a particular ClusterRole.

configmap # Create a configmap from a local file, directory or literal

cronjob # Create a cronjob with the specified name

deployment # Create a deployment with the specified name

job # Create a job with the specified name

namespace # Create a namespace with the specified name

poddisruptionbudget # Create a pod disruption budget with the specified name

priorityclass # Create a priorityclass with the specified name

quota # Create a quota with the specified name

role # Create a role with single rule

rolebinding # Create a RoleBinding for a particular Role or ClusterRole

secret # Create a secret using specified subcommand

service # Create a service using specified subcommand

serviceaccount # Create a service account with the specified name

- Ensure you use valid create commands with required options for each

- Paying homage to Kelsey Hightower's "Kubernetes The Hard Way"

- A reminder about manifests:

- Each file contains one or more manifests

- Each manifest describes an API object (Deployment, Service, etc.)

- Each manifest needs four parts (root key: value pairs in the file)

apiVersion: # find with "kubectl api-versions"
kind: # find with "kubectl api-resources"
metadata:
spec: # find with "kubectl explain pod"

- Those three kubectl commands, plus the API docs, are all we'll need
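To make the four-part rule concrete, here is a small Python sketch that reports which root keys a hand-written manifest is missing. It assumes the manifest is written as JSON (kubectl accepts JSON as well as YAML) so only the standard library is needed; the missing_parts function is hypothetical. It mirrors the simplified rule above: some kinds, such as ConfigMap, carry data instead of spec, so treat a missing spec as a hint rather than a hard error.

```python
import json

# The four root keys from the slide; kubectl explain <kind> tells you what goes under spec.
ROOT_KEYS = ("apiVersion", "kind", "metadata", "spec")


def missing_parts(manifest):
    """Return the root keys (plus metadata.name) that a manifest lacks."""
    missing = [key for key in ROOT_KEYS if key not in manifest]
    # metadata needs at least a name (the "minimum").
    if "metadata" in manifest and "name" not in manifest["metadata"]:
        missing.append("metadata.name")
    return missing


if __name__ == "__main__":
    pod = json.loads("""
    {"apiVersion": "v1", "kind": "Pod",
     "metadata": {"name": "nginx"},
     "spec": {"containers": [{"name": "nginx", "image": "nginx:1.17.3"}]}}
    """)
    print(missing_parts(pod))                              # []
    print(missing_parts({"kind": "Pod", "metadata": {}}))  # ['apiVersion', 'spec', 'metadata.name']
```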

- Find the resource kind you want to create (api-resources)

- Find the latest apiVersion your cluster supports for that kind (api-versions)

- Give it a name in metadata (minimum)

- Dive into the spec of that kind

- kubectl explain <kind>.spec

- kubectl explain <kind> --recursive

- Browse the API Reference docs for your cluster version to supplement

- Use --dry-run and --server-dry-run for testing

- kubectl create and delete until you get it right

kubectl api-resources

kubectl api-versions

kubectl explain pod

kubectl explain pod.spec

kubectl explain pod.spec.volumes

kubectl explain pod.spec --recursive

- Using YAML (instead of kubectl run/create/etc.) allows us to be declarative

- The YAML describes the desired state of our cluster and applications

- YAML can be stored, versioned, archived (e.g. in git repositories)

- To change resources, change the YAML files (instead of using kubectl edit/scale/label/etc.)

- Changes can be reviewed before being applied (with code reviews, pull requests...)

- This workflow is sometimes called "GitOps" (there are tools like Weave Flux or GitKube to facilitate it)

- Get started with kubectl run/create/expose/etc.

- Dump the YAML with kubectl get -o yaml

- Tweak that YAML and kubectl apply it back

- Store that YAML for reference (for further deployments)

- Feel free to clean up the YAML

- remove fields you don't know

- check that it still works!

That YAML will be useful later when using e.g. Kustomize or Helm

- Use generic linters to check proper YAML formatting

- yamllint.com

- codebeautiful.org/yaml-validator

- For humans without kubectl, use a web Kubernetes YAML validator: kubeyaml.com

- In CI, you might use CLI tools

- YAML linter: pip install yamllint (github.com/adrienverge/yamllint)

- Kubernetes validator: kubeval (github.com/instrumenta/kubeval)


- We'll learn about Kubernetes cluster-specific validation with kubectl later

- We already talked about using --dry-run for building YAML

- Let's talk more about options for testing YAML

- Including testing against the live cluster API!

- The --dry-run option can also be used with kubectl apply

- However, it can be misleading (it doesn't do a "real" dry run)

- Let's see what happens in the following scenario:

- generate the YAML for a Deployment

- tweak the YAML to transform it into a DaemonSet

- apply that YAML to see what would actually be created

Exercise

- Generate the YAML for a deployment:

kubectl create deployment web --image=nginx -o yaml > web.yaml

- Change the kind in the YAML to make it a DaemonSet

- Ask kubectl what would be applied:

kubectl apply -f web.yaml --dry-run --validate=false -o yaml

The resulting YAML doesn't represent a valid DaemonSet.

- Since Kubernetes 1.13, we can use server-side dry run and diffs

- Server-side dry run will do all the work, but not persist to etcd

- (all validation and mutation hooks will be executed)

Exercise

- Try the same YAML files as earlier, with server-side dry run:

kubectl apply -f web.yaml --server-dry-run --validate=false -o yaml

(newer kubectl versions replace --server-dry-run with --dry-run=server)

- The resulting YAML doesn't have the replicas field anymore

- Instead, it has the fields expected in a DaemonSet

- The YAML is verified much more extensively

- The only step that is skipped is "write to etcd"

- YAML that passes server-side dry run should apply successfully (unless the cluster state changes by the time the YAML is actually applied)

- Validating or mutating hooks that have side effects can also be an issue

- Kubernetes 1.13 also introduced kubectl diff

- kubectl diff does a server-side dry run, and shows differences

Exercise

- Try kubectl diff on a simple Pod YAML:

curl -O https://k8smastery.com/just-a-pod.yaml

kubectl apply -f just-a-pod.yaml

# edit the image tag to :1.17

kubectl diff -f just-a-pod.yaml

Note: we don't need to specify --validate=false here.
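kubectl diff presents its result as a unified diff (by default it runs the system diff command with -u). The Python sketch below uses difflib to produce the same kind of output for two versions of a Pod manifest, to show what the image tag edit looks like in that format. The Pod contents are assumptions, not the actual just-a-pod.yaml, and the unified helper is hypothetical.

```python
import difflib

# Hypothetical live object (as the API server has it) and the edited local file.
LIVE = """apiVersion: v1
kind: Pod
metadata:
  name: hello
spec:
  containers:
  - name: hello
    image: nginx
"""
EDITED = LIVE.replace("image: nginx\n", "image: nginx:1.17\n")


def unified(old, new):
    """Return a unified diff between two manifest texts ("" if they are identical)."""
    return "".join(difflib.unified_diff(
        old.splitlines(keepends=True),
        new.splitlines(keepends=True),
        fromfile="live", tofile="merged"))


if __name__ == "__main__":
    print(unified(LIVE, EDITED))
```

Lines starting with "-" are what the cluster has now; lines starting with "+" are what it would have after kubectl apply.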

Let's clean up before we start the next lecture!

Exercise

- remove our "hello" pod:

kubectl delete -f just-a-pod.yaml

- By default (without rolling updates), when a scaled resource is updated:

- new pods are created

- old pods are terminated

- ...all at the same time

- if something goes wrong,

- With rolling updates, when a Deployment is updated, it happens progressively

- The Deployment controls multiple ReplicaSets

- Each ReplicaSet is a group of identical Pods (with the same image, arguments, parameters)

- During the rolling update, we have at least two ReplicaSets:

- the "new" set (corresponding to the "target" version)

- at least one "old" set

- We can have multiple "old" sets (if we start another update before the first one is done)
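How do a Service and the ReplicaSets know which Pods belong to them? Through label selectors: a Pod matches when every key/value in the selector appears in its labels. The Deployment controller also adds a pod-template-hash label to each ReplicaSet it creates, so each ReplicaSet only matches its own Pods, while a Service selecting on app alone keeps routing to both old and new Pods during the rollout. The Python sketch below models that equality-based matching; the hash values and the selector_matches function are made up for illustration.

```python
def selector_matches(selector, labels):
    """Equality-based selector: every key/value in selector must be present in labels."""
    return all(key in labels and labels[key] == value for key, value in selector.items())


# Hypothetical Pods from the old and new ReplicaSets of the "mynginx" Deployment.
old_pod = {"app": "mynginx", "pod-template-hash": "5d9c7b6f4"}
new_pod = {"app": "mynginx", "pod-template-hash": "7f8c9d5b2"}

service_selector = {"app": "mynginx"}
old_rs_selector = {"app": "mynginx", "pod-template-hash": "5d9c7b6f4"}

if __name__ == "__main__":
    print(selector_matches(service_selector, old_pod), selector_matches(service_selector, new_pod))  # True True
    print(selector_matches(old_rs_selector, old_pod), selector_matches(old_rs_selector, new_pod))    # True False
```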

- Two parameters determine the pace of the rollout: maxUnavailable and maxSurge

- They can be specified as an absolute number of pods, or as a percentage of the replicas count

- At any given time...

- there will always be at least replicas - maxUnavailable pods available

- there will never be more than replicas + maxSurge pods in total

- there will therefore be up to maxUnavailable + maxSurge pods being updated


- We have the possibility of rolling back to the previous version (if the update fails or is unsatisfactory in any way)
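The rollout bounds above get slightly subtle with percentages: Kubernetes rounds maxSurge up and maxUnavailable down when converting a percentage to a pod count, and both default to 25% for Deployments. The Python sketch below applies those rules; the function names are hypothetical, and it only computes the bounds, it does not simulate the controller.

```python
def to_pods(value, replicas, round_up):
    """Convert an absolute count or a percentage string like "25%" into a number of pods."""
    if isinstance(value, str) and value.endswith("%"):
        scaled = replicas * int(value[:-1])  # integer math avoids float rounding surprises
        return -(-scaled // 100) if round_up else scaled // 100
    return int(value)


def rollout_bounds(replicas, max_surge="25%", max_unavailable="25%"):
    """Bounds that hold at any time during a rolling update."""
    surge = to_pods(max_surge, replicas, round_up=True)
    unavailable = to_pods(max_unavailable, replicas, round_up=False)
    if surge == 0 and unavailable == 0:
        # Rounding can make both zero; the controller then allows 1 unavailable pod
        # so that the rollout can still make progress.
        unavailable = 1
    return {
        "min_available": replicas - unavailable,
        "max_total": replicas + surge,
        "max_being_updated": surge + unavailable,
    }


if __name__ == "__main__":
    print(rollout_bounds(10))  # {'min_available': 8, 'max_total': 13, 'max_being_updated': 5}
    print(rollout_bounds(3))   # {'min_available': 3, 'max_total': 4, 'max_being_updated': 1}
```

With only 3 replicas, the default 25% maxUnavailable rounds down to 0, so the rollout proceeds purely by surging one extra pod at a time.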

- Recall how we build custom reports with kubectl and jq:

Exercise

- Show the rollout plan for our deployments:

kubectl apply -f https://k8smastery.com/dockercoins.yaml

kubectl get deploy -o json | jq ".items[] | {name:.metadata.name} + .spec.strategy.rollingUpdate"

- As of Kubernetes 1.8, we can do rolling updates with: deployments, daemonsets, statefulsets

- Editing one of these resources will automatically result in a rolling update

- Rolling updates can be monitored with the kubectl rollout subcommand
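For readers without jq, the rollout-plan report from the exercise above can be reproduced in Python. This is a sketch of the same filter, not an official tool; the rollout_plan function is hypothetical, and the trimmed SAMPLE (including a "legacy" Deployment using the Recreate strategy) is made up for illustration.

```python
import json

# Trimmed, hypothetical output of "kubectl get deploy -o json".
SAMPLE = """
{"items": [
  {"metadata": {"name": "worker"},
   "spec": {"strategy": {"type": "RollingUpdate",
                         "rollingUpdate": {"maxSurge": "25%", "maxUnavailable": "25%"}}}},
  {"metadata": {"name": "legacy"},
   "spec": {"strategy": {"type": "Recreate"}}}
]}
"""


def rollout_plan(deployments_json):
    """Mimic: jq '.items[] | {name:.metadata.name} + .spec.strategy.rollingUpdate'"""
    plan = []
    for item in json.loads(deployments_json)["items"]:
        row = {"name": item["metadata"]["name"]}
        # In jq, adding null to an object leaves it unchanged (Recreate has no rollingUpdate).
        row.update(item["spec"].get("strategy", {}).get("rollingUpdate") or {})
        plan.append(row)
    return plan


if __name__ == "__main__":
    for row in rollout_plan(SAMPLE):
        print(json.dumps(row))
```

On a real cluster, pass the text captured from kubectl get deploy -o json to rollout_plan.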

Exercise

- Let's monitor what's going on by opening a few terminals, and running:

kubectl get pods -w

kubectl get replicasets -w

kubectl get deployments -w

- Update worker either with kubectl edit, or by running:

kubectl set image deploy worker worker=dockercoins/worker:v0.2

That rollout should be pretty quick. What shows in the web UI?

- At first, it looks like nothing is happening (the graph remains at the same level)

- According to kubectl get deploy -w, the deployment was updated really quickly

- But kubectl get pods -w tells a different story

- The old pods are still here, and they stay in Terminating state for a while

- Eventually, they are terminated; and then the graph decreases significantly

- This delay is due to the fact that our worker doesn't handle signals

- Kubernetes sends a "polite" shutdown request (SIGTERM) to the worker, which ignores it

- After a grace period, Kubernetes gets impatient and kills the container (the default grace period is 30 seconds, but it can be changed if needed)
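A worker that does handle the polite request (SIGTERM) can stop on its own instead of waiting to be killed at the end of the grace period. Here is a minimal Python sketch of that pattern, assuming a simple loop-based worker; the Worker class and its max_iterations parameter are hypothetical and are not the dockercoins code. Installing a handler matters even more when the process runs as PID 1 in its container, because the kernel does not apply the default SIGTERM action to PID 1.

```python
import os
import signal
import time


class Worker:
    """Loop-based worker that exits cleanly when it receives SIGTERM."""

    def __init__(self):
        self.stopping = False
        # Kubernetes sends SIGTERM first; without a handler, PID 1 would ignore it.
        signal.signal(signal.SIGTERM, self._on_sigterm)

    def _on_sigterm(self, signum, frame):
        # Only set a flag here; the main loop finishes its current unit of work first.
        self.stopping = True

    def run(self, max_iterations=None):
        """Work until SIGTERM arrives (or max_iterations is reached); return iterations done."""
        done = 0
        while not self.stopping:
            if max_iterations is not None and done >= max_iterations:
                break
            time.sleep(0.1)  # stand-in for one unit of real work
            done += 1
        return done


if __name__ == "__main__":
    worker = Worker()
    # Simulate the polite shutdown request by signalling our own process.
    os.kill(os.getpid(), signal.SIGTERM)
    print("iterations done before exiting:", worker.run(max_iterations=50))
```

In a Pod, the time available for this clean exit is terminationGracePeriodSeconds (30 seconds by default).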

- What happens if we make a mistake?

Exercise

- Update worker by specifying a non-existent image:

kubectl set image deploy worker worker=dockercoins/worker:v0.3

- Check what's going on:

kubectl rollout status deploy worker

Our rollout is stuck. However, the app is not dead. (After a minute, it will stabilize to be 20-25% slower.)

kubectl get pods

# ErrImagePull

- Why is our app a bit slower?

- Because