.

├── colab # runnable code in colab (run this)

│ ├── model.ipynb # model code

│ ├── model_smote.ipynb # smote model code

│ └── score_calc.ipynb # evaluating code

│

├── img # images

│ └── model.PNG # overall model picture

│

├── model # trained model

│ ├── efficient_b0_model.pt # model with efficient-net b0

│ ├── efficient_b1_model.pt # model with efficient-net b1

│ └── smote_model_b1.pt # model with efficient-net b1, trained with smote

│

├── src # same as colab but separated into files

│ ├── model.py # model code

│ ├── smote.py # apply smote code

│ ├── score_calc.py # evaluating code

│ └── training.py # training code

│

└── README.md

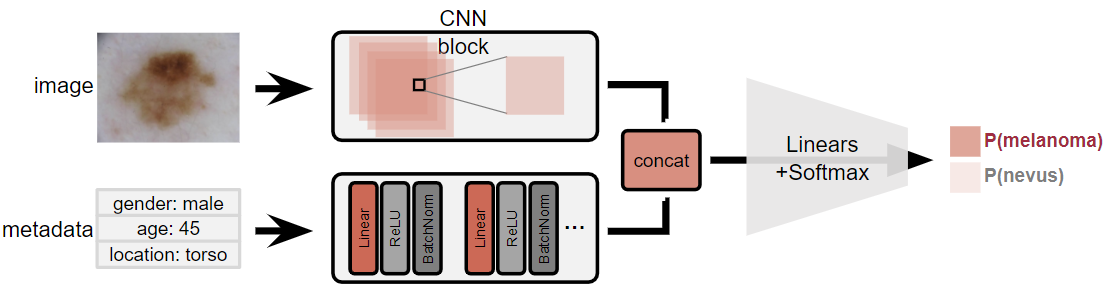

As explained below, we used EfficientNet for feature extraction. Each image was therefore resized to 240x240 so that it could be fed into the network.

The metadata used for training were sex, age, and site. Each field was one-hot encoded, and the encodings were concatenated into a single tensor.
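The encoding step can be sketched in plain Python. This is only an illustration: the category lists, the age buckets, and the helper names (`one_hot`, `age_bucket`, `encode_metadata`) are assumptions made for the example, not the values or functions used in this repository.

```python
# Hypothetical vocabularies; the real dataset may use different categories.
SEX = ["male", "female"]
AGE_BINS = [0, 20, 40, 60, 80]  # assumed lower edges of the age buckets
SITE = ["head/neck", "upper extremity", "lower extremity",
        "torso", "palms/soles", "oral/genital"]


def one_hot(index, size):
    """Return a list of `size` zeros with a 1.0 at position `index`."""
    vec = [0.0] * size
    vec[index] = 1.0
    return vec


def age_bucket(age):
    """Index of the last bucket whose lower edge is <= age."""
    idx = 0
    for i, edge in enumerate(AGE_BINS):
        if age >= edge:
            idx = i
    return idx


def encode_metadata(sex, age, site):
    """One-hot encode each field and concatenate into one flat vector."""
    return (one_hot(SEX.index(sex), len(SEX))
            + one_hot(age_bucket(age), len(AGE_BINS))
            + one_hot(SITE.index(site), len(SITE)))


features = encode_metadata("female", 45, "torso")
print(len(features))  # 2 + 5 + 6 = 13 entries, exactly three of them set to 1.0
```

The resulting list can be wrapped with `torch.tensor(features)` before it is passed to the metadata branch.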

The model consists of a CNN branch that learns from the images, a fully connected (FC) branch that learns from the metadata, and a final FC layer that classifies the combined outputs of the two branches.

We used EfficientNet as the baseline model. Through transfer learning, this pretrained baseline performs the feature extraction, and our own layers are trained on the extracted features.

The FC layer on top of the CNN is regularized with batch normalization, dropout, and similar techniques, and uses ReLU as its activation function.

The FC layer for the metadata is built the same way. The final layer then classifies each sample into one of two classes (melanoma / nevus).
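The structure described above could look roughly like the following PyTorch sketch. It is not the code in `src/model.py`: the class name `TwoBranchNet`, the hidden size, the dropout rate, and the frozen backbone are assumptions, and a tiny convolutional stack stands in for the pretrained EfficientNet so the example runs without downloading weights.

```python
import torch
import torch.nn as nn


class TwoBranchNet(nn.Module):
    """Image branch + metadata branch, concatenated and classified into 2 classes."""

    def __init__(self, backbone, image_feat_dim, meta_dim, hidden=128, n_classes=2):
        super().__init__()
        self.backbone = backbone
        # Assumption: the pretrained feature extractor is kept frozen.
        for p in self.backbone.parameters():
            p.requires_grad = False

        def fc_block(in_dim):
            # Linear -> BatchNorm -> ReLU -> Dropout (rate is an assumed value)
            return nn.Sequential(
                nn.Linear(in_dim, hidden),
                nn.BatchNorm1d(hidden),
                nn.ReLU(),
                nn.Dropout(0.3),
            )

        self.image_fc = fc_block(image_feat_dim)
        self.meta_fc = fc_block(meta_dim)
        self.classifier = nn.Linear(2 * hidden, n_classes)

    def forward(self, image, meta):
        img_feat = self.image_fc(self.backbone(image))
        meta_feat = self.meta_fc(meta)
        return self.classifier(torch.cat([img_feat, meta_feat], dim=1))


# Stand-in for EfficientNet: maps (N, 3, H, W) images to (N, 8) feature vectors.
toy_backbone = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, stride=2, padding=1),
    nn.ReLU(),
    nn.AdaptiveAvgPool2d(1),
    nn.Flatten(),
)

model = TwoBranchNet(toy_backbone, image_feat_dim=8, meta_dim=13)
model.eval()
with torch.no_grad():
    logits = model(torch.randn(4, 3, 240, 240), torch.randn(4, 13))
print(logits.shape)  # one pair of logits (melanoma / nevus) per sample
```

With a real EfficientNet, `toy_backbone` would be replaced by the pretrained network with its classification head removed, and `image_feat_dim` set to that network's feature size.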

learning rate: 0.0001

learning rate decay: 0.95

weight decay: 0.001

learning rate scheduler: StepLR (gamma = 0.95)
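To make the schedule concrete, the sketch below reproduces the step-decay rule that `StepLR` applies, `lr = base_lr * gamma ** (epoch // step_size)`, in plain Python. The step size is not stated above, so `step_size=1` (decay after every epoch) is an assumption, and `step_lr` is a hypothetical helper name.

```python
def step_lr(base_lr, gamma, epoch, step_size=1):
    """Learning rate at a given epoch under step decay (step_size=1 is assumed)."""
    return base_lr * gamma ** (epoch // step_size)


# Learning rate at a few epochs for the settings listed above.
for epoch in (0, 1, 10, 50):
    print(epoch, step_lr(1e-4, 0.95, epoch))
```

Under this assumption the learning rate shrinks by 5% per epoch, so after 10 epochs it is roughly 60% of its initial value.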

We used Adam as the optimizer. Adam combines momentum, which speeds up training and helps the model escape local minima, with a per-parameter adaptive learning rate like that of RMSprop.
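The two ingredients can be seen in a single Adam update for one scalar parameter, sketched below. This is an illustration of the standard update rule rather than the training code in `src/training.py`: `adam_step` is a hypothetical helper, the `beta1`, `beta2`, and `eps` values are the common defaults, and weight decay is left out for brevity.

```python
import math


def adam_step(param, grad, m, v, t, lr=1e-4, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a scalar parameter; t is the 1-based step count."""
    m = beta1 * m + (1 - beta1) * grad         # momentum: running mean of gradients
    v = beta2 * v + (1 - beta2) * grad * grad  # RMSprop-like running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)               # bias correction for the zero initialization
    v_hat = v / (1 - beta2 ** t)
    param = param - lr * m_hat / (math.sqrt(v_hat) + eps)  # step scaled per parameter
    return param, m, v


# First update from param=1.0 with gradient 0.5 and zero-initialized moments.
param, m, v = adam_step(1.0, 0.5, m=0.0, v=0.0, t=1)
print(param, m, v)
```

On the first step the bias-corrected ratio `m_hat / sqrt(v_hat)` is close to the sign of the gradient, so the parameter moves by about `lr` regardless of the gradient's magnitude.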