Multi-Armed-Bandit

Description

This is an implementation of multi-armed bandit algorithms.

How to Install

From the project root folder, run:

pip install -r requirements.txt

How to Run

From the project root folder, run:

./run.sh

Tasks

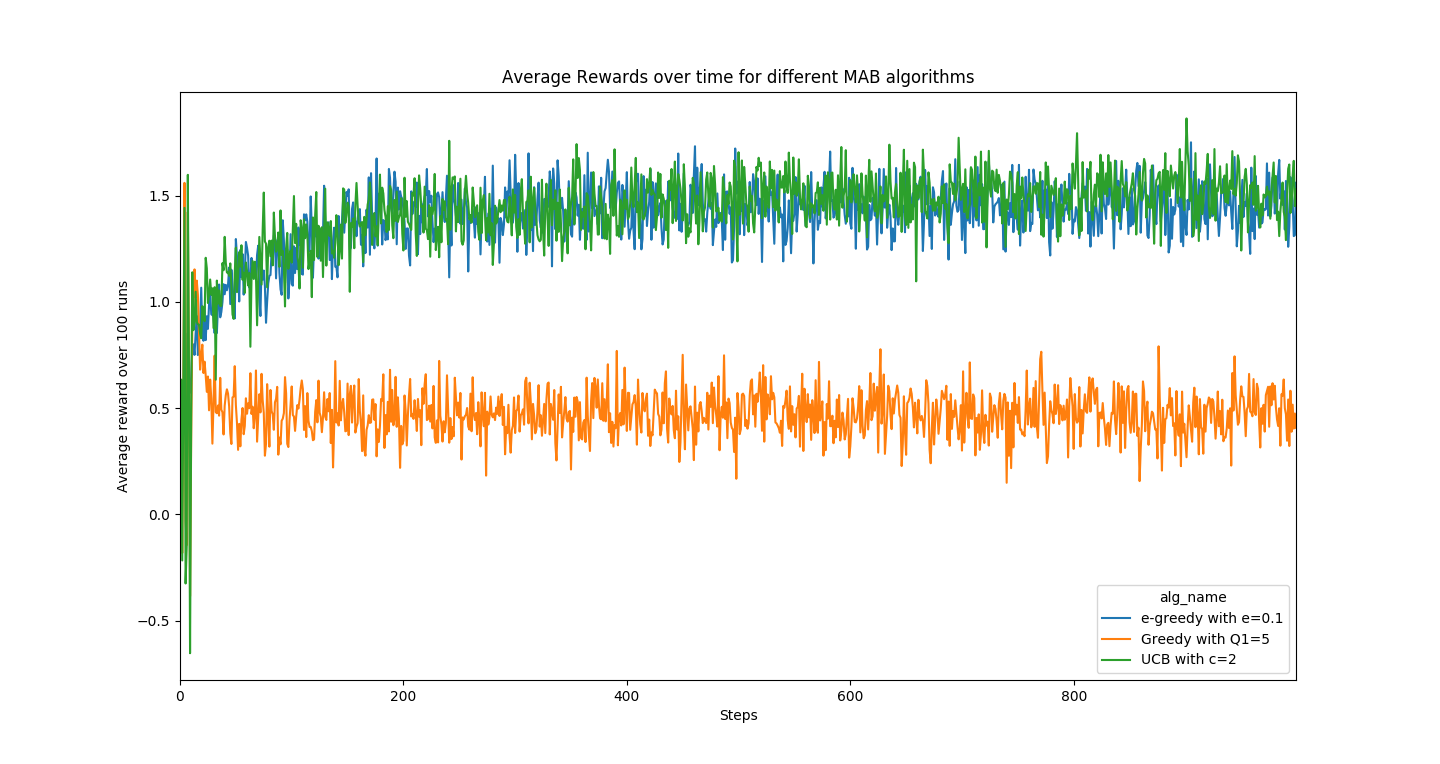

Part 1

A plot of reward over time (averaged over 100 runs each) on the same axes, for

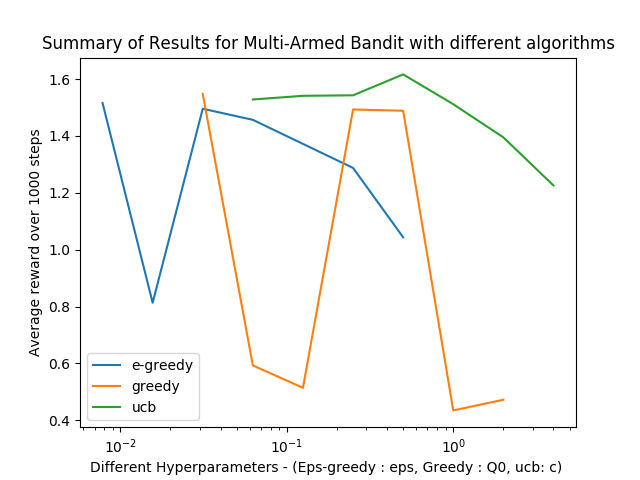
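A reward-over-time curve averaged over many runs can be sketched as follows. This is a minimal sketch, not the project's code. It assumes a 10-armed Gaussian testbed (true values q*(a) ~ N(0, 1), rewards ~ N(q*(a), 1)) and uses sample-average ε-greedy as a stand-in for whichever algorithms the project compares. The names `make_testbed`, `run_epsilon_greedy` and `average_reward_curve` are hypothetical.

```python
import random


def make_testbed(k: int, rng: random.Random) -> list[float]:
    """Hypothetical k-armed testbed: true action values q*(a) ~ N(0, 1)."""
    return [rng.gauss(0.0, 1.0) for _ in range(k)]


def run_epsilon_greedy(q_true: list[float], steps: int, epsilon: float,
                       rng: random.Random) -> list[float]:
    """One run of sample-average epsilon-greedy; returns the reward at each step."""
    k = len(q_true)
    q_est = [0.0] * k   # estimated value of each arm
    counts = [0] * k    # number of times each arm was pulled
    rewards = []
    for _ in range(steps):
        if rng.random() < epsilon:
            a = rng.randrange(k)  # explore: pick an arm uniformly at random
        else:
            best = max(q_est)
            # exploit: pick a greedy arm, breaking ties at random
            a = rng.choice([i for i, v in enumerate(q_est) if v == best])
        r = rng.gauss(q_true[a], 1.0)  # reward ~ N(q*(a), 1)
        counts[a] += 1
        q_est[a] += (r - q_est[a]) / counts[a]  # incremental sample-average update
        rewards.append(r)
    return rewards


def average_reward_curve(runs: int, steps: int, epsilon: float, k: int = 10,
                         seed: int = 0) -> list[float]:
    """Average the per-step reward over `runs` independent runs (fresh testbed each run)."""
    rng = random.Random(seed)
    totals = [0.0] * steps
    for _ in range(runs):
        q_true = make_testbed(k, rng)
        for t, r in enumerate(run_epsilon_greedy(q_true, steps, epsilon, rng)):
            totals[t] += r
    return [s / runs for s in totals]


if __name__ == "__main__":
    # One averaged curve per epsilon; these could be drawn on the same axes, e.g. with matplotlib.
    for eps in (0.0, 0.01, 0.1):
        curve = average_reward_curve(runs=100, steps=1000, epsilon=eps)
        print(f"epsilon={eps}: mean reward over last 100 steps = {sum(curve[-100:]) / 100:.3f}")
```

Starting each run on a freshly drawn testbed is what makes the averaged curve describe the algorithm rather than one particular set of arms.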

Part 2

A summary comparison plot of the rewards obtained over the first 1000 steps by the three algorithms, for different values of their hyperparameters.
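A hedged sketch of how such a parameter study might be computed: each point on the summary plot is one algorithm's reward averaged over the first 1000 steps and over many runs, for a single hyperparameter value. The testbed, the two example policies (ε-greedy swept over ε, and UCB swept over its exploration constant c), the powers-of-two grids, and the names `run_bandit` and `parameter_study` are all assumptions, not taken from the project.

```python
import math
import random


def run_bandit(policy: str, param: float, steps: int, rng: random.Random,
               k: int = 10) -> float:
    """One run on a fresh (hypothetical) k-armed Gaussian testbed.

    policy "eps": epsilon-greedy with epsilon = param.
    policy "ucb": upper-confidence-bound selection with exploration constant c = param.
    Returns the mean reward over the `steps` steps of this run.
    """
    q_true = [rng.gauss(0.0, 1.0) for _ in range(k)]  # true arm values q*(a) ~ N(0, 1)
    q_est = [0.0] * k   # sample-average estimate for each arm
    counts = [0] * k    # pulls per arm
    total = 0.0
    for t in range(1, steps + 1):
        if policy == "eps":
            if rng.random() < param:
                a = rng.randrange(k)  # explore uniformly
            else:
                a = max(range(k), key=lambda i: q_est[i])  # greedy; ties go to the lowest index
        elif policy == "ucb":
            untried = [i for i in range(k) if counts[i] == 0]
            if untried:
                a = untried[0]  # pull every arm once so the bonus term is defined
            else:
                # Q(a) + c * sqrt(ln t / N(a))
                a = max(range(k), key=lambda i: q_est[i] + param * math.sqrt(math.log(t) / counts[i]))
        else:
            raise ValueError(f"unknown policy: {policy}")
        r = rng.gauss(q_true[a], 1.0)  # reward ~ N(q*(a), 1)
        counts[a] += 1
        q_est[a] += (r - q_est[a]) / counts[a]  # incremental sample-average update
        total += r
    return total / steps


def parameter_study(policy: str, params: list[float], runs: int = 100,
                    steps: int = 1000, seed: int = 0) -> dict[float, float]:
    """Map each hyperparameter value to the reward averaged over `steps` steps and `runs` runs."""
    rng = random.Random(seed)
    return {p: sum(run_bandit(policy, p, steps, rng) for _ in range(runs)) / runs
            for p in params}


if __name__ == "__main__":
    grids = {
        "eps": [2.0 ** e for e in range(-7, -1)],  # epsilon from 1/128 to 1/4
        "ucb": [2.0 ** e for e in range(-4, 3)],   # c from 1/16 to 4
    }
    for policy, params in grids.items():
        # runs=20 keeps this demo quick; raise it for smoother curves
        for p, avg in parameter_study(policy, params, runs=20).items():
            print(f"{policy} param={p:g}: average reward over first 1000 steps = {avg:.3f}")
```

Plotting each algorithm's dictionary as one line against a log-scaled hyperparameter axis puts all algorithms on a single summary plot, even though their hyperparameters mean different things.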