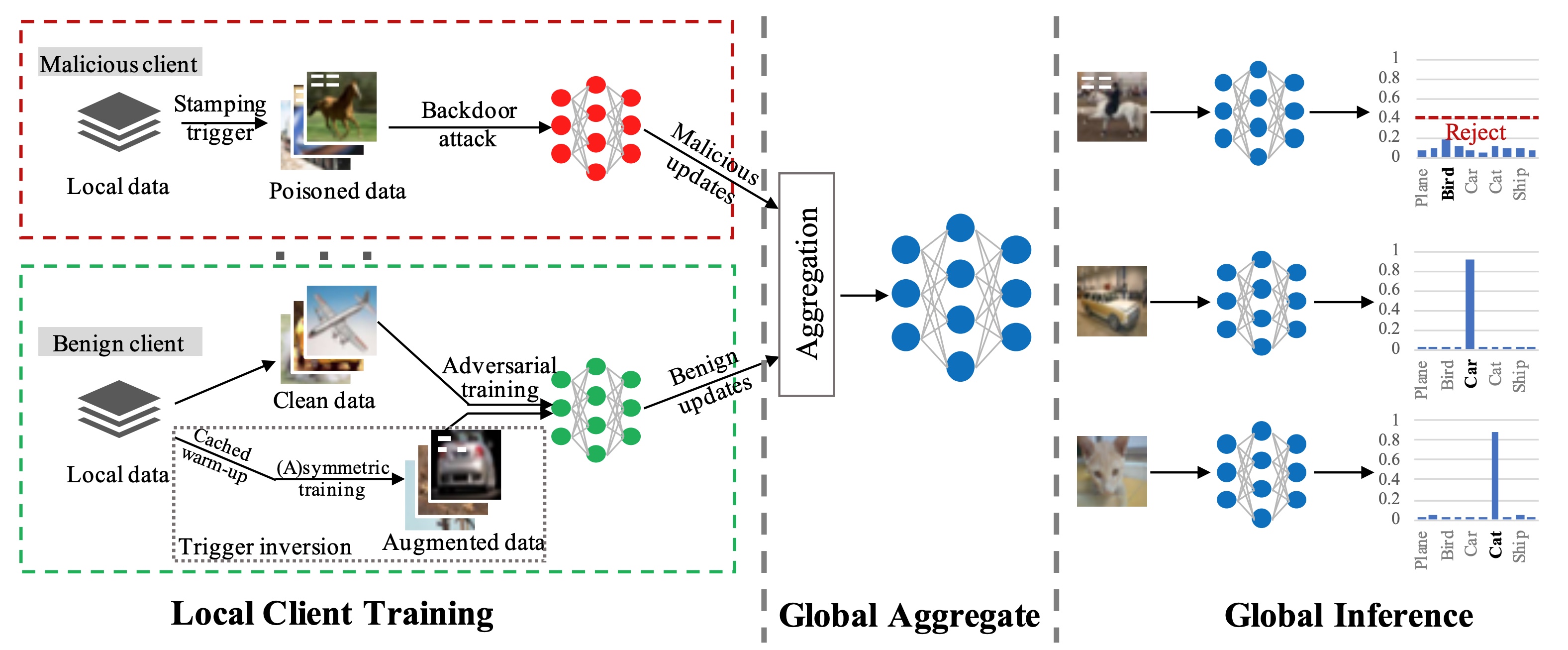

- This is the PyTorch implementation of the ICLR 2023 paper "FLIP: A Provable Defense Framework for Backdoor Mitigation in Federated Learning".

- This paper also won a Best Paper Award 🏆 at the ECCV 2022 AROW Workshop.

- [OpenReview](https://openreview.net/forum?id=Xo2E217_M4n) | [arXiv] | [workshop slides]

- Python >= 3.7.10

- PyTorch >= 1.7.1

- TorchVision >= 0.8.2

- PyYAML >= 6.0

- Pre-trained clean models placed in the `./saved_models/` directory. You can also train from round 0 to obtain the pre-trained clean models.
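The minimum versions listed above can be checked before launching a run. The snippet below is a minimal sketch and is not part of the FLIP repository. The helper names (`parse_version`, `meets_minimum`, `check_environment`) are hypothetical. It assumes each package exposes a `__version__` attribute and uses a simplified version comparison that drops local labels such as `+cu110` and pre-release suffixes.

```python
import importlib
import re
import sys

# Minimum versions from the requirements list above.
# Keys are import names: PyYAML is imported as `yaml`.
MINIMUMS = {"torch": "1.7.1", "torchvision": "0.8.2", "yaml": "6.0"}


def parse_version(text):
    """Turn '1.7.1+cu110' into (1, 7, 1).

    Simplified parsing (an assumption, not full PEP 440): the local label
    after '+' is dropped and each component keeps only its leading digits,
    so '2.0.0rc1' -> (2, 0, 0).
    """
    parts = []
    for piece in text.split("+")[0].split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def meets_minimum(installed, required):
    """True if `installed` >= `required`, padding the shorter tuple with zeros."""
    a, b = parse_version(installed), parse_version(required)
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


def check_environment():
    """Map each module name to (installed version or None, requirement met)."""
    report = {}
    for module_name, required in MINIMUMS.items():
        try:
            module = importlib.import_module(module_name)
        except Exception:  # ImportError, or a broken/mismatched install
            report[module_name] = (None, False)
            continue
        installed = str(getattr(module, "__version__", "0"))
        report[module_name] = (installed, meets_minimum(installed, required))
    return report


if __name__ == "__main__":
    python_ok = sys.version_info >= (3, 7, 10)
    print("python", sys.version.split()[0], "ok" if python_ok else "too old")
    for name, (version, ok) in check_environment().items():
        print(name, version or "missing", "ok" if ok else "install/upgrade needed")
```

Importing each module (rather than querying package metadata) keeps the check usable on Python 3.7, where `importlib.metadata` is not available in the standard library.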

- Run the following commands to reproduce the results in the paper.

```shell
# Create python environment (optional)
conda env create -f environment.yml
source activate flip
```

Prior to federated training and inverting, make sure to create a data directory `./data/` and set the correct parameters in the `.yaml` file for single-shot or continuous FL backdoor attacks. The parameter files are located in the `./utils/` directory (e.g., `utils/mnist.yaml`).

```shell
# MNIST
python main.py --params utils/mnist.yaml
# Fashion-MNIST
python main.py --params utils/fashion_mnist.yaml
# CIFAR-10
python main.py --params utils/cifar.yaml
```
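The three runs (one per dataset) can also be launched back to back. The driver below is a hypothetical convenience sketch, not part of the repository. It only invokes `main.py` with the `--params` flag used in the commands above, and it assumes it is executed from the repository root with the intended Python environment active.

```python
import subprocess
import sys

# Parameter files used in the commands above; one run per dataset.
CONFIGS = ("utils/mnist.yaml", "utils/fashion_mnist.yaml", "utils/cifar.yaml")


def build_commands(configs=CONFIGS, python=sys.executable):
    """Return one `python main.py --params <config>` command per config file."""
    return [[python, "main.py", "--params", cfg] for cfg in configs]


def run_all(configs=CONFIGS, dry_run=False):
    """Run the experiments one after another from the repository root.

    Stops at the first failing run (check=True raises CalledProcessError).
    With dry_run=True the commands are only returned, not executed.
    """
    commands = build_commands(configs)
    if not dry_run:
        for cmd in commands:
            print("running:", " ".join(cmd), flush=True)
            subprocess.run(cmd, check=True)
    return commands
```

`run_all(dry_run=True)` returns the three command lists without executing them, which is a quick way to confirm the paths before committing GPU time; `run_all()` executes them in order.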

```
├── data                        # data directory
├── models                      # model structures for different datasets
├── saved_models                # pre-trained clean models and models saved during training
│   ├── cifar_pretrain          # pre-trained clean models on CIFAR-10
│   ├── fashion_mnist_pretrain  # pre-trained clean models on Fashion-MNIST
│   └── mnist_pretrain          # pre-trained clean models on MNIST
├── utils                       # utilities and parameter files for different datasets
├── config.py                   # sets the GPU device and global variables
├── helper.py                   # helper functions
├── image_helper.py             # helper functions for image datasets, e.g., data loading
├── image_train.py              # normal training and invert training
├── invert_CIFAR.py             # invert training by benign local clients on CIFAR-10
├── invert_FashionMNIST.py      # invert training by benign local clients on Fashion-MNIST
├── invert_MNIST.py             # invert training by benign local clients on MNIST
├── main.py                     # main entry point; run this file to train and invert
├── test.py                     # test metrics
├── train.py                    # training function; calls image_train.py
└── ...
```

If you use this code for any purpose, please cite our work as follows:

```bibtex
@inproceedings{
zhang2023flip,
title={{FLIP}: A Provable Defense Framework for Backdoor Mitigation in Federated Learning},
author={Kaiyuan Zhang and Guanhong Tao and Qiuling Xu and Siyuan Cheng and Shengwei An and Yingqi Liu and Shiwei Feng and Guangyu Shen and Pin-Yu Chen and Shiqing Ma and Xiangyu Zhang},
booktitle={The Eleventh International Conference on Learning Representations},
year={2023},
url={https://openreview.net/forum?id=Xo2E217_M4n}
}
```