Basic versions of agents from Spinning Up in Deep RL written in PyTorch. Designed to run quickly on CPU on Pendulum-v0 from OpenAI Gym.

To see differences between algorithms, try running `diff -y <file1> <file2>`, e.g., `diff -y ddpg.py td3.py`.

For MPI versions of on-policy algorithms, see the `mpi` branch.

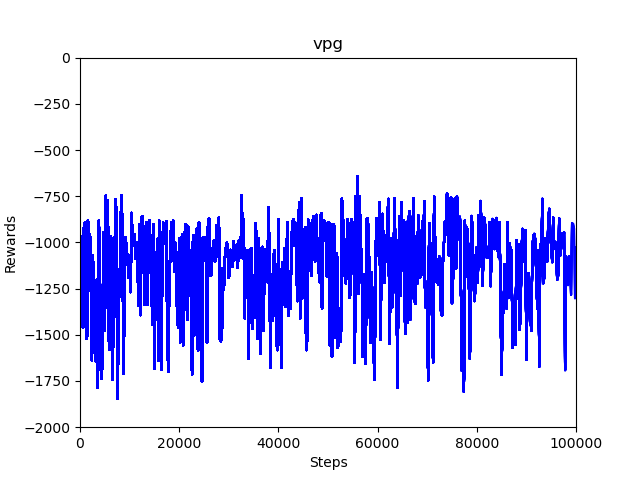

- Vanilla Policy Gradient/Advantage Actor-Critic (

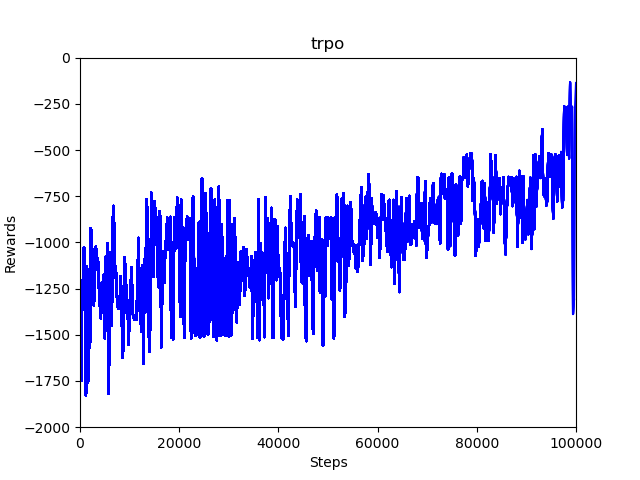

vpg.py) - Trust Region Policy Gradient (

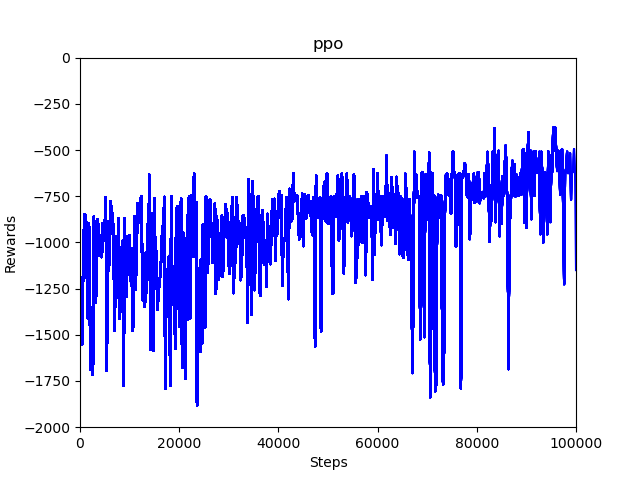

trpo.py) - Proximal Policy Optimization (

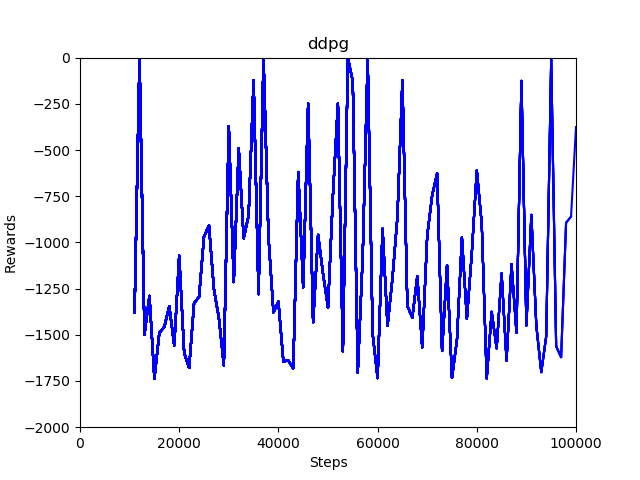

ppo.py) - Deep Deterministic Policy Gradient (

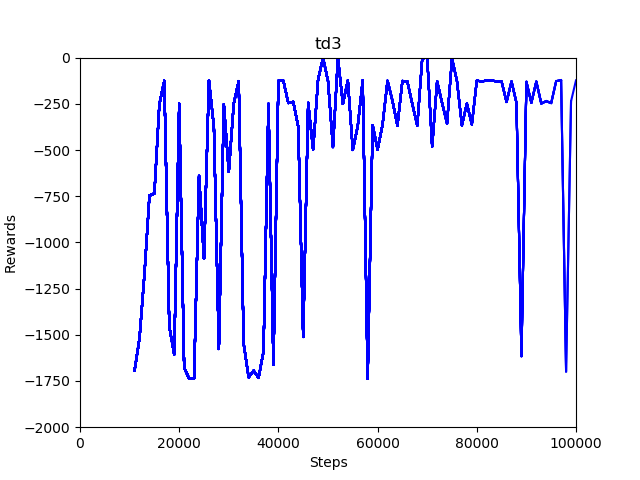

ddpg.py) - Twin Delayed DDPG (

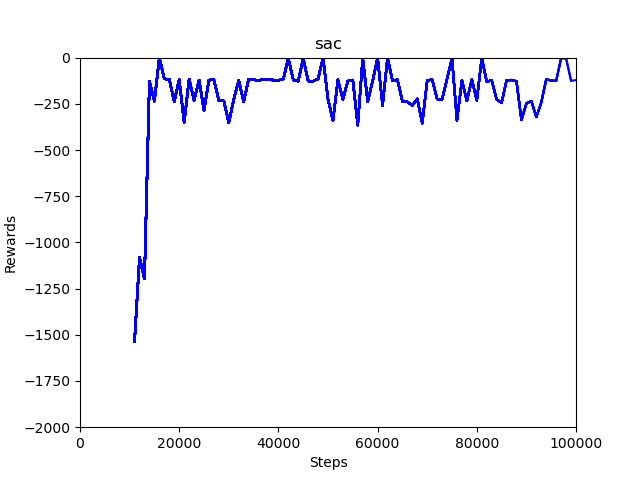

td3.py) - Soft Actor-Critic (

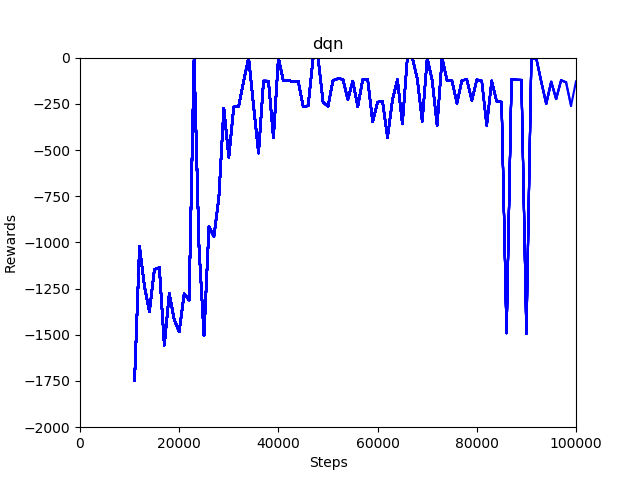

sac.py) - Deep Q-Network (

dqn.py)

Note that implementation details can have a significant effect on performance, as discussed in *What Matters In On-Policy Reinforcement Learning? A Large-Scale Empirical Study*. This codebase aims to be as simple as possible, but it still includes several such details: the on-policy algorithms use separate actor and critic networks, a state-independent policy standard deviation, per-minibatch advantage normalisation, and several critic updates per minibatch, while the deterministic off-policy algorithms use layer normalisation. Likewise, soft actor-critic uses a transformed Normal distribution by default, and this can also help the on-policy algorithms.
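Two of the details above, per-minibatch advantage normalisation and a transformed Normal policy with a state-independent standard deviation, can be illustrated with a small framework-free sketch. This is not the repository's PyTorch code. It assumes a one-dimensional action, a hypothetical linear policy mean, a single scalar `log_std` shared by all states, and tanh as the transform applied to the Normal distribution (the usual choice for soft actor-critic). All function names here are hypothetical.

```python
import math
import random
from typing import List, Tuple


def normalise_minibatch(advantages: List[float], eps: float = 1e-8) -> List[float]:
    """Rescale one minibatch of advantages to zero mean and unit standard deviation."""
    n = len(advantages)
    mean = sum(advantages) / n
    # Statistics are computed over this minibatch only, not the whole rollout
    std = math.sqrt(sum((a - mean) ** 2 for a in advantages) / n)
    return [(a - mean) / (std + eps) for a in advantages]


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def sample_action(state: float, weight: float, bias: float, log_std: float,
                  rng: random.Random) -> Tuple[float, float]:
    """Sample a tanh-squashed action; log_std is one scalar shared by all states."""
    mean = weight * state + bias             # hypothetical linear policy mean
    u = rng.gauss(mean, math.exp(log_std))   # pre-squash Gaussian sample
    return math.tanh(u), u


def tanh_normal_log_prob(u: float, mean: float, log_std: float) -> float:
    """Log-density of a = tanh(u) with u ~ Normal(mean, exp(log_std))."""
    std = math.exp(log_std)
    # Gaussian log-density of the pre-squash sample u
    log_p_u = -0.5 * ((u - mean) / std) ** 2 - log_std - 0.5 * math.log(2 * math.pi)
    # Change of variables: log|d tanh(u)/du| = log(1 - tanh(u)^2),
    # computed stably as 2 * (log 2 - u - softplus(-2u))
    log_det = 2.0 * (math.log(2.0) - u - softplus(-2.0 * u))
    return log_p_u - log_det


if __name__ == "__main__":
    rng = random.Random(0)
    # Fake advantages for a 64-step rollout, split into shuffled minibatches of 16
    advantages = [rng.gauss(1.0, 3.0) for _ in range(64)]
    order = list(range(64))
    rng.shuffle(order)
    for start in range(0, 64, 16):
        batch = normalise_minibatch([advantages[i] for i in order[start:start + 16]])
        print(f"minibatch mean={sum(batch) / len(batch):+.3f}")

    action, u = sample_action(state=0.3, weight=1.5, bias=0.0, log_std=-0.5, rng=rng)
    print(action, tanh_normal_log_prob(u, mean=0.45, log_std=-0.5))
```

Because tanh bounds the squashed action to [-1, 1], it would still need rescaling to the environment's action bounds before being used.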

- Spinning Up in Deep RL (TensorFlow)

- Fired Up in Deep RL (PyTorch)