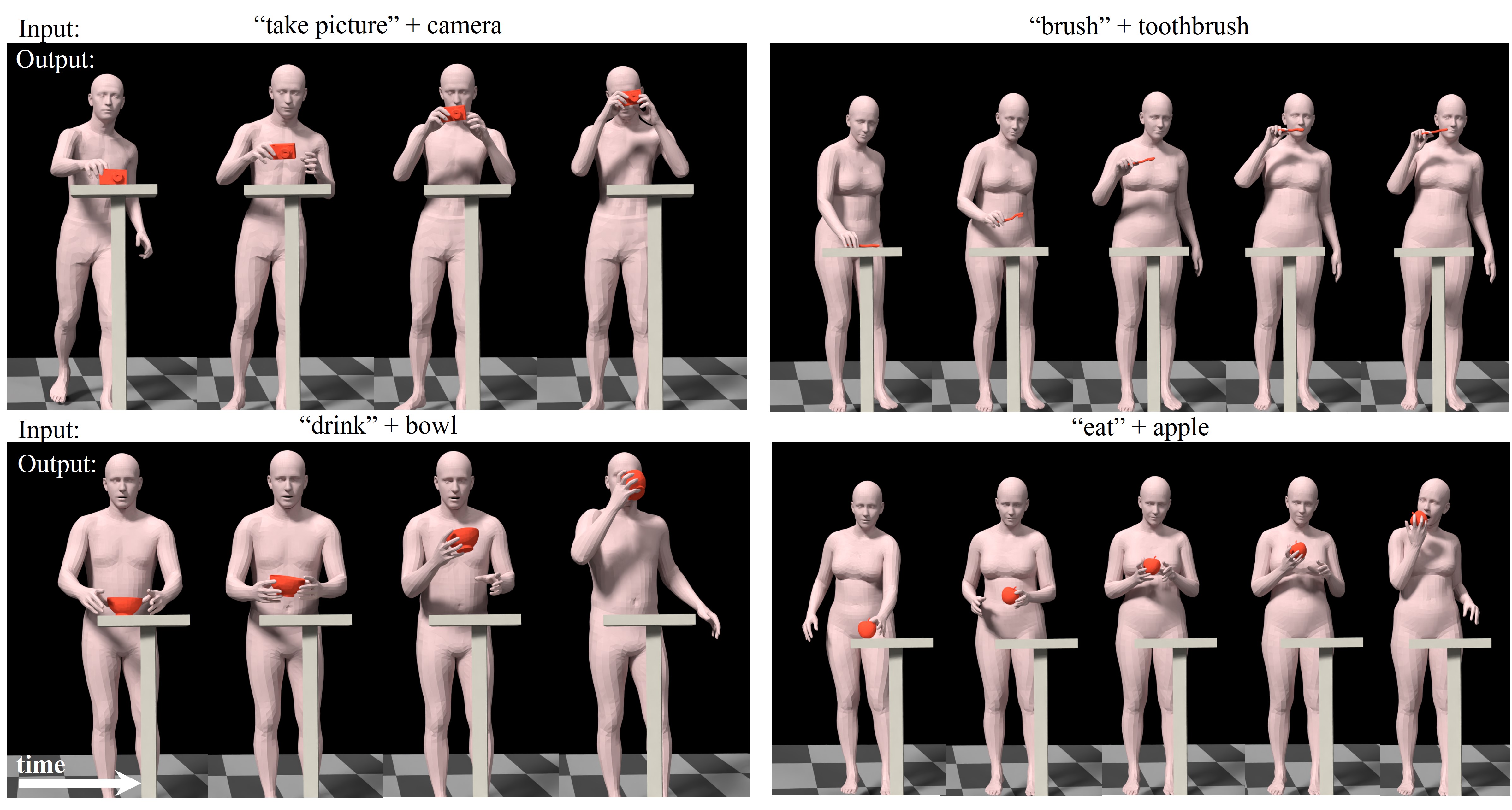

Paper | Video | Project Page

We have tested our code on the following setups:

- Ubuntu 20.04 LTS

- Windows 10, 11

- Python >= 3.8

- PyTorch >= 1.11

- conda >= 4.9.2 (optional but recommended)

Follow these commands to create a conda environment:

conda create -n IDMS python=3.8

conda activate IDMS

conda install -c pytorch pytorch=1.11 torchvision cudatoolkit=11.3

pip install -r requirements.txt

For PyTorch3D installation, refer to https://github.com/facebookresearch/pytorch3d/blob/main/INSTALL.md

Note: If the PyOpenGL package installed through requirements.txt causes issues on Ubuntu, install PyOpenGL using:

apt-get update

apt-get install python3-opengl
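Once the environment is set up, a quick sanity check can confirm that it matches the tested versions listed above. The following is a minimal sketch, not part of this repository: the helper names (`parse_version`, `meets_minimum`, `check_environment`) are hypothetical, and the CUDA message is only an informational note, since a GPU is assumed to be useful here rather than known to be required.

```python
import importlib.util
import sys


def parse_version(text):
    """Turn '1.11.0+cu113' into (1, 11, 0); stop at the first non-numeric part."""
    parts = []
    for piece in text.split("+")[0].split("."):
        if not piece.isdigit():
            break
        parts.append(int(piece))
    return tuple(parts)


def meets_minimum(found, minimum):
    """Return True if version string `found` is at least `minimum`."""
    return parse_version(found) >= parse_version(minimum)


def check_environment():
    """Collect human-readable messages; an empty list means nothing was flagged."""
    messages = []
    if sys.version_info < (3, 8):
        messages.append("Python >= 3.8 is expected")
    if importlib.util.find_spec("torch") is None:
        messages.append("PyTorch is not installed")
    else:
        import torch  # imported only when it is actually available

        if not meets_minimum(torch.__version__, "1.11"):
            messages.append(f"PyTorch >= 1.11 is expected, found {torch.__version__}")
        if not torch.cuda.is_available():
            # Assumption: reported as a note, not as a hard requirement.
            messages.append("note: CUDA is not available to PyTorch")
    return messages


if __name__ == "__main__":
    for message in check_environment() or ["Environment matches the tested versions."]:
        print(message)
```

Run it inside the activated IDMS environment; it only reads version information and changes nothing.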

- Follow the instructions on the SMPL-X website to download the SMPL-X model, and keep the downloaded files under the smplx_model folder.

- Download the GRAB dataset from the GRAB website, and follow the instructions there to extract the files. Save the raw data in ../DATASETS/GRAB.

- To pre-process the GRAB dataset for our setting, run:

python src/data_loader/dataset_preprocess.py
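Before running the pre-processing script, it can help to confirm that the SMPL-X model files and the raw GRAB data are where the steps above place them. The sketch below assumes both paths (smplx_model and ../DATASETS/GRAB) are relative to the repository root; `missing_dirs` is a hypothetical helper and not part of this code base, and it checks only that each folder exists and is non-empty, not that its contents are complete.

```python
from pathlib import Path

# Paths as named in this README, assumed to be relative to the repository root.
EXPECTED_DIRS = ("smplx_model", "../DATASETS/GRAB")


def missing_dirs(repo_root, expected=EXPECTED_DIRS):
    """Return the expected directories that are absent or empty."""
    root = Path(repo_root)
    missing = []
    for rel in expected:
        path = root / rel
        if not path.is_dir() or not any(path.iterdir()):
            missing.append(rel)
    return missing


if __name__ == "__main__":
    # Run from the repository root.
    for rel in missing_dirs("."):
        print(f"missing or empty: {rel}")
```

If the script prints nothing, both folders were found and contain at least one entry.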

Download the pretrained weights for the models used in our paper from here and keep them inside save\pretrained_models.

- To evaluate our pre-trained model, run:

python src/evaluate/eval.py

- To generate the .npy files with the synthesized motions, run:

python src/test/test_synthesis.py

- To visualize sample results from our paper, run:

python src/visualize/render_smplx.py
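The .npy files written by the synthesis step can be inspected with NumPy before rendering. This README does not state where the files are saved or how their contents are laid out, so the sketch below takes the output directory as an argument and reports only the dtype and shape of each file. Both helper names are hypothetical. `allow_pickle=True` is an assumption in case the files store Python objects; use it only on files you generated yourself.

```python
import sys
from pathlib import Path


def find_npy_files(output_dir):
    """Return all .npy files below `output_dir`, sorted for stable output."""
    return sorted(Path(output_dir).rglob("*.npy"))


def describe(path):
    """Load one file and summarize it without assuming a particular layout."""
    import numpy as np  # imported here so listing files works without NumPy

    # Assumption: pickled objects may be present; only load trusted files.
    data = np.load(path, allow_pickle=True)
    return f"{path.name}: dtype={data.dtype}, shape={data.shape}"


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python inspect_npy.py <directory containing the .npy files>
    for npy_path in find_npy_files(sys.argv[1]):
        print(describe(npy_path))
```

A file that stores a pickled dictionary shows up as an object array with an empty shape; calling `.item()` on the loaded array returns the dictionary itself.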

This code is distributed under the MIT License.