Kai Liu, Haotong Qin, Yong Guo, Xin Yuan, Linghe Kong, Guihai Chen, and Yulun Zhang, "2DQuant: Low-bit Post-Training Quantization for Image Super-Resolution", arXiv, 2024

[arXiv] [visual results] [pretrained models]

- 2024-06-09: This repo is released.

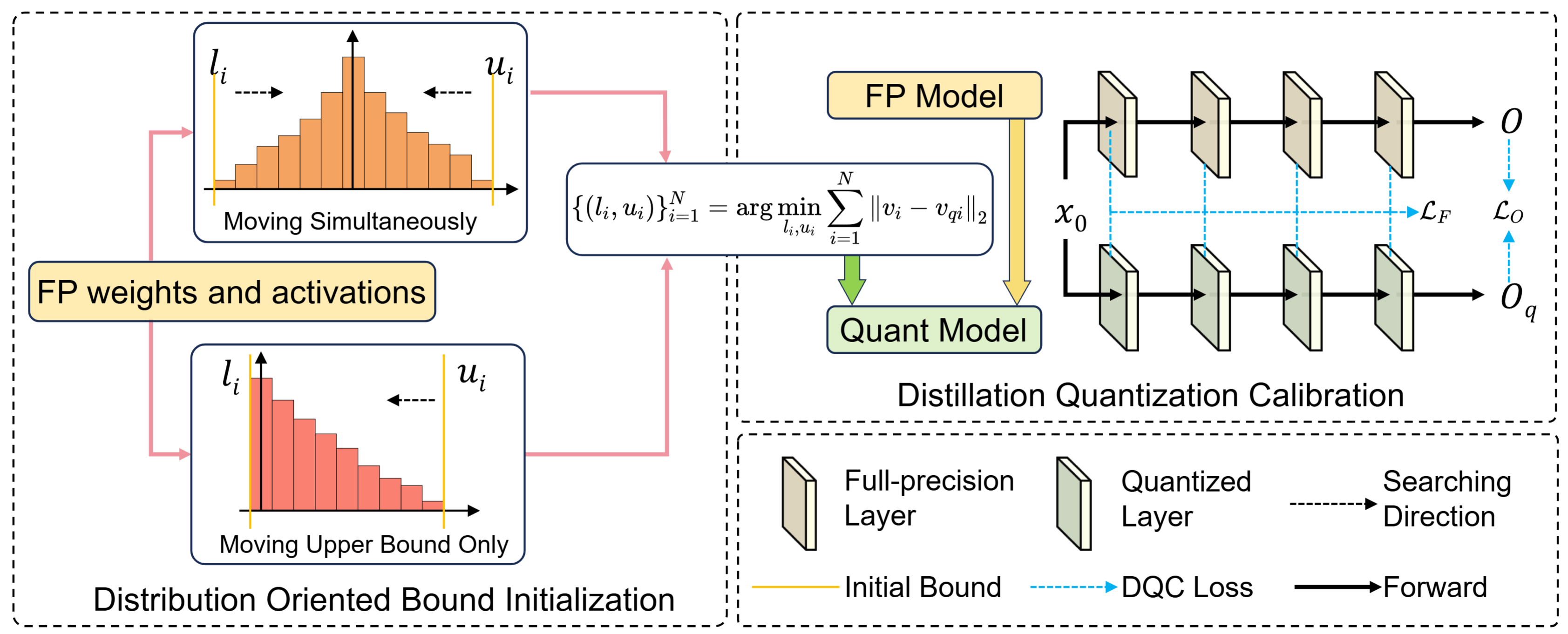

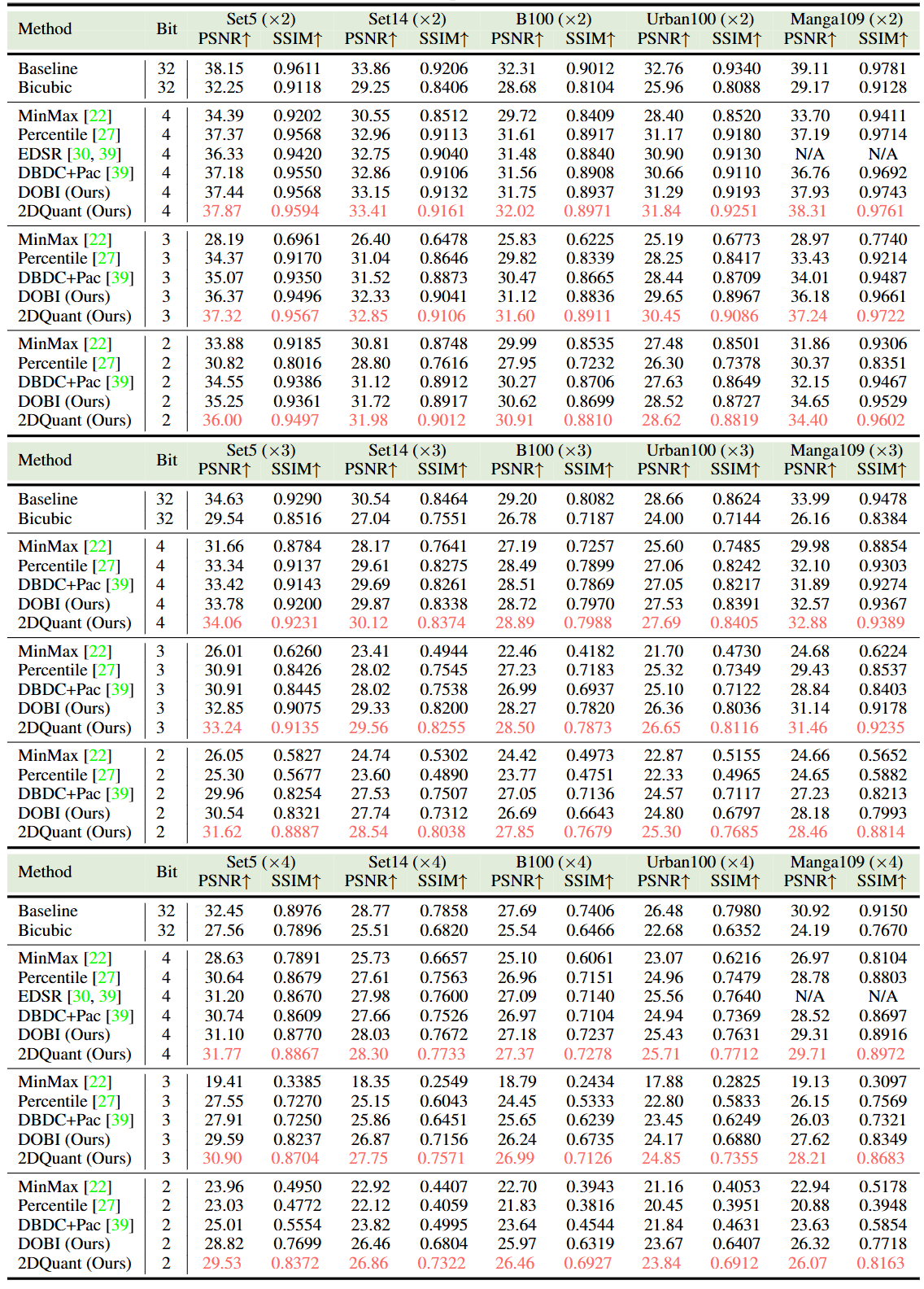

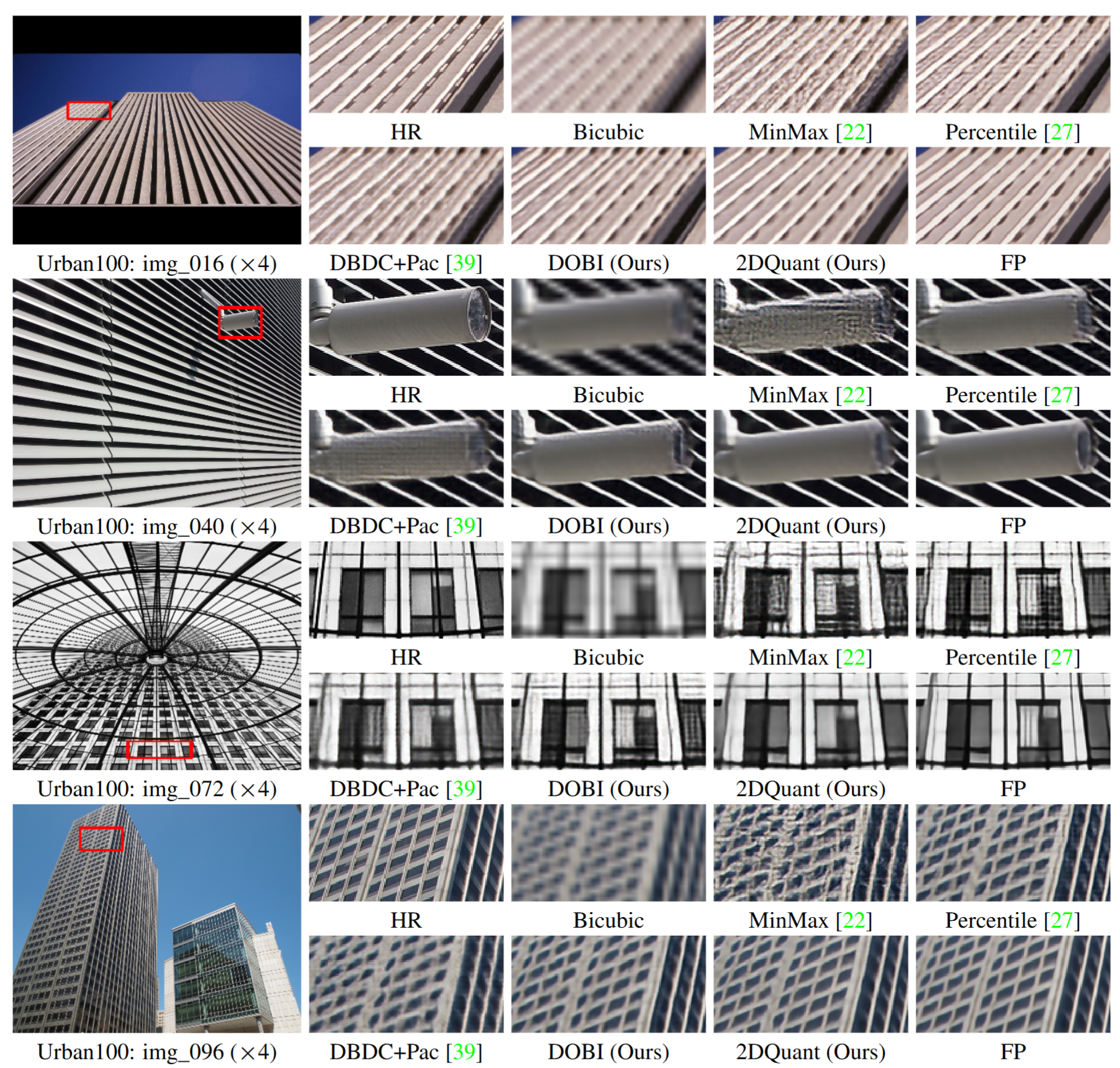

Abstract: Low-bit quantization has become widespread for compressing image super-resolution (SR) models for edge deployment. It gives advanced SR models compact low-bit parameters for storage compression and efficient integer/bitwise operations for inference acceleration. However, low-bit quantization is notorious for degrading the accuracy of SR models relative to their full-precision (FP) counterparts. Despite several efforts to alleviate this degradation, transformer-based SR models still suffer severe degradation because of their distinctive activation distributions. In this work, we present 2DQuant, a dual-stage low-bit post-training quantization (PTQ) method for image super-resolution that achieves efficient and accurate SR under low-bit quantization. We first investigate the weights and activations and find that their distributions are characterized by coexisting symmetry and asymmetry, as well as long tails. Specifically, we propose Distribution-Oriented Bound Initialization (DOBI), which uses different search strategies to find coarse bounds for the quantizers. To obtain refined quantizer parameters, we further propose Distillation Quantization Calibration (DQC), which employs a distillation approach so that the quantized model learns from its FP counterpart. Extensive experiments across different bit-widths and scaling factors show that DOBI alone reaches state-of-the-art (SOTA) performance, and that after stage two our method surpasses existing PTQ methods in both metrics and visual quality. When quantized to 2-bit, 2DQuant improves PSNR by as much as 4.52 dB on Set5 ($\times 2$) over the SOTA, while achieving a 3.60$\times$ compression ratio and a 5.08$\times$ speedup.
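The abstract describes DOBI only at a high level. The sketch below illustrates the general idea of a bound-based uniform quantizer whose clipping range is chosen by a coarse search, with a different search strategy for roughly symmetric data than for asymmetric, long-tailed data. It is not the authors' implementation: the symmetry test and its 0.2 threshold, the linear search grid, the MSE objective, and the helper names (`fake_quant`, `search_bounds`, `mse`) are all assumptions made for illustration. In 2DQuant the coarse bounds are subsequently refined by DQC, which is not shown here.

```python
def fake_quant(x, lower, upper, bits):
    """Uniform fake quantization: clip x to [lower, upper], round to one of
    2**bits levels, then map the integer codes back to floating point."""
    if upper <= lower:
        raise ValueError("upper bound must exceed lower bound")
    levels = 2 ** bits - 1
    scale = (upper - lower) / levels
    out = []
    for v in x:
        v = min(max(v, lower), upper)      # clip to the quantizer bounds
        q = round((v - lower) / scale)     # integer code in [0, levels]; Python rounds half to even
        out.append(q * scale + lower)      # de-quantize back to float
    return out


def mse(a, b):
    """Mean squared error between two equally long sequences."""
    return sum((p - q) ** 2 for p, q in zip(a, b)) / len(a)


def search_bounds(x, bits, steps=100):
    """Hypothetical DOBI-inspired coarse bound search.

    Roughly symmetric data: shrink one magnitude c and use bounds [-c, c].
    Asymmetric / long-tailed data: keep the minimum fixed and shrink only
    the upper bound. Returns the candidate with the lowest quantization MSE.
    """
    lo, hi = min(x), max(x)
    if lo == hi:
        return (lo, hi), 0.0               # constant input: nothing to search
    # Assumed symmetry heuristic: both signs present and similar magnitudes.
    symmetric = lo < 0 < hi and abs(abs(lo) - hi) / max(abs(lo), hi) < 0.2
    best, best_err = (lo, hi), float("inf")
    for i in range(1, steps + 1):
        frac = i / steps
        if symmetric:
            c = frac * max(abs(lo), hi)
            cand = (-c, c)
        else:
            cand = (lo, lo + frac * (hi - lo))
        err = mse(x, fake_quant(x, cand[0], cand[1], bits))
        if err < best_err:
            best, best_err = cand, err
    return best, best_err


if __name__ == "__main__":
    # Toy right-skewed "activations" with a long tail.
    acts = [i / 10 for i in range(10)] + [10.0]
    bounds, err = search_bounds(acts, bits=2)
    print("bounds:", bounds, "mse:", err)
```

Fixing the lower bound and shrinking only the upper bound lets the search trade clipping error on the long tail against rounding error in the dense part of a skewed distribution, while a symmetric search keeps the zero point centered for weight-like data.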

| HR | LR | SwinIR-light (FP) | DBDC+Pac | 2DQuant (ours) |
|---|---|---|---|---|

- Release code and pretrained models

- Datasets

- Models

- Training

- Testing

- Results

- Citation

- Acknowledgements

We achieved state-of-the-art performance. Detailed results can be found in the paper.


- quantitative comparisons in Table 3 (main paper)

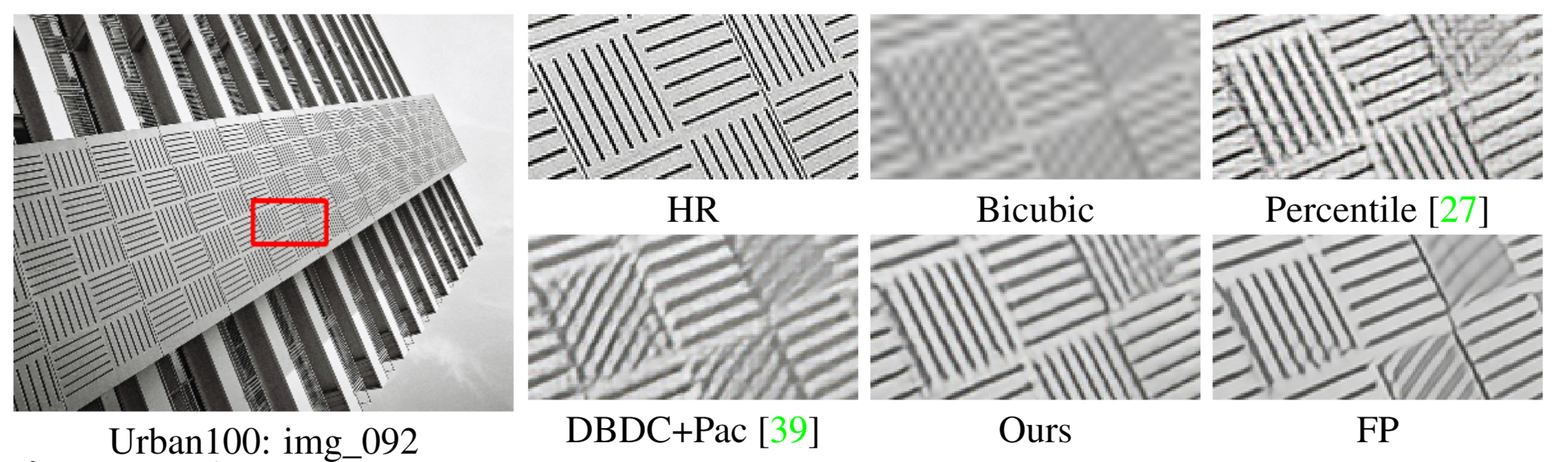

- visual comparison in Figure 1 (main paper)

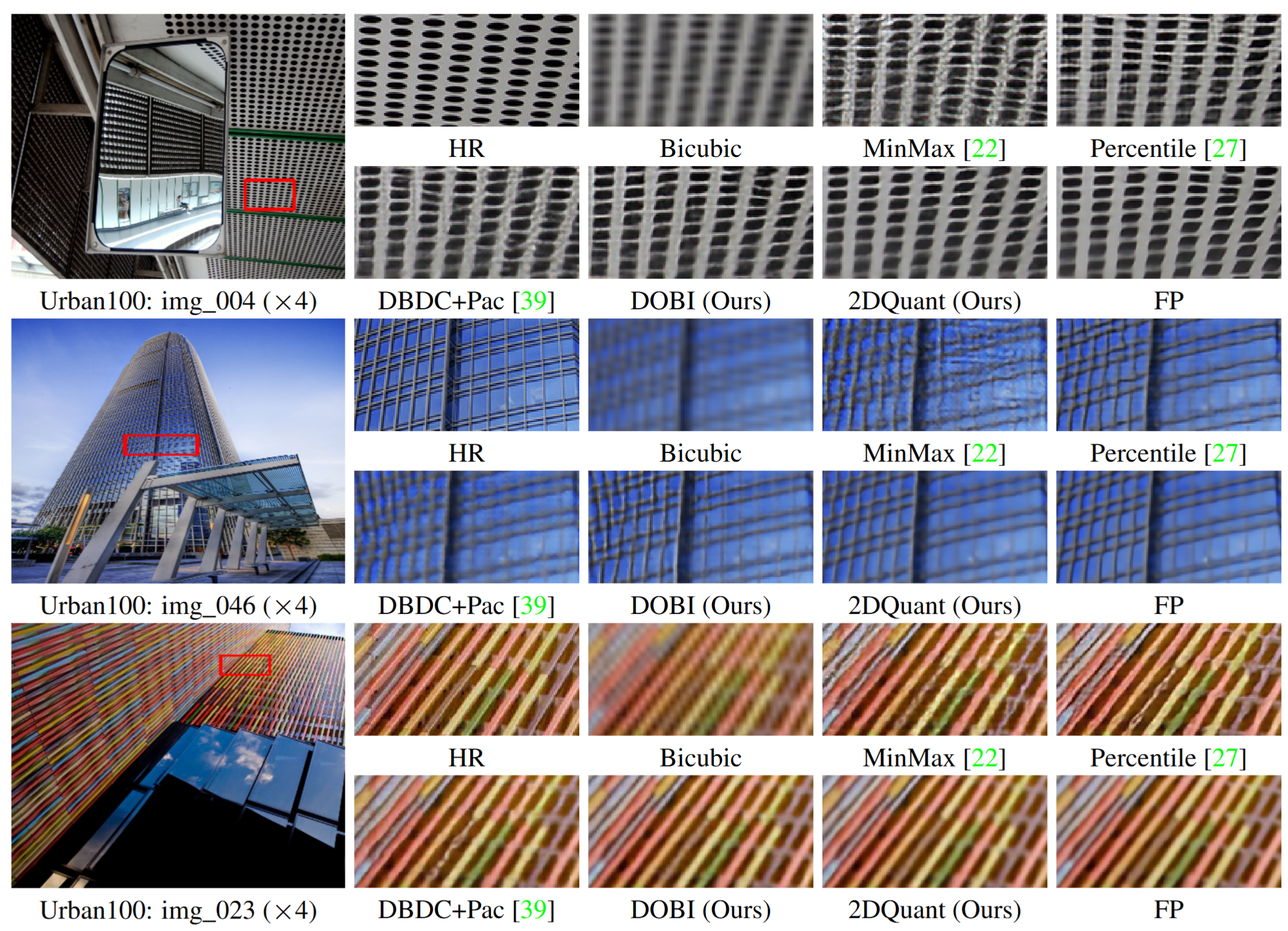

- visual comparison in Figure 6 (main paper)

- visual comparison in Figure 12 (supplemental material)
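The quantitative comparisons and the abstract report gains in PSNR (dB). As a hedged refresher, not code from this repository, PSNR between a reference image and a reconstruction is $10 \log_{10}(\mathrm{MAX}^2 / \mathrm{MSE})$. The sketch below works on flattened pixel lists; SR benchmarks commonly compute PSNR on the Y channel after cropping a border, which this sketch omits. The function name `psnr` is illustrative.

```python
import math


def psnr(ref, test, max_val=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized,
    flattened images: 10 * log10(max_val**2 / MSE)."""
    if len(ref) != len(test) or not ref:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((a - b) ** 2 for a, b in zip(ref, test)) / len(ref)
    if mse == 0:
        return float("inf")  # identical images
    return 10 * math.log10(max_val ** 2 / mse)
```

Because PSNR is logarithmic in the MSE, a gain of $d$ dB means the MSE shrank by a factor of $10^{d/10}$; a 4.52 dB gain therefore corresponds to roughly 2.83 times lower MSE.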

This code is built on BasicSR.