In this work, the TensorRT inference engine was studied as a tool for optimizing the inference of machine learning models on NVIDIA GPU platforms.

The tool was evaluated on an object detection task using the SSD machine learning model.

Experiments were conducted to evaluate the quality and performance of the optimized models.

The results were then used in a demo application: a simplified prototype of a possible implementation of part of an emergency braking system.

For more details, see the report.

The graph below shows the performance of the model, measured in FPS (frames per second). When the batch size is greater than one, FPS is calculated as the number of samples in the batch divided by the total time spent processing that batch. The values in the table show the network throughput, i.e. the number of images processed per second.

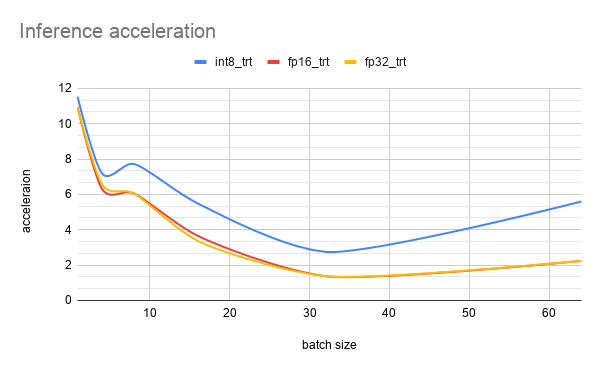
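A minimal sketch of the FPS metric described above: a batch of N samples processed in T seconds gives FPS = N / T. The helper names fps_from_timing and measure_fps are hypothetical, not functions from this project, and run_batch stands in for one forward pass of the model. Because CUDA kernels run asynchronously, a real GPU measurement would also call torch.cuda.synchronize() before reading the timer; that step is left out here to keep the sketch dependency-free.

```python
import time
from typing import Callable, Sequence


def fps_from_timing(batch_size: int, elapsed_s: float) -> float:
    """Samples per second: number of samples divided by processing time."""
    if batch_size <= 0 or elapsed_s <= 0:
        raise ValueError("batch_size and elapsed_s must be positive")
    return batch_size / elapsed_s


def measure_fps(run_batch: Callable[[Sequence], object], batch: Sequence,
                warmup: int = 3, iters: int = 10) -> float:
    """Average FPS of run_batch on a fixed batch (hypothetical helper).

    Warm-up calls are not timed, so one-off costs such as lazy
    initialization do not skew the result.
    """
    for _ in range(warmup):
        run_batch(batch)
    start = time.perf_counter()
    for _ in range(iters):
        run_batch(batch)
    elapsed = time.perf_counter() - start
    # Total samples processed over all timed iterations / total time.
    return fps_from_timing(len(batch) * iters, elapsed)


if __name__ == "__main__":
    # Toy stand-in for a model: sleeps about 10 ms per batch of 8 samples.
    fake_model = lambda b: time.sleep(0.01)
    print(f"{measure_fps(fake_model, list(range(8))):.1f} FPS")
```

Warm-up iterations are excluded from timing because the first calls to an inference engine often include one-time setup that would not occur in steady-state operation.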

The graph above shows absolute performance values. Sometimes it is also helpful to look at the relative speedup gained from optimizing inference. Speedup is measured relative to the FPS of the FP16 model running in plain PyTorch.
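The relative speedup is the ratio of a configuration's FPS to the FPS of the baseline (here, the FP16 model in plain PyTorch). A minimal sketch; the function name speedup_table and the configuration labels are hypothetical, and the numbers in the usage block are placeholders, not measurements from the report.

```python
from typing import Dict


def speedup_table(fps: Dict[str, float], baseline: str) -> Dict[str, float]:
    """Speedup of every configuration relative to the baseline configuration."""
    base = fps[baseline]
    if base <= 0:
        raise ValueError("baseline FPS must be positive")
    return {name: value / base for name, value in fps.items()}


if __name__ == "__main__":
    # Placeholder FPS values for illustration only.
    example = {"pytorch_fp16": 10.0, "tensorrt_fp16": 25.0}
    for name, ratio in speedup_table(example, "pytorch_fp16").items():
        print(f"{name}: {ratio:.2f}x")
```

By construction the baseline always maps to 1.0, so values above 1.0 indicate a faster configuration.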

The graphs show that the greatest speedup is achieved at small batch sizes and reaches its maximum at a batch size of one. This is especially useful for real-time applications, where data arrives sequentially and must be processed immediately. It also makes it possible to run relatively "heavy" models on embedded modules (NVIDIA Jetson AGX Xavier, NVIDIA Jetson Nano) within a reasonable time.

- data - directory that contains helper files for the project
- src - project source code
  - models - directory that contains the implementation of machine learning models
  - utils - helper functionality for the project
  - benchmarking.py - script for comparative analysis of model prediction accuracy
  - conversion_tensorrt.py - script for automatic model conversion from PyTorch to TensorRT
  - inference.py - script that launches the demo application
- conf/config.yaml - configuration file with launch parameters

To run the demo application, set the following parameters in the config.yaml file:

- weights_path - path to the weights of the trained model
- video_path - path to the video on which the application will run
- use_fp16_mode - (OPTIONAL) set to false if using FP32 weights
- use_tensorrt - (OPTIONAL) set to true if using weights converted for TensorRT
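Before launching, it can help to check that the required parameters are present. A minimal sketch, assuming config.yaml has already been parsed into a Python dict (YAML true/false become Python bools when parsed) and that the optional flags default to FP16 weights in plain PyTorch, as the descriptions above suggest. The function check_demo_config and the example paths are hypothetical, not part of the project.

```python
from typing import Any, Dict

# Hypothetical defaults inferred from this README: FP16 weights, plain PyTorch.
DEMO_DEFAULTS: Dict[str, bool] = {"use_fp16_mode": True, "use_tensorrt": False}
DEMO_REQUIRED = ("weights_path", "video_path")


def check_demo_config(cfg: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of cfg with optional flags filled in; raise if incomplete."""
    missing = [key for key in DEMO_REQUIRED if not cfg.get(key)]
    if missing:
        raise KeyError(f"missing required config keys: {missing}")
    merged = {**DEMO_DEFAULTS, **cfg}
    for flag in DEMO_DEFAULTS:
        # Flags must be real booleans, not strings such as "yes".
        if not isinstance(merged[flag], bool):
            raise TypeError(f"{flag} must be true or false")
    return merged


if __name__ == "__main__":
    # Placeholder paths for illustration only.
    print(check_demo_config({"weights_path": "weights/ssd_fp16.pth",
                             "video_path": "data/road.mp4"}))
```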

Then enter the following command in the terminal:

python src/inference.py

To start benchmarking, set the following parameters in the config.yaml file:

- weights_path - path to the weights of the trained model
- coco_data_path - path to the directory where the COCO 2017 Dataset is located
- use_fp16_mode - (OPTIONAL) set to false if using FP32 weights
- use_tensorrt - (OPTIONAL) set to true if using weights converted for TensorRT
- eval_batch_size - (OPTIONAL) batch size used during evaluation
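The eval_batch_size parameter controls how many images are passed through the model at once. A minimal sketch of how a list of samples could be split into batches of that size, with the last batch possibly smaller; iter_batches is a hypothetical helper, not the project's actual data loader.

```python
from typing import Iterator, List, Sequence, TypeVar

T = TypeVar("T")


def iter_batches(samples: Sequence[T], batch_size: int) -> Iterator[List[T]]:
    """Yield consecutive batches of at most batch_size samples."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    for start in range(0, len(samples), batch_size):
        yield list(samples[start:start + batch_size])


if __name__ == "__main__":
    image_ids = list(range(10))  # stand-in for COCO image ids
    for batch in iter_batches(image_ids, batch_size=4):
        print(batch)  # prints [0, 1, 2, 3], then [4, 5, 6, 7], then [8, 9]
```

A smaller final batch is one reason per-batch FPS values can differ slightly from the steady-state figure.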

Then enter the following command in the terminal:

python src/benchmarking.py

The project uses the following libraries:

- PyTorch

- OpenCV

- TensorRT

- torch2trt

The following dependencies are required:

- CUDA version: 10.2

- NVIDIA driver version: 440

- cuDNN version: 7.6.5

- Install CUDA and the NVIDIA driver using the .deb package. To do this, follow the instructions.

- Install cuDNN from the .tar archive. To do this, follow the link, select the desired version (Download cuDNN v7.6.5 (November 18th, 2019), for CUDA 10.2) and download the .tar archive (cuDNN Library for Linux (x86)).

- Then follow the installation instructions (Tar File Installation):

tar -xzvf cudnn-x.x-linux-x64-v8.x.x.x.tgz

sudo cp cuda/include/cudnn*.h /usr/local/cuda/include

sudo cp cuda/lib64/libcudnn* /usr/local/cuda/lib64

sudo chmod a+r /usr/local/cuda/include/cudnn*.h /usr/local/cuda/lib64/libcudnn*

Anaconda was used to set up the Python environment.

- First, create a new environment with Python 3.7:

conda create -n python37-cuda102 python=3.7 anaconda

conda activate python37-cuda102

- Install pip in this environment:

conda install -n python37-cuda102 -c anaconda pip

- Install PyCUDA:

pip install 'pycuda>=2019.1.1'

- Install the onnx package:

pip install onnx==1.6.0

- Next, install PyTorch (version >= 1.5.0) for the desired CUDA version.
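The version requirements above (PyTorch >= 1.5.0, PyCUDA >= 2019.1.1) can be checked programmatically. A minimal standard-library sketch that compares dotted release numbers; it drops local suffixes such as +cu102 and any pre-release tags, so it is a simplification (the packaging library's version module handles the general case). The helper names are hypothetical.

```python
import re
from typing import Tuple


def version_tuple(version: str) -> Tuple[int, ...]:
    """Turn '1.5.0+cu102' into (1, 5, 0); non-numeric tails are dropped."""
    release = version.split("+")[0]
    parts = []
    for piece in release.split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def meets_minimum(installed: str, minimum: str) -> bool:
    """True if installed >= minimum, comparing release numbers only."""
    a, b = version_tuple(installed), version_tuple(minimum)
    # Pad with zeros so that '1.5' and '1.5.0' compare as equal.
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


if __name__ == "__main__":
    try:
        import torch
        print(torch.__version__, meets_minimum(torch.__version__, "1.5.0"))
    except ImportError:
        print("PyTorch is not installed in this environment")
```

Comparing integer tuples rather than raw strings matters: as strings, "1.10.0" sorts before "1.5.0", although it is the newer release.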

Once all the steps above are complete, install TensorRT (version 6.0.1.8). It must be installed from the .tar package so that it can be placed into the Anaconda environment created earlier.

To do this, follow the link and download TensorRT 6.0.1.8 GA for Ubuntu 16.04 and CUDA 10.2 tar package. Then follow the instructions from the Tar File Installation section:

- Unpack the archive into any convenient directory (use the name of the archive you actually downloaded):

tar xzvf TensorRT-7.2.1.6.Ubuntu-16.04.x86_64-gnu.cuda-10.2.cudnn8.0.tar.gz

- Add the absolute path of the TensorRT lib directory to LD_LIBRARY_PATH:

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:<directory where the archive was unpacked>/TensorRT-6.0.1.8/lib

- Install the TensorRT Python wheel (each cd path in these steps is relative to the directory where the archive was unpacked):

cd TensorRT-${version}/python

pip install tensorrt-6.0.1.8-cp37-none-linux_x86_64.whl

- Install the UFF wheel:

cd TensorRT-${version}/uff

pip install uff-0.6.9-py2.py3-none-any.whl

- Install the graphsurgeon wheel:

cd TensorRT-${version}/graphsurgeon

pip install graphsurgeon-0.4.5-py2.py3-none-any.whl

- Install the onnx-graphsurgeon wheel:

cd TensorRT-${version}/onnx_graphsurgeon

pip install onnx_graphsurgeon-0.2.6-py2.py3-none-any.whl
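The export of LD_LIBRARY_PATH only lasts for the current shell session; to make it persistent, the line is commonly added to ~/.bashrc. A minimal sketch for checking from Python whether the TensorRT lib directory is on the path; the function on_library_path and the example directory /opt/TensorRT-6.0.1.8/lib are hypothetical placeholders for wherever the archive was unpacked.

```python
import os
from typing import Mapping, Optional


def on_library_path(lib_dir: str,
                    environ: Optional[Mapping[str, str]] = None) -> bool:
    """Check whether lib_dir is listed in LD_LIBRARY_PATH (colon-separated)."""
    env = os.environ if environ is None else environ
    target = os.path.normpath(os.path.abspath(lib_dir))
    for entry in env.get("LD_LIBRARY_PATH", "").split(":"):
        # Empty entries come from leading, trailing or doubled colons; skip them.
        if entry and os.path.normpath(os.path.abspath(entry)) == target:
            return True
    return False


if __name__ == "__main__":
    # Placeholder: <directory where the archive was unpacked>/TensorRT-6.0.1.8/lib
    trt_lib = "/opt/TensorRT-6.0.1.8/lib"
    print(trt_lib, "is on LD_LIBRARY_PATH:", on_library_path(trt_lib))
```

Normalizing both sides means a trailing slash in either the variable or the argument does not cause a false negative.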

After completing all the steps, run the following command in the terminal:

python -c "import tensorrt as trt; print(trt.__version__)"

If everything went well, the TensorRT version is printed.
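The same idea extends to the other Python packages in this README. A minimal sketch using importlib that reports each module's __version__, "unknown" if the module has no such attribute, or None if the import fails. The module list is an assumption based on this README (cv2 is the import name of OpenCV), and torch2trt only becomes importable after the converter is installed.

```python
import importlib
from typing import Dict, Iterable, Optional

# Import names of the packages used in this README (assumed list).
DEFAULT_MODULES = ("torch", "cv2", "tensorrt", "pycuda", "onnx", "torch2trt")


def check_modules(names: Iterable[str] = DEFAULT_MODULES) -> Dict[str, Optional[str]]:
    """Map each module name to its version string, "unknown", or None."""
    report: Dict[str, Optional[str]] = {}
    for name in names:
        try:
            module = importlib.import_module(name)
        except (ImportError, OSError):
            # OSError covers native libraries that cannot be loaded.
            report[name] = None
            continue
        report[name] = str(getattr(module, "__version__", "unknown"))
    return report


if __name__ == "__main__":
    for name, version in check_modules().items():
        print(f"{name:10s} {version if version is not None else 'NOT FOUND'}")
```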

Once the steps above are complete, install the torch2trt converter in the Anaconda environment:

- Clone the repository https://github.com/NVIDIA-AI-IOT/torch2trt and run the following command from its root directory:

python setup.py install --plugins
