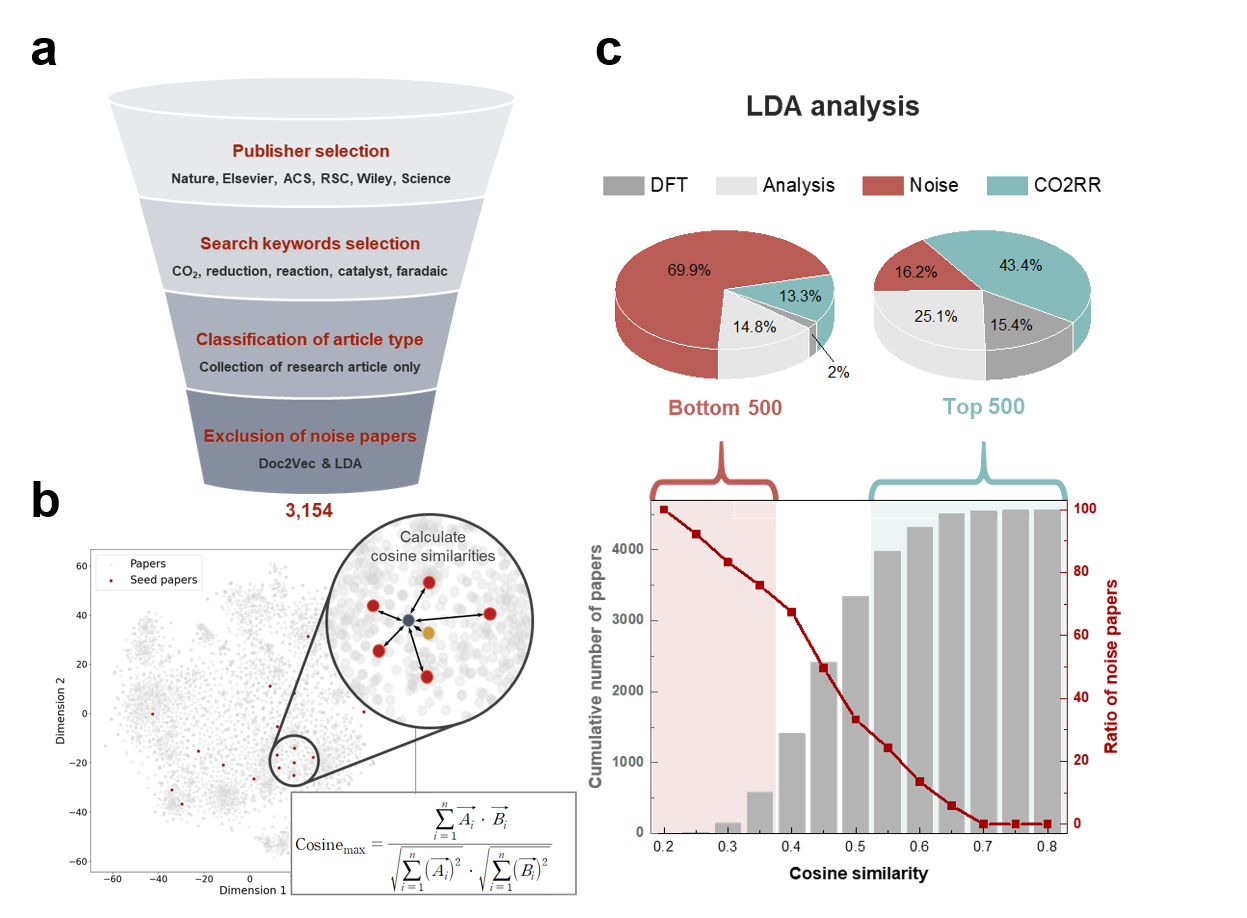

Two techniques are needed to automatically extract data from the literature and use the extracted data. The first is paper preprocessing, which selects only the papers of interest from a corpus. The second is named entity recognition (NER), which automatically extracts information from the text. This repository therefore contains the code for excluding noise papers from a corpus (paper preprocessing) and the CO2 reduction reaction (CO2RR) NER models (BERT, Bi-LSTM) that classify words in the literature into predefined classes, such as catalyst and product.

Using conda

    conda env create -f conda_requirements_paper.yaml

Using pip

    pip install -r requirements_paper.txt

    git clone https://github.com/KIST-CSRC/CO2RR_NER.git
    git lfs pull

Examples: Train Doc2vec

    from gensim.models.doc2vec import Doc2Vec, TaggedDocument
    from chemdataextractor.doc.text import Text
    from chemdataextractor.nlp.tokenize import ChemWordTokenizer
    import os
    import time
    import numpy as np

    from Paper_preprocess.Doc2vec import DocumentVector

    path_dir = 'your_corpus_path'
    documentvector = DocumentVector()
    documentvector.run(path_dir)
    print(time.strftime('%c', time.localtime(time.time())))

Examples: Train LDA

    from gensim.models.ldamodel import LdaModel
    from chemdataextractor.doc.text import Text
    from chemdataextractor.nlp.tokenize import ChemWordTokenizer
    import os
    import time
    import pickle
    from nltk.corpus import stopwords
    from gensim import corpora
    import pandas as pd
    import csv
    import pyLDAvis
    import pyLDAvis.gensim_models
    from gensim.models.coherencemodel import CoherenceModel
    import matplotlib.pyplot as plt
    import warnings
    import nltk
    nltk.download('averaged_perceptron_tagger')

    from Paper_preprocess.Lda import Lda

    path_dir = 'your_corpus_path'
    lda_obj = Lda()
    lda_obj.run(path_dir)
    print(time.strftime('%c', time.localtime(time.time())))

Examples: Calculate cosine similarity

- Load the doc2vec model trained on your corpus.

- Choose seed papers that exemplify the papers you want to collect.

- Calculate the cosine similarity of each paper to the seed papers using Calculate_cosine_similarity.py.

Finally, noise papers can be removed using the calculated cosine similarity and the LDA result. Please refer to this paper for details.
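The seed-paper filtering described above can be sketched in plain Python. This is only an illustration, not the contents of Calculate_cosine_similarity.py: the toy vectors stand in for Doc2vec document vectors, and the function names, the averaging over seed papers, and the 0.5 threshold are all assumptions made for the example.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # convention chosen here for zero vectors
    return dot / (norm_a * norm_b)


def seed_similarity(doc_vectors, seed_ids):
    """Mean cosine similarity of every paper to the seed papers (hypothetical helper)."""
    scores = {}
    for paper_id, vec in doc_vectors.items():
        sims = [cosine_similarity(vec, doc_vectors[s]) for s in seed_ids]
        scores[paper_id] = sum(sims) / len(sims)
    return scores


# Toy vectors standing in for Doc2vec document vectors (made-up values).
doc_vectors = {
    "seed_a": [1.0, 0.0, 0.0],
    "seed_b": [0.8, 0.6, 0.0],
    "on_topic": [0.9, 0.3, 0.0],
    "noise": [0.0, 0.0, 1.0],
}
scores = seed_similarity(doc_vectors, seed_ids=["seed_a", "seed_b"])

THRESHOLD = 0.5  # assumed cutoff, to be tuned on your own corpus
kept = sorted(p for p, s in scores.items() if s >= THRESHOLD)
print(kept)
```

In this toy setup the paper pointing in an unrelated direction scores zero and is dropped, while papers close to the seeds are kept. In practice the threshold would be chosen by inspecting the score distribution together with the LDA topics.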

Using conda

    conda env create -f conda_requirements_ner.yaml

Using pip

    pip install -r requirements_ner.txt

    git clone https://github.com/KIST-CSRC/CO2RR_NER.git
    git lfs pull

The MatBERT model retrained on CO2RR data is large, so it was uploaded using Git Large File Storage (LFS). The "git lfs pull" command is therefore required to download the actual model file.
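If "git lfs pull" is skipped, Git leaves a small text pointer in place of the model file, and that pointer starts with the line "version https://git-lfs.github.com/spec/v1". The sketch below is one way to detect this situation before loading the model. The helper names are hypothetical, and the default path assumes the best_model/best.pt location shown in the directory layout of this README.

```python
from pathlib import Path

# First line of every Git LFS pointer file.
LFS_POINTER_PREFIX = b"version https://git-lfs.github.com/spec/v1"


def is_lfs_pointer(path):
    """Return True if the file is still a Git LFS pointer stub, not the real content."""
    with open(path, "rb") as handle:
        head = handle.read(len(LFS_POINTER_PREFIX))
    return head == LFS_POINTER_PREFIX


def check_weights(path="BERT_CRF/best_model/best.pt"):
    """Report 'missing', 'pointer', or 'ok' for the model file (hypothetical helper)."""
    weights = Path(path)
    if not weights.exists():
        return "missing"
    return "pointer" if is_lfs_pointer(weights) else "ok"


print(check_weights())
```

A result of "pointer" means the repository was cloned without fetching the LFS objects, so "git lfs pull" still needs to be run.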

After setup, please make sure the directories are laid out as follows:

    BERT_CRF
    ├── best_model
    │   ├── .gitattributes
    │   └── best.pt
    ├── dataset
    │   └── examples of data (such as new_IOBES_bert_base_cased.csv)
    ├── models
    │   ├── base_ner_model.py
    │   ├── bert_model.py
    │   └── crf.py
    ├── predict
    │   └── dataset
    │       ├── ACS_CO2RR_00343.csv
    │       └── ACS_CO2RR_00343.json
    ├── utils
    │   ├── data.py
    │   ├── metrics.py
    │   └── post_process.py
    ├── predict.py
    └── run.py
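The layout above can be verified with a short script before training or prediction. This is a minimal sketch: the list of paths is copied from the tree, while the function name and the idea of running it from the repository root are assumptions for illustration.

```python
from pathlib import Path

# Relative paths taken from the directory tree above.
EXPECTED_PATHS = [
    "best_model/best.pt",
    "dataset",
    "models/base_ner_model.py",
    "models/bert_model.py",
    "models/crf.py",
    "predict/dataset",
    "utils/data.py",
    "utils/metrics.py",
    "utils/post_process.py",
    "predict.py",
    "run.py",
]


def missing_paths(root, expected=EXPECTED_PATHS):
    """Return the expected files or folders that do not exist under root."""
    root = Path(root)
    return [rel for rel in expected if not (root / rel).exists()]


# Example: check a BERT_CRF folder in the current working directory.
for rel in missing_paths("BERT_CRF"):
    print(f"missing: {rel}")
```

An empty result means every listed file and folder was found.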

Model pretraining and fine-tuning were done by adapting the code open-sourced by the MatBERT_NER authors to suit our work.

Examples: Re-train BERT model

The code below is part of the run.py file. Modify the parameters in this part as needed, then run run.py to start training.

    n_epochs = 100
    full_finetuning = True
    savename = time.strftime("%Y%m%d")

    device = "cuda:1"
    models = {'bert_base': 'bert-base-cased',
              'scibert': 'allenai/scibert_scivocab_cased',
              'matbert': './matbert-base-cased',
              'matscibert': 'm3rg-iitd/matscibert',
              'bert_large': 'bert-large-cased'}

    splits = {'_80_10_10': [0.8, 0.1, 0.1]}
    label_type = 'BERT'
    tag_type = 'IOBES'
    # 0: exclude structure entities.
    structure = '1'
    batch_size = 32
    k_fold = 10
    datafile = f"./dataset/new_{tag_type}_matbert_cased.csv"

    for alias, split in splits.items():
        # If you want to change the model you want to retrain, edit this part
        for model_name in ['matbert']:
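The tag_type = 'IOBES' setting refers to the IOBES tagging scheme, in which each token is marked as Inside, Outside, Beginning, or End of an entity, or as a Single-token entity. The sketch below shows how token-level entity spans map to IOBES tags. The function name, the span format, the example sentence, and the exact label strings are assumptions for illustration and may differ from how the dataset files in this repository were built.

```python
def spans_to_iobes(n_tokens, spans):
    """Convert (start, end_exclusive, label) token spans into IOBES tags."""
    tags = ["O"] * n_tokens  # tokens outside any entity
    for start, end, label in spans:
        if end - start == 1:
            tags[start] = f"S-{label}"      # single-token entity
        else:
            tags[start] = f"B-{label}"      # first token of the entity
            for i in range(start + 1, end - 1):
                tags[i] = f"I-{label}"      # interior tokens
            tags[end - 1] = f"E-{label}"    # last token of the entity
    return tags


# Made-up sentence with a two-token catalyst and a one-token product.
tokens = ["Cu", "nanoparticles", "reduce", "CO2", "to", "ethylene"]
spans = [(0, 2, "catalyst"), (5, 6, "product")]
tags = spans_to_iobes(len(tokens), spans)

for token, tag in zip(tokens, tags):
    print(f"{token}\t{tag}")
```

Compared with plain IOB tags, the explicit E- and S- tags mark where each entity ends.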

Examples: Predicting samples

- Prepare two data files, one in csv format and one in json format, like the example files in the "predict/dataset/.." path.

- Run predict.py

We also used a Bi-LSTM CRF as an NER model and compared its performance with that of the BERT CRF model. For more details, see the paper below. Download the Bi-LSTM CRF code here.


Please cite us if you are using our model in your research work: