KAIST CS479: Machine Learning for 3D Data (2023 Fall)

Programming Assignment 5

Instructor: Minhyuk Sung (mhsung [at] kaist.ac.kr)

TA: Yuseung Lee (phillip0701 [at] kaist.ac.kr)

Install the required packages listed in `requirements.txt`:

pip install -r requirements.txt

To reduce the high computational demands of diffusion models and improve their generation quality, Rombach et al. proposed Latent Diffusion, in which the diffusion process takes place in a latent space instead of the RGB pixel space. Building on this paper, the open-source text-to-image generation model Stable Diffusion was released in 2022 and has led to a wide variety of applications, some of which are presented in the sections below.
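To make the computational saving concrete, here is a back-of-the-envelope count of tensor elements. It assumes the autoencoder used by Stable Diffusion v1/v2, which downsamples by a factor of 8 and produces 4 latent channels; the helper name is illustrative only.

```python
def num_elements(height: int, width: int, channels: int) -> int:
    """Number of scalar values in a single image or latent tensor."""
    return height * width * channels

# Assumption: Stable Diffusion v1/v2 VAE with 8x spatial downsampling and 4 latent channels.
DOWNSAMPLE = 8
LATENT_CHANNELS = 4

pixel_elems = num_elements(512, 512, 3)  # RGB image
latent_elems = num_elements(512 // DOWNSAMPLE, 512 // DOWNSAMPLE, LATENT_CHANNELS)  # 64x64x4 latent
reduction = pixel_elems / latent_elems

print(f"pixel: {pixel_elems}, latent: {latent_elems}, reduction: {reduction:.0f}x")
# → pixel: 786432, latent: 16384, reduction: 48x
```

Under these assumptions, the denoising U-Net receives an input 48 times smaller than the RGB image it eventually produces.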

In this tutorial, we will get hands-on experience with the basic pipeline of Stable Diffusion and then look at its applications, including (i) Conditional Generation, (ii) Joint Diffusion, and (iii) Zero-shot 3D Generation.

[Introduction to Hugging Face & Diffusers]

Before moving on to the main tasks on Latent Diffusion, we will take a look at Hugging Face, an open-source hub for ML applications, and Diffusers, a handy library for pretrained diffusion models. We will load the pretrained Stable Diffusion model from Diffusers for the remaining tasks.

TODO:

Sign into Hugging Face and obtain the access token from `https://huggingface.co/settings/tokens`.

In the past couple of years, numerous diffusion-based Text-to-Image (T2I) models have emerged, such as Stable Diffusion, DALL-E 2, and Midjourney. In this section, we will do some simple hands-on practice with Stable Diffusion, which has been widely used in vision-based generative AI research projects.

Moreover, to take a quick look at the conditional generation capabilities of Latent Diffusion, we will generate images with ControlNet using various condition images such as depth maps and Canny edge maps.

Source code: task_1_1_Stable_Diffusion_intro.ipynb

TODO:

Load `StableDiffusionPipeline` and `DDIMScheduler` from Diffusers, and generate images with a text prompt.

Source code: task_1_2_Stable_Diffusion_detailed.ipynb

Unlike the code in 1.1, which imports the full `StableDiffusionPipeline`, the code for 1.2 imports the inner modules of Stable Diffusion separately and defines the sampling process explicitly.

Understanding the inner sampling code of Stable Diffusion helps us understand the generation process of diffusion models. For instance, the GIF below visualizes the decoded latents x_t at each timestep of the sampling process, along with the predicted x_0 at each timestep (see the DDIM paper for details).
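The predicted x_0 comes from inverting the forward noising process. Below is a minimal NumPy sketch of one deterministic DDIM step (eta = 0), assuming the cumulative products ᾱ (`alpha_bar_t`, `alpha_bar_prev`) are given. In Diffusers, `DDIMScheduler.step(...)` returns the same quantity as `pred_original_sample` alongside `prev_sample`; the function name `ddim_step` here is hypothetical.

```python
import numpy as np

def ddim_step(x_t, eps_pred, alpha_bar_t, alpha_bar_prev):
    """One deterministic DDIM update (eta = 0).

    Returns (x_prev, x0_pred): the latent at the previous (less noisy) timestep
    and the predicted clean latent x_0 used for the visualizations.
    """
    # Invert x_t = sqrt(a_t) * x_0 + sqrt(1 - a_t) * eps to estimate x_0
    x0_pred = (x_t - np.sqrt(1.0 - alpha_bar_t) * eps_pred) / np.sqrt(alpha_bar_t)
    # Move to the previous timestep, reusing the predicted noise as the noise direction
    x_prev = np.sqrt(alpha_bar_prev) * x0_pred + np.sqrt(1.0 - alpha_bar_prev) * eps_pred
    return x_prev, x0_pred

# Toy check with an "oracle" noise prediction (the true noise): x0_pred recovers x_0 exactly.
rng = np.random.default_rng(0)
x0 = rng.standard_normal((4, 8, 8))   # stand-in for a 4-channel latent
eps = rng.standard_normal((4, 8, 8))
alpha_bar_t, alpha_bar_prev = 0.5, 0.8
x_t = np.sqrt(alpha_bar_t) * x0 + np.sqrt(1.0 - alpha_bar_t) * eps
x_prev, x0_pred = ddim_step(x_t, eps, alpha_bar_t, alpha_bar_prev)
print(np.allclose(x0_pred, x0))  # → True
```

With a real U-Net the noise prediction is imperfect, so x0_pred is a blurry estimate early in sampling and sharpens as t decreases, which is what the x_0 visualizations show.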

TODO:

- Understand the sampling pipeline of Stable Diffusion.

- Visualize the intermediate and final outputs of the DDIM sampling process.

- Make a video of the Stable Diffusion sampling process, as shown below (you can use `make_video.ipynb` to convert images to a video).

Source code: task_1_3_ControlNet.ipynb

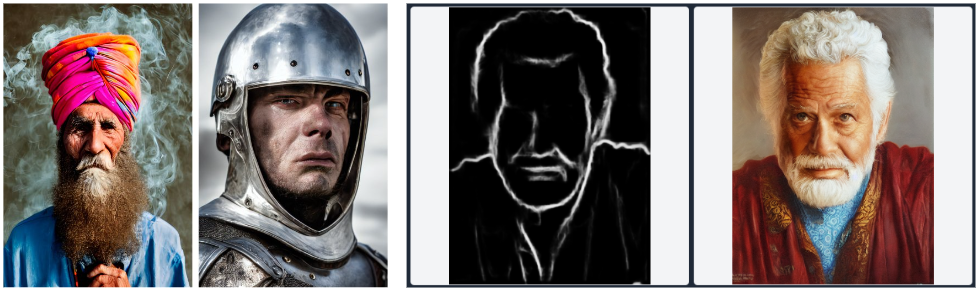

Diffusers provides pretrained ControlNet models that take various condition images as input (e.g., depth maps, Canny edge maps, segmentation masks) along with a text prompt. The source code includes code for generating Canny edge maps or depth maps from a source image and feeding them to a ControlNet model for conditional generation. Try conditional generation with your own image data, and feel free to explore the other types of conditions provided in this link.

TODO:

- Using your own image data, extract Canny edge maps and depth maps and test conditional generation with ControlNet.

- Load a ControlNet model trained for another type of condition (e.g. normal maps, scribbles) and test with your own data.

- (Optional) Train a ControlNet model on any single condition following the instructions in https://github.com/lllyasviel/ControlNet/blob/main/docs/train.md.

Source code: task_2_Joint_Diffusion.ipynb

Although Stable Diffusion has made a breakthrough in text-guided image generation, one of its limitations is that it struggles to generate images at other resolutions, such as long panorama images. Notably, the default resolution of the widely used Stable Diffusion model is fixed at 512x512. Recently, MultiDiffusion [Bar-Tal et al.] proposed a simple solution to this issue, enabling the generation of images at arbitrary resolutions through joint diffusion processes using a pretrained Stable Diffusion. Moreover, SyncDiffusion [Lee et al.] further extends this idea by guiding the diffusion processes toward a globally coherent output.

In this task, we will start from the simple Stable Diffusion pipeline from 1.2 and extend it to a joint diffusion pipeline based on the ideas of MultiDiffusion and SyncDiffusion. This will let us generate images of arbitrary resolution using a pretrained Stable Diffusion without any additional training.
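Joint diffusion runs Stable Diffusion on overlapping windows of a larger latent. The sketch below enumerates such windows. It assumes the 8x VAE downsampling (so a 512x512 window is 64x64 in latent space) and a stride of 8 latent pixels, which is an illustrative choice; `get_window_views` is a hypothetical helper, not necessarily the one used in `task_2_Joint_Diffusion.ipynb`.

```python
from typing import List, Tuple

def get_window_views(latent_h: int, latent_w: int, window: int = 64,
                     stride: int = 8) -> List[Tuple[int, int, int, int]]:
    """Return (h_start, h_end, w_start, w_end) for each sliding window.

    Assumes (latent_h - window) and (latent_w - window) are multiples of `stride`;
    otherwise the last rows/columns would not be covered by any window.
    """
    n_h = (latent_h - window) // stride + 1
    n_w = (latent_w - window) // stride + 1
    views = []
    for i in range(n_h):
        for j in range(n_w):
            h0, w0 = i * stride, j * stride
            views.append((h0, h0 + window, w0, w0 + window))
    return views

# A 512x2048 panorama corresponds to a 64x256 latent.
views = get_window_views(512 // 8, 2048 // 8)
print(len(views), views[0], views[-1])  # → 25 (0, 64, 0, 64) (0, 64, 192, 256)
```

A smaller stride gives more overlap (and smoother seams) at the cost of more U-Net evaluations per sampling step.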

First, we will modify the sampling pipeline of Stable Diffusion to enable the joint sampling of multiple diffusion processes, as proposed in MultiDiffusion [Bar-Tal et al.]. Fill in the parts of the source code marked with TODO. (We recommend implementing them in the order of the numbers next to each TODO.)

After implementing TODOs (1) to (3) below, you will be able to run MultiDiffusion with the given sampling code.

TODO:

(1) Update the current window latent with the denoised latent. (NOTE: Follow Eq. (7) in the MultiDiffusion paper.)

(2) Keep track of the overlapping regions of latents.

(3) For overlapping regions of latents, take the average of the latent values (latent averaging).
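Steps (2) and (3) amount to accumulating each window's denoised latent into a full-size buffer, counting how many windows cover each position, and dividing. This NumPy sketch corresponds to Eq. (7) of MultiDiffusion under the assumption of uniform per-window weights; the function name is hypothetical.

```python
import numpy as np

def average_overlapping_windows(latent_shape, views, window_latents):
    """Fuse per-window latents into one latent by averaging over overlaps.

    views: list of (h_start, h_end, w_start, w_end) in latent coordinates.
    window_latents: list of arrays with shape (..., h_end - h_start, w_end - w_start).
    """
    value = np.zeros(latent_shape)  # sum of window predictions per position
    count = np.zeros(latent_shape)  # number of windows covering each position
    for (h0, h1, w0, w1), z in zip(views, window_latents):
        value[..., h0:h1, w0:w1] += z
        count[..., h0:h1, w0:w1] += 1
    # Positions covered by no window keep the value 0 instead of dividing by zero.
    return value / np.maximum(count, 1)

# Toy example: three 8x8 windows with stride 4 over a (4, 8, 16) latent,
# filled with the constants 1, 2 and 3.
views = [(0, 8, 0, 8), (0, 8, 4, 12), (0, 8, 8, 16)]
window_latents = [np.full((4, 8, 8), c) for c in (1.0, 2.0, 3.0)]
fused = average_overlapping_windows((4, 8, 16), views, window_latents)
print(fused[0, 0])  # columns 0-3: 1.0, 4-7: 1.5, 8-11: 2.5, 12-15: 3.0
```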

After implementing up to (3), generate panorama images with your own text prompts (resolution HxW = 512x2048).

Now we will improve the joint diffusion pipeline implemented in 2.1 to generate globally coherent images, as proposed in SyncDiffusion [Lee et al.]. After successfully implementing 2.1, start filling in the code from TODO (4).

After implementing all TODOs, you can switch the sampling method from MultiDiffusion to SyncDiffusion by setting `use_syncdiffusion` to True.

TODO:

(4) Obtain the `foreseen denoised latent (x_0)` of the anchor window, and decode the latent to obtain the anchor image. (See the DDIM paper.)

(5) Update the current window latent by gradient descent on the perceptual similarity loss between the current window and the anchor window. (See the pseudocode in the SyncDiffusion paper.)
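The update in TODO (5) is a gradient step on the window latent x_t that pulls its foreseen x_0 toward the anchor's. The NumPy sketch below only illustrates the shape of that update under loudly simplifying assumptions: the VAE decoder is replaced by the identity, a mean-squared error stands in for the LPIPS perceptual loss, and the predicted noise is treated as a constant so the gradient can be written analytically. In the actual pipeline, the x_0 predictions are decoded to images, compared with a perceptual loss, and differentiated with autograd. All names here are hypothetical.

```python
import numpy as np

def foreseen_x0(x_t, eps_pred, alpha_bar_t):
    """DDIM prediction of the denoised latent x_0 from x_t."""
    return (x_t - np.sqrt(1.0 - alpha_bar_t) * eps_pred) / np.sqrt(alpha_bar_t)

def sync_step(x_t, eps_pred, anchor_x0, alpha_bar_t, step_size):
    """One simplified SyncDiffusion-style update of a window latent.

    Simplifications (assumptions, NOT the real method): identity decoder,
    MSE instead of LPIPS, and eps_pred held constant w.r.t. x_t.
    Returns the updated latent and the loss before the update.
    """
    diff = foreseen_x0(x_t, eps_pred, alpha_bar_t) - anchor_x0
    loss = np.mean(diff ** 2)
    # Chain rule: dL/dx0 = 2 * diff / N and dx0/dx_t = 1 / sqrt(alpha_bar_t)
    grad = 2.0 * diff / (diff.size * np.sqrt(alpha_bar_t))
    return x_t - step_size * grad, loss

rng = np.random.default_rng(0)
x_t = rng.standard_normal((4, 8, 8))
eps_pred = rng.standard_normal((4, 8, 8))
anchor_x0 = rng.standard_normal((4, 8, 8))  # foreseen x_0 of the anchor window
alpha_bar_t = 0.5

x_new, loss_before = sync_step(x_t, eps_pred, anchor_x0, alpha_bar_t, step_size=20.0)
loss_after = np.mean((foreseen_x0(x_new, eps_pred, alpha_bar_t) - anchor_x0) ** 2)
print(loss_after < loss_before)  # → True
```

After this step, the updated x_t would go through the usual denoising update, so synchronization only nudges each window toward the anchor rather than replacing its content.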

After implementing up to (5), compare the panoramas generated by MultiDiffusion and SyncDiffusion (using the same random seed).

[Optional] Add techniques that reduce sampling time by exploiting the trade-off between (i) sampling time and (ii) coherence of the generated image (e.g., enabling gradient descent only for certain timesteps).
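One simple way to trade coherence for speed is to run the costly gradient step only on a subset of timesteps. The sketch below uses a hypothetical schedule that syncs every `sync_freq` steps and only during the first `sync_until` steps, on the assumption that the early, high-noise steps matter most for the global layout; the parameter names and values are illustrative, not taken from the assignment code.

```python
def should_sync(step_idx: int, sync_freq: int = 1, sync_until: int = 20) -> bool:
    """Return True if the SyncDiffusion gradient step should run at this sampling step.

    step_idx counts sampling steps from 0 (highest noise) upward.
    """
    return step_idx < sync_until and step_idx % sync_freq == 0

num_steps = 50
sync_steps = [i for i in range(num_steps) if should_sync(i, sync_freq=2, sync_until=20)]
print(len(sync_steps), "of", num_steps, "steps use the gradient update")  # → 10 of 50 steps use the gradient update
```

Timing a few settings of `sync_freq` and `sync_until` and comparing the resulting panoramas is one way to report the trade-off.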

. task_3_DreamFusion/ (Task 3)

├── freqencoder/ <--- Implementation of frequency positional encodings

├── gridencoder/ <--- Implementation of grid based positional encodings

├── nerf/

│ ├── network.py <--- Original NeRF based network

│ ├── network_grid.py <--- Hash grid based network

│ ├── provider.py <--- Ray dataset

│ ├── sd.py <--- (TODO) Stable DreamFusion, where you need to fill in the SDS computation.

│ └── utils.py <--- utils for overall training.

├── raymarching/

├── encoding.py <--- Encoder based on positional encoding implementations.

├── main.py <--- main python script

└── optimizer.py

In this task, we will look into how a 2D diffusion model trained with a large-scale text-image dataset can be leveraged to generate 3D objects from text. DreamFusion [Poole et al.] proposed a novel method, called Score Distillation Sampling (SDS), to distill a 2D diffusion prior for text-to-3D synthesis.

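If we take a look at the objective function of diffusion models, it minimizes the difference between the random noise $\epsilon$ added to an image $x$ and the noise $\epsilon_\phi$ predicted by the model from the noisy image $x_t = \alpha_t x + \sigma_t \epsilon$, conditioned on a text prompt $y$:

$$\mathcal{L}_{\text{Diff}}(\phi, x) = \mathbb{E}_{t \sim \mathcal{U}(0, 1),\, \epsilon \sim \mathcal{N}(0, I)}\left[ w(t) \left\lVert \epsilon_\phi(x_t; y, t) - \epsilon \right\rVert_2^2 \right]$$

If the model is already trained well, it predicts the added noise accurately whenever $x$ looks like a sample from the training distribution, so a low loss indicates a plausible image.

SDS leverages this intuition to update 3D objects. Let's say we have a differentiable image generator $x = g(\theta)$ with parameters $\theta$. We want to optimize $\theta$ so that $x = g(\theta)$ looks like a sample from the frozen diffusion model. To do so, we can compute the gradient of the diffusion loss with respect to $\theta$ (constant factors are absorbed into $w(t)$):

$$\nabla_\theta \mathcal{L}_{\text{Diff}}(\phi, x = g(\theta)) = \mathbb{E}_{t, \epsilon}\left[ w(t) \left( \epsilon_\phi(x_t; y, t) - \epsilon \right) \frac{\partial \epsilon_\phi(x_t; y, t)}{\partial x_t} \frac{\partial x}{\partial \theta} \right]$$

However, in practice, the U-Net Jacobian term $\frac{\partial \epsilon_\phi(x_t; y, t)}{\partial x_t}$ is expensive to compute (it requires backpropagating through the diffusion U-Net) and is poorly conditioned at small noise levels. DreamFusion therefore omits it, which gives the SDS gradient:

$$\nabla_\theta \mathcal{L}_{\text{SDS}}(\phi, x = g(\theta)) \triangleq \mathbb{E}_{t, \epsilon}\left[ w(t) \left( \epsilon_\phi(x_t; y, t) - \epsilon \right) \frac{\partial x}{\partial \theta} \right]$$

By setting $g$ to a differentiable NeRF renderer, with $\theta$ the NeRF parameters and $x$ an image rendered from a randomly sampled camera pose, we can update the 3D scene with this gradient. (In Stable-DreamFusion, the rendered image is first encoded into the latent space of Stable Diffusion, so $x$ is a latent.)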

In short, we can synthesize NeRF scenes using a pre-trained 2D text-to-image diffusion model by SDS.
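The SDS gradient translates to only a few lines of code. The NumPy sketch below noises a latent, computes w(t)(ε_φ − ε), and wraps it in a surrogate loss whose gradient with respect to the latent equals the SDS gradient, so an autograd framework could backpropagate it through the renderer (the ∂x/∂θ factor). Assumptions: α_t = √ᾱ_t and σ_t = √(1 − ᾱ_t), w(t) = 1 − ᾱ_t (one common choice), `toy_noise_predictor` is a hypothetical stand-in for the frozen U-Net (which in practice would also apply classifier-free guidance), and the function names do not match `sd.py`.

```python
import numpy as np

def sds_grad(eps_pred, eps, alpha_bar_t):
    """SDS gradient w.r.t. the (latent) image: w(t) * (eps_pred - eps), U-Net Jacobian omitted."""
    w = 1.0 - alpha_bar_t  # assumed weighting w(t)
    return w * (eps_pred - eps)

def surrogate_loss(latents, target):
    """0.5 * ||latents - target||^2 with `target` held constant.

    Its gradient w.r.t. latents is (latents - target); choosing
    target = latents - grad therefore reproduces the SDS gradient.
    In PyTorch, `target` would be detached from the graph.
    """
    return 0.5 * np.sum((latents - target) ** 2)

def toy_noise_predictor(x_t):
    """Hypothetical stand-in for the frozen text-conditioned U-Net."""
    return 0.5 * x_t

rng = np.random.default_rng(0)
latents = rng.standard_normal((1, 4, 8, 8))  # stand-in for the encoded NeRF rendering
alpha_bar_t = 0.7                            # alpha-bar at a randomly sampled timestep
eps = rng.standard_normal(latents.shape)
x_t = np.sqrt(alpha_bar_t) * latents + np.sqrt(1.0 - alpha_bar_t) * eps  # forward noising
grad = sds_grad(toy_noise_predictor(x_t), eps, alpha_bar_t)
target = latents - grad

# Finite-difference check that d(surrogate_loss)/d(latents) equals the SDS gradient.
h = 1e-4
idx = (0, 1, 2, 3)
bumped = latents.copy()
bumped[idx] += h
dropped = latents.copy()
dropped[idx] -= h
fd = (surrogate_loss(bumped, target) - surrogate_loss(dropped, target)) / (2 * h)
print(np.isclose(fd, grad[idx]))  # → True
```

The surrogate-loss design lets the optimizer treat SDS like any other loss: its value is not meaningful on its own, but its gradient is exactly the SDS update.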

TODO:

- Fill in a function to compute the SDS loss in `sd.py` and generate 3D objects from text.

Generate scenes from text prompts, and report 360-degree rendering videos.

These are some example prompts you can try:

- "A hamburger"

- "A squirrel reading a book"

- "A bunny sitting on top of a stack of pancakes"

If you are interested in this topic, we encourage you to check out the materials below.

- Stable Diffusion Clearly Explained!

- Hugging Face Stable Diffusion v2.1

- Awesome-Diffusion-Models

- ControlNet Github Repo

- High-Resolution Image Synthesis with Latent Diffusion Models

- Imagen: Photorealistic Text-to-Image Diffusion Models with Deep Language Understanding

- ControlNet: Adding Conditional Control to Text-to-Image Diffusion Models

- GLIGEN: Open-Set Grounded Text-to-Image Generation

- Prompt-to-Prompt Image Editing with Cross Attention Control

- Diffusion Self-Guidance for Controllable Image Generation

- EDICT: Exact Diffusion Inversion via Coupled Transformations

- MultiDiffusion: Fusing Diffusion Paths for Controlled Image Generation

- SyncDiffusion: Coherent Montage via Synchronized Joint Diffusions

- MVDiffusion: Enabling Holistic Multi-view Image Generation with Correspondence-Aware Diffusion

- DreamFusion: Text-to-3D using 2D Diffusion

- Score Jacobian Chaining: Lifting Pretrained 2D Diffusion Models for 3D Generation

- ProlificDreamer: High-Fidelity and Diverse Text-to-3D Generation with Variational Score Distillation

- TEXTure: Text-Guided Texturing of 3D Shapes

- Text2Tex: Text-driven Texture Synthesis via Diffusion Models

The code for Task 3 (DreamFusion) is heavily based on the implementation from Stable-DreamFusion.