This assignment examines the differences and similarities between electronic and acoustic singer-songwriters. Acoustic and electronic music can seem worlds apart, but the existence of both acoustic and electronic singer-songwriters suggests they are closer than expected. I define acoustic singer-songwriters as one or at most two people who sing and accompany themselves exclusively on one acoustic instrument, mainly guitar or piano. Examples are Passenger and Ed Sheeran. Electronic singer-songwriters, to me, are people who produce their own music, which may include (but is not limited to) synthesizers, loop stations or drum machines, and who are able to perform it live. The music must contain roughly the same amount of singing as the acoustic songs. Examples are Chet Faker and JAIN.

The question examined in this report is: apart from the instruments used, what are the major differences between the music of acoustic and electronic singer-songwriters?

Two corpora are compared with each other, both of them Spotify playlists. For the acoustic singer-songwriters, a playlist generated by Spotify called "Acoustic Singer-songwriters" was chosen. The playlist was checked manually and all the songs satisfied the criteria stated above, so this corpus represents the acoustic singer-songwriters well. The playlist for the electronic singer-songwriters was made by a Spotify user and supplemented by me. It contains many songs that fulfill the criteria above, but also a number of songs that belong to the Indie genre, with lots of electric guitars but no electronic instruments. This playlist is therefore not yet fully representative of the corpus this research needs, but I plan to adjust it manually.

A very typical song in the acoustic playlist is 'Everything'll be Alright (Will's Lullaby)' by Joshua Radin. Its most remarkable value is danceability (0.53). The song is very soft and sweet and doesn't sound very danceable to me, so 0.53 is higher than I would expect. However, 0.5 seems to be a common middle value for danceability, and a lot of songs score around it. The value for acousticness is 0.693. Since this is a very acoustic-sounding song, I would've expected a higher acousticness, but compared to the other songs, 0.693 is already fairly high: the mean acousticness of the acoustic playlist is 0.646 (SD = 0.268), so the song is above average.
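To put "above average" into perspective, the song's acousticness can be expressed as a z-score, i.e. its distance from the playlist mean in standard deviations. The snippet below is a minimal sketch that uses only the three numbers reported above; the function and variable names are illustrative and not part of the original analysis.

```python
def z_score(value: float, mean: float, sd: float) -> float:
    """Distance of a value from the mean, measured in standard deviations."""
    return (value - mean) / sd


# Values reported above for the acoustic playlist.
song_acousticness = 0.693
playlist_mean = 0.646
playlist_sd = 0.268

z = z_score(song_acousticness, playlist_mean, playlist_sd)
print(f"z = {z:.2f}")  # z = 0.18
```

With a z-score of about 0.18, the song sits only slightly above the playlist mean, which fits its description as a typical song rather than an extreme one.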

In the electronic playlist, a typical song is 'Dynabeat' by JAIN. The song is a lot more danceable than 'Everything'll be Alright (Will's Lullaby)', and that shows in the analysis (0.911). The acousticness is very low (0.0787). The instrumentalness is 0, which would imply that there are no instrumental parts in the song, but there is an instrumental solo in the bridge, so this analysis is not entirely correct.

Both songs have an energy of around 0.5. That seems odd, because 'Makeba' sounds more energised. The tempo might explain it. According to Spotify, the tempo of 'Everything'll be Alright (Will's Lullaby)' is 171 BPM, although it sounds much slower than that. The tempo of 'Makeba' is 116 BPM. It seems plausible that the tempo contributes to the energy value of a song.

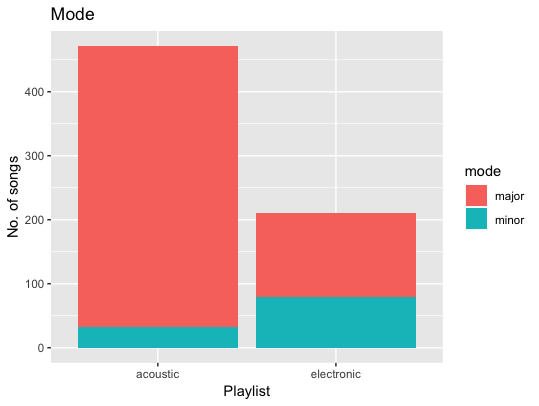

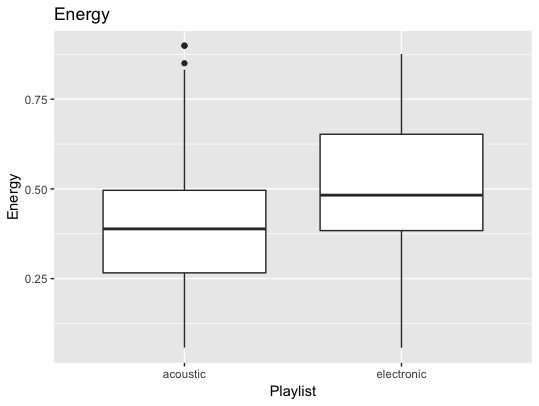

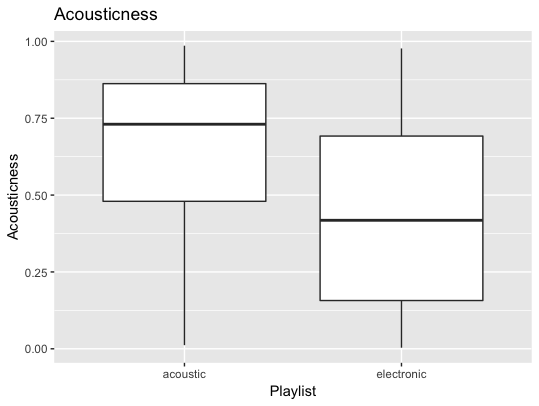

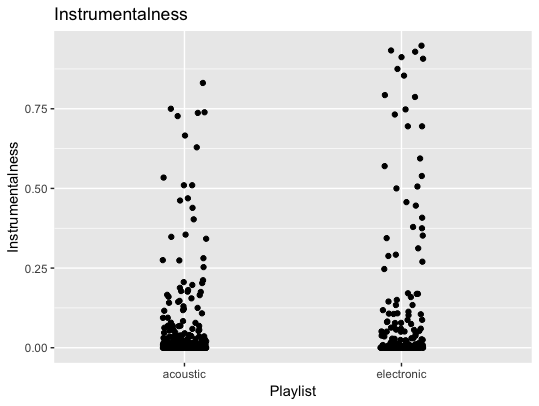

The biggest differences in the quick analysis are in mode, energy, acousticness and instrumentalness.

The acoustic playlist has 33 songs in a minor key out of a total of 471 songs, which is about 7%. The electronic playlist has 83 minor songs out of a total of 218 songs. At 38%, that is a much larger share. I find this a very interesting difference. Some people find electronic music in general darker and harder to listen to, and a minor key fits that description. Of all the features, this difference in mode fits my research question best.
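The percentages above can be reproduced from the raw counts, and a rough check can show whether a gap of this size could plausibly be chance. The sketch below uses only the four counts from the text. The two-proportion z-test is an addition for illustration, not part of the original analysis, and it assumes the songs can be treated as independent samples, which is questionable for curated playlists.

```python
import math

# Counts reported above.
acoustic_minor, acoustic_total = 33, 471
electronic_minor, electronic_total = 83, 218

p_acoustic = acoustic_minor / acoustic_total
p_electronic = electronic_minor / electronic_total
print(f"acoustic:   {p_acoustic:.1%} minor")
print(f"electronic: {p_electronic:.1%} minor")
print(f"ratio:      {p_electronic / p_acoustic:.1f}x")

# Two-proportion z-test with a pooled proportion (illustrative assumption:
# songs are independent draws, which playlists do not guarantee).
pooled = (acoustic_minor + electronic_minor) / (acoustic_total + electronic_total)
standard_error = math.sqrt(
    pooled * (1 - pooled) * (1 / acoustic_total + 1 / electronic_total)
)
z_mode = (p_electronic - p_acoustic) / standard_error
print(f"z = {z_mode:.1f}")
```

The share of minor songs in the electronic playlist comes out at more than five times that of the acoustic playlist, and the resulting z value is far larger than the usual threshold of about 2, so under the stated assumption the difference is unlikely to be a sampling accident.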

The electronic playlist has more energy (mean = 0.527, SD = 0.180) than the acoustic playlist (mean = 0.392, SD = 0.166). The minimum energy of the electronic songs is 0.112, roughly twice as high as the minimum of the acoustic playlist (0.0577). Energy is an interesting aspect for the research question, especially in combination with the tempo of the playlists.

The next variable to be compared is acousticness. As expected, the acoustic playlist has a higher acousticness (mean = 0.646, SD = 0.268) than the electronic playlist (mean = 0.411, SD = 0.292). The difference is not as big as one would expect, though.
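One way to judge how big these differences are is to express them as a standardized effect size. The sketch below computes Cohen's d with a pooled standard deviation from the means and SDs reported above for energy and acousticness. It is an illustrative addition, and it assumes that the playlist sizes given in the mode comparison (471 acoustic and 218 electronic songs) also apply to these features.

```python
import math


def cohens_d(mean_a: float, sd_a: float, n_a: int,
             mean_b: float, sd_b: float, n_b: int) -> float:
    """Standardized mean difference (a minus b) using a pooled SD."""
    pooled_variance = (
        (n_a - 1) * sd_a ** 2 + (n_b - 1) * sd_b ** 2
    ) / (n_a + n_b - 2)
    return (mean_a - mean_b) / math.sqrt(pooled_variance)


# Assumed playlist sizes, taken from the mode comparison.
N_ACOUSTIC, N_ELECTRONIC = 471, 218

# Energy: electronic minus acoustic.
d_energy = cohens_d(0.527, 0.180, N_ELECTRONIC, 0.392, 0.166, N_ACOUSTIC)

# Acousticness: acoustic minus electronic.
d_acousticness = cohens_d(0.646, 0.268, N_ACOUSTIC, 0.411, 0.292, N_ELECTRONIC)

print(f"energy:       d = {d_energy:.2f}")
print(f"acousticness: d = {d_acousticness:.2f}")
```

Both values come out at roughly 0.8 pooled standard deviations, which is conventionally read as a large effect. So although the raw gap in acousticness looks modest, it is sizeable relative to the spread within each playlist.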

Lastly, the instrumentalness. It is clear that the electronic playlist has more instrumental parts in its songs. This could be because of the so-called 'dancebreaks' that occur in a lot of electronic songs. Acoustic songs tend to have fewer of these. There could be acoustic solos in acoustic songs, but I think they would be shorter than the dancebreaks.

In the acoustic playlist, the song 'Seize the Night' by Will Varley has the highest speechiness (0.2260). The song below it has 0.1370, and the gaps between the remaining songs are a lot smaller. The artist's voice sounds very raspy. Maybe this is interpreted as sounding more like speech than a smoother voice would be.

In the electronic playlist, the song 'My Bed' by Marbert Rocel has the highest danceability (0.976), but in my opinion the song is pretty mellow. It sounds happy, and you could dance to it, but there are certainly a lot of songs that sound more danceable to me.

The song 'Monkeys in my Head' is the most danceable song in the acoustic playlist (0.869) and sounds about the same as 'My Bed'.

I think the outliers should stay in the corpus, because they sound like representative songs.

The playlists are more alike than different, but there are some interesting features to explore, in particular the differences in mode and energy. I would've expected a larger difference in danceability, but maybe that will emerge once the electronic playlist is more representative.