This repository contains the code for Contextual Transformer Networks for Visual Recognition (CoTNet).

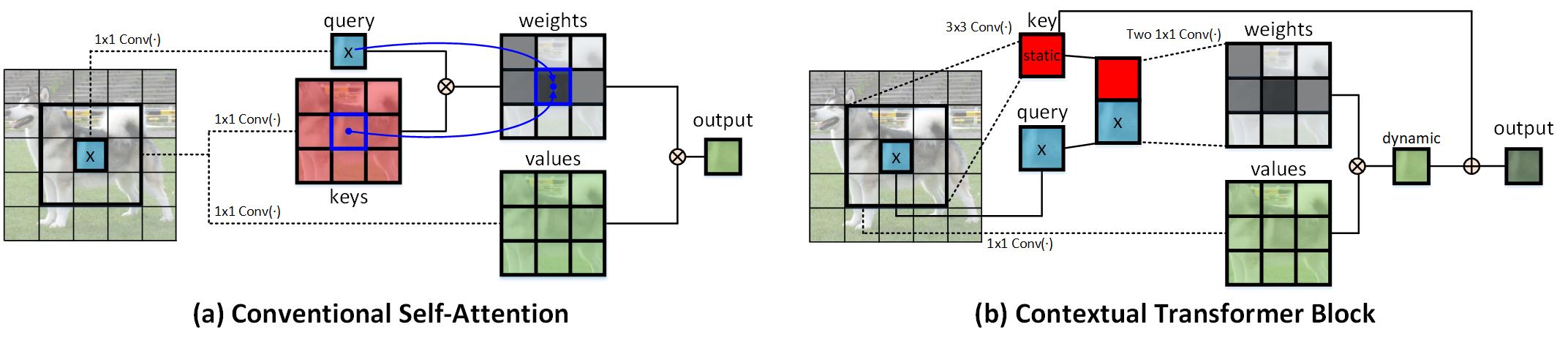

CoT (Contextual Transformer) is a unified self-attention building block that acts as an alternative to standard convolutions in ConvNets. As a result, convolutions can be replaced with their CoT counterparts to strengthen vision backbones with contextualized self-attention.
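The replacement idea can be illustrated with a minimal PyTorch sketch. Everything named here is hypothetical: `PlaceholderCoT` is a shape-preserving stand-in and not the CoT layer from this repository, and `swap_3x3_convs` is an illustrative helper. Restricting the swap to 3x3, stride-1, ungrouped convolutions with equal input and output channels is an assumption made to keep the example simple.

```python
import torch
from torch import nn


class PlaceholderCoT(nn.Module):
    """Hypothetical stand-in for a CoT block (NOT the repository's layer).

    It only keeps the (N, C, H, W) shape unchanged so the swap is runnable.
    """

    def __init__(self, dim, kernel_size=3):
        super().__init__()
        # A depthwise conv serves as a dummy operation over local context.
        self.context = nn.Conv2d(
            dim, dim, kernel_size,
            padding=kernel_size // 2, groups=dim, bias=False,
        )

    def forward(self, x):
        return self.context(x)


def swap_3x3_convs(module, make_block):
    """Recursively replace eligible 3x3 convs; return how many were swapped."""
    replaced = 0
    for name, child in list(module.named_children()):
        eligible = (
            isinstance(child, nn.Conv2d)
            and child.kernel_size == (3, 3)
            and child.stride == (1, 1)
            and child.groups == 1
            and child.in_channels == child.out_channels
        )
        if eligible:
            # Assumes the original conv used "same" padding (padding=1).
            setattr(module, name, make_block(child.in_channels))
            replaced += 1
        else:
            replaced += swap_3x3_convs(child, make_block)
    return replaced
```

In practice, `make_block` would construct the actual CoT layer shipped with this repository instead of the placeholder.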

Ranked 1st in the Open World Image Classification Challenge @ CVPR 2021 (team name: VARMS).

The code is mainly based on timm.

- PyTorch 1.8.0+

- Python 3.7

- CUDA 10.1+

- CuPy
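The requirements above can be checked before training with a short script. This is a sketch, not part of the repository: the helper names (`parse_version`, `meets_minimum`, `collect_versions`, `check_environment`) are hypothetical, and treating Python 3.7 as a lower bound rather than an exact version is an assumption. Versions are compared as integer tuples so that, for example, `1.10.0` is correctly treated as newer than `1.8.0`.

```python
import importlib.util
import re
import sys

# Minimums from the requirements list; "python" as a lower bound is an assumption.
MINIMUMS = {"python": (3, 7), "torch": (1, 8, 0), "cuda": (10, 1)}


def parse_version(text):
    """Turn '1.8.0+cu101' into (1, 8, 0), ignoring any build suffix."""
    match = re.match(r"\d+(?:\.\d+)*", text.strip())
    if match is None:
        raise ValueError("no leading version number in %r" % text)
    return tuple(int(part) for part in match.group(0).split("."))


def meets_minimum(found, minimum):
    """Compare numerically, padding the shorter version with zeros."""
    have = parse_version(found)
    width = max(len(have), len(minimum))
    have += (0,) * (width - len(have))
    need = tuple(minimum) + (0,) * (width - len(minimum))
    return have >= need


def collect_versions():
    """Gather the version strings that are visible from this interpreter."""
    found = {"python": "%d.%d.%d" % sys.version_info[:3]}
    try:
        import torch
    except ImportError:
        return found
    found["torch"] = torch.__version__
    if torch.version.cuda is not None:  # None on CPU-only builds
        found["cuda"] = torch.version.cuda
    return found


def check_environment(found):
    """Return a list of human-readable problems (empty if all is fine)."""
    problems = []
    for name, minimum in MINIMUMS.items():
        if name not in found:
            problems.append(name + ": not found")
        elif not meets_minimum(found[name], minimum):
            needed = ".".join(str(n) for n in minimum)
            problems.append("%s: found %s, need %s+" % (name, found[name], needed))
    return problems


if __name__ == "__main__":
    issues = check_environment(collect_versions())
    if importlib.util.find_spec("cupy") is None:
        issues.append("cupy: not found")
    print("\n".join(issues) if issues else "environment looks OK")
```

The CUDA version reported here is the one PyTorch was built against, which may differ from the toolkit installed on the system.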

git clone https://github.com/JDAI-CV/CoTNet.git

First, download the ImageNet dataset. To train CoTNet-50 on ImageNet on a single node with 8 GPUs for 350 epochs, run:

python -m torch.distributed.launch --nproc_per_node=8 train.py --folder ./experiments/cot_experiments/CoTNet-50-350epoch

The training scripts for CoTNet (e.g., CoTNet-50) can be found in the cot_experiments folder.
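When the launch command needs to be scripted, it can be assembled as an argument list. The helper below is a hypothetical sketch, not part of the repository; it uses only the flags shown in the command above. Whether an experiment configuration still behaves as intended with a GPU count other than 8 is not covered here.

```python
import shlex


def build_launch_command(folder, nproc_per_node=8, python="python"):
    """Return the training launch command as a list of arguments."""
    if nproc_per_node < 1:
        raise ValueError("nproc_per_node must be at least 1")
    return [
        python, "-m", "torch.distributed.launch",
        "--nproc_per_node=%d" % nproc_per_node,
        "train.py", "--folder", folder,
    ]


if __name__ == "__main__":
    cmd = build_launch_command("./experiments/cot_experiments/CoTNet-50-350epoch")
    # Print a shell-safe version of the command for inspection.
    print(" ".join(shlex.quote(arg) for arg in cmd))
```

The returned list can be passed to `subprocess.run(cmd, check=True)` from the repository root, which avoids shell quoting issues if a folder path contains spaces.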

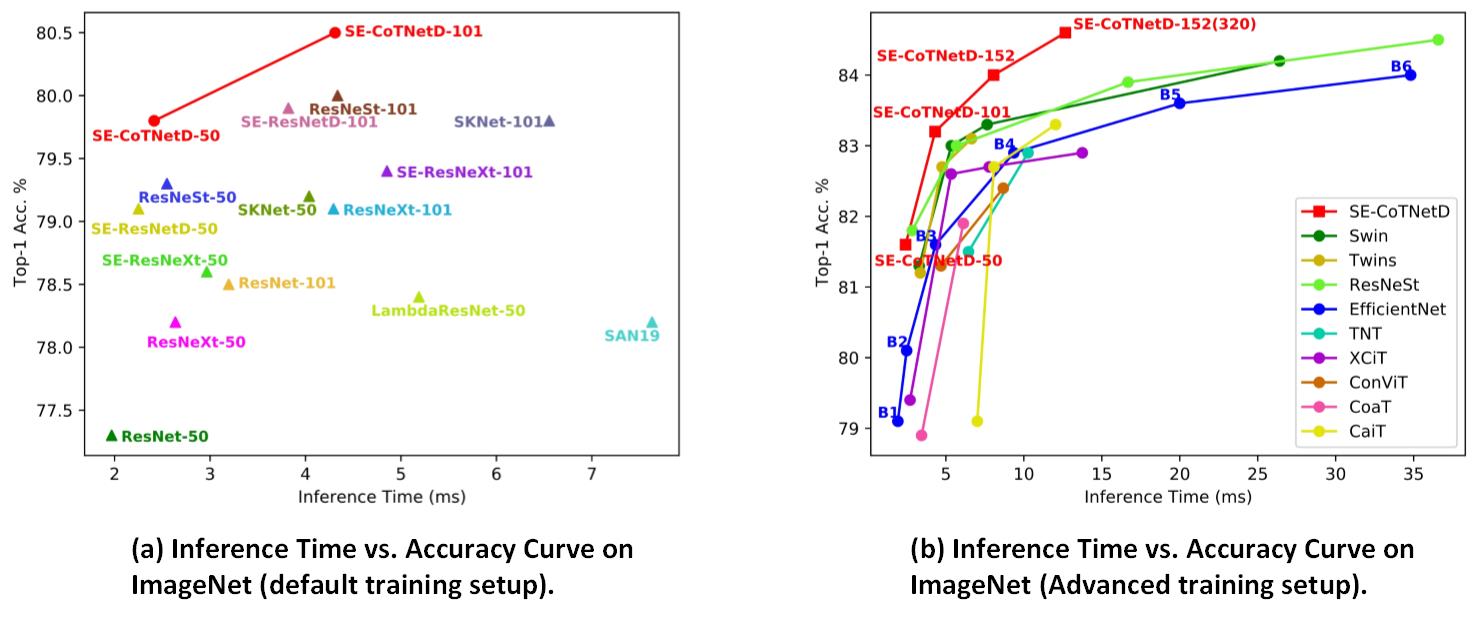

CoTNet models consistently obtain better top-1 accuracy with less inference time than other vision backbones across both default and advanced training setups. In short, CoTNet models offer a better trade-off between inference time and accuracy than existing vision backbones.

@article{cotnet,

title={Contextual Transformer Networks for Visual Recognition},

author={Li, Yehao and Yao, Ting and Pan, Yingwei and Mei, Tao},

year={2021}

}

Thanks to the contributors of timm and the awesome PyTorch team.