Now that you've seen a brief introduction to PCA, it's time to try implementing the algorithm on your own.

You will be able to:

- Perform PCA in Python and scikit-learn using the Iris dataset

- Measure the impact of PCA on the accuracy of classification algorithms

- Plot the decision boundary of different classification experiments to visually inspect their performance

To practice PCA, you'll take a look at the Iris dataset. Run the cell below to load it.

```python
from sklearn import datasets
import pandas as pd

iris = datasets.load_iris()
df = pd.DataFrame(iris.data, columns=iris.feature_names)
df['Target'] = iris.target
df.head()
```

| | sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) | Target |
|---|---|---|---|---|---|
| 0 | 5.1 | 3.5 | 1.4 | 0.2 | 0 |
| 1 | 4.9 | 3.0 | 1.4 | 0.2 | 0 |
| 2 | 4.7 | 3.2 | 1.3 | 0.2 | 0 |
| 3 | 4.6 | 3.1 | 1.5 | 0.2 | 0 |
| 4 | 5.0 | 3.6 | 1.4 | 0.2 | 0 |

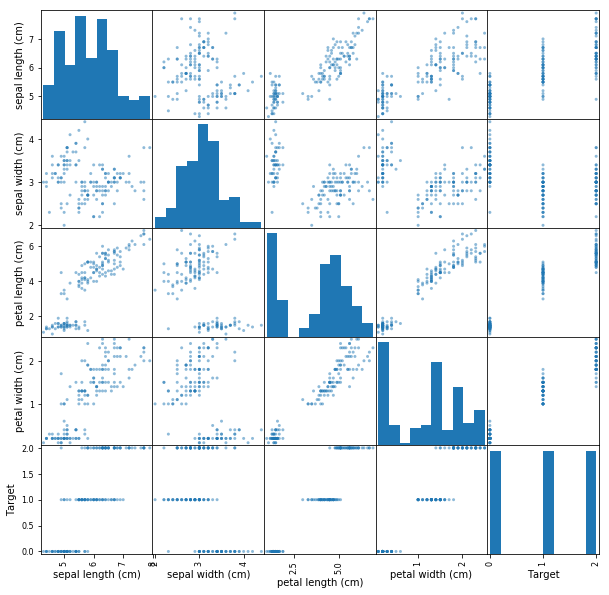

In a minute, you'll perform PCA and visualize the dataset's principal components. Before that, it's helpful to get a little more context about the data you'll be working with. Run the cell below to visualize the pairwise feature plots. Notice how well the target classes separate along some of the features, particularly petal length and petal width.

```python
import matplotlib.pyplot as plt
%matplotlib inline

pd.plotting.scatter_matrix(df, figsize=(10,10));
```

```python
# Create features and Target dataset

# Your code here
```

```python
# Standardize the features

# Your code here
```

| | sepal length | sepal width | petal length | petal width |
|---|---|---|---|---|
| 0 | -0.900681 | 1.032057 | -1.341272 | -1.312977 |
| 1 | -1.143017 | -0.124958 | -1.341272 | -1.312977 |
| 2 | -1.385353 | 0.337848 | -1.398138 | -1.312977 |
| 3 | -1.506521 | 0.106445 | -1.284407 | -1.312977 |
| 4 | -1.021849 | 1.263460 | -1.341272 | -1.312977 |
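One way to fill in the two cells above is sketched below. It assumes scikit-learn's `StandardScaler` is the standardization method and reuses the column names from `iris.feature_names`, so the headers (and possibly the last decimal places) may differ from the table shown; treat it as a possible approach rather than the lab's reference solution.

```python
import pandas as pd
from sklearn import datasets
from sklearn.preprocessing import StandardScaler

iris = datasets.load_iris()
df = pd.DataFrame(iris.data, columns=iris.feature_names)
df['Target'] = iris.target

# Features are every column except the target; the target is the class label
X = df.drop(columns='Target')
y = df['Target']

# Assumption: StandardScaler rescales each feature to zero mean and unit variance
scaler = StandardScaler()
X_scaled = pd.DataFrame(scaler.fit_transform(X), columns=X.columns)
print(X_scaled.head())
```

Standardizing first matters for PCA because the components chase variance, so a feature measured on a larger scale would otherwise dominate them.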

Now it's time to perform PCA! Project the original four-dimensional data into two dimensions. The new components are the two directions along which the data varies the most.

- Initialize an instance of PCA from scikit-learn with 2 components

- Fit the model to the standardized data

- Extract the first 2 principal components from the trained model

```python
# Run the PCA algorithm

# Your code here
```
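The three steps listed above map onto scikit-learn's `PCA` class roughly as follows. This sketch recomputes the standardized features with `StandardScaler` (an assumption about how they were produced) so that it runs on its own. Note that the sign of each principal component is arbitrary, so your values may be flipped relative to the table that follows.

```python
from sklearn import datasets
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

iris = datasets.load_iris()
# Assumption: features standardized with StandardScaler before PCA
X_scaled = StandardScaler().fit_transform(iris.data)

# Initialize PCA with 2 components, fit it, and project the data in one call
pca = PCA(n_components=2)
principal_components = pca.fit_transform(X_scaled)

# Each row is now an observation described by (PC1, PC2)
print(principal_components[:5])
```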

To visualize the components, it will be useful to also look at the target associated with each observation. As such, append the target (flower name) to the principal components in a pandas DataFrame.

```python
# Create a new dataset from principal components

# Your code here
```

| | PC1 | PC2 | target |
|---|---|---|---|
| 0 | -2.264542 | 0.505704 | Iris-setosa |
| 1 | -2.086426 | -0.655405 | Iris-setosa |
| 2 | -2.367950 | -0.318477 | Iris-setosa |
| 3 | -2.304197 | -0.575368 | Iris-setosa |
| 4 | -2.388777 | 0.674767 | Iris-setosa |
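A possible way to build this DataFrame is sketched below. It assumes the flower names come from scikit-learn's `iris.target_names`, which are `setosa`, `versicolor` and `virginica` rather than the `Iris-setosa` style labels in the table above (those suggest the table was produced from a different copy of the data).

```python
import pandas as pd
from sklearn import datasets
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

iris = datasets.load_iris()
X_scaled = StandardScaler().fit_transform(iris.data)
principal_components = PCA(n_components=2).fit_transform(X_scaled)

# Put the two components in named columns
pca_df = pd.DataFrame(principal_components, columns=['PC1', 'PC2'])
# Map each integer class label (0, 1, 2) to its flower name
pca_df['target'] = iris.target_names[iris.target]
print(pca_df.head())
```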

Great, you now have two dimensions, reduced from four, alongside the target variable (the flower name).

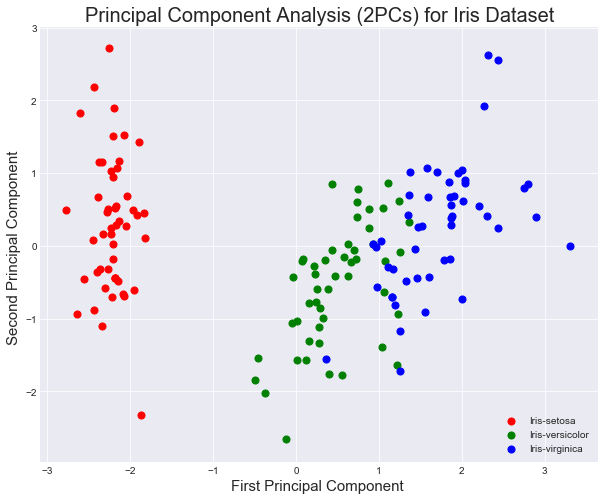

Using the target data, we can visualize the principal components according to the class distribution.

- Create a scatter plot of the principal components, color-coding each example by its class
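A sketch of the color-coded scatter plot follows. The color choices are arbitrary, and the `matplotlib.use("Agg")` line only exists so the snippet can run outside a notebook; drop it when working in the lab notebook with `%matplotlib inline`.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend for running as a script; omit in a notebook
import matplotlib.pyplot as plt
import pandas as pd
from sklearn import datasets
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

iris = datasets.load_iris()
X_scaled = StandardScaler().fit_transform(iris.data)
pca_df = pd.DataFrame(PCA(n_components=2).fit_transform(X_scaled), columns=['PC1', 'PC2'])
pca_df['target'] = iris.target_names[iris.target]

fig, ax = plt.subplots(figsize=(8, 6))
# One scatter call per class, so each class gets its own color and legend entry
for name, color in zip(iris.target_names, ['red', 'green', 'blue']):
    subset = pca_df[pca_df['target'] == name]
    ax.scatter(subset['PC1'], subset['PC2'], c=color, label=name, alpha=0.7)
ax.set_xlabel('PC1')
ax.set_ylabel('PC2')
ax.set_title('First two principal components')
ax.legend()
```

Plotting one class at a time keeps the legend readable without needing a colormap.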

```python
# Principal components scatter plot

# Your code here
```

You can see above that the three classes in the dataset are fairly well separated. As such, this compressed representation of the data is probably sufficient for the classification task at hand. Compare the variance in the overall dataset to that captured by your two primary components.

```python
# Calculate the variance explained by principal components

# Your code here
```

Variance of each component: [0.72770452 0.23030523]

Total Variance Explained: 95.8
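The figures above can be read from the fitted model's `explained_variance_ratio_` attribute, as in the sketch below. Because it loads scikit-learn's copy of the Iris data, the ratios may differ from the output above in the later decimal places.

```python
from sklearn import datasets
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

iris = datasets.load_iris()
X_scaled = StandardScaler().fit_transform(iris.data)
pca = PCA(n_components=2).fit(X_scaled)

# Fraction of the total variance captured by each retained component
ratios = pca.explained_variance_ratio_
print('Variance of each component:', ratios)
# Sum of the ratios, expressed as a percentage
print('Total Variance Explained:', round(sum(ratios) * 100, 2))
```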

As you should see, these first two principal components account for the vast majority of the overall variance in the dataset. This suggests that the compressed representation retains most of the information in the original four-feature encoding.

Since the principal components explain nearly 96% of the variance in the data, it is interesting to consider how a classifier trained on the compressed version would compare to one trained on the original dataset.

- Run a `KNeighborsClassifier` to classify the Iris dataset

- Use a train/test split of 80/20

- For reproducibility of results, set `random_state=9` for the split

- Time the process of splitting, training, and making predictions

```python
# Classification on the complete Iris dataset

# Your code here
```

Accuracy: 1.0

Time Taken: 0.0017656260024523363
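A sketch of the timed classification on all four features follows. It assumes the raw (unstandardized) features, `KNeighborsClassifier` with its default settings (5 neighbors), and `time.perf_counter` as the timer; the lab may have used a different timer or the standardized features, so your accuracy and timing can differ from the output above.

```python
import time
from sklearn import datasets
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

iris = datasets.load_iris()
X, y = iris.data, iris.target

start = time.perf_counter()
# 80/20 split with a fixed seed so the results are reproducible
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=9)
model = KNeighborsClassifier()  # assumption: default hyperparameters
model.fit(X_train, y_train)
y_pred = model.predict(X_test)
elapsed = time.perf_counter() - start

acc = accuracy_score(y_test, y_pred)
print('Accuracy:', acc)
print('Time Taken:', elapsed)
```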

Great, the classifier labels every test example correctly (100% accuracy). Keep in mind that the time taken will vary from run to run, depending on the load on your CPU and the other processes running on your machine.

Now repeat the process above for the dataset made from the principal components.

- Run a `KNeighborsClassifier` to classify the Iris dataset with principal components

- Use a train/test split of 80/20

- For reproducibility of results, set `random_state=9` for the split

- Time the process of splitting, training, and making predictions

```python
# Run the classifier on PCA'd data

# Your code here
```

Accuracy: 0.9666666666666667

Time Taken: 0.00035927799763157964
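The same experiment on the two principal components can be sketched as below, under the same assumptions as before (default `KNeighborsClassifier`, `time.perf_counter`). Like the lab, it fits the scaler and PCA on the full dataset before splitting. That is fine for this comparison, but in a real pipeline you would fit them on the training split only, to avoid leaking test-set information.

```python
import time
from sklearn import datasets
from sklearn.decomposition import PCA
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.preprocessing import StandardScaler

iris = datasets.load_iris()
# Reduce the standardized features to 2 principal components
X_pca = PCA(n_components=2).fit_transform(StandardScaler().fit_transform(iris.data))
y = iris.target

start = time.perf_counter()
X_train, X_test, y_train, y_test = train_test_split(X_pca, y, test_size=0.2, random_state=9)
model = KNeighborsClassifier()
model.fit(X_train, y_train)
y_pred = model.predict(X_test)
elapsed = time.perf_counter() - start

acc = accuracy_score(y_test, y_pred)
print('Accuracy:', acc)
print('Time Taken:', elapsed)
```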

While some accuracy is lost in this representation, the measured time dropped by roughly a factor of five (timings this small are noisy, so expect some variation). In more complex cases, PCA can even improve the accuracy of some machine learning tasks. In particular, PCA can help reduce overfitting.

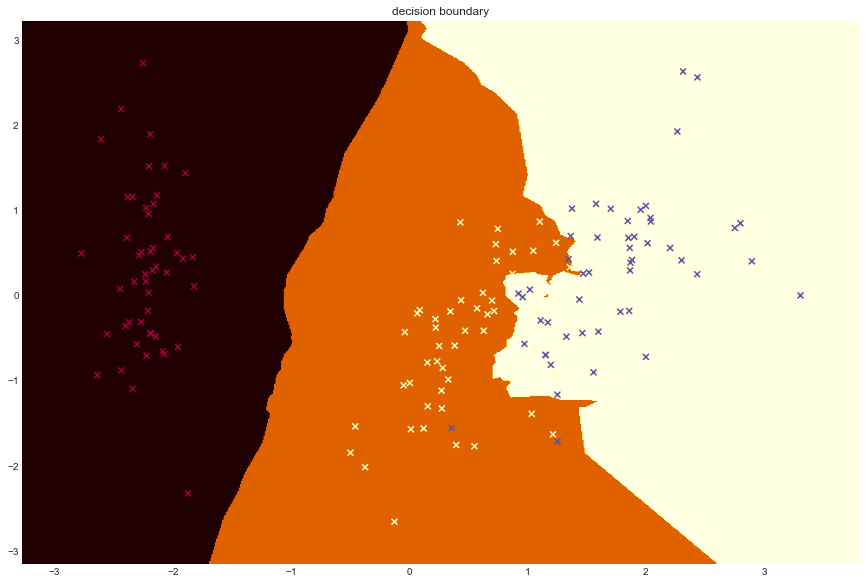

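```python
# Plot decision boundary using principal components
import numpy as np
import matplotlib.pyplot as plt

def decision_boundary(pred_func):
    # Set the plotting bounds from the two principal components, with a margin
    x_min, x_max = X.iloc[:, 0].min() - 0.5, X.iloc[:, 0].max() + 0.5
    y_min, y_max = X.iloc[:, 1].min() - 0.5, X.iloc[:, 1].max() + 0.5
    h = 0.01  # grid step size

    # Build a mesh grid covering the plotting area
    xx, yy = np.meshgrid(np.arange(x_min, x_max, h), np.arange(y_min, y_max, h))

    # Predict a class for every grid point, then reshape back to the grid
    Z = pred_func(np.c_[xx.ravel(), yy.ravel()])
    Z = Z.reshape(xx.shape)

    # Plot the filled decision regions and overlay the observations
    plt.figure(figsize=(15, 10))
    plt.contourf(xx, yy, Z, cmap=plt.cm.afmhot)
    plt.scatter(X.iloc[:, 0], X.iloc[:, 1], c=y, cmap=plt.cm.Spectral, marker='x')

# Assumes X is a DataFrame of the two principal components, y holds numeric
# class labels, and model is the classifier trained on the PCA data
decision_boundary(lambda x: model.predict(x))
plt.title("decision boundary");
```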

In this lab you applied PCA to the popular Iris dataset. You looked at the performance of a simple classifier and the impact of PCA on it. From here, you'll continue to explore PCA at more fundamental levels.