by Jinfeng Xu, Siyuan Yang, Xianzhi Li, Yuan Tang, Yixue Hao, Long Hu, Min Chen

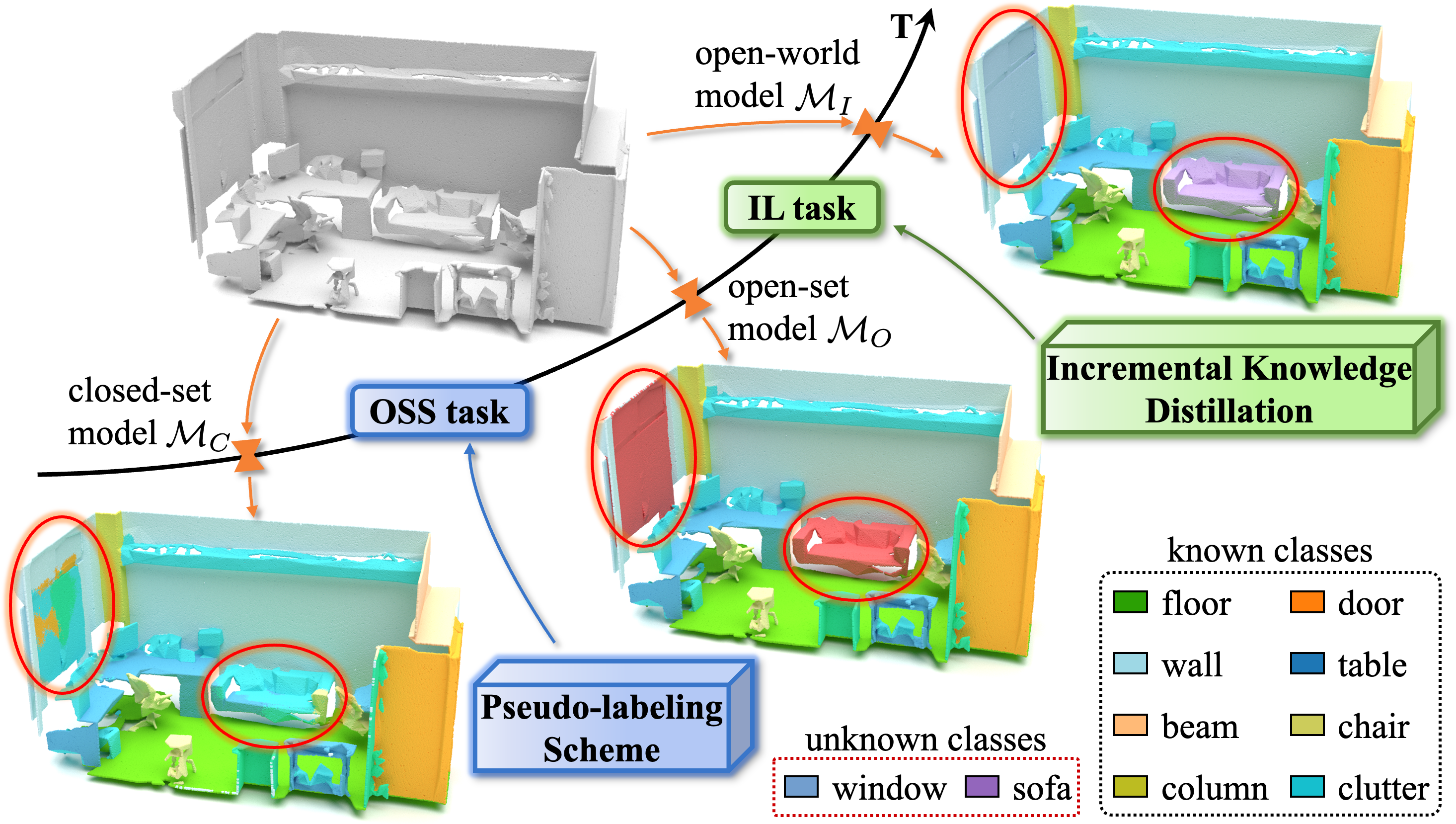

PointCloudPDF is the repository for our Computer Vision and Pattern Recognition (CVPR) 2024 paper 'PDF: A Probability-Driven Framework for Open World 3D Point Cloud Semantic Segmentation'. In this paper, we propose a Probability-Driven Framework (PDF) for open world semantic segmentation that includes (i) a lightweight U-decoder branch that identifies unknown classes by estimating uncertainties, (ii) a flexible pseudo-labeling scheme that supplies geometry features along with probability distribution features of unknown classes by generating pseudo labels, and (iii) an incremental knowledge distillation strategy that gradually incorporates novel classes into the existing knowledge base. Our framework enables the model to behave like a human, recognizing unknown objects and incrementally learning them with the corresponding knowledge. Experimental results on the S3DIS and ScanNetv2 datasets demonstrate that the proposed PDF outperforms other methods by a large margin in both important tasks of open world semantic segmentation: identifying unknown classes and incrementally learning them.

We organize our code based on Pointcept, a powerful and flexible codebase for point cloud perception research. The directory structure of our project looks like this:

│

├── configs <- Experiment configs

│ ├── _base_ <- Base configs

│ ├── s3dis <- configs for s3dis dataset

│ │ ├── openseg-pt-v1-0-msp <- open-set segmentation configs for the msp method based on PointTransformer

│ │ └── ...

│ └── ...

│

├── data <- Project data

│ └── ...

│

├── docs <- Project documents

│

├── libs <- Third party libraries

│

├── pointcept <- Code of framework

│ ├── datasets <- Datasets processing

│ ├── engines <- Main procedures of training and evaluation

│ ├── models <- Model zoo

│ ├── recognizers <- recognizers for open-set semantic segmentation

│ └── utils <- Utilities of framework

│

├── scripts <- Scripts for training and test

│

├── tools <- Entry for program launch

│

├── .gitignore

└── README.md
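The `configs/_base_` directory above and the `--options key=value` arguments in the training commands suggest a layered configuration: base values first, experiment values next, command-line overrides last. The following is a minimal sketch of that idea, assuming configs behave like nested dictionaries. `merge_config` and `parse_options` are hypothetical helpers written for illustration; they are not functions from Pointcept or this repository, and the config values are made up.

```python
import ast
from copy import deepcopy


def merge_config(base: dict, override: dict) -> dict:
    """Return a new dict where values in `override` replace those in `base`.

    Nested dicts are merged key by key; any other value is replaced outright.
    """
    merged = deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_config(merged[key], value)
        else:
            merged[key] = deepcopy(value)
    return merged


def parse_options(pairs: list) -> dict:
    """Turn ["key=value", ...] strings into a dict, parsing Python literals when possible."""
    options = {}
    for pair in pairs:
        key, _, raw = pair.partition("=")
        try:
            options[key] = ast.literal_eval(raw)
        except (ValueError, SyntaxError):
            options[key] = raw  # keep paths and other plain strings as-is
    return options


# Hypothetical values, for illustration only.
base_cfg = {"epoch": 100, "resume": False, "data": {"root": "data/s3dis", "batch_size": 4}}
cli_cfg = parse_options(["save_path=exp/s3dis/demo", "resume=True"])
cfg = merge_config(base_cfg, cli_cfg)
```

In this sketch `resume=True` is parsed into a boolean while the path stays a string, and the base dictionary is left unmodified so it can be reused by other experiments.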

- Ubuntu: 18.04 and above.

- CUDA: 11.3 and above.

- PyTorch: 1.10.0 and above.
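A quick way to confirm an existing environment meets the minimums listed above is to compare version numbers numerically rather than as strings (as strings, "1.9.1" would sort after "1.10.0"). The following is a minimal sketch; `version_tuple` and `meets_minimum` are hypothetical helper names, not part of this repository.

```python
import re


def version_tuple(version: str) -> tuple:
    """Extract the leading numeric components, e.g. "1.12.1+cu113" -> (1, 12, 1)."""
    match = re.match(r"\d+(?:\.\d+)*", version)
    if match is None:
        raise ValueError(f"no numeric version found in {version!r}")
    return tuple(int(part) for part in match.group(0).split("."))


def meets_minimum(installed: str, minimum: str) -> bool:
    """Compare two dotted version strings numerically, padding the shorter with zeros."""
    a, b = version_tuple(installed), version_tuple(minimum)
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        print("PyTorch is not installed in this environment")
    else:
        print("PyTorch >= 1.10.0:", meets_minimum(torch.__version__, "1.10.0"))
        # torch.version.cuda is None for CPU-only builds.
        cuda = torch.version.cuda
        print("CUDA >= 11.3:", cuda is not None and meets_minimum(cuda, "11.3"))
```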

conda create -n pointpdf python=3.8

conda activate pointpdf

conda install ninja==1.11.1 -c conda-forge

conda install pytorch==1.12.1 torchvision==0.13.1 torchaudio==0.12.1 cudatoolkit=11.3 -c pytorch

conda install scipy==1.9.1 scikit-learn==1.1.2 numpy==1.19.5 mkl==2024.0 -c conda-forge

conda install pyg pytorch-cluster pytorch-scatter pytorch-sparse -c pyg

conda install sharedarray tensorboard tensorboardx yapf addict einops plyfile termcolor timm -c conda-forge --no-update-deps

conda install h5py pyyaml -c anaconda --no-update-deps

pip install spconv-cu113

pip install torch-points3d

pip install open3d # for visualization

pip uninstall sharedarray

pip install sharedarray==3.2.1

cd libs/pointops

python setup.py install

cd ../..

cd libs/pointops2

python setup.py install

cd ../..

The preprocessing supports semantic and instance segmentation for ScanNet20.

- Download the ScanNet v2 dataset.

- Run preprocessing code for raw ScanNet as follows:

# RAW_SCANNET_DIR: the directory of downloaded ScanNet v2 raw dataset.

# PROCESSED_SCANNET_DIR: the directory of the processed ScanNet dataset (output dir).

python pointcept/datasets/preprocessing/scannet/preprocess_scannet.py --dataset_root ${RAW_SCANNET_DIR} --output_root ${PROCESSED_SCANNET_DIR}

- (Alternative) The preprocessed data can also be downloaded [here]; please agree to the official license before downloading it.

- (Optional) Download ScanNet Data Efficient files:

# download-scannet.py is the official download script

# or follow instructions here: https://kaldir.vc.in.tum.de/scannet_benchmark/data_efficient/documentation#download

python download-scannet.py --data_efficient -o ${RAW_SCANNET_DIR}

# unzip downloads

cd ${RAW_SCANNET_DIR}/tasks

unzip limited-annotation-points.zip

unzip limited-bboxes.zip

unzip limited-reconstruction-scenes.zip

# copy files to processed dataset folder

cp -r ${RAW_SCANNET_DIR}/tasks ${PROCESSED_SCANNET_DIR}

- Link processed dataset to codebase:

# PROCESSED_SCANNET_DIR: the directory of the processed ScanNet dataset.

mkdir -p data

ln -s ${PROCESSED_SCANNET_DIR} ${CODEBASE_DIR}/data/scannet

- Download S3DIS data by filling this Google form. Download the Stanford3dDataset_v1.2.zip file and unzip it.

- Run preprocessing code for S3DIS as follows:

# S3DIS_DIR: the directory of downloaded Stanford3dDataset_v1.2 dataset.

# RAW_S3DIS_DIR: the directory of Stanford2d3dDataset_noXYZ dataset. (optional, for parsing normal)

# PROCESSED_S3DIS_DIR: the directory of processed S3DIS dataset (output dir).

# S3DIS without aligned angle

python pointcept/datasets/preprocessing/s3dis/preprocess_s3dis.py --dataset_root ${S3DIS_DIR} --output_root ${PROCESSED_S3DIS_DIR}

# S3DIS with aligned angle

python pointcept/datasets/preprocessing/s3dis/preprocess_s3dis.py --dataset_root ${S3DIS_DIR} --output_root ${PROCESSED_S3DIS_DIR} --align_angle

# S3DIS with normal vector (recommended, normal is helpful)

python pointcept/datasets/preprocessing/s3dis/preprocess_s3dis.py --dataset_root ${S3DIS_DIR} --output_root ${PROCESSED_S3DIS_DIR} --raw_root ${RAW_S3DIS_DIR} --parse_normal

python pointcept/datasets/preprocessing/s3dis/preprocess_s3dis.py --dataset_root ${S3DIS_DIR} --output_root ${PROCESSED_S3DIS_DIR} --raw_root ${RAW_S3DIS_DIR} --align_angle --parse_normal

- (Alternative) The preprocessed data can also be downloaded [here] (with normal vector and aligned angle); please agree to the official license before downloading it.

- Link processed dataset to codebase.

# PROCESSED_S3DIS_DIR: the directory of processed S3DIS dataset.

mkdir -p data

ln -s ${PROCESSED_S3DIS_DIR} ${CODEBASE_DIR}/data/s3dis

- PointTransformer on S3DIS dataset

export PYTHONPATH=./ && export CUDA_VISIBLE_DEVICES=${CUDA_VISIBLE_DEVICES}

# open-set segmentation with msp method

python tools/train.py --config-file configs/s3dis/openseg-pt-v1-0-msp.py --num-gpus ${NUM_GPU} --options save_path=${SAVE_PATH}

# open-set segmentation with our method (training from scratch)

python tools/train.py --config-file configs/s3dis/openseg-pt-v1-0-our.py --num-gpus ${NUM_GPU} --options save_path=${SAVE_PATH}

The msp method does not change the backbone; it differs from semantic segmentation only in the evaluation process. Our method trains the open-set segmentation model by fine-tuning the semantic segmentation model. Therefore, our method can resume training directly from an msp checkpoint:

# open-set segmentation with our method (resume training from msp checkpoint)

python tools/train.py --config-file configs/s3dis/openseg-pt-v1-0-our.py --num-gpus ${NUM_GPU} --options save_path=${SAVE_PATH} resume=True weight=${MSP_CHECKPOINT_PATH}

- PointTransformer on ScanNetv2 dataset

export PYTHONPATH=./ && export CUDA_VISIBLE_DEVICES=${CUDA_VISIBLE_DEVICES}

# open-set segmentation with msp method

python tools/train.py --config-file configs/scannet/openseg-pt-v1-0-msp.py --num-gpus ${NUM_GPU} --options save_path=${SAVE_PATH}

# open-set segmentation with our method (training from scratch)

python tools/train.py --config-file configs/scannet/openseg-pt-v1-0-our.py --num-gpus ${NUM_GPU} --options save_path=${SAVE_PATH}

# open-set segmentation with our method (resume training from msp checkpoint)

python tools/train.py --config-file configs/scannet/openseg-pt-v1-0-our.py --num-gpus ${NUM_GPU} --options save_path=${SAVE_PATH} resume=True weight=${MSP_CHECKPOINT_PATH}

- StratifiedTransformer on S3DIS dataset

TODO

- StratifiedTransformer on ScanNetv2 dataset

export PYTHONPATH=./ && export CUDA_VISIBLE_DEVICES=${CUDA_VISIBLE_DEVICES}

# open-set segmentation with msp method

python tools/train.py --config-file configs/scannet/openseg-st-v1m1-0-origin-msp.py --num-gpus ${NUM_GPU} --options save_path=${SAVE_PATH}

# open-set segmentation with our method (training from scratch)

python tools/train.py --config-file configs/scannet/openseg-st-v1m1-0-origin-our.py --num-gpus ${NUM_GPU} --options save_path=${SAVE_PATH}

# open-set segmentation with our method (resume training from msp checkpoint)

python tools/train.py --config-file configs/scannet/openseg-st-v1m1-0-origin-our.py --num-gpus ${NUM_GPU} --options save_path=${SAVE_PATH} resume=True weight=${MSP_CHECKPOINT_PATH}

The evaluation results can be obtained by appending the parameter eval_only to the training command. For example:

export PYTHONPATH=./ && export CUDA_VISIBLE_DEVICES=${CUDA_VISIBLE_DEVICES}

# evaluate the msp method

python tools/train.py --config-file configs/scannet/openseg-pt-v1-0-msp.py --num-gpus ${NUM_GPU} --options save_path=${SAVE_PATH} weight=${CHECKPOINT_PATH} eval_only=True

# evaluate our method

python tools/train.py --config-file configs/scannet/openseg-pt-v1-0-our.py --num-gpus ${NUM_GPU} --options weight=${MSP_CHECKPOINT_PATH} save_path=${SAVE_PATH} eval_only=True

Note that these are not precise evaluation results. To test the model, please refer to Test.
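As noted in the training section, the msp baseline differs from plain semantic segmentation only at evaluation time. Assuming "msp" refers to the common maximum softmax probability baseline, the idea can be sketched as follows: each point keeps its argmax class unless its highest softmax probability falls below a threshold, in which case it is marked unknown. This is an illustrative sketch, not this repository's implementation; `msp_predict`, the threshold of 0.5, the `-1` unknown label, and the logits are all made up for the example.

```python
import math


def softmax(logits):
    """Numerically stable softmax over one point's class logits."""
    peak = max(logits)
    exps = [math.exp(value - peak) for value in logits]
    total = sum(exps)
    return [value / total for value in exps]


def msp_predict(point_logits, threshold=0.5, unknown_label=-1):
    """Label each point with its argmax class, or `unknown_label` if confidence is low.

    Returns (labels, scores), where scores are the maximum softmax probabilities.
    """
    labels, scores = [], []
    for logits in point_logits:
        probs = softmax(logits)
        confidence = max(probs)
        scores.append(confidence)
        labels.append(probs.index(confidence) if confidence >= threshold else unknown_label)
    return labels, scores


# Toy logits for three points over three known classes (made-up numbers).
logits = [
    [6.0, 0.5, 0.2],  # confident: class 0
    [1.0, 1.1, 0.9],  # nearly uniform: treated as unknown
    [0.1, 0.3, 4.0],  # confident: class 2
]
labels, scores = msp_predict(logits, threshold=0.5)
```

Because the decision is made purely from the network's output probabilities, a checkpoint trained for ordinary semantic segmentation can be scored this way without retraining.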

TODO

TODO

@misc{pointcept2023,

title={Pointcept: A Codebase for Point Cloud Perception Research},

author={Pointcept Contributors},

howpublished = {\url{https://github.com/Pointcept/Pointcept}},

year={2023}

}

If you have any questions, please contact jinfengxu.edu@gmail.com.
