- 2022.07.29 Support BEVDepth.

- 2022.07.26 Add configs and pretrained models of bevdet-r50 and bevdet4d-r50.

- 2022.07.13 Support the bev-pool operation proposed in BEVFusion, which speeds up the training of BEVDet-Tiny by 25%.

- 2022.07.08 Support remote visualization! Please refer to Get Started for usage.

- 2022.06.29 Support acceleration of the Lift-Splat-Shoot view transformer! Please refer to [Technical Report] for detailed introduction and Get Started for testing BEVDet with acceleration.

- 2022.06.01 We release the code and models of both BEVDet and BEVDet4D!

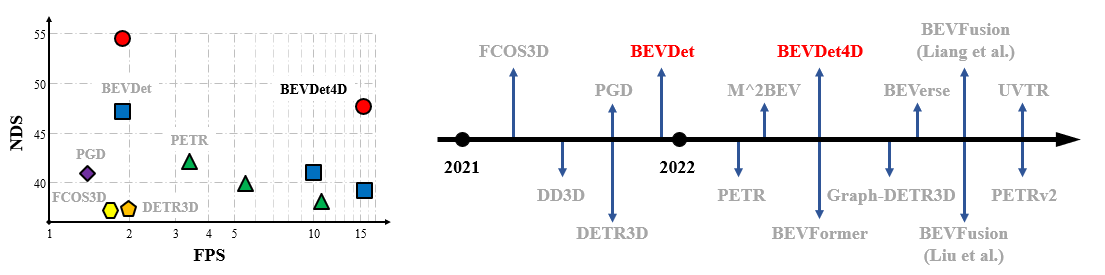

- 2022.04.01 We propose BEVDet4D to lift the scalable BEVDet paradigm from the spatial-only 3D space to the spatial-temporal 4D space. The technical report is released on arXiv [BEVDet4D].

- 2022.04.01 We upgrade the BEVDet paradigm with some modifications to improve its performance and inference speed. The technical report of BEVDet has been updated [BEVDetv1].

- 2021.12.23 BEVDet is now on arXiv [BEVDet].

| Method | mAP (%) | NDS (%) | FPS | Mem (MB) | Model | Log |
|---|---|---|---|---|---|---|
| BEVDet-R50 | 29.9 | 37.7 | 16.7 | 5,007 | | |
| BEVDepth-R50* | 33.3 | 40.6 | 15.7 | 5,185 | | |
| BEVDet4D-R50 | 32.2 | 45.7 | 16.7 | 7,089 | | |
| BEVDepth4D-R50* | 36.1 | 48.5 | 15.7 | 7,365 | | |
| - | - | - | - | - | - | - |
| BEVDet-Tiny | 30.8 | 40.4 | 15.6 | 6,187 | google / baidu | google / baidu |
| BEVDet4D-Tiny | 33.8 | 47.6 | 15.5 | 9,255 | google / baidu | google / baidu |

- *Third-party implementation; please refer to Megvii for the official implementation.

- Memory is measured during training with a batch size of 1 and without gradient checkpointing (`torch.utils.checkpoint`).
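The NDS column is the nuScenes detection score, which combines mAP with five true-positive (TP) error metrics: translation (mATE), scale (mASE), orientation (mAOE), velocity (mAVE), and attribute (mAAE) errors. Below is a minimal sketch of the standard nuScenes formula; the helper name `nds` and the error values in the usage line are hypothetical and are not taken from the table above. Because NDS also rewards lower TP errors (for example velocity error), it can rise more than mAP between two rows.

```python
TP_METRICS = ("mATE", "mASE", "mAOE", "mAVE", "mAAE")


def nds(m_ap: float, tp_errors: dict) -> float:
    """nuScenes detection score (hypothetical helper name).

    NDS = (5 * mAP + sum over TP metrics of (1 - min(1, error))) / 10,
    with mAP given as a fraction in [0, 1].
    """
    missing = [name for name in TP_METRICS if name not in tp_errors]
    if missing:
        raise ValueError(f"missing TP metrics: {missing}")
    # Each TP error is clipped at 1, so a very large error contributes 0 rather than a negative score.
    tp_score = sum(1.0 - min(1.0, tp_errors[name]) for name in TP_METRICS)
    return (5.0 * m_ap + tp_score) / 10.0


# Illustrative values only: mAP = 0.30 with every TP error at 0.5.
errors = {name: 0.5 for name in TP_METRICS}
print(round(nds(0.30, errors), 3))  # 0.4
```

Half of the score comes from mAP and the other half from the five TP terms, so two models with equal mAP can still differ noticeably in NDS.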

Please see `getting_started.md`.

```shell
# with acceleration
python tools/analysis_tools/benchmark.py configs/bevdet/bevdet-sttiny-accelerated.py $checkpoint
# without acceleration
python tools/analysis_tools/benchmark.py configs/bevdet/bevdet-sttiny.py $checkpoint
```

For BEVDet4D, the FLOP result covers the current frame only.

```shell
python tools/analysis_tools/get_flops.py configs/bevdet/bevdet-sttiny.py --shape 256 704
python tools/analysis_tools/get_flops.py configs/bevdet4d/bevdet4d-sttiny.py --shape 256 704
```

Official implementation (visualization locally only):

```shell
python tools/test.py $config $checkpoint --show --show-dir $save_path
```

Private implementation (visualization remotely or locally):

```shell
python tools/test.py $config $checkpoint --format-only --eval-options jsonfile_prefix=$savepath
python tools/analysis_tools/vis.py $savepath/pts_bbox/results_nusc.json
```

This project would not have been possible without multiple great open-source codebases. We list some notable examples below.

Besides, there are some other attractive works that extend the boundaries of BEVDet.

- BEVerse for multi-task learning.

- BEVFusion for acceleration, multi-task learning, and multi-sensor fusion. (Note: its acceleration method was developed concurrently with that of BEVDet and has some superior characteristics, such as memory saving and complete equivalence to the original computation.)

If this work is helpful for your research, please consider citing the following BibTeX entry.

```bibtex
@article{huang2022bevdet4d,
  title={BEVDet4D: Exploit Temporal Cues in Multi-camera 3D Object Detection},
  author={Huang, Junjie and Huang, Guan},
  journal={arXiv preprint arXiv:2203.17054},
  year={2022}
}

@article{huang2021bevdet,
  title={BEVDet: High-performance Multi-camera 3D Object Detection in Bird-Eye-View},
  author={Huang, Junjie and Huang, Guan and Zhu, Zheng and Yun, Ye and Du, Dalong},
  journal={arXiv preprint arXiv:2112.11790},
  year={2021}
}
```