Jiamian Wang, Guohao Sun, Pichao Wang, Dongfang Liu, Sohail Dianat, Majid Rabbani, Raghuveer Rao, Zhiqiang Tao, "Text Is MASS: Modeling as Stochastic Embedding for Text-Video Retrieval".

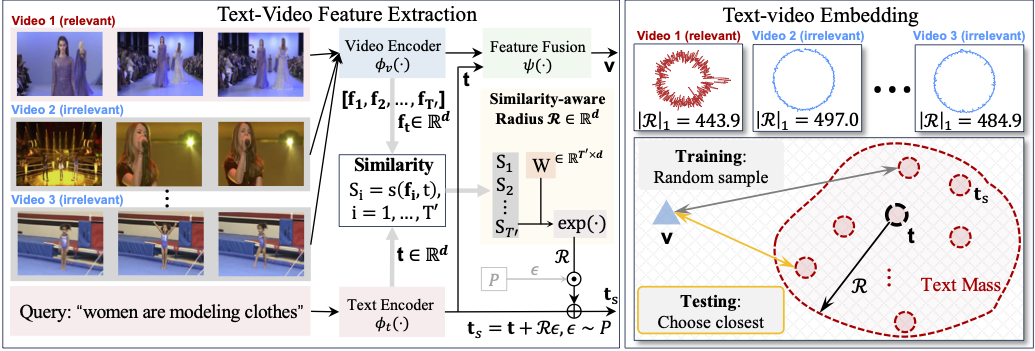

Abstract: The increasing prevalence of video clips has sparked growing interest in text-video retrieval. Recent advances focus on establishing a joint embedding space for text and video, relying on consistent embedding representations to compute similarity. However, the text content in existing datasets is generally short and concise, making it hard to fully describe the redundant semantics of a video. Correspondingly, a single text embedding may be less expressive to capture the video embedding and empower the retrieval. In this study, we propose a new stochastic text modeling method T-MASS, i.e., text is modeled as a stochastic embedding, to enrich text embedding with a flexible and resilient semantic range, yielding a text mass. To be specific, we introduce a similarity-aware radius module to adapt the scale of the text mass upon the given text-video pairs. Plus, we design and develop a support text regularization to further control the text mass during the training. The inference pipeline is also tailored to fully exploit the text mass for accurate retrieval. Empirical evidence suggests that T-MASS not only effectively attracts relevant text-video pairs while distancing irrelevant ones, but also enables the determination of precise text embeddings for relevant pairs. Our experimental results show a substantial improvement of T-MASS over baseline (3%~6.3% by R@1). Also, T-MASS achieves state-of-the-art performance on five benchmark datasets, including MSRVTT, LSMDC, DiDeMo, VATEX, and Charades.

- PyTorch 1.12.1

- OpenCV 4.7.0

- transformers 4.30.2
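A quick way to compare an environment against the versions listed above is sketched below. The PyPI distribution names (`torch`, `opencv-python`) are assumptions, since the list gives only project names, and `check_versions` is a hypothetical helper rather than part of this repository.

```python
from importlib.metadata import PackageNotFoundError, version

# Versions listed in this README. The distribution names are assumptions:
# PyTorch is usually published as "torch" and OpenCV as "opencv-python".
EXPECTED = {
    "torch": "1.12.1",
    "opencv-python": "4.7.0",
    "transformers": "4.30.2",
}


def check_versions(expected):
    """Return {package: (expected, installed_or_None, matches)}."""
    report = {}
    for name, want in expected.items():
        try:
            have = version(name)
        except PackageNotFoundError:
            have = None
        # Accept exact matches plus build tags ("1.12.1+cu113") and
        # four-part releases ("4.7.0.72") that share the listed prefix.
        ok = have is not None and (
            have == want or have.startswith((want + "+", want + "."))
        )
        report[name] = (want, have, ok)
    return report


if __name__ == "__main__":
    for name, (want, have, ok) in check_versions(EXPECTED).items():
        status = "ok" if ok else "MISMATCH"
        print(f"{name}: expected {want}, found {have or 'not installed'} [{status}]")
```

Other versions may work as well; the check only reports differences from the list above.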

To download MSRVTT, LSMDC, and DiDeMo, please follow CLIP4Clip.

You will need to request permission from MPII to download and use the standard LSMDC data.

For LSMDC, download the data split CSV files into ./data/LSMDC/.

For DiDeMo, we recommend downloading the video data with gdrive:

- Set up gdrive by following "Getting started".

- Download the video data with the following command:

gdrive files download --recursive FOLDER_ID_FROM_URL
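The `FOLDER_ID_FROM_URL` placeholder is the folder identifier embedded in the Google Drive link. A minimal sketch for extracting it is given below; it assumes the two common link shapes (`/folders/<ID>` in the path or `id=<ID>` in the query string), and `drive_folder_id` is a hypothetical helper, not part of gdrive or this repository.

```python
import re
from urllib.parse import parse_qs, urlparse


def drive_folder_id(url):
    """Extract the folder ID from a Google Drive folder URL, or return None."""
    parsed = urlparse(url)
    # Common form: https://drive.google.com/drive/folders/<ID>?usp=sharing
    match = re.search(r"/folders/([A-Za-z0-9_-]+)", parsed.path)
    if match:
        return match.group(1)
    # Older form: https://drive.google.com/open?id=<ID>
    ids = parse_qs(parsed.query).get("id")
    return ids[0] if ids else None


# Hypothetical URL for illustration only; substitute the DiDeMo folder link.
example = "https://drive.google.com/drive/folders/EXAMPLE_FOLDER_ID?usp=sharing"
folder_id = drive_folder_id(example)
print(f"gdrive files download --recursive {folder_id}")
```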

| Dataset | Video Clips | Text-Video Pairs | Size | Link |
|---|---|---|---|---|
| MSR-VTT | 10K | one-to-twenty | 6.7 GB | link |
| LSMDC | 118081 | one-to-one | 1.3 TB | link |
| DiDeMo | 10464 | one-to-many | 581 GB | link |

Download the checkpoints into ./outputs/{Dataset}/{FOLDER_NAME_UNDER_*Dataset*}.

Repeating the test pass for each of the --stochasic_trials sampling trials adds either time or memory overhead. The sequential strategy provided here is the more memory-friendly option. We adopt --seed=24 and --stochasic_trials=20 for all methods. Consider specifying --save_memory_mode at evaluation for larger datasets or compute-constrained platforms. As in XPool, evaluation defaults to text-to-video retrieval (i.e., --metric=t2v); for video-to-text retrieval, specify --metric=v2t. To evaluate with DSL post-processing, specify --DSL.
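The time-versus-memory trade-off of repeated trials can be illustrated with a small sketch. This is not the repository's implementation: it assumes each trial perturbs the text embedding as `t + radius * eps` with Gaussian noise and that the best similarity over all trials is kept. The function names, the scalar `radius`, the raw dot-product similarity, and starting from the unperturbed embedding are all choices made for this illustration.

```python
import random


def dot(a, b):
    """Plain dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def stochastic_similarity(text_emb, video_emb, radius, trials, rng):
    """Best text-video similarity over `trials` sampled text embeddings.

    Each trial draws t_s = t + radius * eps with eps ~ N(0, 1) per dimension
    (an assumed sampling rule). Trials run one after another and only a
    running maximum is stored, so memory does not grow with `trials`,
    while run time grows linearly.
    """
    best = dot(text_emb, video_emb)  # similarity of the unperturbed embedding
    for _ in range(trials):
        sample = [t + radius * rng.gauss(0.0, 1.0) for t in text_emb]
        best = max(best, dot(sample, video_emb))
    return best


# Toy vectors; the seed and trial count mirror --seed=24 and --stochasic_trials=20.
rng = random.Random(24)
score = stochastic_similarity([1.0, 0.0], [0.0, 1.0], radius=0.1, trials=20, rng=rng)
print(score)
```

A batched variant would instead hold all sampled embeddings at once, trading memory for speed, which is the situation where an option like --save_memory_mode becomes relevant.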

Replace {VIDEO_DIR} with the path to the dataset.

| Dataset | Command | Checkpoint File | t2v R@1 Result |
|---|---|---|---|
| MSR-VTT-9k | python test.py --datetime={FOLDER_NAME_UNDER_MSR-VTT-9k} --arch=clip_stochastic --videos_dir={VIDEO_DIR} --batch_size=32 --noclip_lr=3e-5 --transformer_dropout=0.3 --dataset_name=MSRVTT --msrvtt_train_file=9k --stochasic_trials=20 --gpu='0' --load_epoch=0 --exp_name=MSR-VTT-9k | Link | 50.2 |
| LSMDC | python test.py --arch=clip_stochastic --exp_name=LSMDC --videos_dir={VIDEO_DIR} --batch_size=32 --noclip_lr=1e-5 --transformer_dropout=0.3 --dataset_name=LSMDC --stochasic_trials=20 --gpu='0' --num_epochs=5 --stochastic_prior=normal --stochastic_prior_std=3e-3 --load_epoch=0 --datetime={FOLDER_NAME_UNDER_LSMDC} | Link | 28.9 |
| DiDeMo | python test.py --num_frame=12 --raw_video --arch=clip_stochastic --exp_name=DiDeMo --videos_dir={VIDEO_DIR} --batch_size=32 --noclip_lr=1e-5 --transformer_dropout=0.4 --dataset_name=DiDeMo --stochasic_trials=20 --gpu='0' --num_epochs=5 --load_epoch=0 --datetime={FOLDER_NAME_UNDER_DiDeMo} | Link | 50.9 |
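For readers unfamiliar with the metric, R@1 is the percentage of queries whose ground-truth match is ranked first. The sketch below computes R@K from a similarity matrix and shows how the t2v and v2t directions relate by transposition. It assumes one-to-one pairing with the match for query i at index i; the matrix values are made up, and `recall_at_k` is a hypothetical helper rather than the repository's evaluation code.

```python
def recall_at_k(sims, k):
    """Percentage of queries whose ground-truth item ranks in the top k.

    `sims[i][j]` is the similarity between query i and candidate j, and the
    ground-truth candidate for query i is assumed to sit at index i.
    """
    hits = 0
    for i, row in enumerate(sims):
        # Rank = number of candidates scoring strictly higher than the match.
        rank = sum(1 for score in row if score > row[i])
        if rank < k:
            hits += 1
    return 100.0 * hits / len(sims)


def transpose(sims):
    """Swap queries and candidates (text-to-video becomes video-to-text)."""
    return [list(col) for col in zip(*sims)]


# Toy text-by-video similarity matrix (made-up numbers, not real results).
t2v_sims = [
    [0.9, 0.1, 0.2],
    [0.3, 0.4, 0.8],
    [0.2, 0.1, 0.7],
]
print("t2v R@1:", recall_at_k(t2v_sims, 1))
print("v2t R@1:", recall_at_k(transpose(t2v_sims), 1))
```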

Run the following training commands to reproduce the above results. Taking MSRVTT as an example, support text regularization can be enabled by specifying --support_loss_weight. --evals_per_epoch can be increased to select a better checkpoint. The CLIP model defaults to --clip_arch=ViT-B/32. To train with a larger CLIP backbone, specify --clip_arch=ViT-B/16. When the dataset is incomplete, increasing --num_epochs by one or two may improve performance.

| Dataset | Command |
|---|---|
| MSR-VTT-9k | python train.py --arch=clip_stochastic --exp_name=MSR-VTT-9k --videos_dir={VIDEO_DIR} --batch_size=32 --noclip_lr=3e-5 --transformer_dropout=0.3 --dataset_name=MSRVTT --msrvtt_train_file=9k --stochasic_trials=20 --gpu='0' --num_epochs=5 --support_loss_weight=0.8 |
| LSMDC | python train.py --arch=clip_stochastic --exp_name=LSMDC --videos_dir={VIDEO_DIR} --batch_size=32 --noclip_lr=1e-5 --transformer_dropout=0.3 --dataset_name=LSMDC --stochasic_trials=20 --gpu='0' --num_epochs=5 --stochastic_prior=normal --stochastic_prior_std=3e-3 |
| DiDeMo | python train.py --num_frame=12 --raw_video --arch=clip_stochastic --exp_name=DiDeMo --videos_dir={VIDEO_DIR} --batch_size=32 --noclip_lr=1e-5 --transformer_dropout=0.4 --dataset_name=DiDeMo --stochasic_trials=20 --gpu='0' --num_epochs=5 |
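The three training commands share most flags and differ only in a few per-dataset settings. The sketch below makes that split explicit by assembling each command from a shared dictionary plus overrides. All flag names and values are copied from the table (including the `stochasic_trials` spelling the commands use); the `train_command` helper and the `/data/didemo` path are hypothetical.

```python
import shlex

# Flags shared by all three training commands in the table.
COMMON = {
    "arch": "clip_stochastic",
    "batch_size": "32",
    "stochasic_trials": "20",
    "gpu": "0",
    "num_epochs": "5",
}

# Per-dataset settings, keyed by --exp_name. None marks a boolean switch.
PER_DATASET = {
    "MSR-VTT-9k": {
        "dataset_name": "MSRVTT", "msrvtt_train_file": "9k",
        "noclip_lr": "3e-5", "transformer_dropout": "0.3",
        "support_loss_weight": "0.8",
    },
    "LSMDC": {
        "dataset_name": "LSMDC", "noclip_lr": "1e-5",
        "transformer_dropout": "0.3",
        "stochastic_prior": "normal", "stochastic_prior_std": "3e-3",
    },
    "DiDeMo": {
        "dataset_name": "DiDeMo", "noclip_lr": "1e-5",
        "transformer_dropout": "0.4", "num_frame": "12", "raw_video": None,
    },
}


def train_command(exp_name, videos_dir):
    """Build the train.py command line for one of the datasets above."""
    flags = {**COMMON, "exp_name": exp_name, "videos_dir": videos_dir,
             **PER_DATASET[exp_name]}
    args = ["python", "train.py"]
    for key, value in flags.items():
        args.append(f"--{key}" if value is None else f"--{key}={value}")
    return shlex.join(args)


# Hypothetical dataset path for illustration.
print(train_command("DiDeMo", "/data/didemo"))
```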

If you find this work valuable for your research, we kindly request that you cite the following paper:

@inproceedings{wang2024text,
  title={Text Is MASS: Modeling as Stochastic Embedding for Text-Video Retrieval},
  author={Wang, Jiamian and Sun, Guohao and Wang, Pichao and Liu, Dongfang and Dianat, Sohail and Rabbani, Majid and Rao, Raghuveer and Tao, Zhiqiang},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  year={2024}
}

This code is built on XPool. Many thanks to its authors!

For discussions, please feel free to submit an issue or contact me via email at jiamiansc@gmail.com.