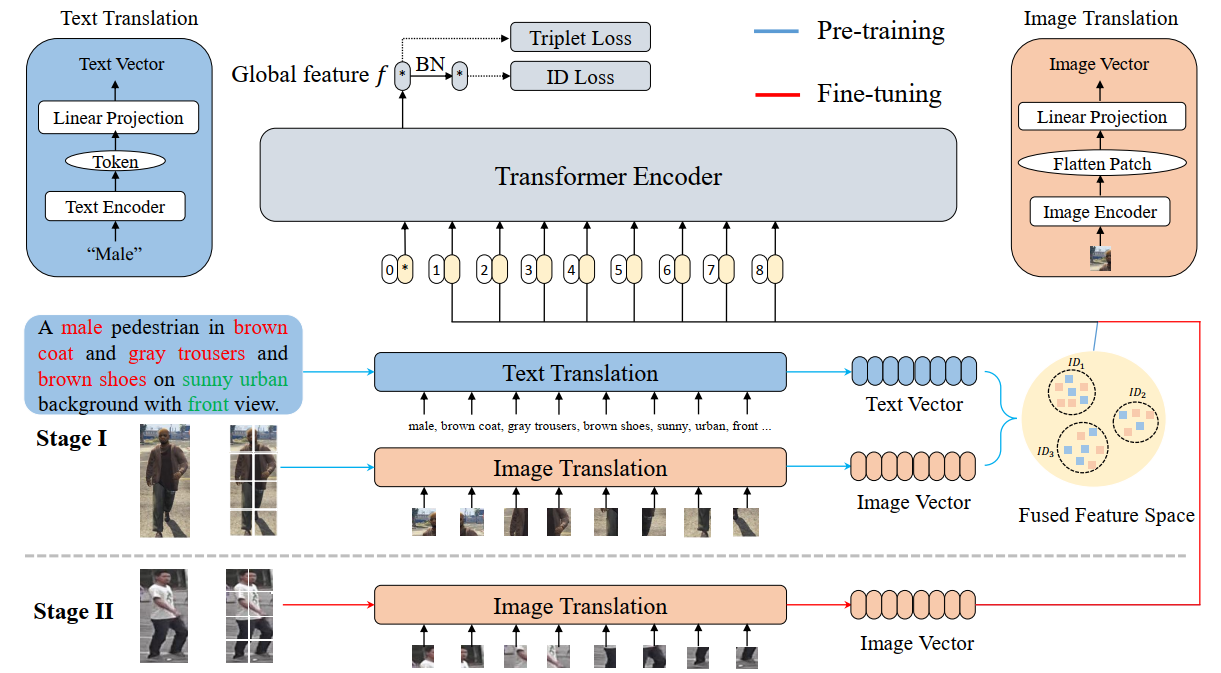

This is the official implementation of our paper Deep Multimodal Fusion for Generalizable Person Re-identification. The pretrained models can be downloaded from data2vec.

- Support Market1501, CUHK03, MSMT17 and RandPerson datasets.

- TODO: write the documentation.

- Python 3

- torch

- torchvision

- timm

- yacs

- opencv-python

- fairseq

This repo supports training on multiple GPUs; the default setting is a single GPU (one RTX 3090).

- Download all necessary datasets (e.g. Market1501, CUHK03 and MSMT17) and move them to the `data` directory.

- Training

  `python train.py`

- Testing

  `python test.py`
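Re-ID data loaders usually recover identity and camera labels from the image file names rather than from a separate annotation file. Below is a minimal sketch for Market-1501, assuming its standard naming scheme (e.g. `0002_c1s1_000451_03.jpg`). `ReIDSample`, `parse_market1501_name` and `collect_samples` are hypothetical names, not functions from this repo. The other supported datasets (CUHK03, MSMT17, RandPerson) use different layouts and are not covered.

```python
import re
from typing import Iterable, List, NamedTuple, Optional

# Market-1501 names look like "0002_c1s1_000451_03.jpg":
# identity, then c<camera>s<sequence>, then frame and detection indices.
# Junk images use identity -1 and distractors use identity 0000.
_MARKET_NAME = re.compile(r"^(-?\d+)_c(\d+)s(\d+)_")


class ReIDSample(NamedTuple):
    path: str
    pid: int    # person identity
    camid: int  # camera index as written in the file name (1-6 for Market-1501)


def parse_market1501_name(filename: str) -> Optional[ReIDSample]:
    """Return the identity/camera labels encoded in a Market-1501 file name, or None."""
    match = _MARKET_NAME.match(filename)
    if match is None:
        return None  # not a Market-1501 image (e.g. a stray system file)
    return ReIDSample(path=filename, pid=int(match.group(1)), camid=int(match.group(2)))


def collect_samples(filenames: Iterable[str], drop_junk: bool = True) -> List[ReIDSample]:
    """Parse many file names, skipping non-matching names and, optionally, junk images."""
    samples = []
    for name in filenames:
        sample = parse_market1501_name(name)
        if sample is None or (drop_junk and sample.pid == -1):
            continue
        samples.append(sample)
    return samples
```

Distractors (identity 0) are kept by `collect_samples`, because in the standard protocol they remain in the gallery as negatives.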

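Generalization results: each row is the dataset used for fine-tuning, and each column pair is the target dataset it is evaluated on ("--" marks the fine-tuning dataset itself).

| Dataset for fine-tuning | Market-1501 Rank-1 (%) | Market-1501 mAP (%) | CUHK03 Rank-1 (%) | CUHK03 mAP (%) | MSMT17 Rank-1 (%) | MSMT17 mAP (%) | Settings |
|---|---|---|---|---|---|---|---|
| Market-1501 | -- | -- | 23.4 | 22.6 | 50.6 | 21.5 | 1 GPU |
| MSMT17 | 81.3 | 55.1 | 26.1 | 24.7 | -- | -- | 1 GPU |
| MSMT17all | 82.6 | 58.8 | 34.0 | 32.1 | -- | -- | 1 GPU |
| RandPerson | 78.7 | 52.0 | 21.5 | 19.3 | 52.4 | 18.9 | 1 GPU |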
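Rank-1 and mAP in the table above are the standard Re-ID retrieval metrics. The sketch below shows how they are commonly computed from a query-by-gallery distance matrix. It follows the usual protocol of ignoring gallery images that share both identity and camera with the query. `evaluate_rank1_map` is a simplified, hypothetical helper, not this repo's evaluation code, and it omits dataset-specific details such as Market-1501 junk images.

```python
from typing import List, Sequence, Tuple


def evaluate_rank1_map(
    dist: Sequence[Sequence[float]],
    q_pids: Sequence[int],
    g_pids: Sequence[int],
    q_camids: Sequence[int],
    g_camids: Sequence[int],
) -> Tuple[float, float]:
    """Return (Rank-1, mAP) in percent for a query x gallery distance matrix."""
    rank1_hits = 0
    aps: List[float] = []
    valid_queries = 0
    for i, row in enumerate(dist):
        # Rank gallery indices by ascending distance to query i.
        order = sorted(range(len(row)), key=lambda j: row[j])
        # Drop gallery images with the same identity seen by the same camera.
        kept = [j for j in order
                if not (g_pids[j] == q_pids[i] and g_camids[j] == q_camids[i])]
        matches = [g_pids[j] == q_pids[i] for j in kept]
        if not any(matches):
            continue  # no valid ground truth in the gallery for this query
        valid_queries += 1
        if matches[0]:
            rank1_hits += 1
        # Average precision: mean of precision@k over the positions k of correct matches.
        hits, precisions = 0, []
        for k, is_match in enumerate(matches, start=1):
            if is_match:
                hits += 1
                precisions.append(hits / k)
        aps.append(sum(precisions) / len(precisions))
    if valid_queries == 0:
        raise ValueError("no query has a valid match in the gallery")
    return 100.0 * rank1_hits / valid_queries, 100.0 * sum(aps) / valid_queries
```

For example, with two queries where the first is matched at rank 1 and the second only at rank 2, this gives Rank-1 = 50.0 and mAP = 75.0.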

This work was supported by the National Natural Science Foundation of China under Grant No. 61977045 and Grant No. 81974276. If you have any questions or suggestions, please feel free to contact us (xiangsuncheng17@sjtu.edu.cn).

If you find this code useful in your research, please consider citing:

    @article{xiang2022deep,
      title={Deep Multimodal Fusion for Generalizable Person Re-identification},
      author={Xiang, Suncheng and Chen, Hao and Ran, Wei and Yu, Zefang and Liu, Ting and Qian, Dahong and Fu, Yuzhuo},
      journal={arXiv preprint arXiv:2211.00933},
      year={2022},
    }