Visual Speech Recognition for Languages with Limited Labeled Data Using Automatic Labels from Whisper

This repository contains the official PyTorch implementation of the following paper:

Visual Speech Recognition for Languages with Limited Labeled Data Using Automatic Labels from Whisper

*Jeong Hun Yeo, *Minsu Kim, Shinji Watanabe, and Yong Man Ro

[Paper]

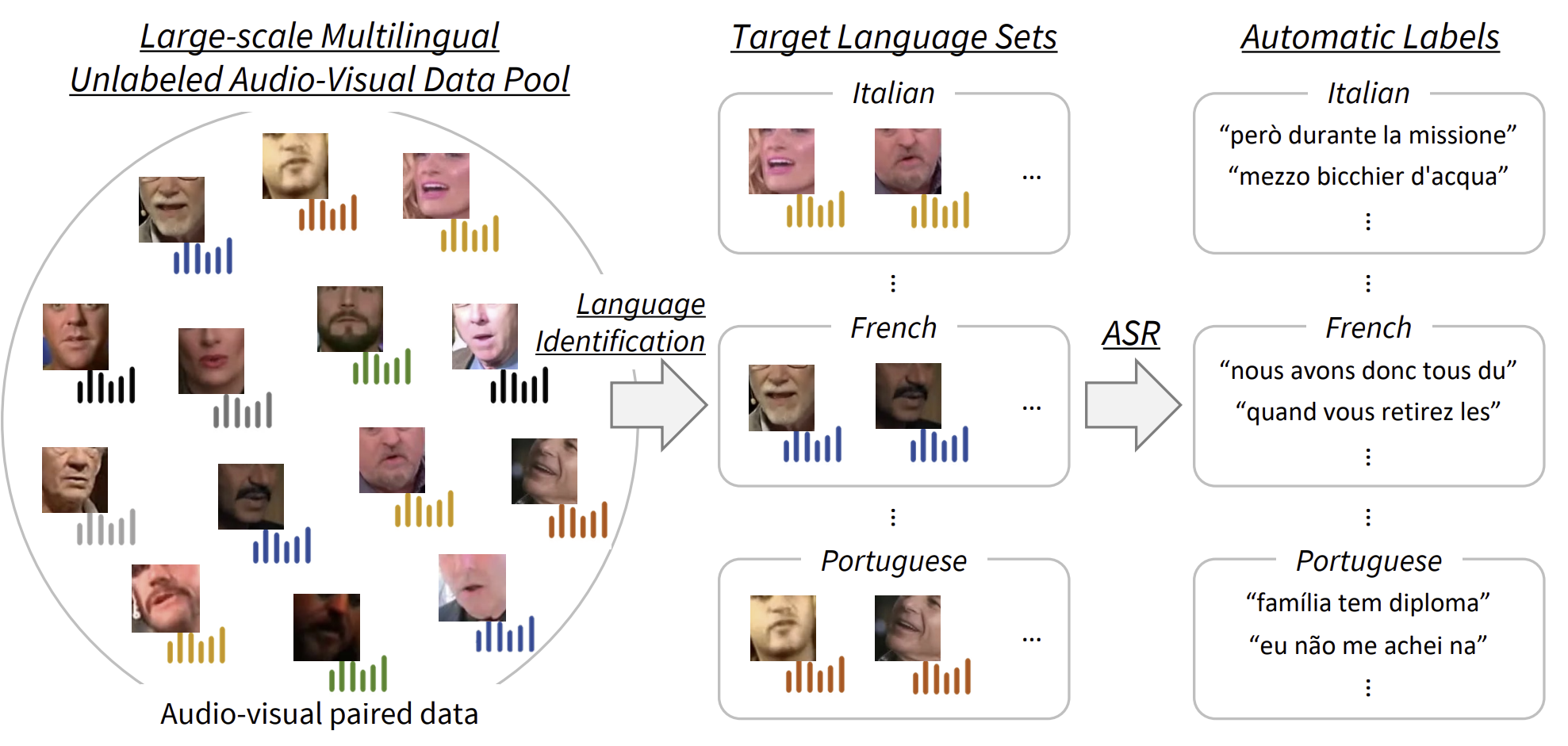

We release the automatic labels for four low-resource languages (French, Italian, Portuguese, and Spanish).

To generate the automatic labels, we first identify the language of every video in VoxCeleb2 and AVSpeech, and then produce transcriptions (the automatic labels) with a pretrained ASR model. In this project, both steps use the "whisper/large-v2" model.
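
The two-step labelling process can be sketched as follows. This is a minimal illustration, not the project's actual pipeline: it assumes the openai-whisper package (`whisper.load_model`, `detect_language`, `transcribe`) with ffmpeg available for decoding, and the helper names `select_language` and `auto_label` and the 0.5 confidence threshold are hypothetical.

```python
# Minimal sketch of Whisper-based automatic labelling.
# Assumptions: the openai-whisper package; the helper names and the 0.5
# confidence threshold are hypothetical, not taken from this project.
TARGET_LANGS = {"fr", "it", "pt", "es"}  # French, Italian, Portuguese, Spanish


def select_language(lang_probs, targets=TARGET_LANGS, min_prob=0.5):
    """Return the most probable language code if it is a target language
    and its probability is at least min_prob; otherwise return None."""
    if not lang_probs:
        return None
    lang = max(lang_probs, key=lang_probs.get)
    if lang in targets and lang_probs[lang] >= min_prob:
        return lang
    return None


def auto_label(media_path, model=None):
    """Detect the spoken language of one clip and, if it is a target
    language, transcribe it. Returns (lang, text) or None."""
    import whisper  # imported lazily so select_language works without it

    if model is None:
        model = whisper.load_model("large-v2")
    # Language ID looks at the first 30 s of audio (pad or trim to 30 s).
    audio = whisper.pad_or_trim(whisper.load_audio(media_path))
    mel = whisper.log_mel_spectrogram(audio).to(model.device)
    _, probs = model.detect_language(mel)
    lang = select_language(probs)
    if lang is None:
        return None
    # Transcribe the full clip, forcing the detected language.
    result = model.transcribe(media_path, language=lang)
    return lang, result["text"].strip()
```

Loading `large-v2` is expensive, so in practice one would call `whisper.load_model("large-v2")` once and pass the model to `auto_label` for every clip.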

conda create -n vsr-low python=3.9 -y

conda activate vsr-low

git clone https://github.com/JeongHun0716/vsr-low

cd vsr-low

pip install torch==1.13.1+cu117 torchvision==0.14.1+cu117 torchaudio==0.13.1 --extra-index-url https://download.pytorch.org/whl/cu117

git clone https://github.com/pytorch/fairseq

cd fairseq

pip install --editable ./

pip install hydra-core==1.3.0

pip install omegaconf==2.3.0

pip install pytorch-lightning==1.5.10

pip install sentencepiece

pip install av

Multilingual TEDx (mTEDx), VoxCeleb2, and AVSpeech Datasets

- Download the mTEDx dataset from the official mTEDx website.

- Download the VoxCeleb2 dataset from the official VoxCeleb2 website.

- Download the AVSpeech dataset from the official AVSpeech website.

If you want to train a VSR model for one specific target language, we recommend using the language-detected files (e.g., the link provided in this project) instead of the AVSpeech video lists from the official website. This reduces dataset preparation time, since preparing the full AVSpeech dataset is very time-consuming.

After downloading the datasets, detect the facial landmarks in all videos and use them to crop the mouth region. We recommend preprocessing the videos following Visual Speech Recognition for Multiple Languages.
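
As a rough illustration of how such a list could be used, the sketch below keeps only the AVSpeech rows whose YouTube ID appears in a language-detected list and totals their duration in hours. It assumes the official AVSpeech CSV layout (YouTube ID, start and end in seconds, face-center X and Y) and a hypothetical list format with one YouTube ID per line; the files released with this project may be structured differently, and the function names are hypothetical.

```python
import csv


def load_id_list(lines):
    """Read YouTube IDs, one per line (hypothetical list format)."""
    return {line.strip() for line in lines if line.strip()}


def filter_avspeech(csv_lines, keep_ids):
    """Yield (ytid, start, end, x, y) for AVSpeech CSV rows whose
    YouTube ID is in keep_ids.

    Assumed row layout: YouTube ID, start (s), end (s), face-center X, face-center Y.
    """
    for row in csv.reader(csv_lines):
        if row and row[0] in keep_ids:
            yield row[0], float(row[1]), float(row[2]), float(row[3]), float(row[4])


def total_hours(rows):
    """Sum clip durations (end - start, in seconds) and convert to hours."""
    return sum(end - start for _, start, end, _, _ in rows) / 3600.0
```

For example, one might open a hypothetical `fr_ids.txt` and the official `avspeech_train.csv` (with `newline=""`) and pass the file objects to `load_id_list` and `filter_avspeech`, so that only the selected clips need to be downloaded.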
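
The cropping step can be sketched as below, assuming 68-point landmarks in the iBUG convention (points 48 to 67 outline the mouth) and a hypothetical 96 x 96 crop size. Full preprocessing pipelines typically also align and smooth the landmarks before cropping; this sketch omits those steps and simply centres a fixed-size window on the mean mouth landmark. The function names are hypothetical.

```python
import numpy as np

# In the 68-point iBUG landmark convention, indices 48-67 outline the mouth.
MOUTH = slice(48, 68)


def crop_mouth(frame, landmarks, size=96):
    """Crop a size x size patch centred on the mouth.

    frame: (H, W) or (H, W, C) array; landmarks: (68, 2) array of (x, y).
    Near the border the window is shifted to stay inside the frame, not padded.
    """
    cx, cy = np.asarray(landmarks, dtype=float)[MOUTH].mean(axis=0)
    h, w = frame.shape[:2]
    half = size // 2
    x0 = int(np.clip(int(round(float(cx))) - half, 0, max(w - size, 0)))
    y0 = int(np.clip(int(round(float(cy))) - half, 0, max(h - size, 0)))
    return frame[y0:y0 + size, x0:x0 + size]


def crop_video(frames, landmarks_per_frame, size=96):
    """Apply crop_mouth frame by frame; returns a (T, size, size[, C]) array."""
    return np.stack([crop_mouth(f, lm, size)
                     for f, lm in zip(frames, landmarks_per_frame)])
```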

The training code will be available soon.

Download the checkpoints from the links below and move them to the pretrained_models directory. You can evaluate each model using the scripts in the scripts directory.

mTEDx Fr

| Model | Training Datasets | Training data (h) | WER [%] | Target Languages |
|---|---|---|---|---|
| ckpt.pt | mTEDx | 85 | 65.25 | Fr |
| ckpt.pt | mTEDx + VoxCeleb2 | 209 | 60.61 | Fr |
| ckpt.pt | mTEDx + VoxCeleb2 + AVSpeech | 331 | 58.30 | Fr |

mTEDx It

| Model | Training Datasets | Training data (h) | WER [%] | Target Languages |
|---|---|---|---|---|
| ckpt.pt | mTEDx | 46 | 60.40 | It |
| ckpt.pt | mTEDx + VoxCeleb2 | 84 | 56.48 | It |
| ckpt.pt | mTEDx + VoxCeleb2 + AVSpeech | 152 | 51.79 | It |

mTEDx Es

| Model | Training Datasets | Training data (h) | WER [%] | Target Languages |
|---|---|---|---|---|
| ckpt.pt | mTEDx | 72 | 59.91 | Es |
| ckpt.pt | mTEDx + VoxCeleb2 | 114 | 54.05 | Es |
| ckpt.pt | mTEDx + VoxCeleb2 + AVSpeech | 384 | 45.71 | Es |

mTEDx Pt

| Model | Training Datasets | Training data (h) | WER [%] | Target Languages |
|---|---|---|---|---|
| ckpt.pt | mTEDx | 82 | 59.45 | Pt |
| ckpt.pt | mTEDx + VoxCeleb2 | 91 | 58.82 | Pt |
| ckpt.pt | mTEDx + VoxCeleb2 + AVSpeech | 420 | 47.89 | Pt |
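
The WER [%] column is the word error rate: the word-level edit distance (substitutions, deletions, and insertions) between hypothesis and reference, divided by the number of reference words. A minimal corpus-level sketch follows; it assumes whitespace tokenization and applies no text normalization, so it may not reproduce the numbers from the evaluation scripts exactly. The function names are hypothetical.

```python
def word_errors(reference, hypothesis):
    """Word-level Levenshtein distance (substitutions + deletions + insertions)."""
    ref, hyp = reference.split(), hypothesis.split()
    prev = list(range(len(hyp) + 1))  # distance from empty reference prefix
    for i, r in enumerate(ref, start=1):
        cur = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            cur[j] = min(prev[j] + 1,             # deletion
                         cur[j - 1] + 1,          # insertion
                         prev[j - 1] + (r != h))  # substitution or match
        prev = cur
    return prev[-1]


def corpus_wer(references, hypotheses):
    """Corpus WER in percent: total word errors / total reference words * 100."""
    errors = sum(word_errors(r, h) for r, h in zip(references, hypotheses))
    words = sum(len(r.split()) for r in references)
    return 100.0 * errors / words
```

Pooling errors over the whole test set, rather than averaging per-utterance WERs, keeps short utterances from dominating the score.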

If you find this work useful in your research, please cite the paper:

@inproceedings{yeo2024visual,

title={Visual Speech Recognition for Languages with Limited Labeled Data Using Automatic Labels from Whisper},

author={Yeo, Jeong Hun and Kim, Minsu and Watanabe, Shinji and Ro, Yong Man},

booktitle={ICASSP 2024-2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP)},

pages={10471--10475},

year={2024},

organization={IEEE}

}

This project is based on the auto-avsr code. We would like to acknowledge and thank the original developers of auto-avsr for their contributions and the open-source community for making this work possible.

auto-avsr Repository: auto-avsr GitHub Repository