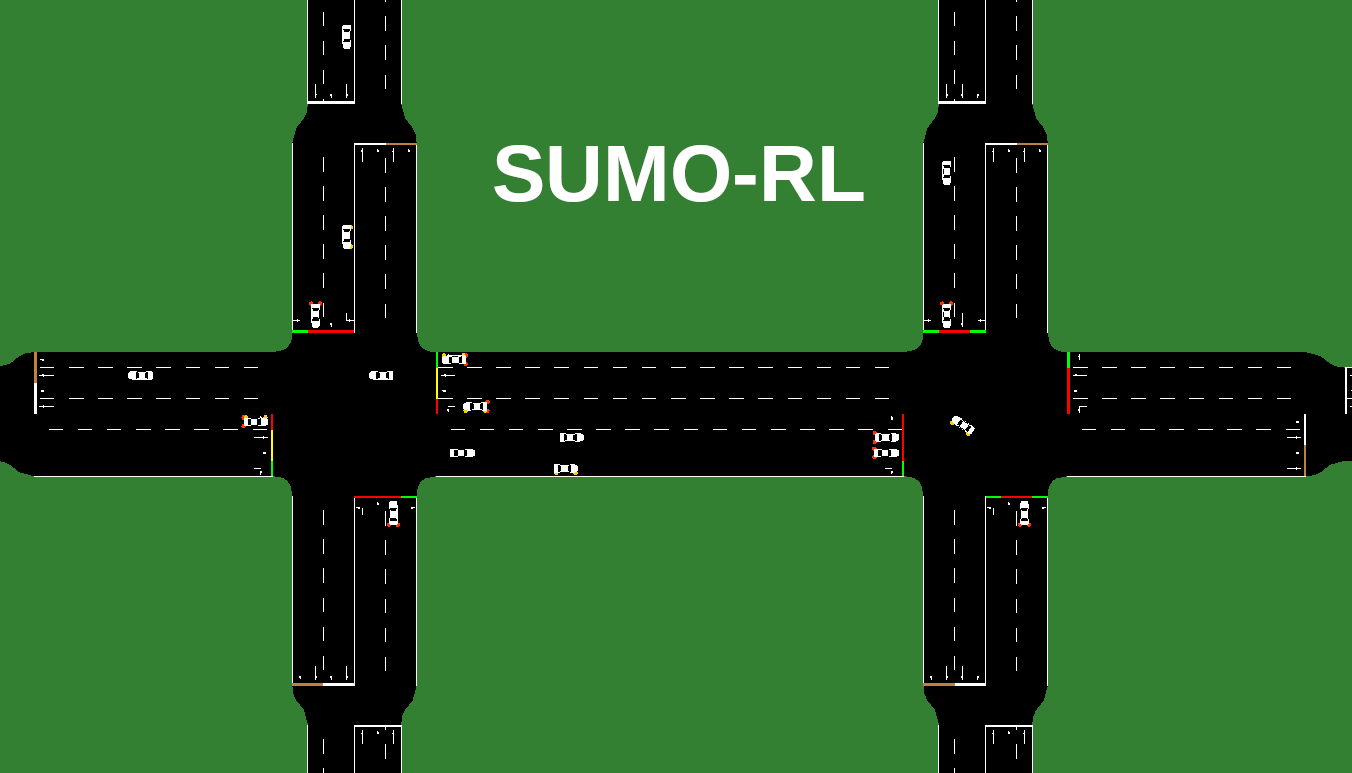

SUMO-RL provides a simple interface to instantiate Reinforcement Learning environments with SUMO for Traffic Signal Control.

The main class SumoEnvironment behaves like a MultiAgentEnv from RLlib.

If instantiated with the parameter 'single_agent=True', it behaves like a regular Gymnasium Env.

Call env or parallel_env to instantiate a PettingZoo environment.

TrafficSignal is responsible for retrieving information from traffic lights and actuating on them through the TraCI API.

Goals of this repository:

- Provide a simple interface to work with Reinforcement Learning for Traffic Signal Control using SUMO

- Support Multiagent RL

- Compatibility with gymnasium.Env and popular RL libraries such as stable-baselines3 and RLlib

- Easy customisation: state and reward definitions are easily modifiable

Install SUMO:

```bash
sudo add-apt-repository ppa:sumo/stable
sudo apt-get update
sudo apt-get install sumo sumo-tools sumo-doc
```

Don't forget to set the SUMO_HOME variable (the default SUMO installation path is /usr/share/sumo):

```bash
echo 'export SUMO_HOME="/usr/share/sumo"' >> ~/.bashrc
source ~/.bashrc
```

Important: for a huge performance boost (~8x) with Libsumo, you can declare the variable:

```bash
export LIBSUMO_AS_TRACI=1
```

Notice that you will not be able to run with sumo-gui or with multiple simulations in parallel if this is active (more details).
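A script can verify this setup before it tries to import TraCI. The following is a minimal sketch, not part of SUMO-RL: the helper names `resolve_sumo_tools` and `libsumo_enabled` are hypothetical, and the sketch assumes that the mere presence of LIBSUMO_AS_TRACI in the environment is what activates Libsumo.

```python
import os


def resolve_sumo_tools(environ=None):
    """Return the SUMO tools directory derived from SUMO_HOME.

    `environ` defaults to os.environ; passing a plain dict makes the
    check easy to test without touching the real environment.
    """
    env = os.environ if environ is None else environ
    sumo_home = env.get("SUMO_HOME")
    if not sumo_home:
        raise EnvironmentError("Please declare the environment variable 'SUMO_HOME'")
    return os.path.join(sumo_home, "tools")


def libsumo_enabled(environ=None):
    """Report whether LIBSUMO_AS_TRACI is declared (assumed: presence is enough)."""
    env = os.environ if environ is None else environ
    return "LIBSUMO_AS_TRACI" in env


if __name__ == "__main__":
    demo_env = {"SUMO_HOME": "/usr/share/sumo", "LIBSUMO_AS_TRACI": "1"}
    print(resolve_sumo_tools(demo_env))
    print(libsumo_enabled(demo_env))
```

Failing early with a clear message is usually easier to debug than an import error raised deep inside a training script.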

The stable release version is available through pip:

```bash
pip install sumo-rl
```

Recommended: alternatively, you can install the latest (unreleased) version:

```bash
git clone https://github.com/LucasAlegre/sumo-rl
cd sumo-rl
pip install -e .
```

The default observation for each traffic signal agent is a vector:

```python
obs = [phase_one_hot, min_green, lane_1_density,...,lane_n_density, lane_1_queue,...,lane_n_queue]
```

- phase_one_hot is a one-hot encoded vector indicating the current active green phase

- min_green is a binary variable indicating whether min_green seconds have already passed in the current phase

- lane_i_density is the number of vehicles in incoming lane i divided by the total capacity of the lane

- lane_i_queue is the number of queued (speed below 0.1 m/s) vehicles in incoming lane i divided by the total capacity of the lane

You can define your own observation by changing the method 'compute_observation' of TrafficSignal.
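The layout of the default observation vector can be sketched in plain Python. This is an illustration of the description above, not the library's implementation: the function `build_observation` and its arguments are hypothetical, and clipping the ratios to 1.0 is an assumption made here.

```python
def one_hot(index, size):
    """Return a list of `size` zeros with a single 1 at position `index`."""
    return [1 if i == index else 0 for i in range(size)]


def build_observation(green_phase, num_green_phases, min_green_passed,
                      lane_vehicles, lane_queued, lane_capacity):
    """Concatenate [phase_one_hot, min_green, densities..., queues...].

    lane_vehicles, lane_queued and lane_capacity hold one entry per incoming lane.
    """
    phase_one_hot = one_hot(green_phase, num_green_phases)
    min_green = [1 if min_green_passed else 0]
    # Density and queue are both normalised by the lane capacity.
    # Clipping to 1.0 is an assumption of this sketch.
    density = [min(1.0, n / c) for n, c in zip(lane_vehicles, lane_capacity)]
    queue = [min(1.0, q / c) for q, c in zip(lane_queued, lane_capacity)]
    return phase_one_hot + min_green + density + queue


if __name__ == "__main__":
    obs = build_observation(
        green_phase=1, num_green_phases=4, min_green_passed=True,
        lane_vehicles=[5, 2], lane_queued=[1, 0], lane_capacity=[10, 8],
    )
    print(obs)  # [0, 1, 0, 0, 1, 0.5, 0.25, 0.1, 0.0]
```

With 4 green phases and n incoming lanes, the vector has 4 + 1 + 2n entries.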

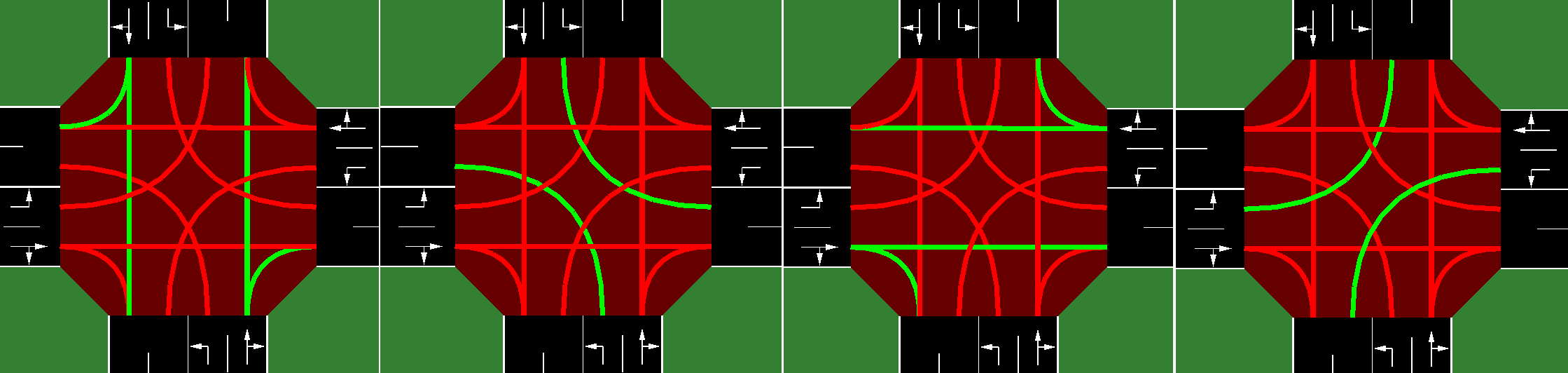

The action space is discrete. Every 'delta_time' seconds, each traffic signal agent can choose the next green phase configuration.

E.g.: In the 2-way single intersection there are |A| = 4 discrete actions, corresponding to the following green phase configurations:

Important: every time a phase change occurs, the next phase is preceded by a yellow phase lasting yellow_time seconds.
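The interplay between delta_time and yellow_time can be sketched as a per-second schedule. This is a simplified illustration, not the TrafficSignal logic: the function `phase_schedule` is hypothetical, it assumes the yellow interval is counted inside the delta_time window, and it ignores any minimum or maximum green constraints.

```python
def phase_schedule(current_phase, next_phase, delta_time, yellow_time):
    """Return one (colour, phase) tuple per second of the decision interval."""
    if yellow_time >= delta_time:
        raise ValueError("delta_time must be larger than yellow_time")
    if next_phase == current_phase:
        # No phase change: the current green simply continues.
        return [("green", current_phase)] * delta_time
    # Phase change: yellow on the outgoing phase first, then the new green.
    yellow = [("yellow", current_phase)] * yellow_time
    green = [("green", next_phase)] * (delta_time - yellow_time)
    return yellow + green


if __name__ == "__main__":
    for second, state in enumerate(phase_schedule(0, 2, delta_time=5, yellow_time=2)):
        print(second, state)
```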

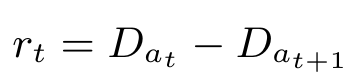

The default reward function is the change in cumulative vehicle delay:
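Written out, with $D_t$ denoting the total delay of all approaching vehicles at time step $t$ (notation chosen here for illustration), and assuming the sign convention that a decrease in delay yields a positive reward:

$$
r_t = D_t - D_{t+1}
$$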

That is, the reward is how much the total delay (sum of the waiting times of all approaching vehicles) changed in relation to the previous time-step.
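A minimal sketch of this bookkeeping, assuming the reward is positive when the total delay decreases. The class `DelayChangeReward` is hypothetical and only illustrates the idea; the total delay would come from the simulation.

```python
class DelayChangeReward:
    """Track the previous total delay and return its change as the reward."""

    def __init__(self):
        self.last_delay = 0.0

    def __call__(self, total_delay):
        # Previous delay minus current delay: positive when delay went down.
        reward = self.last_delay - total_delay
        self.last_delay = total_delay
        return reward


if __name__ == "__main__":
    reward = DelayChangeReward()
    for delay in [10.0, 4.0, 4.0]:
        print(reward(delay))
```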

You can choose a different reward function (see the ones implemented in TrafficSignal) with the parameter reward_fn in the SumoEnvironment constructor.

It is also possible to implement your own reward function:

```python
def my_reward_fn(traffic_signal):
    return traffic_signal.get_average_speed()

env = SumoEnvironment(..., reward_fn=my_reward_fn)
```

Please see the SumoEnvironment docstring for details on all constructor parameters.
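Since a custom reward function is just a callable that receives the traffic signal, it can also be built by a factory that carries parameters. The sketch below is an assumption-laden illustration: `make_speed_reward` and `FakeTrafficSignal` are hypothetical names, and the stub only mimics the `get_average_speed()` method used in the example above so that the function can be tried without running SUMO.

```python
def make_speed_reward(target_speed):
    """Build a reward function that penalises deviation from target_speed."""
    def reward_fn(traffic_signal):
        return -abs(traffic_signal.get_average_speed() - target_speed)
    return reward_fn


class FakeTrafficSignal:
    """Stand-in exposing only get_average_speed(), for offline testing."""

    def __init__(self, average_speed):
        self._average_speed = average_speed

    def get_average_speed(self):
        return self._average_speed


if __name__ == "__main__":
    reward_fn = make_speed_reward(target_speed=1.0)
    print(reward_fn(FakeTrafficSignal(0.75)))  # -0.25
```

The resulting `reward_fn` would be passed to the constructor the same way as `my_reward_fn`.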

If your network only has ONE traffic light, then you can instantiate a standard Gymnasium env (see Gymnasium API):

```python
import gymnasium as gym
import sumo_rl

env = gym.make('sumo-rl-v0',
               net_file='path_to_your_network.net.xml',
               route_file='path_to_your_routefile.rou.xml',
               out_csv_name='path_to_output.csv',
               use_gui=True,
               num_seconds=100000)
obs, info = env.reset()
done = False
while not done:
    next_obs, reward, terminated, truncated, info = env.step(env.action_space.sample())
    done = terminated or truncated
```

For multi-agent environments, you can use the PettingZoo interface (see the PettingZoo API for more details):

import sumo_rl

env = sumo_rl.env(net_file='sumo_net_file.net.xml',

route_file='sumo_route_file.rou.xml',

use_gui=True,

num_seconds=3600)

env.reset()

for agent in env.agent_iter():

observation, reward, termination, truncation, info = env.last()

action = policy(observation)

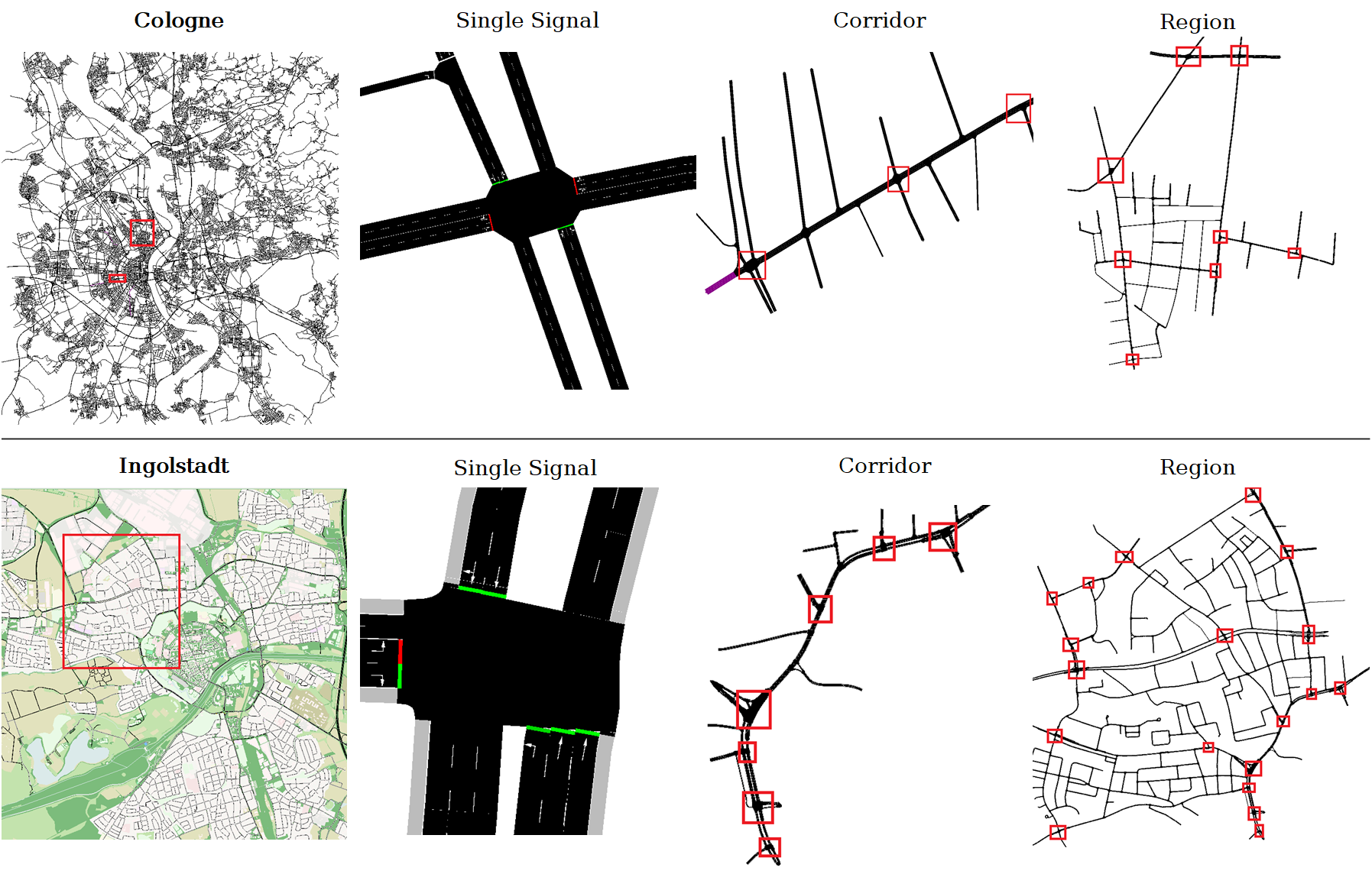

env.step(action)In the folder nets/RESCO you can find the network and route files from RESCO (Reinforcement Learning Benchmarks for Traffic Signal Control), which was built on top of SUMO-RL. See their paper for results.

WARNING: Gym 0.26 introduced many breaking changes. stable-baselines3 and RLlib do not support it yet, but will be updated soon (see the Stable Baselines 3 PR and the RLlib PR). Hence, only the tabular Q-learning experiment runs without errors for now.

Check experiments for examples on how to instantiate an environment and train your RL agent.
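As a rough idea of what a tabular Q-learning agent involves, here is a generic sketch. It is not the agent shipped in the experiments folder: the class `TabularQAgent` and its parameters are hypothetical, and it assumes states are hashable (for example, an observation converted to a tuple).

```python
import random
from collections import defaultdict


class TabularQAgent:
    """Epsilon-greedy tabular Q-learning over hashable states."""

    def __init__(self, num_actions, alpha=0.1, gamma=0.99, epsilon=0.05, seed=None):
        self.num_actions = num_actions
        self.alpha = alpha      # learning rate
        self.gamma = gamma      # discount factor
        self.epsilon = epsilon  # exploration probability
        self.rng = random.Random(seed)
        # Unseen states start with all-zero action values.
        self.q = defaultdict(lambda: [0.0] * num_actions)

    def act(self, state):
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(self.num_actions)
        values = self.q[state]
        return max(range(self.num_actions), key=values.__getitem__)

    def learn(self, state, action, reward, next_state, done):
        # One-step Q-learning target: bootstrap from the best next action
        # unless the episode has ended.
        target = reward if done else reward + self.gamma * max(self.q[next_state])
        self.q[state][action] += self.alpha * (target - self.q[state][action])


if __name__ == "__main__":
    agent = TabularQAgent(num_actions=2, alpha=0.5, gamma=0.9, epsilon=0.0)
    agent.learn("s", 1, 1.0, "s2", done=False)
    print(agent.q["s"], agent.act("s"))
```

In an environment loop, `act` would pick the action passed to `env.step`, and `learn` would be called with the resulting reward and next state.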

Q-learning in a one-way single intersection:

```bash
python experiments/ql_single-intersection.py
```

RLlib A3C multiagent in a 4x4 grid:

```bash
python experiments/a3c_4x4grid.py
```

stable-baselines3 DQN in a 2-way single intersection:

```bash
python experiments/dqn_2way-single-intersection.py
```

Results can be plotted with:

```bash
python outputs/plot.py -f outputs/2way-single-intersection/a3c
```

If you use this repository in your research, please cite:

```bibtex
@misc{sumorl,
    author = {Lucas N. Alegre},
    title = {{SUMO-RL}},
    year = {2019},
    publisher = {GitHub},
    journal = {GitHub repository},
    howpublished = {\url{https://github.com/LucasAlegre/sumo-rl}},
}
```

List of publications using SUMO-RL (please open a pull request to add missing entries):

- Quantifying the impact of non-stationarity in reinforcement learning-based traffic signal control (Alegre et al., 2021)

- Using ontology to guide reinforcement learning agents in unseen situations (Ghanadbashi & Golpayegani, 2022)

- An Ontology-Based Intelligent Traffic Signal Control Model (Ghanadbashi & Golpayegani, 2021)

- Information upwards, recommendation downwards: reinforcement learning with hierarchy for traffic signal control (Antes et al., 2022)

- Reinforcement Learning Benchmarks for Traffic Signal Control (Ault & Sharon, 2021)

- A Comparative Study of Algorithms for Intelligent Traffic Signal Control (Chaudhuri et al., 2022)

- EcoLight: Reward Shaping in Deep Reinforcement Learning for Ergonomic Traffic Signal Control (Agand et al., 2021)