conda env create -f environment.yml

conda activate gridworld

Run gridworld_manual.py with arguments for the map size (a square map, x = y = map_shape), the number of agents, and the obstacle density:

python gridworld_manual.py --map_shape 10 --n_agents 2 --obj_density 0.2

To print the observations to the command line, add --verbose.
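The --obj_density flag sets the obstacle density of the map. As a minimal sketch, assuming obj_density is the fraction of all cells on a map_shape by map_shape grid that are obstacles, a random map could be generated as follows. random_obstacle_map is a hypothetical helper, not the repository's map generator, and it does not check that agent start and goal cells are reachable.

```python
import random


def random_obstacle_map(map_shape: int, obj_density: float, seed: int = 0) -> list:
    """Return a map_shape x map_shape grid of 0 (free) and 1 (obstacle) cells.

    Hypothetical sketch: assumes obj_density is the fraction of all cells that
    are obstacles, rounded to the nearest whole number of cells.
    """
    if not 0.0 <= obj_density < 1.0:
        raise ValueError("obj_density must be in [0, 1)")
    rng = random.Random(seed)  # seeded so the same map can be regenerated
    n_cells = map_shape * map_shape
    n_obstacles = round(obj_density * n_cells)
    # Sample distinct flat cell indices, then mark them in the 2D grid.
    obstacle_cells = set(rng.sample(range(n_cells), n_obstacles))
    return [
        [1 if r * map_shape + c in obstacle_cells else 0 for c in range(map_shape)]
        for r in range(map_shape)
    ]


grid = random_obstacle_map(map_shape=10, obj_density=0.2)
print(sum(map(sum, grid)))  # 20: 20% of the 100 cells are obstacles
```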

Follow the command instructions to move the agents. Commands are agent/action pairs followed by s to step the environment. For example, 0 1 1 2 s means agent 0 moves up, agent 1 moves right, then step.
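The command format can be illustrated with a small parser. This is a hypothetical sketch, not code from gridworld_manual.py: it assumes tokens come in (agent index, action index) pairs optionally ending in s, and it names only the two actions the example above confirms (1 = up, 2 = right).

```python
# Hypothetical mapping: only the two actions shown in the README example are
# known (1 = up, 2 = right); any other action index is reported by number.
KNOWN_ACTIONS = {1: "up", 2: "right"}


def parse_manual_command(command: str) -> tuple:
    """Parse input such as '0 1 1 2 s' into ({agent: action}, step_requested).

    Assumes tokens come in (agent index, action index) pairs, optionally
    followed by 's' to step the environment.
    """
    tokens = command.split()
    step = bool(tokens) and tokens[-1] == "s"
    if step:
        tokens = tokens[:-1]  # drop the trailing step marker
    if len(tokens) % 2 != 0:
        raise ValueError("expected pairs of <agent> <action>")
    actions = {}
    for agent_tok, action_tok in zip(tokens[0::2], tokens[1::2]):
        actions[int(agent_tok)] = int(action_tok)
    return actions, step


actions, step = parse_manual_command("0 1 1 2 s")
for agent, action in actions.items():
    print(f"agent {agent}: {KNOWN_ACTIONS.get(action, f'action {action}')}")
print("step" if step else "no step")
# prints "agent 0: up", "agent 1: right", then "step"
```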

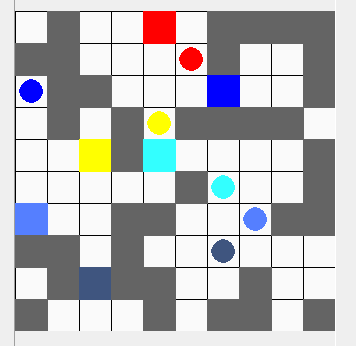
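The odmstar_example.py command below passes --inflation 1.1. Assuming this is the heuristic inflation factor ε used by inflated M* variants, where the search is ordered by f = g + ε·h and the returned solution costs at most ε times the optimum, the same idea can be seen in single-agent weighted A*. The sketch is plain weighted A* for one agent, not OD-M*, and weighted_astar is a hypothetical helper rather than code from this repository. With the Manhattan heuristic on a unit-cost 4-connected grid (a consistent heuristic), weighted A* without reopening returns a path within a factor of inflation of the optimal cost.

```python
import heapq


def weighted_astar(grid, start, goal, inflation=1.1):
    """Weighted A* for one agent on a 4-connected grid (0 = free, else obstacle).

    Nodes are expanded in order of f = g + inflation * h, where h is the
    Manhattan distance to the goal. Closed nodes are never reopened.
    Returns the path as a list of (row, col) cells, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    g = {start: 0}
    parent = {start: None}
    open_heap = [(inflation * h(start), 0, start)]  # entries: (f, g, cell)
    closed = set()
    while open_heap:
        _, g_cell, cell = heapq.heappop(open_heap)
        if cell in closed:
            continue  # stale heap entry for an already-expanded cell
        if cell == goal:
            path = []
            while cell is not None:  # walk parent links back to start
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        closed.add(cell)
        r, c = cell
        for nxt in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            nr, nc = nxt
            if not (0 <= nr < rows and 0 <= nc < cols) or grid[nr][nc] != 0:
                continue  # off the map or blocked by an obstacle
            if nxt in closed:
                continue
            new_g = g_cell + 1  # every move costs 1
            if new_g < g.get(nxt, float("inf")):
                g[nxt] = new_g
                parent[nxt] = cell
                heapq.heappush(open_heap, (new_g + inflation * h(nxt), new_g, nxt))
    return None


grid = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
path = weighted_astar(grid, start=(0, 0), goal=(2, 0), inflation=1.1)
print(len(path) - 1)  # 8 moves: the only route goes around the wall
```

Raising inflation typically expands fewer nodes at the cost of possibly longer paths; inflation = 1.0 reduces to ordinary A*.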

To run the OD-M* example:

python odmstar_example.py --map_shape 32 --n_agents 20 --obj_density 0.2 --inflation 1.1

To run the example experiments (PPO, IC3Net, MAAC, and PRIMAL):

python run_experiments.py --name ppo_test

python run_experiments.py --name ic3net_test

python run_experiments.py --name maac_test

python run_experiments.py --name primal_test

Rendering of the Gridworld environment:

@misc{gym_minigrid,
  author = {Chevalier-Boisvert, Maxime and Willems, Lucas and Pal, Suman},
  title = {Minimalistic Gridworld Environment for OpenAI Gym},
  year = {2018},
  publisher = {GitHub},
  journal = {GitHub repository},
  howpublished = {\url{https://github.com/maximecb/gym-minigrid}},
}

Code in Agents/MAAC.py and Agents/maac_utils/ is modified from: https://github.com/shariqiqbal2810/MAAC

@InProceedings{pmlr-v97-iqbal19a,
  title = {Actor-Attention-Critic for Multi-Agent Reinforcement Learning},
  author = {Iqbal, Shariq and Sha, Fei},
  booktitle = {Proceedings of the 36th International Conference on Machine Learning},
  pages = {2961--2970},
  year = {2019},
  editor = {Chaudhuri, Kamalika and Salakhutdinov, Ruslan},
  volume = {97},
  series = {Proceedings of Machine Learning Research},
  address = {Long Beach, California, USA},
  month = {09--15 Jun},
  publisher = {PMLR},
  pdf = {http://proceedings.mlr.press/v97/iqbal19a/iqbal19a.pdf},
  url = {http://proceedings.mlr.press/v97/iqbal19a.html},
}

Code in Agents/maac_utils/vec_env/ is from OpenAI Baselines: https://github.com/openai/baselines/tree/master/baselines/common

Code in Agents/ic3Net.py is modified from: https://github.com/IC3Net/IC3Net

@article{singh2018learning,
  title = {Learning when to Communicate at Scale in Multiagent Cooperative and Competitive Tasks},
  author = {Singh, Amanpreet and Jain, Tushar and Sukhbaatar, Sainbayar},
  journal = {arXiv preprint arXiv:1812.09755},
  year = {2018},
}