OPEN-MAGVIT2: An Open-source Project Toward Democratizing Auto-Regressive Visual Generation

Zhuoyan Luo, Fengyuan Shi, Yixiao Ge, Yujiu Yang, Limin Wang, Ying Shan

ARC Lab Tencent PCG, Tsinghua University, Nanjing University

We present Open-MAGVIT2, a family of auto-regressive image generation models ranging from 300M to 1.5B. The Open-MAGVIT2 project produces an open-source replication of Google's MAGVIT-v2 tokenizer, a tokenizer with a super-large codebook (i.e.,

- [2024.09.05] 🔥🔥🔥 We release a better image tokenizer and a family of auto-regressive models ranging from 300M to 1.5B.

- [2024.06.17] 🔥🔥🔥 We release the training code of the image tokenizer and checkpoints for different resolutions, achieving state-of-the-art performance (0.39 rFID for 8x downsampling) compared to VQGAN, MaskGIT, and the recent TiTok, LlamaGen, and OmniTokenizer.

- [ ✔ ] Better image tokenizer with scale-up training.

- [ ✔ ] Finalize the training of the autoregressive model.

- [ ] Video tokenizer and the corresponding autoregressive model.

🤗 Open-MAGVIT2 is still at an early stage and under active development. Stay tuned for the update!

Note that all our experiments use Ascend 910B for training. We have also tested our models on V100, and the performance gap is narrow.

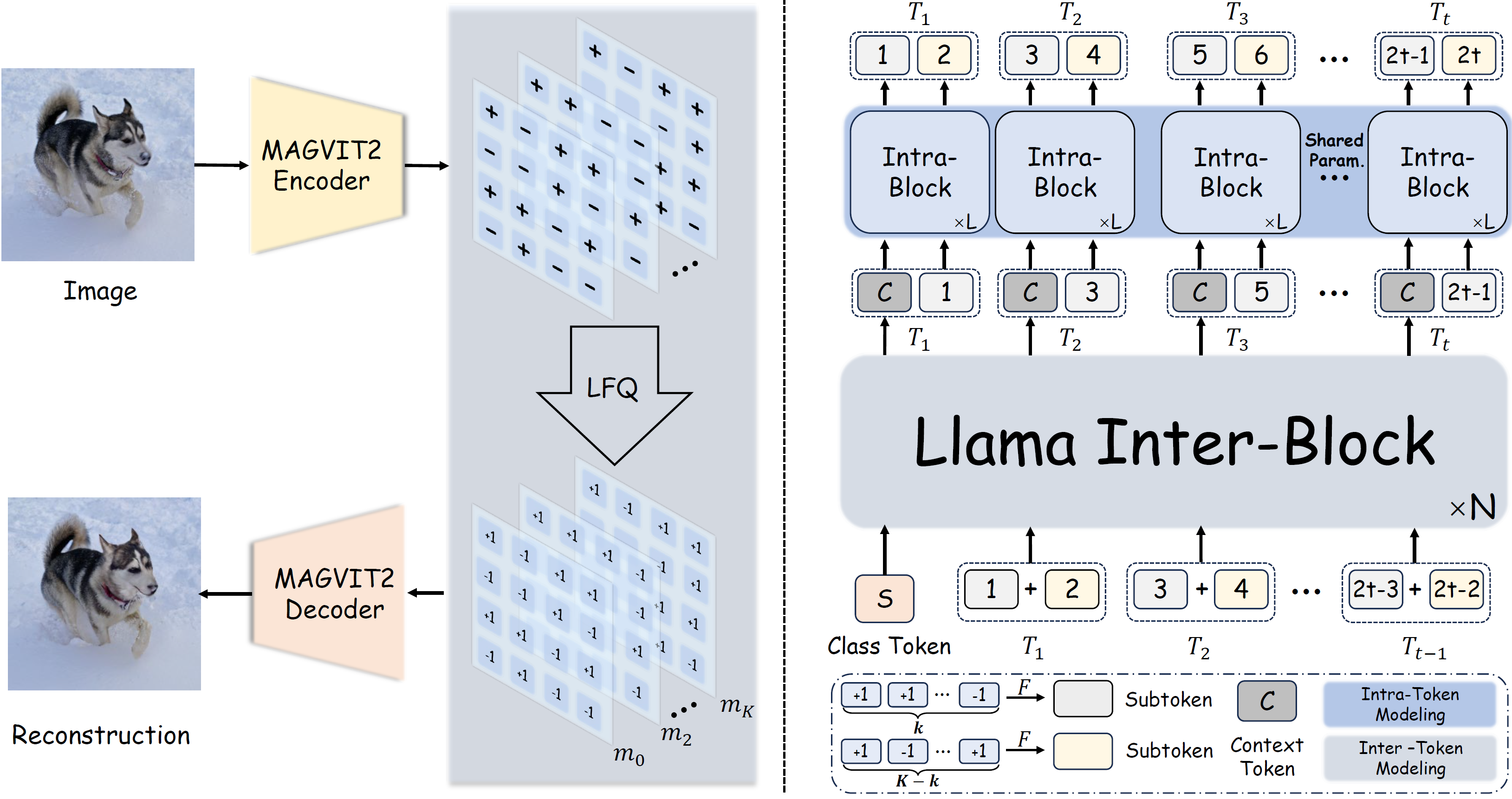

Figure 1. The framework of the Open-MAGVIT2.

- Env (GPU): We have tested on `Python 3.8.8` and `CUDA 11.8` (other versions may also be fine).

- Dependencies: `pip install -r requirements.txt`

- Env (NPU): `Python 3.9.16` and `CANN 8.0.T13`

- Main Dependencies: `torch=2.1.0+cpu` + `torch-npu=2.1.0.post3-20240523` + `Lightning`

- Other Dependencies: see `requirements.txt`

We use ImageNet2012 as our dataset, organized in the following directory layout.

    imagenet
    └── train/
        ├── n01440764
            ├── n01440764_10026.JPEG
            ├── n01440764_10027.JPEG
            ├── ...
        ├── n01443537
        ├── ...
    └── val/
        ├── ...
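Before launching training, it can help to confirm that the dataset follows the layout above. The snippet below is a minimal sketch, not part of the repository: the function names are hypothetical, and it assumes that `val/` is organized into class folders in the same way as `train/`, which the tree above does not spell out.

```python
from pathlib import Path
from typing import Dict


def count_images_per_class(split_dir: Path) -> Dict[str, int]:
    """Map each class folder (e.g. n01440764) to its number of .JPEG files."""
    counts: Dict[str, int] = {}
    for class_dir in sorted(p for p in split_dir.iterdir() if p.is_dir()):
        counts[class_dir.name] = sum(
            1 for f in class_dir.iterdir() if f.suffix.upper() == ".JPEG"
        )
    return counts


def check_imagenet_layout(root: Path) -> Dict[str, Dict[str, int]]:
    """Check that root/train and root/val exist; return per-class image counts.

    Assumption: both splits contain one sub-folder per class.
    """
    summary: Dict[str, Dict[str, int]] = {}
    for split in ("train", "val"):
        split_dir = root / split
        if not split_dir.is_dir():
            raise FileNotFoundError(f"missing split directory: {split_dir}")
        summary[split] = count_images_per_class(split_dir)
    return summary
```

Calling `check_imagenet_layout(Path("imagenet"))` returns a dictionary keyed by split, so an empty class folder or a missing split shows up before any training time is spent.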

- $128\times 128$ Tokenizer Training

  `bash scripts/train_tokenizer/run_128_L.sh MASTER_ADDR MASTER_PORT NODE_RANK`

- $256\times 256$ Tokenizer Training

  `bash scripts/train_tokenizer/run_256_L.sh MASTER_ADDR MASTER_PORT NODE_RANK`

- $128\times 128$ Tokenizer Evaluation

  `bash scripts/evaluation/evaluation_128.sh`

- $256\times 256$ Tokenizer Evaluation

  `bash scripts/evaluation/evaluation_256.sh`
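The tokenizer training scripts above take three positional arguments: `MASTER_ADDR`, `MASTER_PORT`, and `NODE_RANK`. As a rough illustration of how the per-node commands differ only in the rank, here is a small sketch that assembles them. The helper name, the address `10.0.0.1`, the port `29500`, and the two-node setup are all placeholders chosen for this example; how the scripts interpret the arguments internally is not described here.

```python
import shlex
from typing import List

# Script paths as listed above, keyed by training resolution.
TOKENIZER_SCRIPTS = {
    128: "scripts/train_tokenizer/run_128_L.sh",
    256: "scripts/train_tokenizer/run_256_L.sh",
}


def build_launch_command(
    resolution: int, master_addr: str, master_port: int, node_rank: int
) -> List[str]:
    """Return the argv list for one node, in the argument order shown above."""
    if resolution not in TOKENIZER_SCRIPTS:
        raise ValueError(f"no tokenizer training script for resolution {resolution}")
    if node_rank < 0:
        raise ValueError("node_rank must be non-negative")
    return [
        "bash",
        TOKENIZER_SCRIPTS[resolution],
        master_addr,
        str(master_port),
        str(node_rank),
    ]


if __name__ == "__main__":
    # Hypothetical two-node run: print the command to execute on each node.
    for rank in range(2):
        print(shlex.join(build_launch_command(256, "10.0.0.1", 29500, rank)))
```

Each printed line is meant to be run on the corresponding machine; the same address and port are passed to every node and only the last argument changes.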

Tokenizer

| Method | Token Type | #Tokens | Train Data | Codebook Size | rFID | PSNR | Codebook Utilization | Checkpoint |
|---|---|---|---|---|---|---|---|---|
| Open-MAGVIT2-20240617 | 2D | 16 | 256 | 262144 | 1.53 | 21.53 | 100% | - |
| Open-MAGVIT2-20240617 | 2D | 16 | 128 | 262144 | 1.56 | 24.45 | 100% | - |
| Open-MAGVIT2 | 2D | 16 | 256 | 262144 | 1.17 | 21.90 | 100% | IN256_Large |
| Open-MAGVIT2 | 2D | 16 | 128 | 262144 | 1.18 | 25.08 | 100% | IN128_Large |
| Open-MAGVIT2* | 2D | 32 | 128 | 262144 | 0.34 | 26.19 | 100% | above |

(*) denotes that the results are from the direct inference using the model trained with
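For readers unfamiliar with the PSNR and Codebook Utilization columns, the sketch below shows the textbook definitions of both quantities. It is an illustration only: the function names are hypothetical, pixel values are assumed to lie in [0, 255], and the repository's evaluation scripts may aggregate or normalize differently.

```python
import math
from typing import Iterable, Sequence


def psnr(
    reference: Sequence[float],
    reconstruction: Sequence[float],
    max_value: float = 255.0,
) -> float:
    """Peak signal-to-noise ratio in dB between two flattened images."""
    if len(reference) != len(reconstruction) or len(reference) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((a - b) ** 2 for a, b in zip(reference, reconstruction)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


def codebook_utilization(token_ids: Iterable[int], codebook_size: int) -> float:
    """Fraction of codebook entries that appear at least once in token_ids."""
    if codebook_size <= 0:
        raise ValueError("codebook_size must be positive")
    used = {t for t in token_ids if 0 <= t < codebook_size}
    return len(used) / codebook_size
```

Under this definition, the 100% utilization reported in the table would mean that every one of the 262144 codes is selected at least once over the evaluation set.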

Please see `scripts/train_autogressive/run.sh` for the different model configurations.

bash scripts/train_autogressive/run.sh MASTER_ADDR MASTER_PORT NODE_RANK

Please see `scripts/train_autogressive/run.sh` for the sampling hyper-parameters used at each model scale.

bash scripts/evaluation/sample_npu.sh or scripts/evaluation/sample_gpu.sh Your_Total_Rank

| Method | Params | #Tokens | FID | IS | Checkpoint |
|---|---|---|---|---|---|
| Open-MAGVIT2 | 343M | 16 | 3.08 | 258.26 | AR_256_B |
| Open-MAGVIT2 | 804M | 16 | 2.51 | 271.70 | AR_256_L |
| Open-MAGVIT2 | 1.5B | 16 | 2.33 | 271.77 | AR_256_XL |

We thank Lijun Yu for his encouraging discussions. Our implementation draws heavily on VQGAN and MAGVIT, and we also refer to LlamaGen, VAR, and RQVAE. Thanks for their wonderful work.

If you find the codebase and our work helpful, please cite it and give us a star ⭐.

    @article{luo2024open-magvit2,
      title={Open-MAGVIT2: An Open-Source Project Toward Democratizing Auto-Regressive Visual Generation},
      author={Luo, Zhuoyan and Shi, Fengyuan and Ge, Yixiao and Yang, Yujiu and Wang, Limin and Shan, Ying},
      journal={arXiv preprint arXiv:2409.04410},
      year={2024}
    }