Philipp Lindenberger · Paul-Edouard Sarlin · Marc Pollefeys

LightGlue is a Graph Neural Network for local feature matching that introspects its confidences to 1) stop early if all predictions are ready and 2) remove points deemed unmatchable to save compute.
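The two mechanisms can be pictured with a toy, framework-free loop. This is a minimal sketch, not LightGlue's implementation. It assumes the per-layer, per-point confidences and the per-point matchability scores are already given as plain dictionaries, whereas in the real network they come from learned heads. The function name `adaptive_loop`, the fixed `confidence_threshold`, and the exact stopping and pruning rules are illustrative assumptions; the `depth_confidence` and `width_confidence` names mirror the options listed further down.

```python
from typing import Dict, List, Tuple


def adaptive_loop(
    layer_confidences: List[Dict[int, float]],
    matchability: Dict[int, float],
    depth_confidence: float = 0.95,
    width_confidence: float = 0.99,
    confidence_threshold: float = 0.9,
) -> Tuple[int, List[int]]:
    """Toy model of early stopping (depth) and point pruning (width).

    Hypothetical inputs: layer_confidences[k][p] is the confidence of point p
    after layer k; matchability[p] is the probability that p has any match.
    A value <= 0 (e.g. -1) disables the corresponding mechanism.
    Returns (number of layers run, ids of points still active).
    """
    active = sorted(matchability)  # ids of points still being processed
    layers_run = 0
    for conf in layer_confidences:
        layers_run += 1
        confident = [p for p in active if conf[p] > confidence_threshold]
        # Depth: exit early once a large enough share of points is confident.
        if (depth_confidence > 0 and active
                and len(confident) / len(active) > depth_confidence):
            break
        # Width: drop points that are confident AND very unlikely to match.
        if width_confidence > 0:
            active = [
                p for p in active
                if not (conf[p] > confidence_threshold
                        and matchability[p] < 1 - width_confidence)
            ]
    return layers_run, active


# Three hypothetical layers, three points; point 2 is confidently unmatchable.
layers = [
    {0: 0.95, 1: 0.20, 2: 0.99},
    {0: 0.97, 1: 0.95, 2: 0.99},
    {0: 0.99, 1: 0.99, 2: 0.99},
]
matchability = {0: 0.8, 1: 0.7, 2: 0.001}
print(adaptive_loop(layers, matchability))  # (2, [0, 1])
```

Here point 2 is pruned after the first layer and the loop stops after the second layer because every remaining point is confident; with both thresholds set to -1 all three layers run on all three points.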

This repository hosts the inference code for LightGlue, a lightweight feature matcher that combines high accuracy with blazing fast inference thanks to adaptive pruning in both the width and the depth of the network. It takes as input a set of keypoints and descriptors for each image and returns the indices of corresponding points between them.
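To make this input/output contract concrete, here is a framework-free sketch that swaps in plain mutual nearest-neighbor matching on descriptors. It is not LightGlue's learned matching; it only illustrates the shapes involved: keypoints and descriptors per image go in, a list of `(index0, index1)` pairs comes out, and matched coordinates are gathered by indexing, in the same spirit as `kpts0[matches[..., 0]]` in the usage example. The function `mutual_nn_matches` and the toy data are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def mutual_nn_matches(desc0: Sequence[Vector],
                      desc1: Sequence[Vector]) -> List[Tuple[int, int]]:
    """Return (i, j) pairs where desc0[i] and desc1[j] are each other's nearest neighbor.

    Stand-in for a learned matcher: same inputs and outputs, much simpler logic.
    Assumes both descriptor lists are non-empty and share the same dimension.
    """
    # For every descriptor, index of the closest descriptor in the other image.
    nn01 = [min(range(len(desc1)), key=lambda j: math.dist(d, desc1[j])) for d in desc0]
    nn10 = [min(range(len(desc0)), key=lambda i: math.dist(d, desc0[i])) for d in desc1]
    # Keep only the pairs that agree in both directions (mutual check).
    return [(i, j) for i, j in enumerate(nn01) if nn10[j] == i]


# Toy inputs: keypoint coordinates (x, y) and 2-D descriptors for each image.
kpts0 = [(10.0, 20.0), (30.0, 40.0), (50.0, 60.0)]
desc0 = [(1.0, 0.0), (0.0, 1.0), (0.7, 0.7)]
kpts1 = [(31.0, 41.0), (11.0, 21.0)]
desc1 = [(0.0, 0.9), (0.9, 0.1)]

matches = mutual_nn_matches(desc0, desc1)  # [(0, 1), (1, 0)]
m_kpts0 = [kpts0[i] for i, _ in matches]   # matched points in image 0
m_kpts1 = [kpts1[j] for _, j in matches]   # their correspondences in image 1
print(matches, m_kpts0, m_kpts1)
```

Keypoint 2 of image 0 stays unmatched because its nearest neighbor in image 1 prefers a different point; the output therefore has fewer pairs than either keypoint set, just as a real matcher's output typically does.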

We release pretrained weights of LightGlue with SuperPoint and DISK local features.

The training and evaluation code will be released in July in a separate repo. If you wish to be notified, subscribe to Issue #6.

You can install this repo with pip:

```bash
git clone https://github.com/cvg/LightGlue.git && cd LightGlue
python -m pip install -e .
```

We provide a demo notebook which shows how to perform feature extraction and matching on an image pair.

Here is a minimal script to match two images:

from lightglue import LightGlue, SuperPoint, DISK

from lightglue.utils import load_image, match_pair

# SuperPoint+LightGlue

extractor = SuperPoint(max_num_keypoints=2048).eval().cuda() # load the extractor

matcher = LightGlue(pretrained='superpoint').eval().cuda() # load the matcher

# or DISK+LightGlue

extractor = DISK(max_num_keypoints=2048).eval().cuda() # load the extractor

matcher = LightGlue(pretrained='disk').eval().cuda() # load the matcher

# load images to torch and resize to max_edge=1024

image0, scales0 = load_image(path_to_image_0, resize=1024)

image1, scales1 = load_image(path_to_image_1, resize=1024)

# extraction + matching + rescale keypoints to original image size

pred = match_pair(extractor, matcher, image0, image1,

scales0=scales0, scales1=scales1)

kpts0, kpts1, matches = pred['keypoints0'], pred['keypoints1'], pred['matches']

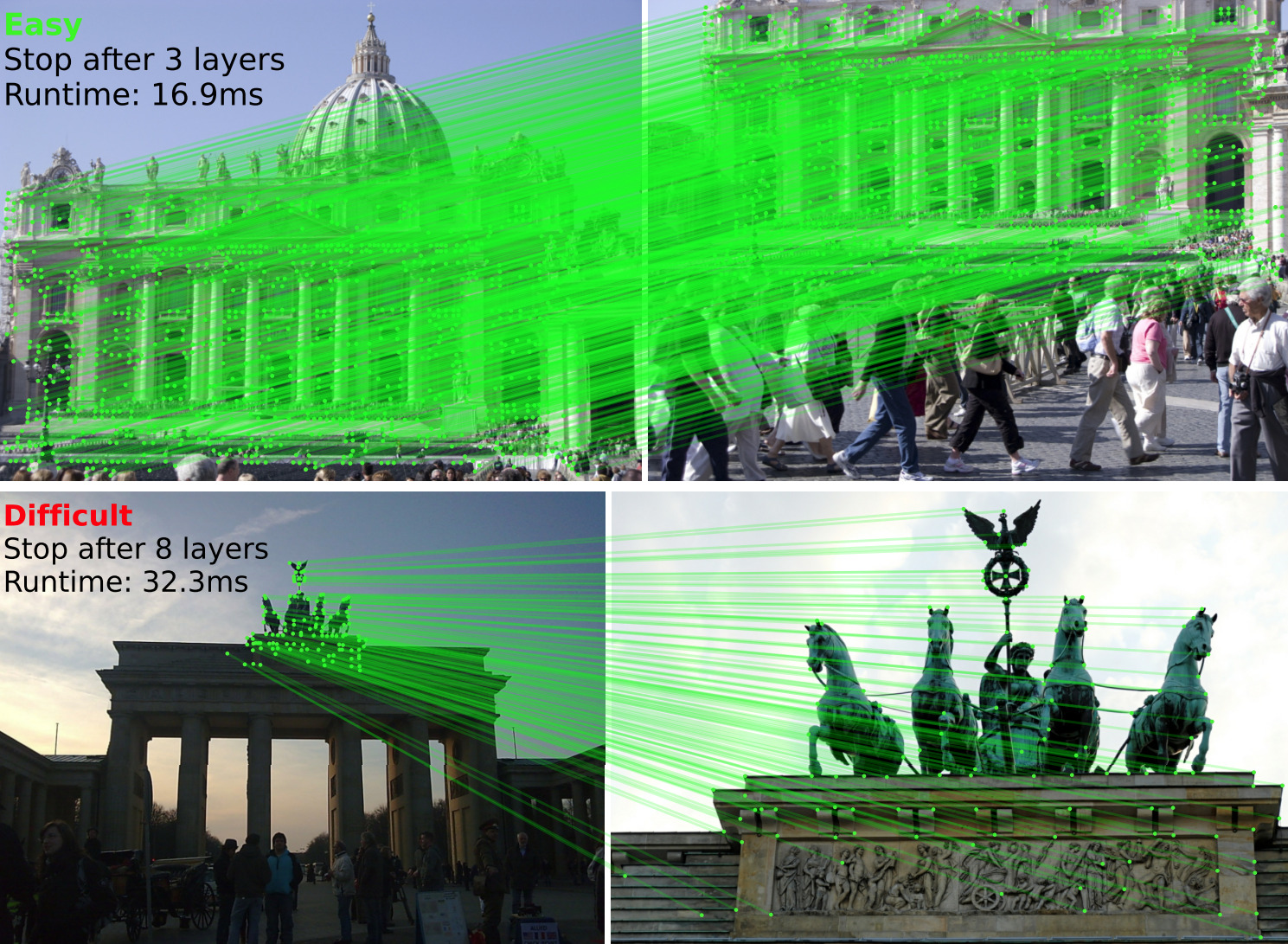

m_kpts0, m_kpts1 = kpts0[matches[..., 0]], kpts1[matches[..., 1]]LightGlue can adjust its depth (number of layers) and width (number of keypoints) per image pair, with a minimal impact on accuracy.

- `depth_confidence`: controls early stopping and improves run time. Recommended: 0.95. Default: -1 (off).
- `width_confidence`: controls iterative feature removal and improves run time. Recommended: 0.99. Default: -1 (off).
- `flash`: enables FlashAttention. Significantly improves run time and reduces memory consumption without any impact on accuracy, but requires either FlashAttention or `torch >= 2.0`.

If you use any ideas from the paper or code from this repo, please consider citing:

```bibtex
@inproceedings{lindenberger23lightglue,
  author    = {Philipp Lindenberger and
               Paul-Edouard Sarlin and
               Marc Pollefeys},
  title     = {{LightGlue}: Local Feature Matching at Light Speed},
  booktitle = {ArXiv PrePrint},
  year      = {2023}
}
```