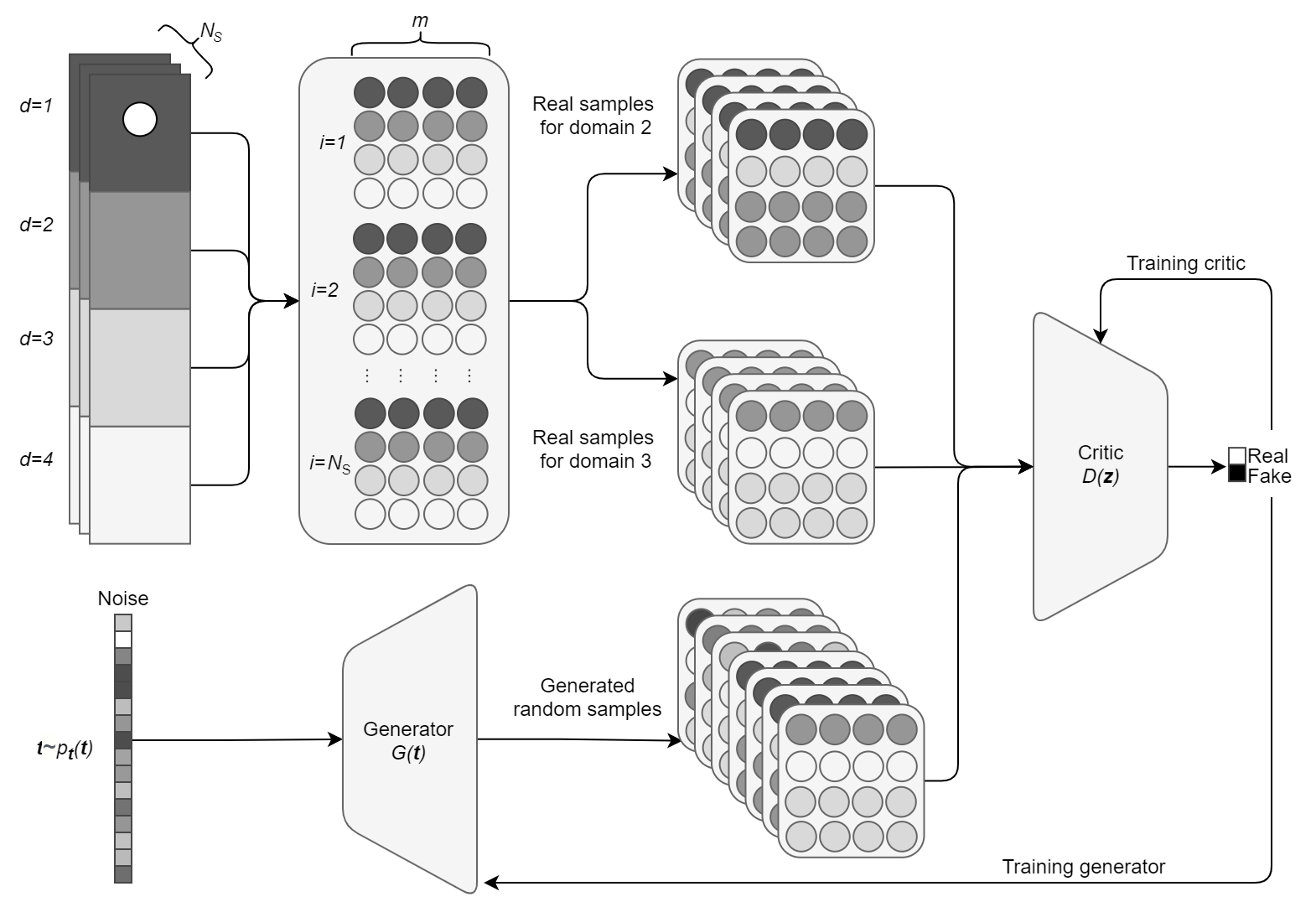

This project contains a library for interacting with a domain decomposition predictive GAN. It draws on ideas from recent research on domain decomposition methods for reduced order modelling and on predictive GANs to predict fluid flow in time. Validation and examples using flow past a cylinder in high aspect ratio domains can be found here.

- Python 3.8

- TensorFlow and other packages in `requirements.txt`

.

├── data # Various data files

│ ├── FPC_Re3900_2D_CG_old # .vtu files of FPC

│ ├── processed # Processed .npy data files

│ │ ├── DD # Decomposed .npy files

│ │ ├── Single # Non-DD .npy files

│ │ └── old # Non-DD .npy files from an outdated simulation

│ └── reconstructed # Reconstructed simulation example

├── ddgan # Main method

│ ├── src

│ │ ├── Optimize.py # Class for predicting fluid flow

│ │ ├── Train.py # Class for training a GAN

│ │ └── Utils.py # Utils for training and predicting

│ └── tests # Unit testing for GAN

├── docs # Documentation files

├── examples

│ ├── models/224 # Saved model for replication purposes

│ └── DD-GAN_usage_example.ipynb # Usage example notebook

├── images

│ ├── flowcharts # Flowcharts

│ ├── paraview # paraview visualisations

│ └── plots # Error plots and simulations

├── LICENSE

├── README.md

├── environment.yml # Conda env

├── requirements.txt

└── setup.py

git clone https://github.com/acse-jat20/DD-GAN

cd ./DD-GAN

pip install -e .

In a Python file, import the following to use all of the functions:

import ddgan

from ddgan import *

If importing the modules does not work, remember to append the path:

import sys

sys.path.append("..")

from ddgan import *

- The POD coefficients used in the project can be found under data

- Original .vtu files courtesy of Dr. Claire Heaney

- An example notebook that demonstrates the data formatting, training and prediction can be found in examples
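As a rough illustration of the data formatting step, the sketch below stacks consecutive timesteps of POD coefficients into flat training samples. It assumes the coefficients are stored as a 2-D array of shape (timesteps, coefficients). The helper name `make_windows`, the array layout and the synthetic data are illustrative assumptions, not part of the ddgan API; the example notebook shows the format the library actually expects.

```python
import numpy as np


def make_windows(coeffs: np.ndarray, nsteps: int) -> np.ndarray:
    """Stack `nsteps` consecutive rows of `coeffs` into one flat sample each.

    coeffs:  array of shape (n_timesteps, n_coeffs)
    returns: array of shape (n_timesteps - nsteps + 1, nsteps * n_coeffs)
    """
    n_timesteps, n_coeffs = coeffs.shape
    if nsteps > n_timesteps:
        raise ValueError("nsteps must not exceed the number of timesteps")
    n_samples = n_timesteps - nsteps + 1
    # One window per starting timestep, each covering nsteps consecutive rows
    windows = np.stack([coeffs[i:i + nsteps] for i in range(n_samples)])
    return windows.reshape(n_samples, nsteps * n_coeffs)


# Synthetic stand-in for POD coefficients (hypothetical data, not from the repo)
coeffs = np.arange(12, dtype=float).reshape(6, 2)  # 6 timesteps, 2 coefficients
samples = make_windows(coeffs, nsteps=3)
print(samples.shape)  # (4, 6)
```

Each row of `samples` then holds one window of consecutive timesteps, which is the role the `nsteps` and `ndims` parameters appear to play in the GAN settings.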

This tool includes a workflow split up into two packages.

- GAN Training

- GAN Prediction

What follows is a general outline of what each package offers. For a more in-depth review, please explore the documentation and the usage example.

A minimal example of a non-DD version of the code can be found in the following notebook.

The GAN initializer accepts keyword arguments, which can be unpacked from a dictionary as follows:

gan = GAN(**kwargs)

Here the possible keyword arguments include:

# Input data parameters

nsteps: int = 10 # Consecutive timesteps

ndims: int = 10 # Reduced dimensions

batch_size: int = 20 # 32

batches: int = 10 # 900

seed: int = 143 # Random seed for reproducibility

epochs: int = 500 # Number of training epochs

nLatent: int = 10 # Dimensionality of the latent space

gen_learning_rate: float = 0.0001 # Generator optimization learning rate

disc_learning_rate: float = 0.0001 # Discriminator optimization learning rate

logs_location: str = './logs/gradient_tape/' # Saving location for logs

model_location: str = 'models/' # Saving location for model

# WGAN training parameters

n_critic: int = 5 # Number of gradient penalty computations per epoch

lmbda: int = 10 # Gradient penalty multiplier

# Data augmentation

noise: bool = False # Whether noise is added to the input during training

noise_level: float = 0.001 # Standard deviation of a normal distribution with mu = 0

n_gradient_ascent: int = 500 # The periodicity of making the discriminator take a step back

The GAN is then trained by calling a setup and a train function as follows:

gan.setup()

# If the GAN is to be trained

gan.learn_hypersurface_from_POD_coeffs(training_data)

The prediction workflow accepts keyword arguments, which can be unpacked from a dictionary as follows:

optimizer = Optimize(**kwargs_opt)

Here the keyword arguments include:

# Input data hyperparameters

start_from: int = 100 # Initial timestep

nPOD: int = 10 # Number of POD coefficients

nLatent: int = 10 # Dimensionality of latent space

dt: int = 1 # Delta i in the thesis, step size

# Optimization hyperparameters

npredictions: int = 20 # Number of future steps

optimizer_epochs: int = 5000 # Number of optimizer epochs

# Only for DD

evaluated_subdomains: int = 2 # Number of non-boundary subdomains

cycles: int = 3 # Number of times the domains are traversed

dim_steps = None # n in the paper. = [n_b, n_a, n_self]

debug: bool = False # Debug mode

initial_values: str = "Past" # Initial guess for future timestep. Zeros, Past or None

disturb: bool = False # Nudge the optimization from local minima regularly

Then the prediction can be made by calling predict or predictDD:

optimizer.predict(training_data)

OR

optimizer.predictDD(training_data,

boundrary_conditions,

dim_steps=added_dims)

The code in this repository has been validated with unit tests and by the results found in examples.
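For intuition about the prediction step, the toy sketch below shows one way a latent-space optimization can produce a future timestep: a latent vector is fitted so that the generator output matches the known timesteps, and the final timestep of that output is read off as the prediction. This is an assumption about the general predictive GAN idea, not the ddgan implementation. A fixed random linear map stands in for a trained generator, plain gradient descent stands in for the optimizer, and the name `predict_next_step` is hypothetical.

```python
import numpy as np


def predict_next_step(generator_matrix, known_steps, n_latent, n_iter=2000):
    """Toy latent-space optimization with a linear stand-in generator.

    generator_matrix: (nsteps * nPOD, n_latent) matrix, G(z) = matrix @ z
    known_steps:      flat array holding the first (nsteps - 1) timesteps
    returns:          the final timestep of G(z) after fitting z to known_steps
    """
    n_known = known_steps.size
    w_known = generator_matrix[:n_known]  # rows that produce the known timesteps
    # Step size from the largest singular value keeps gradient descent stable
    lr = 1.0 / (2.0 * np.linalg.norm(w_known, 2) ** 2)
    z = np.zeros(n_latent)  # initial latent guess
    for _ in range(n_iter):
        residual = w_known @ z - known_steps
        z -= lr * 2.0 * (w_known.T @ residual)  # gradient of ||residual||^2
    return generator_matrix[n_known:] @ z


rng = np.random.default_rng(143)
nsteps, npod, n_latent = 5, 4, 3
G = rng.normal(size=(nsteps * npod, n_latent))  # stand-in for a trained generator
z_true = rng.normal(size=n_latent)
full = G @ z_true  # a trajectory the stand-in generator can produce exactly
known, target = full[:-npod], full[-npod:]
prediction = predict_next_step(G, known, n_latent)
print(np.max(np.abs(prediction - target)))  # maximum absolute prediction error
```

With a real generator the mapping is nonlinear, so the fit would rely on a gradient-based optimizer run for a fixed number of iterations, which is presumably what `optimizer_epochs` controls.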

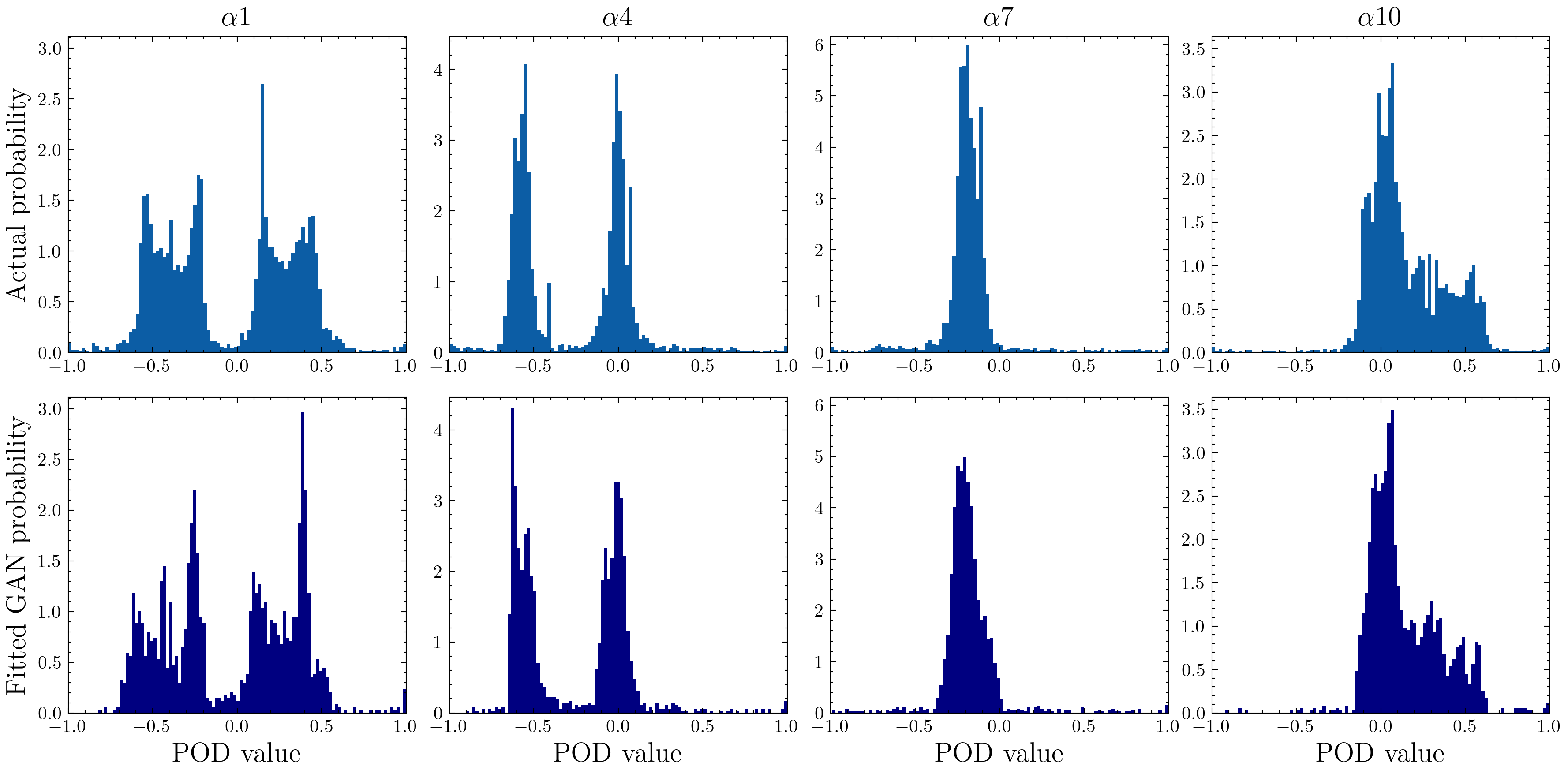

The data distribution of the first 10 POD coefficients before training the GAN, and its imitation by the GAN. Replication code can be found in examples.

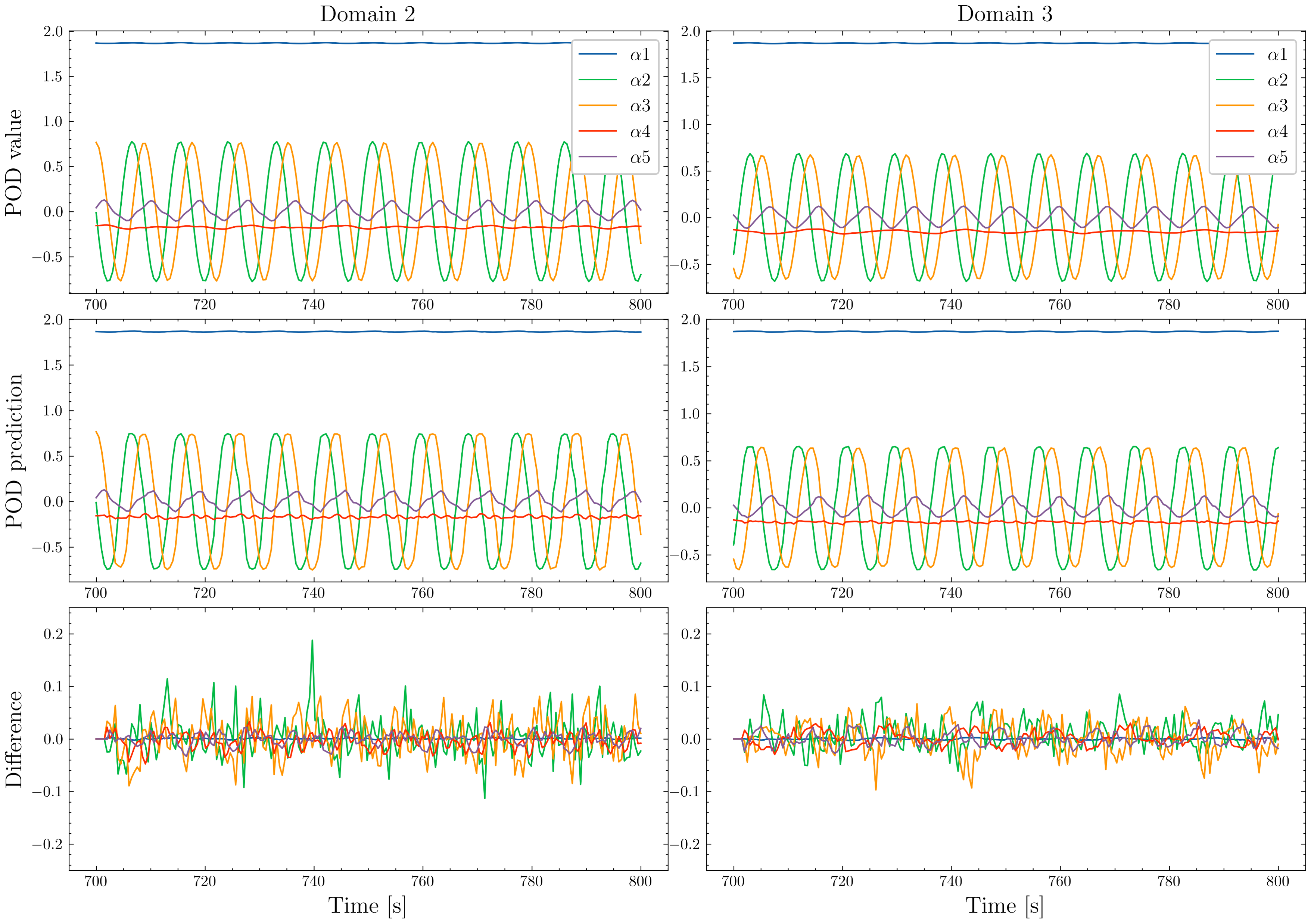

Line plots of the first 5 POD coefficients predicted for 200 time steps between the times 700 and 800. Each of the two charts shows (from top to bottom) the prediction targets, the predicted values and the difference between the two. Replication code can be found in examples.

Distributed under the MIT License. See LICENSE for more information.

- Tómasson, Jón Atli jon.tomasson1@gmail.com

- Dr. Claire Heaney

- Prof. Christopher Pain

- Zef Wolffs