The advent of vision-language models has fostered interactive conversations between AI-enabled models and humans. Yet applying these models in clinics means dealing with daunting challenges around large-scale training data and financial and computational resources. Here we propose a cost-effective instruction learning framework for conversational pathology, named CLOVER. CLOVER trains only a lightweight module and uses instruction tuning while freezing the parameters of the large language model. Instead of using costly GPT-4, we design prompts for GPT-3.5 to build generation-based instructions, emphasizing the utility of pathological knowledge derived from Internet sources. To augment the use of instructions, we construct a high-quality set of template-based instructions in the context of digital pathology. On two benchmark datasets, our findings reveal the strength of hybrid-form instructions for visual question answering in pathology. Extensive results show the cost-effectiveness of CLOVER in answering both open-ended and closed-ended questions, where CLOVER outperforms strong baselines that have 37 times more trainable parameters and use instruction data generated by GPT-4. Through instruction tuning, CLOVER exhibits robust few-shot learning on an external clinical dataset. These findings demonstrate that the cost-effective modeling of CLOVER could accelerate the adoption of rapid conversational applications in digital pathology.

- Checkpoints and instruction dataset will be released soon.

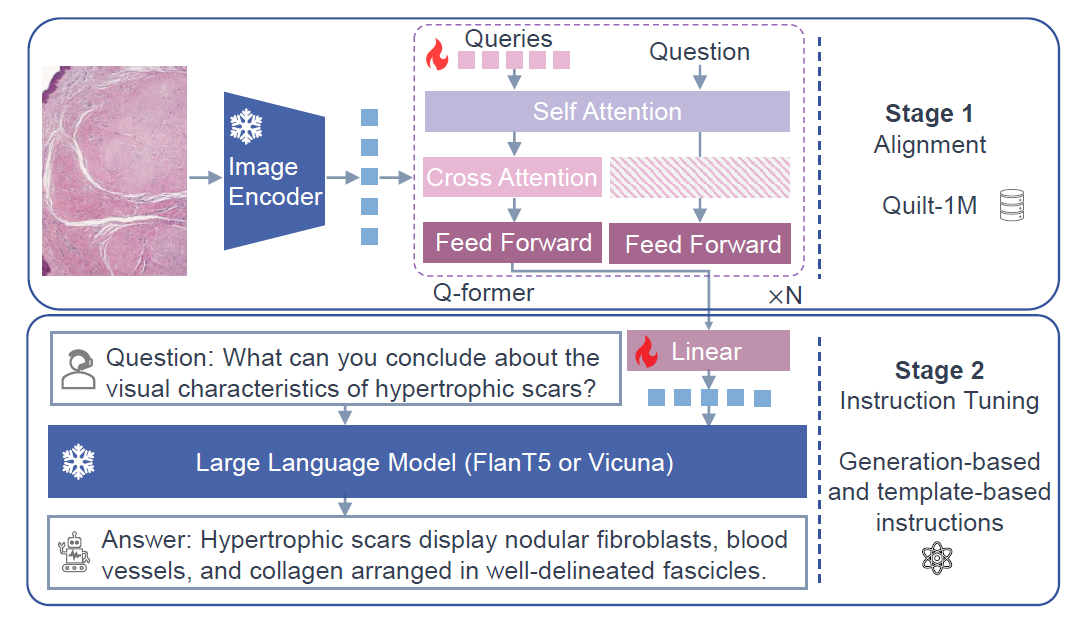

CLOVER employs the training framework of BLIP-2 to achieve fast domain tuning with lightweight parameters. The training process comprises two major stages: (i) alignment of vision and language and (ii) supervised fine-tuning with instructions. Alignment pushes the model to learn useful representations shared between vision and language. Instruction fine-tuning is vital for enabling the LLM to excel at visual question answering. Stage 1 requires image-text pairs as input, for which we use the large-scale Quilt-1M dataset. Stage 2 requires domain-specific instruction data. Because such instruction data are largely missing from the literature, we propose a low-cost method of instruction data generation designed specifically for pathological data.
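The hybrid-form instruction data for Stage 2 can be pictured with a minimal sketch. It combines template-based records (a fixed question paired with the image caption) with generation-based records (question-answer pairs returned by a text-only LLM such as GPT-3.5). Everything named here is an assumption for illustration: the template wording, the prompt text, the `Instruction` record layout, and the helper functions are hypothetical and are not the actual CLOVER templates or prompts. No API call is made; the LLM output is passed in as plain data.

```python
import random
from dataclasses import dataclass

# Hypothetical question templates; the real CLOVER templates are not shown in this README.
TEMPLATES = [
    "Describe the pathology image in detail.",
    "What can be observed in this histopathology image?",
    "Summarize the key findings in this image.",
]

# Hypothetical prompt wording for a text-only LLM (for example GPT-3.5).
GENERATION_PROMPT = (
    "You are a pathologist. Based only on the image description below, "
    "write {n} question-answer pairs about the image.\n"
    "Description: {caption}"
)


@dataclass
class Instruction:
    image: str     # path or identifier of the image
    question: str
    answer: str
    source: str    # "template" or "generated"


def template_instruction(image, caption, rng):
    """Pair a randomly chosen template question with the caption as the answer."""
    return Instruction(image, rng.choice(TEMPLATES), caption, "template")


def generation_prompt(caption, n=3):
    """Build the text prompt that would be sent to the LLM."""
    return GENERATION_PROMPT.format(n=n, caption=caption)


def hybrid_dataset(pairs, generated, seed=0):
    """Merge both instruction forms.

    pairs:     list of (image, caption) tuples
    generated: dict mapping image -> list of (question, answer) tuples
               already returned by the LLM
    """
    rng = random.Random(seed)
    records = []
    for image, caption in pairs:
        records.append(template_instruction(image, caption, rng))
        for question, answer in generated.get(image, []):
            records.append(Instruction(image, question, answer, "generated"))
    return records


# Made-up example data, for illustration only.
pairs = [("slide_001.png", "Sheets of atypical cells with enlarged nuclei.")]
generated = {"slide_001.png": [("Are the nuclei enlarged?", "Yes.")]}
records = hybrid_dataset(pairs, generated)
```

In this sketch each image contributes one template-based record plus however many generated pairs exist for it, so the two forms can be mixed or ablated by filtering on `source`.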

- Stage 1: The Quilt-1M dataset can be downloaded from Google or Zenodo.

- Stage 2: CLOVER Instructions will be released. You can also use our prompt to generate the data yourself from PY FILE.

- Creating conda environment

conda create -n clover python=3.9

conda activate clover

- Building from source

git clone https://github.com/JLINEkai/CLOVER.git

cd CLOVER

pip install -r requirements.txt

- Stage 1 (Alignment):

python train_blip2qformer.py

- Stage 2 (Instruction finetuning):

You can choose the large language model (LLM) in FILE. We provide FlanT5XL and Vicuna 7B.

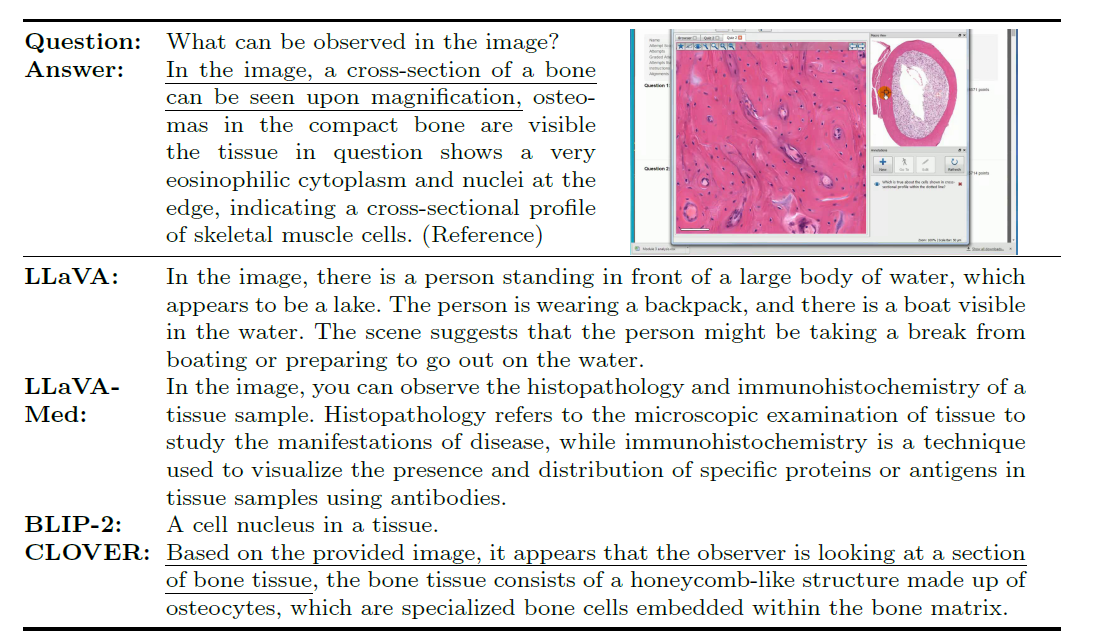

python -m torch.distributed.run --nproc_per_node=1 train.py python -m torch.distributed.run --nproc_per_node=1 evaluate.py --cfg-path lavis/projects/blip2/eval/vqav2_zeroshot_flant5xl_eval.yamlQualitative comparisons of visual question answering on QUILT-VQA. (Image source: QUILT-VQA)

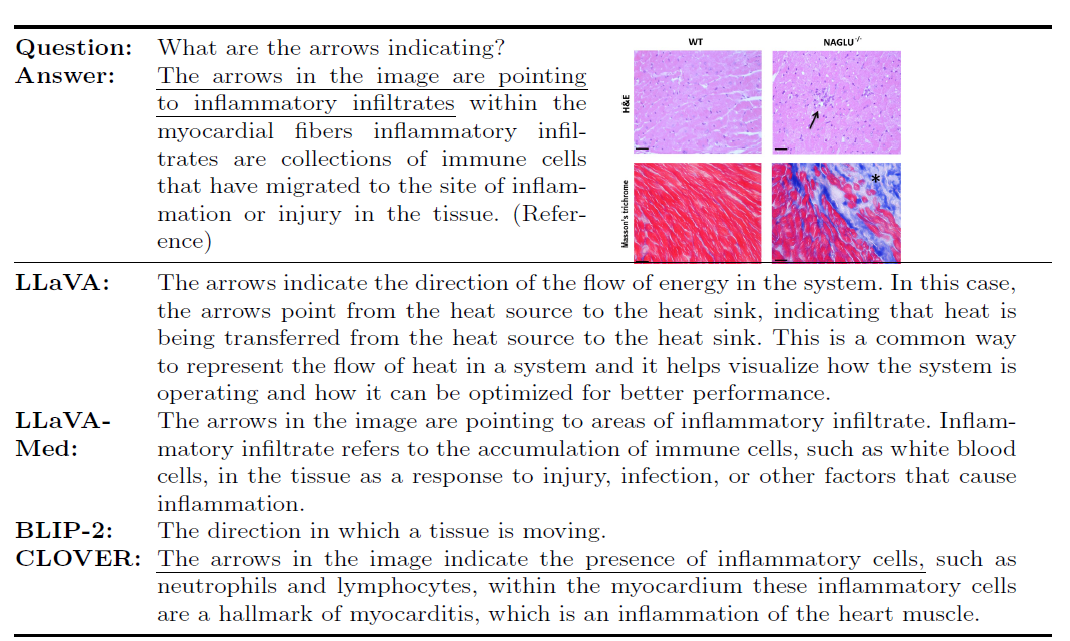

Qualitative comparisons of visual question answering on LLaVA-Med-17K. (Image source: link)
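For a quantitative check alongside these qualitative comparisons, closed-ended and open-ended answers are often scored differently. The sketch below is one common convention and an assumption on our part, not necessarily what `evaluate.py` computes: exact-match accuracy for closed-ended (for example yes/no) questions and token recall for open-ended answers. The function names are hypothetical.

```python
import re


def normalize(text):
    """Lowercase, replace punctuation with spaces, and split into tokens."""
    return re.sub(r"[^a-z0-9\s]", " ", text.lower()).split()


def closed_accuracy(predictions, references):
    """Fraction of closed-ended answers that match the reference after normalization."""
    if not references:
        return 0.0
    hits = sum(normalize(p) == normalize(r) for p, r in zip(predictions, references))
    return hits / len(references)


def open_recall(prediction, reference):
    """Fraction of reference tokens that also appear in the predicted answer."""
    ref_tokens = normalize(reference)
    if not ref_tokens:
        return 0.0
    pred_tokens = set(normalize(prediction))
    return sum(token in pred_tokens for token in ref_tokens) / len(ref_tokens)


# Made-up answers, for illustration only.
acc = closed_accuracy(["Yes.", "no"], ["yes", "yes"])
rec = open_recall("The image shows tumor cells", "tumor cells present")
```

Token recall rewards long answers that happen to contain the reference words, so it is best read together with the qualitative examples rather than on its own.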

If you have any questions, please send an email to chenkaitao@pjlab.org.cn.

- Our model is based on BLIP-2 (BLIP-2: Bootstrapping Language-Image Pre-training with Frozen Image Encoders and Large Language Models).