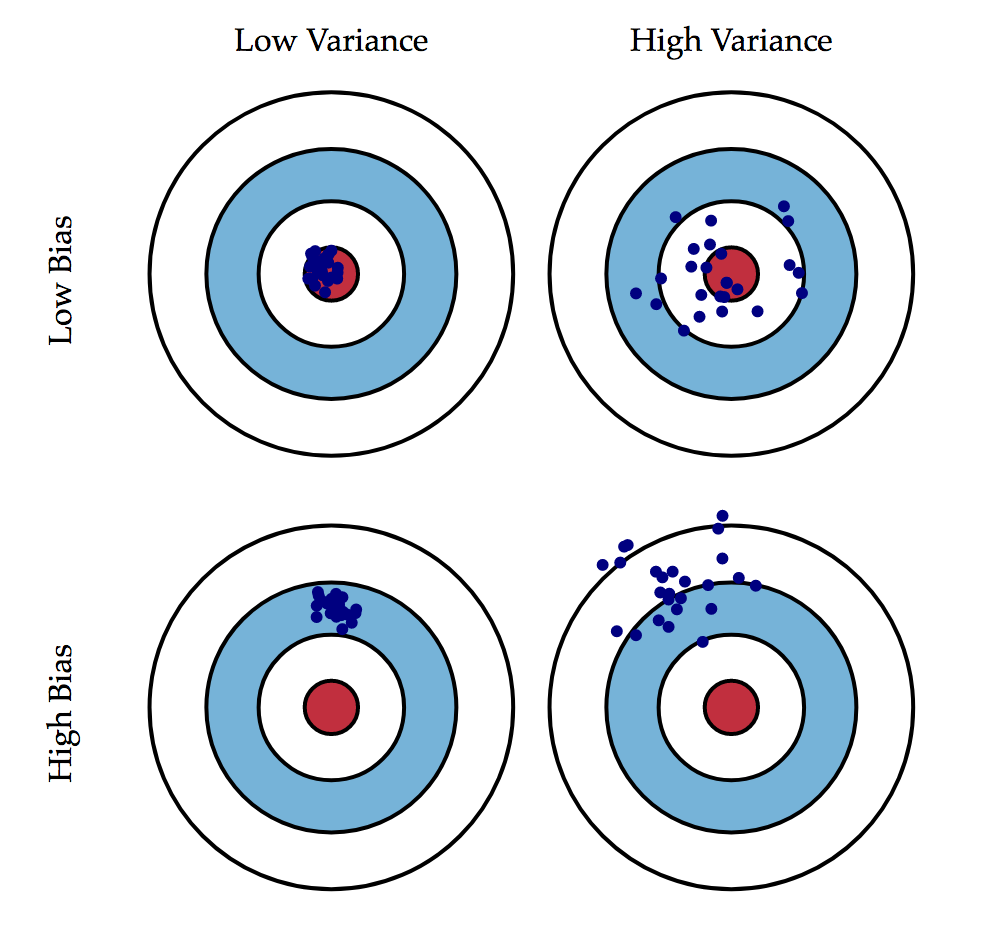

When modelling, we are trying to build a predictor that generalizes to data we have not yet seen. We have seen that a train-test split keeps us honest: it stops us from tuning a model so closely to the training data that it fails on new data. Another perspective on this problem of overfitting versus underfitting is the bias-variance tradeoff. To investigate further, we can decompose the mean squared error of a model into bias, variance, and irreducible error:

$E[(y-\hat{f}(x))^2] = Bias(\hat{f}(x))^2 + Var(\hat{f}(x)) + \sigma^2$
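For reference, the terms in this decomposition are usually defined as sketched below. This assumes the standard setup, which the text above does not spell out, in which the data are generated as a true function plus zero-mean noise. The symbols $f$ and $\epsilon$ are introduced here only for illustration.

```latex
\begin{aligned}
y &= f(x) + \epsilon, \qquad E[\epsilon] = 0, \quad Var(\epsilon) = \sigma^2 \\
Bias(\hat{f}(x)) &= E[\hat{f}(x)] - f(x) \\
Var(\hat{f}(x)) &= E\big[(\hat{f}(x) - E[\hat{f}(x)])^2\big]
\end{aligned}
```

Under this reading the expectations are taken over the training sets the model could have been fit on. The bias measures how far the average prediction sits from the truth, the variance measures how much predictions scatter around that average, and $\sigma^2$ is noise that no model can remove.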

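    import pandas as pd

    df = pd.read_excel('./movie_data_detailed_with_ols.xlsx')

    def norm(col):
        """Min-max scale a column to the range [0, 1]."""
        minimum = col.min()
        maximum = col.max()
        return (col - minimum) / (maximum - minimum)

    # Normalize every column that supports arithmetic;
    # columns that raise (e.g. text columns such as title) are left unchanged.
    for col in df:
        try:
            df[col] = norm(df[col])
        except Exception:
            pass

    X = df[['budget', 'imdbRating', 'Metascore', 'imdbVotes']]
    y = df['domgross']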

    df.head()

| | budget | domgross | title | Response_Json | Year | imdbRating | Metascore | imdbVotes | Model |
|---|---|---|---|---|---|---|---|---|---|
| 0 | 0.034169 | 0.055325 | 21 & Over | NaN | 0.997516 | 0.839506 | 0.500000 | 0.384192 | 0.261351 |
| 1 | 0.182956 | 0.023779 | Dredd 3D | NaN | 0.999503 | 0.000000 | 0.000000 | 0.000000 | 0.070486 |
| 2 | 0.066059 | 0.125847 | 12 Years a Slave | NaN | 1.000000 | 1.000000 | 1.000000 | 1.000000 | 0.704489 |
| 3 | 0.252847 | 0.183719 | 2 Guns | NaN | 1.000000 | 0.827160 | 0.572917 | 0.323196 | 0.371052 |
| 4 | 0.157175 | 0.233625 | 42 | NaN | 1.000000 | 0.925926 | 0.645833 | 0.137984 | 0.231656 |
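The introduction refers to a train-test split. As a rough sketch of what such a split does, the snippet below shuffles row positions and holds out a fraction of them. The helper name `split_indices`, the 20% test fraction, and the seed are assumptions made for illustration and are not part of the lab.

```python
import random

def split_indices(n_rows, test_fraction=0.2, seed=42):
    """Return (train_idx, test_idx): a shuffled partition of range(n_rows)."""
    indices = list(range(n_rows))
    random.Random(seed).shuffle(indices)            # reproducible shuffle
    n_test = max(1, round(n_rows * test_fraction))  # hold out at least one row
    return sorted(indices[n_test:]), sorted(indices[:n_test])

# Hypothetical example: 30 rows, 20% held out for testing
train_idx, test_idx = split_indices(30)
print(len(train_idx), len(test_idx))
```

With a pandas DataFrame, index lists like these could be passed to `.iloc` to select the rows. In practice scikit-learn's `train_test_split` is the more common way to do this.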

    #Your code here

    #Your code here

    import matplotlib.pyplot as plt
    %matplotlib inline

    #Your code here

    #Your code here

Write a formula to calculate the bias of a model's predictions given the actual data.

(The expected value can simply be taken as the mean or average value.)
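One possible reading of this step, taking the expected value as a plain mean: the bias is the mean of the predictions minus the mean of the actual values. The argument names `y` and `y_hat` and the toy numbers are assumptions for illustration. This is a sketch, not the lab's reference solution.

```python
from statistics import fmean

def bias(y, y_hat):
    """Mean prediction minus mean actual value (expected value taken as the mean)."""
    return fmean(y_hat) - fmean(y)

# Toy data (hypothetical): every prediction overshoots the actual value by 0.5
actual = [1.0, 2.0, 3.0, 4.0]
predicted = [1.5, 2.5, 3.5, 4.5]
print(bias(actual, predicted))
```

A positive result means the model overpredicts on average, and a negative result means it underpredicts. Because `fmean` accepts any iterable of numbers, the same function should also work on pandas Series.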

    def bias():
        pass

Write a formula to calculate the variance of a model's predictions (or any set of data).
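A minimal sketch of this step, assuming the population form of the variance (dividing by n rather than n - 1). That choice is an assumption, since the prompt does not say which form is intended.

```python
from statistics import fmean

def variance(values):
    """Population variance: the mean squared deviation from the mean."""
    values = list(values)  # allow generators as well as sequences
    mean = fmean(values)
    return fmean((v - mean) ** 2 for v in values)

# Toy data (hypothetical)
print(variance([1.0, 2.0, 3.0, 4.0]))
```

Note that NumPy's `np.var` also divides by n by default, while pandas' `Series.var` divides by n - 1, so results from those libraries can differ slightly on small samples.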

    def variance():
        pass

5. Use your functions to calculate the bias and variance of your model. Do this separately for the train and test sets.
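As a rough illustration of this comparison, the sketch below fits a one-feature least-squares line to made-up data and reports bias and variance on each split. The data, the helper `fit_line`, and the definitions of `bias` and `variance` are all assumptions for illustration. The lab's own model uses four features from the movie dataset.

```python
from statistics import fmean

def bias(y, y_hat):
    """Mean prediction minus mean actual value."""
    return fmean(y_hat) - fmean(y)

def variance(values):
    """Population variance of a collection of numbers."""
    values = list(values)
    mean = fmean(values)
    return fmean((v - mean) ** 2 for v in values)

def fit_line(x, y):
    """Ordinary least squares for one feature; returns (slope, intercept)."""
    x_mean, y_mean = fmean(x), fmean(y)
    slope = (sum((a - x_mean) * (b - y_mean) for a, b in zip(x, y))
             / sum((a - x_mean) ** 2 for a in x))
    return slope, y_mean - slope * x_mean

# Hypothetical data, already split into train and test portions
x_train, y_train = [0.0, 1.0, 2.0, 3.0, 4.0], [0.1, 0.9, 2.2, 2.8, 4.0]
x_test, y_test = [0.5, 1.5, 3.5], [0.8, 1.2, 3.9]

slope, intercept = fit_line(x_train, y_train)
y_hat_train = [slope * a + intercept for a in x_train]
y_hat_test = [slope * a + intercept for a in x_test]

for name, y, y_hat in [('Train', y_train, y_hat_train), ('Test', y_test, y_hat_test)]:
    print('{} bias: {:.4f}  variance: {:.4f}'.format(name, bias(y, y_hat), variance(y_hat)))
```

A least-squares fit with an intercept reproduces the mean of the training targets, so under this definition the training bias comes out at essentially zero. The test bias generally does not, which is why the two sets are worth comparing.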

    #Train Set
    b = None #Your code here
    v = None #Your code here
    #print('Bias: {} \nVariance: {}'.format(b,v))

    #Test Set
    b = None #Your code here
    v = None #Your code here
    #print('Bias: {} \nVariance: {}'.format(b,v))

#Your description here (this cell is formatted using markdown)

7. Overfit a new model by creating additional features by raising current features to various powers.
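One way to build such features is sketched below on a plain dictionary of columns. The helper name `add_power_features`, the `name^power` naming scheme, and the sample values are assumptions for illustration. With a pandas DataFrame the same idea could be written column by column as `df[col] ** power`.

```python
def add_power_features(columns, max_power=3):
    """Return a new dict of columns with each original column raised to powers 2..max_power."""
    expanded = dict(columns)  # keep the original features
    for name, values in columns.items():
        for power in range(2, max_power + 1):
            expanded['{}^{}'.format(name, power)] = [v ** power for v in values]
    return expanded

# Hypothetical normalized columns, named after two of the lab's features
features = {'budget': [0.0, 0.5, 1.0], 'imdbRating': [0.2, 0.4, 0.8]}
expanded = add_power_features(features, max_power=3)
print(list(expanded))
```

Each extra power gives the model more freedom to bend toward the training data, so a larger `max_power` should make overfitting easier to provoke.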

    #Your code here

    #Your code here

    #Your code here

    #Your code here

    #Your code here

#Your description here (this cell is formatted using markdown)