Adaptive Gradient Methods Converge Faster with Over-Parameterization (and you can do a line-search) [Paper]

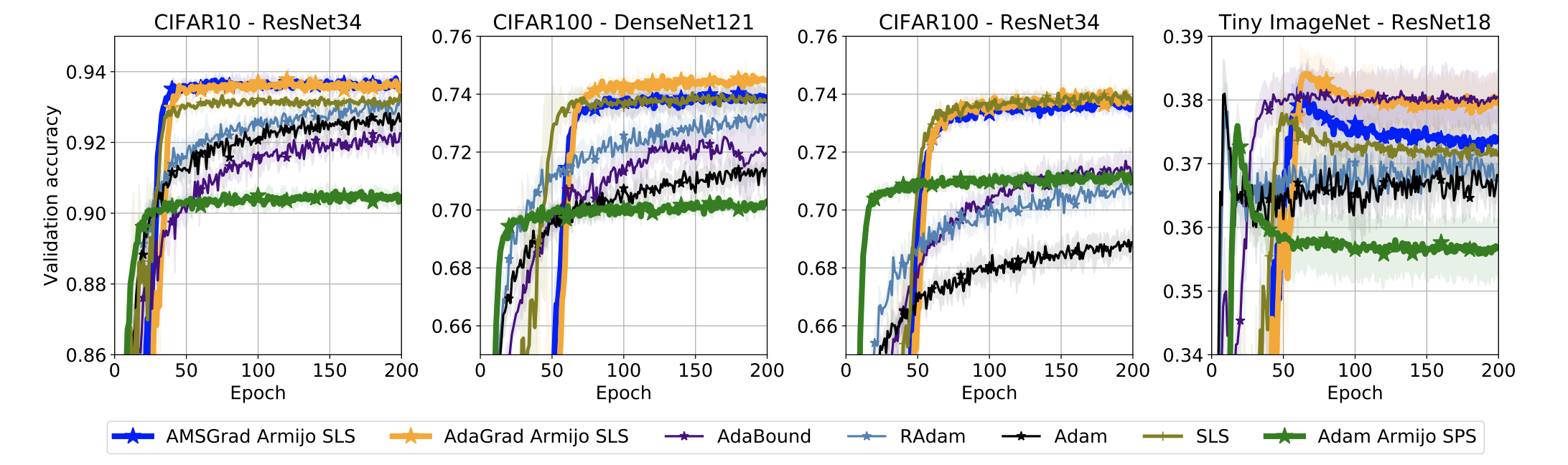

Our AMSGrad Armijo SLS and AdaGrad Armijo SLS consistently achieve the best generalization results.

    pip install git+https://github.com/IssamLaradji/ada_sls.git

Use AdaSLS in your code by adding the following snippet:

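    import torch
    import adasls

    # `model`, `X` and `Y` are assumed to be defined already:
    # a torch.nn.Module plus its training inputs and targets.
    opt = adasls.AdaSLS(model.parameters())

    for epoch in range(100):
        opt.zero_grad()
        # The closure recomputes the loss so the optimizer can evaluate it during the step.
        closure = lambda: torch.nn.MSELoss()(model(X), Y)
        opt.step(closure=closure)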
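To see why `step` takes a closure rather than a precomputed loss, it can help to look at a backtracking Armijo line search in isolation. The sketch below is plain gradient descent on a scalar toy problem, not the adaptive variants from the paper and not the library's implementation. The function `armijo_step` and its parameters `c`, `beta` and `max_backtracks` are hypothetical names chosen for illustration.

```python
def armijo_step(loss_fn, grad_fn, w, step=1.0, c=0.1, beta=0.5, max_backtracks=50):
    """Take one gradient step on a scalar w using a backtracking Armijo line search."""
    loss = loss_fn(w)
    g = grad_fn(w)
    for _ in range(max_backtracks):
        w_new = w - step * g
        # Armijo condition: the loss must decrease by at least c * step * g^2.
        if loss_fn(w_new) <= loss - c * step * g * g:
            return w_new, step
        # Otherwise shrink the step size and try again.
        step *= beta
    return w, 0.0  # no acceptable step found; leave w unchanged


# Toy problem (an assumption for illustration): f(w) = (w - 3)^2
loss_fn = lambda w: (w - 3.0) ** 2
grad_fn = lambda w: 2.0 * (w - 3.0)

w = 0.0
for _ in range(20):
    w, used_step = armijo_step(loss_fn, grad_fn, w)
```

Here `loss_fn` plays the role of the closure: the search re-evaluates the loss at each candidate point, which is only possible if the optimizer can call the loss computation itself.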

Install the requirements:

    pip install -r requirements.txt

Run the experiments for the paper using the command below:

    python trainval.py -e ${GROUP}_${BENCHMARK} -sb ${SAVEDIR_BASE} -d ${DATADIR} -r 1

with the placeholders defined as follows.

{GROUP}:

Defines the set of optimizers to run, which can be either:

- `nomom` for optimizers without momentum; or
- `mom` for optimizers with momentum.

{BENCHMARK}:

Defines the dataset, evaluation metric and model for the experiments (see exp_configs.py), which can be:

- `syn` for the synthetic experiments;
- `kernels` for the kernel experiments;
- `mf` for the matrix factorization experiments;
- `mnist` for the MNIST experiments;
- `cifar10`, `cifar100`, `cifar10_nobn`, `cifar100_nobn` for the CIFAR experiments; or
- `imagenet200`, `imagenet10` for the ImageNet experiments.

{SAVEDIR_BASE}:

Defines the absolute path to where the results will be saved.

{DATADIR}:

Defines the absolute path containing the downloaded datasets.
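As a rough illustration of how the placeholders combine, the sketch below assembles the command line for one group and benchmark. The helper `build_command` and the two `/tmp/ada_sls/...` paths are hypothetical, and it assumes every group and benchmark pairing listed above is actually defined in exp_configs.py, which is worth checking before launching a run.

```python
import shlex

# Values taken from the placeholder descriptions above.
GROUPS = {"nomom", "mom"}
BENCHMARKS = {
    "syn", "kernels", "mf", "mnist",
    "cifar10", "cifar100", "cifar10_nobn", "cifar100_nobn",
    "imagenet200", "imagenet10",
}


def build_command(group, benchmark, savedir_base, datadir):
    """Return the trainval.py command line for one experiment group (hypothetical helper)."""
    if group not in GROUPS:
        raise ValueError(f"unknown group: {group!r}")
    if benchmark not in BENCHMARKS:
        raise ValueError(f"unknown benchmark: {benchmark!r}")
    args = [
        "python", "trainval.py",
        "-e", f"{group}_{benchmark}",  # experiment name is GROUP_BENCHMARK
        "-sb", savedir_base,
        "-d", datadir,
        "-r", "1",
    ]
    return shlex.join(args)


# Hypothetical paths; replace them with your own absolute paths.
cmd = build_command("nomom", "mnist", "/tmp/ada_sls/results", "/tmp/ada_sls/data")
print(cmd)
```

With these inputs the experiment name passed to `-e` is `nomom_mnist`.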

    @article{vaswani2020adaptive,
      title={Adaptive Gradient Methods Converge Faster with Over-Parameterization (and you can do a line-search)},
      author={Vaswani, Sharan and Kunstner, Frederik and Laradji, Issam and Meng, Si Yi and Schmidt, Mark and Lacoste-Julien, Simon},
      journal={arXiv preprint arXiv:2006.06835},
      year={2020}
    }