CO2 vs. Temperature Exercise (Production Code)

This repository contains the production version of the code from the associated Databricks exercise. The goal is to demonstrate what that logic looks like as production code, with tests and a pipeline to deploy it to a target.

As a reminder, this code aims to answer the following questions:

- Which countries are worst hit (higher temperature anomalies)?

- Which countries are the biggest emitters?

- What are some attempts at ranking “biggest polluters” in a sensible way?
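To make the last question concrete, here is a minimal sketch of why the choice of ranking metric matters. It is not the repository's implementation: it uses plain Python instead of Spark, the `CountryEmissions` class and `rank_by` helper are hypothetical names, the per-capita metric is only one assumed candidate for a "sensible" ranking, and the numbers are made-up placeholders rather than values from the exercise data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CountryEmissions:
    """Hypothetical record; field names are assumptions, not the exercise schema."""
    country: str
    total_co2_mt: float  # total annual CO2 emissions in million tonnes (made-up)
    population_m: float  # population in millions (made-up)

    @property
    def per_capita_t(self) -> float:
        # million tonnes / million people = tonnes per person
        return self.total_co2_mt / self.population_m


def rank_by(records, key):
    """Return country names sorted from highest to lowest by `key`."""
    return [r.country for r in sorted(records, key=key, reverse=True)]


# Placeholder values for illustration only; not taken from the exercise data.
SAMPLE = [
    CountryEmissions("Country A", total_co2_mt=1000.0, population_m=500.0),
    CountryEmissions("Country B", total_co2_mt=300.0, population_m=30.0),
    CountryEmissions("Country C", total_co2_mt=50.0, population_m=2.0),
]

by_total = rank_by(SAMPLE, key=lambda r: r.total_co2_mt)
by_per_capita = rank_by(SAMPLE, key=lambda r: r.per_capita_t)

print("By total emissions:     ", by_total)
print("By per-capita emissions:", by_per_capita)
```

With these placeholder values the two rankings come out in opposite orders, which is the kind of disagreement the third question asks you to reason about.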

For more information on the data sources, please visit the associated Databricks exercise.

This code is designed to be deployed as an AWS Glue Job.

Prerequisites

- Basic knowledge of Python, Spark, Docker, Terraform

- Access to an AWS account (Optional)

Quickstart

- Mirror this repo in your account as a PRIVATE repo (since you're running your own self-hosted Github Runners, you'll want to ensure your project is Private)

- Set up your Development Environment

- Fetch input data:

./go fetch-data

- Optionally, set up a CI/CD pipeline

- Optional, but required if you set up the CI/CD pipeline in the previous step: set up an AWS bucket for the pipeline to interact with. Simply run:

git submodule add git@github.com:data-derp/s3-bucket-aws-cloudformation.git

and the pipeline will take care of setting up the bucket for you

- Fix the tests in data-ingestion/ and data-transformation/ (in that order). See Development Environment for tips and tricks on running python/tests in the dev-container.

- Delete your AWS Resources when you're done if you set up the pipeline and the bucket (or risk AWS charges)

Delete your AWS Resources

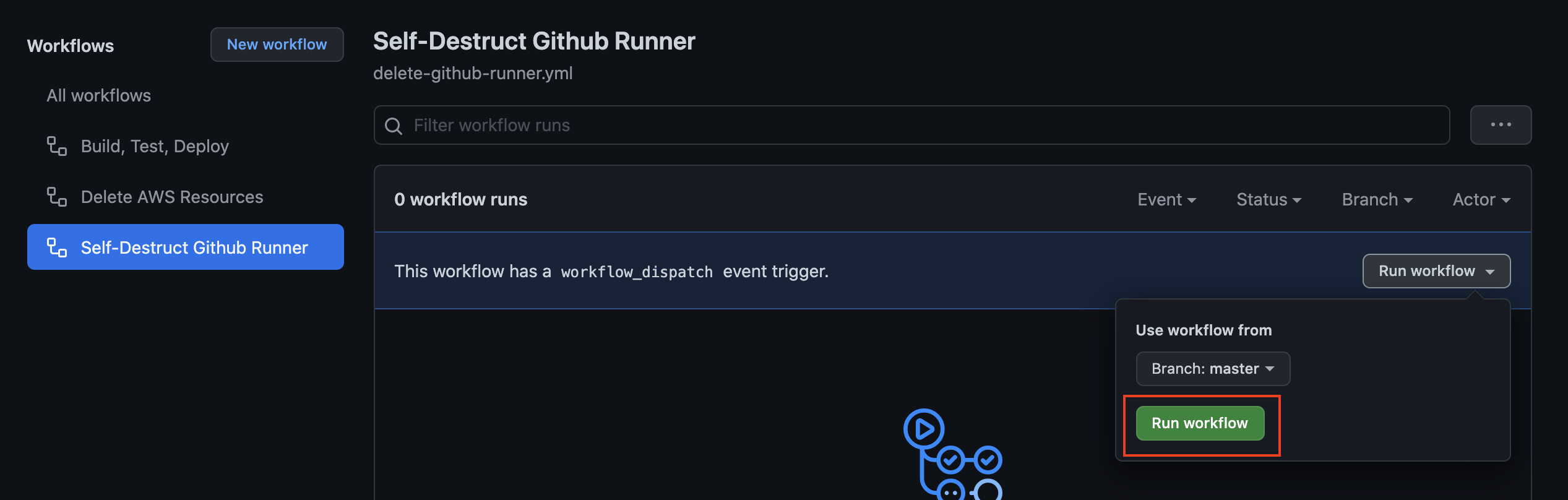

Github Runner

The Github Runner is the most expensive piece of infrastructure and can easily rack up charges. It's your responsibility to bring it down again. We provide a "Self-Destruct" workflow that triggers the deletion but doesn't check the final status (once the Github Runner has been deleted, the pipeline no longer has the AWS connection needed to check it).

Manually trigger the workflow:

Once this pipeline is successful, please visit AWS Cloudformation to ensure that the stack has been successfully deleted.

NOTE: at the moment, the stack fails to delete completely (role/policy detachments) because of a failsafe: the Github Runner role is designed so that it cannot change its own policies. Until a change is considered, fully deleting the stack requires manual intervention by a user with higher permissions. However, the most important aspect is that it should successfully delete the compute machines that would impact your billing.

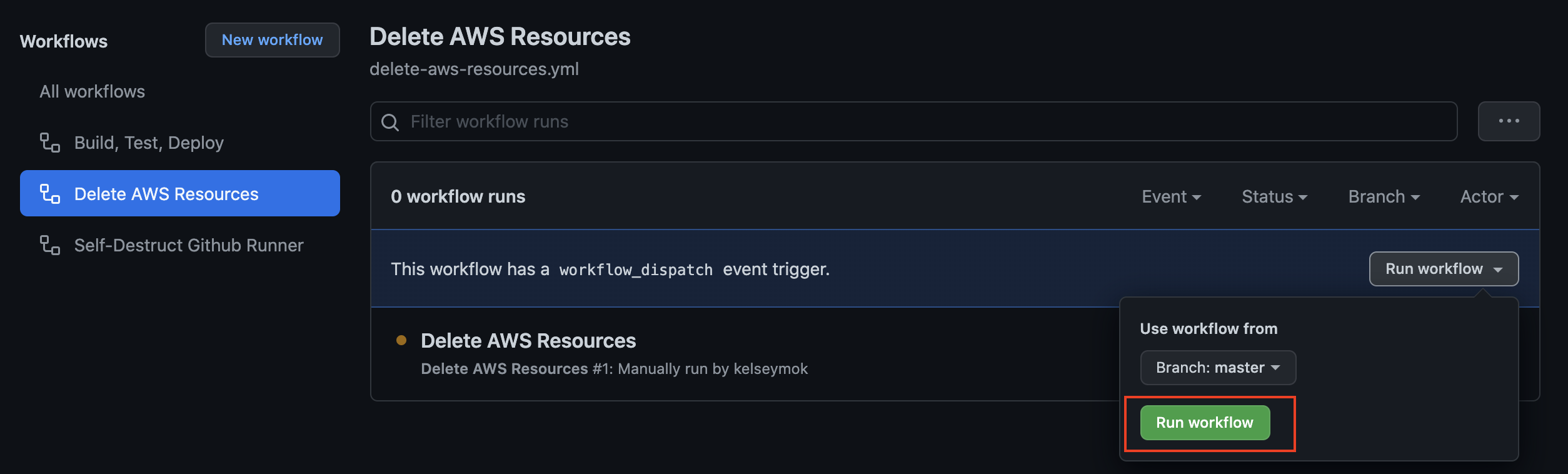
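If you would rather check the stack from a script than from the AWS console, the following is a minimal sketch under stated assumptions. The helper names `classify_stack_status` and `check_runner_stack` are hypothetical and not part of this repository. The client is assumed to be a boto3 CloudFormation client, and the stack ID is a placeholder you need to look up yourself. Per the note above, a `DELETE_FAILED` result is currently expected and needs manual cleanup by a user with higher permissions.

```python
def classify_stack_status(status):
    """Map a CloudFormation StackStatus string to a short verdict."""
    if status == "DELETE_COMPLETE":
        return "deleted"
    if status == "DELETE_FAILED":
        return "delete failed: manual cleanup needed"
    if status == "DELETE_IN_PROGRESS":
        return "deleting: check again shortly"
    return "still present"


def check_runner_stack(cfn_client, stack_id):
    """Look up a stack through a boto3-style CloudFormation client.

    `stack_id` should be the stack's unique ID (its ARN): CloudFormation
    only returns already-deleted stacks when queried by ID, not by name.
    """
    response = cfn_client.describe_stacks(StackName=stack_id)
    status = response["Stacks"][0]["StackStatus"]
    return status, classify_stack_status(status)


# Example usage (assumes boto3 is installed and AWS credentials are configured;
# "<your-stack-id>" is a placeholder):
#
#   import boto3
#   print(check_runner_stack(boto3.client("cloudformation"), "<your-stack-id>"))
```

The client is passed in as an argument so the status logic can be exercised without AWS access. Even when the stack reports a failed delete, it is still worth confirming that the compute machines are gone, since those are what drive the bill.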

AWS S3 Bucket

We provide a "Delete AWS Resources" pipeline which deletes the S3 bucket created in the Quickstart.

Manually trigger the workflow:

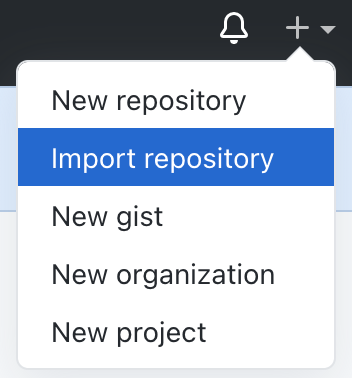

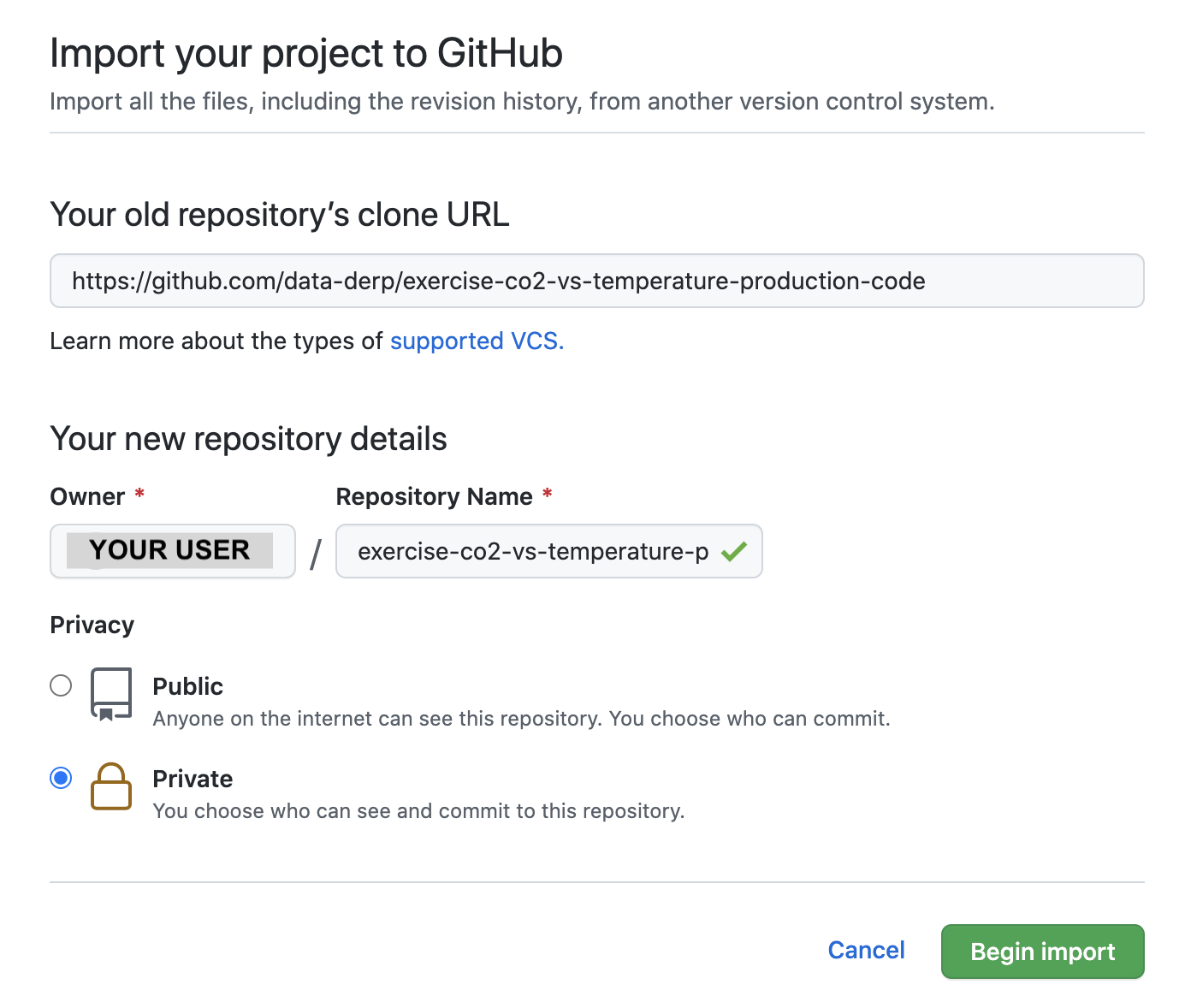

Mirror the Repository

- Import https://github.com/data-derp/exercise-co2-vs-temperature-production-code as a PRIVATE repo called exercise-co2-vs-temperature-production-code.

- Clone the new repo locally and add the original repository as a source:

git clone git@github.com:<your-username>/exercise-co2-vs-temperature-production-code.git

cd ./exercise-co2-vs-temperature-production-code

git remote add source git@github.com:data-derp/exercise-co2-vs-temperature-production-code.git

- To pull in new changes:

git fetch source

git rebase source/master

Set up a CI/CD Pipeline (optional)

In this step, we will bootstrap a Self-Hosted Github Runner. What is a Github Self-hosted Runner?

- Set up a Github Runner

- Set up workflows:

./setup-workflows -p <your-project-name> -m <your-module-name> -r <aws-region>

- Commit the new workflow template and push to see your changes.

- Fix the tests in data-ingestion/ and data-transformation/ (in that order) and push to see your changes run in the pipeline. See Development Environment for tips and tricks on running python/tests in the dev-container.

Future Development

- Script to pull in data

- Manual workflow to delete S3 Bucket and contents

- Manual workflow to delete Github Runner Cloudformation Stack (and Github Runner Reg Token)