⚠️ DISCONTINUATION OF PROJECT - This project will no longer be maintained by Intel. Intel has ceased development and contributions including, but not limited to, maintenance, bug fixes, new releases, or updates, to this project. Intel no longer accepts patches to this project. If you have an ongoing need to use this project, are interested in independently developing it, or would like to maintain patches for the open source software community, please create your own fork of this project.

Overview | Requirements | Example usage | Results | Paper | Citing

Code to accompany the paper Improving model calibration with accuracy versus uncertainty optimization [NeurIPS 2020].

Abstract: Obtaining reliable and accurate quantification of uncertainty estimates from deep neural networks is important in safety critical applications. A well-calibrated model should be accurate when it is certain about its prediction and indicate high uncertainty when it is likely to be inaccurate. Uncertainty calibration is a challenging problem as there is no ground truth available for uncertainty estimates. We propose an optimization method that leverages the relationship between accuracy and uncertainty as an anchor for uncertainty calibration. We introduce a differentiable accuracy versus uncertainty calibration (AvUC) loss function as an additional penalty term within loss-calibrated approximate inference framework. AvUC enables a model to learn to provide well-calibrated uncertainties, in addition to improved accuracy. We also demonstrate the same methodology can be extended to post-hoc uncertainty calibration on pretrained models.

This repository contains code for the accuracy vs uncertainty calibration (AvUC) loss and for variational layers (convolutional and linear) that perform mean-field stochastic variational inference (SVI) in Bayesian neural networks. It also includes implementations of the SVI and SVI-AvUC methods with ResNet-20 (CIFAR-10) and ResNet-50 (ImageNet) architectures.
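To make the mean-field idea concrete, here is a minimal pure-Python sketch of a single stochastic linear unit. It assumes a factorized Gaussian posterior with a softplus-parameterized standard deviation and a standard normal prior. The names `softplus`, `sample_weight`, `kl_gaussian` and `variational_linear` are hypothetical and are not the repository's layer API.

```python
import math
import random


def softplus(x):
    # Numerically stable log(1 + exp(x)); keeps the standard deviation positive.
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def sample_weight(mu, rho, rng):
    # Reparameterization trick: w = mu + sigma * eps, with eps ~ N(0, 1).
    sigma = softplus(rho)
    return mu + sigma * rng.gauss(0.0, 1.0)


def kl_gaussian(mu_q, sigma_q, mu_p=0.0, sigma_p=1.0):
    # Closed-form KL( N(mu_q, sigma_q^2) || N(mu_p, sigma_p^2) ).
    return (math.log(sigma_p / sigma_q)
            + (sigma_q ** 2 + (mu_q - mu_p) ** 2) / (2.0 * sigma_p ** 2)
            - 0.5)


def variational_linear(x, mus, rhos, rng):
    # One stochastic forward pass of a single-output linear unit (no bias).
    # Returns the output and this unit's KL contribution to the ELBO.
    out, kl = 0.0, 0.0
    for xi, mu, rho in zip(x, mus, rhos):
        out += xi * sample_weight(mu, rho, rng)
        kl += kl_gaussian(mu, softplus(rho))
    return out, kl
```

Calling `variational_linear` several times with the same input gives different outputs; averaging the resulting class probabilities over such Monte Carlo passes is the usual way to form the predictive distribution in this setting.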

We propose an optimization method that leverages the relationship between accuracy and uncertainty as an anchor for uncertainty calibration in deep neural network classifiers (Bayesian and non-Bayesian). We propose a differentiable approximation to the accuracy vs uncertainty (AvU) measure [Mukhoti & Gal 2018] and introduce a trainable AvUC loss function. In Bayesian decision theory [Berger 1985], a task-specific utility function is employed to accomplish optimal predictions. In this work, the AvU utility function is optimized during training to obtain well-calibrated uncertainties along with improved accuracy. We use the AvUC loss as an additional utility-dependent penalty term to improve uncertainty calibration, relying on the theoretically sound loss-calibrated approximate inference framework [Lacoste-Julien et al. 2011, Cobb et al. 2018] rooted in Bayesian decision theory.
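The AvU measure and a soft version of it can be sketched as follows. This is an illustrative pure-Python version, not the repository's implementation. It assumes predictive entropy as the uncertainty score and a fixed uncertainty threshold. The soft counts (weighting by confidence and by tanh of the uncertainty) follow the paper's description as understood here and should be treated as an assumption. The function names are hypothetical.

```python
import math


def predictive_entropy(probs):
    # Entropy of one predictive distribution, used here as the uncertainty score.
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def _argmax(probs):
    return max(range(len(probs)), key=probs.__getitem__)


def avu(probs_batch, labels, u_threshold):
    # Hard AvU: fraction of predictions that are accurate-and-certain
    # or inaccurate-and-uncertain.
    good = 0
    for probs, label in zip(probs_batch, labels):
        accurate = _argmax(probs) == label
        uncertain = predictive_entropy(probs) > u_threshold
        if accurate != uncertain:
            good += 1
    return good / len(labels)


def soft_avuc_loss(probs_batch, labels, u_threshold, eps=1e-10):
    # Soft counts replace the hard 0/1 counts so the quantity varies
    # smoothly with confidence and uncertainty.
    n_ac = n_au = n_ic = n_iu = 0.0
    for probs, label in zip(probs_batch, labels):
        conf = max(probs)
        u = predictive_entropy(probs)
        t = math.tanh(u)
        accurate = _argmax(probs) == label
        uncertain = u > u_threshold
        if accurate and not uncertain:
            n_ac += conf * (1.0 - t)
        elif accurate and uncertain:
            n_au += conf * t
        elif not accurate and not uncertain:
            n_ic += (1.0 - conf) * (1.0 - t)
        else:
            n_iu += (1.0 - conf) * t
    # Equals -log(soft AvU); zero when no mass falls in the AU or IC cells.
    return math.log(1.0 + (n_au + n_ic) / (n_ac + n_iu + eps))
```

In training, a term of this kind would be added to the usual objective and computed with tensor operations so that gradients flow through the confidence and uncertainty values; the plain-Python version above only illustrates the quantity being optimized.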

This code has been tested with PyTorch v1.6.0, torchvision v0.7.0 and Python 3.7.7.

Datasets:

- CIFAR-10 [Krizhevsky 2009]

- ImageNet [Deng et al. 2009] (download dataset to 'data/imagenet' folder)

- CIFAR-10 with corruptions [Hendrycks & Dietterich 2019] for dataset shift evaluation (download CIFAR-10-C dataset to 'data/CIFAR-10-C' folder)

- ImageNet with corruptions [Hendrycks & Dietterich 2019] for dataset shift evaluation (download ImageNet-C dataset to 'data/ImageNet-C' folder)

- SVHN [Netzer et al. 2011] for out-of-distribution evaluation (download file to 'data/SVHN' folder)

Dependencies:

- Create conda environment with python=3.7

- Install PyTorch and torchvision packages within conda environment following instructions from PyTorch install guide

- conda install -c conda-forge accimage

- pip install tensorboard

- pip install scikit-learn

We provide example usage for training and evaluating models with the SVI-AvUC, SVI and Vanilla (deterministic) methods on CIFAR-10 and ImageNet, along with the implementation of the AvUC loss and the Bayesian layers.

To train the Bayesian ResNet-20 model on CIFAR-10 with the SVI-AvUC method, run this script:

sh scripts/train_bayesian_cifar_avu.sh

To train the Bayesian ResNet-50 model on ImageNet with the SVI-AvUC method, run this script:

sh scripts/train_bayesian_imagenet_avu.sh

Our trained models can be downloaded from here. Download and untar the SVI-AvUC ImageNet model into the 'checkpoint/imagenet/bayesian_svi_avu/' folder.

To evaluate SVI-AvUC on CIFAR-10, CIFAR-10-C and SVHN, run the script below. Results (numpy files) will be saved in the 'logs/cifar/bayesian_svi_avu/preds' folder.

sh scripts/test_bayesian_cifar_avu.sh

To evaluate SVI-AvUC on ImageNet and ImageNet-C, run the script below. Results (numpy files) will be saved in the 'logs/imagenet/bayesian_svi_avu/preds' folder.

sh scripts/test_bayesian_imagenet_avu.sh

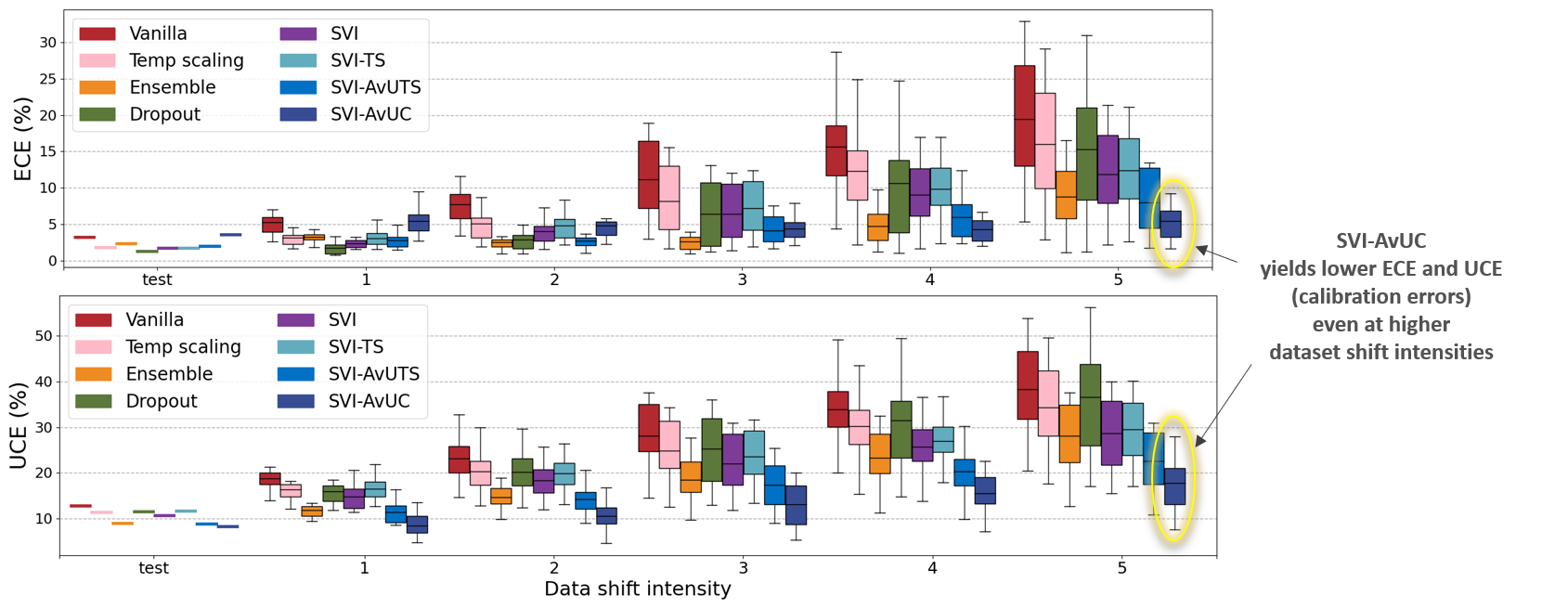

Model calibration under dataset shift

The figure below shows model calibration evaluated with expected calibration error (ECE↓) and expected uncertainty calibration error (UCE↓) on ImageNet under dataset shift. At each shift intensity level, the boxplot summarizes the results across 16 different dataset shift types. A well-calibrated model should keep calibration errors low even as the dataset shift increases.
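For readers unfamiliar with ECE, the sketch below shows the standard equal-width binning estimate. It is a minimal illustration and not the evaluation code used for the figure; the function name and the default of 10 bins are assumptions. Only ECE is shown here.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    # confidences: max predicted probability per example, in [0, 1].
    # correct: 1 if the prediction was right, 0 otherwise.
    bins = [[] for _ in range(n_bins)]
    for conf, hit in zip(confidences, correct):
        # Equal-width bins; a confidence of exactly 1.0 goes in the last bin.
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, hit))

    n = len(confidences)
    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(h for _, h in members) / len(members)
        # Weight each bin's |accuracy - confidence| gap by its share of examples.
        ece += (len(members) / n) * abs(accuracy - avg_conf)
    return ece
```

A perfectly calibrated model has matching accuracy and average confidence in every bin, so its ECE is zero; larger values indicate over- or under-confidence.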

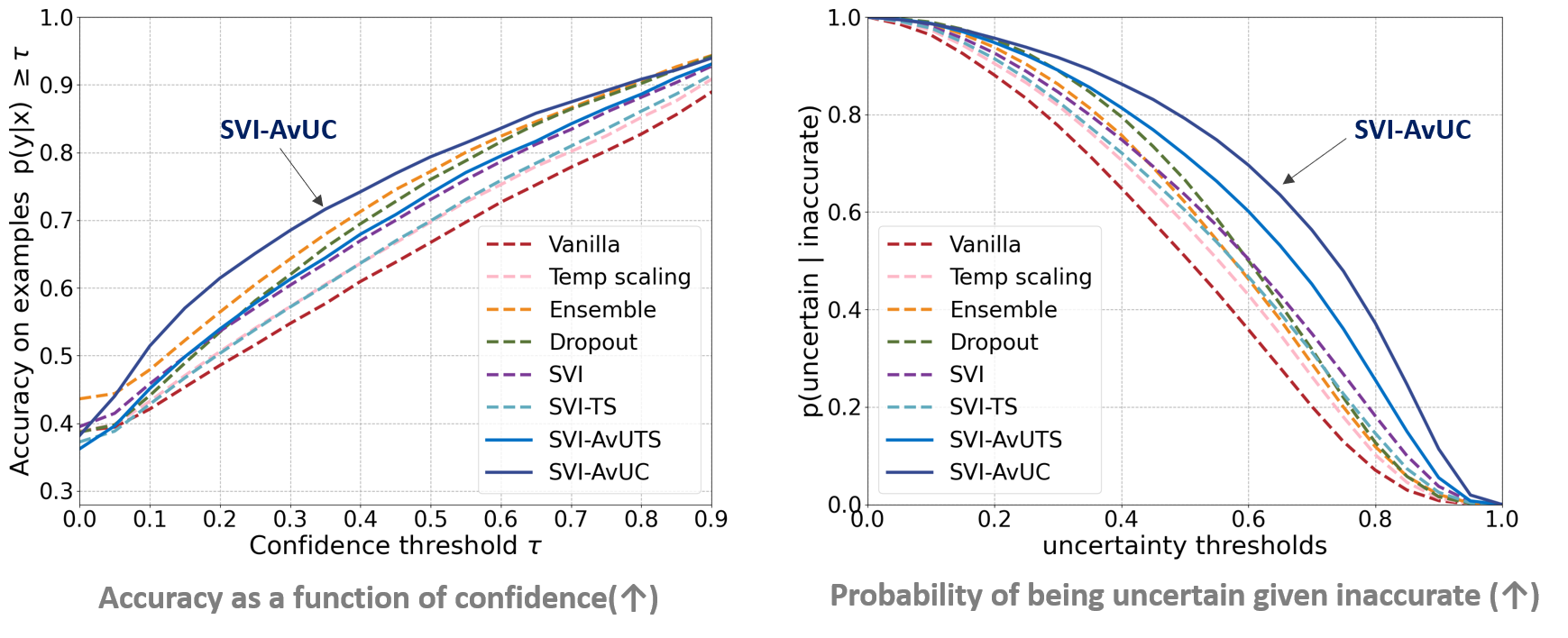

Model performance with respect to confidence and uncertainty estimates

A reliable model should be accurate when it is certain about its prediction and indicate high uncertainty when it is likely to be inaccurate. We evaluate the quality of confidence and predictive uncertainty estimates using accuracy vs confidence and p(uncertain | inaccurate) plots respectively. The plots below indicate SVI-AvUC is more accurate at higher confidence and more uncertain when making inaccurate predictions under distributional shift (ImageNet corrupted with Gaussian blur), compared to other methods.
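The two quantities plotted here can be sketched as below. This is a minimal illustration under stated assumptions: accuracy is computed over predictions whose confidence is at least a threshold `tau`, and an example counts as uncertain when its uncertainty score exceeds `u_threshold`. Both function names and both threshold conventions are hypothetical and may differ from the evaluation code behind the plots.

```python
def accuracy_at_confidence(confidences, correct, tau):
    # Accuracy restricted to predictions with confidence >= tau.
    kept = [hit for conf, hit in zip(confidences, correct) if conf >= tau]
    return sum(kept) / len(kept) if kept else float("nan")


def p_uncertain_given_inaccurate(uncertainties, correct, u_threshold):
    # Among wrong predictions, the fraction flagged as uncertain.
    wrong = [u for u, hit in zip(uncertainties, correct) if not hit]
    if not wrong:
        return float("nan")
    return sum(1 for u in wrong if u > u_threshold) / len(wrong)
```

Sweeping `tau` (or `u_threshold`) over a grid and plotting the returned values yields curves of the kind described above; higher is better for both.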

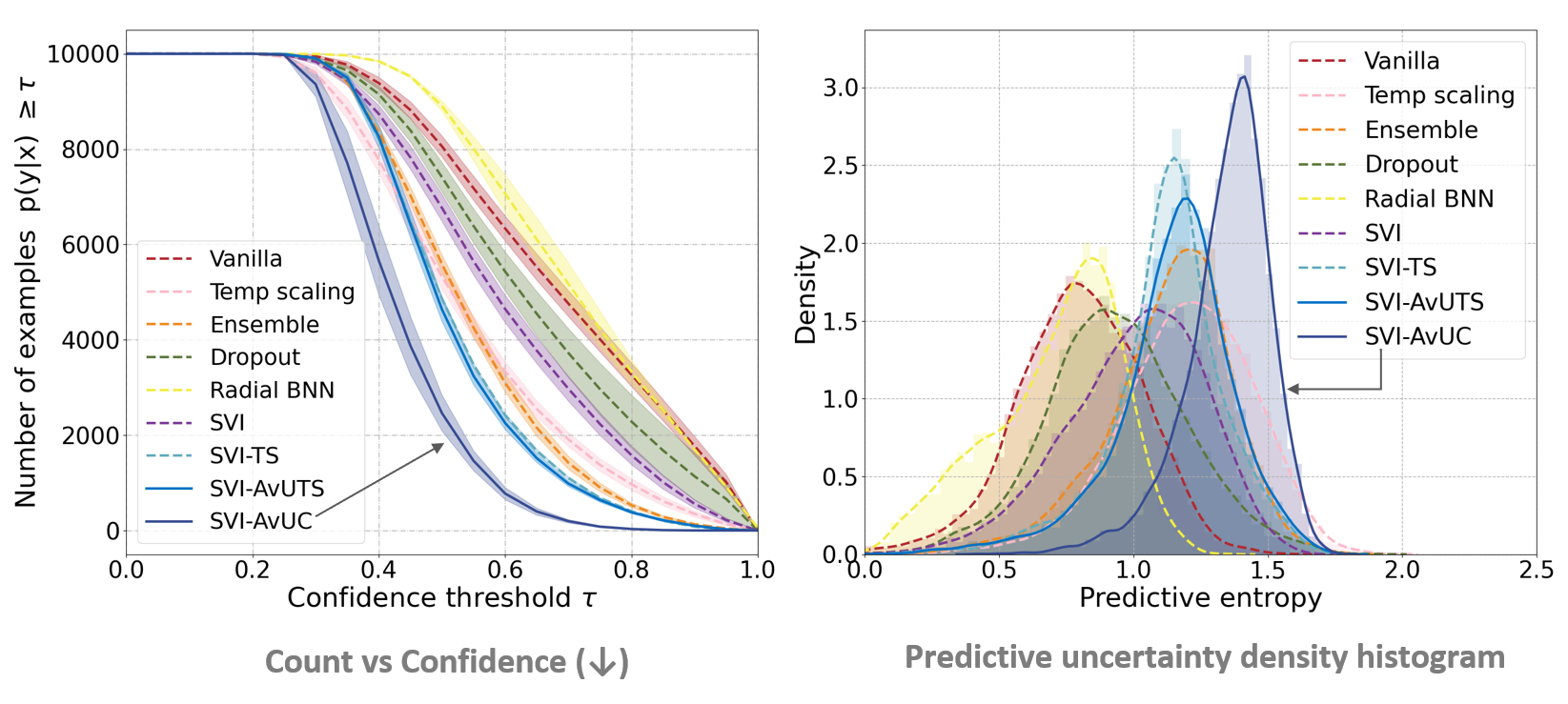

Model reliability towards out-of-distribution data

Out-of-distribution evaluation with SVHN data on the model trained on CIFAR-10. SVI-AvUC assigns high confidence to fewer examples and provides higher predictive uncertainty estimates on out-of-distribution data.

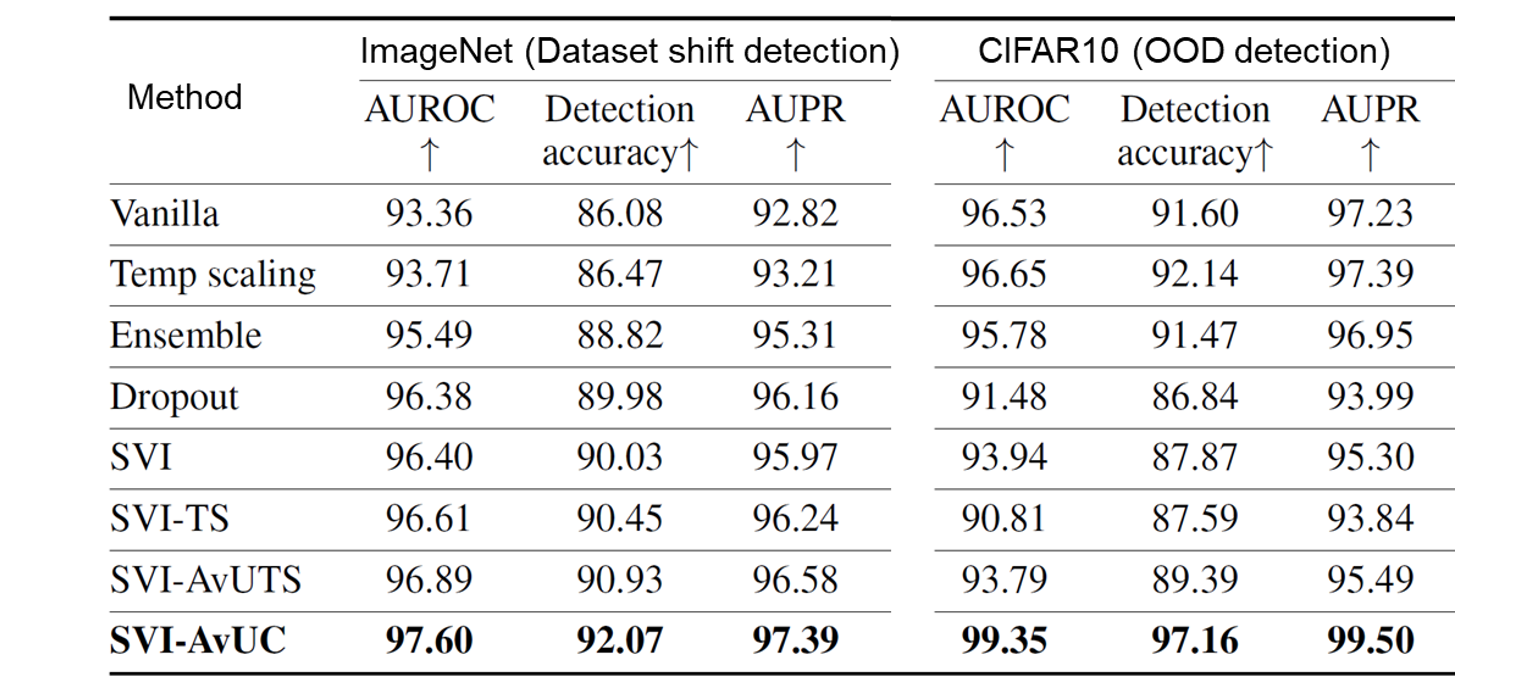

Distributional shift detection performance

Distributional shift detection using predictive uncertainty estimates. For dataset shift detection on ImageNet, test data corrupted with Gaussian blur at intensity level 5 is used. For out-of-distribution (OOD) detection, SVHN is used as OOD data on the model trained on CIFAR-10.
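One common way to score detection from uncertainty estimates is the area under the ROC curve (AUROC), treating shifted or OOD examples as positives. The sketch below assumes that metric for illustration; see the paper for the metrics actually reported. The function name is hypothetical, and the pairwise form is quadratic in the number of examples, so it suits small illustrations only.

```python
def detection_auroc(uncertainty_in, uncertainty_out):
    # Probability that a shifted/OOD example receives a higher uncertainty
    # score than an in-distribution example; ties count as half.
    wins = 0.0
    for u_out in uncertainty_out:
        for u_in in uncertainty_in:
            if u_out > u_in:
                wins += 1.0
            elif u_out == u_in:
                wins += 0.5
    return wins / (len(uncertainty_in) * len(uncertainty_out))
```

A value of 1.0 means the uncertainty scores separate the two sets perfectly, while 0.5 corresponds to chance.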

Please refer to the paper for more results. The results for the Vanilla, Temp scaling, Ensemble and Dropout methods are computed from the model predictions provided in the UQ benchmark [Ovadia et al. 2019].

If you find this useful in your research, please cite as:

@article{krishnan2020improving,

author={Ranganath Krishnan and Omesh Tickoo},

title={Improving model calibration with accuracy versus uncertainty optimization},

journal={Advances in Neural Information Processing Systems},

year={2020}

}