This repository is forked from the setup used for the video streaming experiments in the MMSys'20 paper "Comparing Fixed and Variable Segment Durations for Adaptive Video Streaming – A Holistic Analysis".

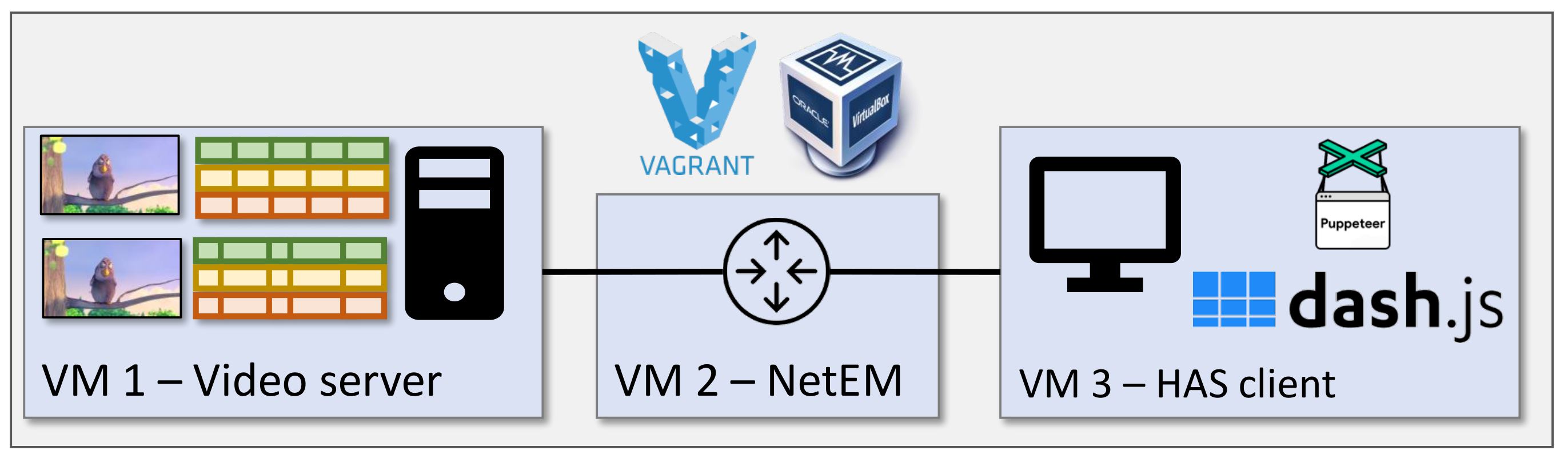

The setup consists of three virtual machines (VMs):

- The server, which hosts the video content

- The netem, which throttles the bandwidth, for example according to bandwidth traces

- The client, which uses DASH.js to stream the video

Step 1: Open a terminal and navigate into the subfolder `vagrant_files`. Run the following command to provision all VMs (this will take a few minutes):

`vagrant up`

With VMware Fusion on a Mac, use the following command instead:

`vagrant up --provider vmware_fusion`

All VMs are ready as soon as you see the following output:

`[nodemon] starting node ./bin/www`

Step 2: Open a second terminal and navigate again into the subfolder `vagrant_files`. Then continue with either Case 1 or Case 2.


Case 1: Performing a single measurement run:

Log in to the client VM by typing the following command:

`vagrant ssh client`

On the client VM, change the directory using the following command (note that the automation script described below runs `npm start` from the `client` subfolder of this directory):

`cd /home/vagrant/DASH-setup-local/`

In this directory, a measurement run can be initiated with the following command:

`npm start $browserDir $run_var $videoDir $host`

The parameters are as follows:

- `browserDir`: a directory on the client where Chrome settings and log files are stored
- `run_var`: a unique name for the experiment run
- `videoDir`: server-side path of the video to be streamed
- `host`: IP address of the server

For example, a single run can be initiated as follows:

`npm start /home/vagrant/browserDir test_run CBR_BBB_NA_10/playlist.mpd 192.167.101.13`
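To catch typos in the four parameters before a run is started, the command can be wrapped in a small helper. The following is a minimal POSIX `sh` sketch; the function name `start_single_run` and the `DRY_RUN` switch are hypothetical and not part of the repository, and it assumes it is executed on the client VM from the directory in which `npm start` works.

```sh
#!/bin/sh
# Hypothetical helper (not part of the repository): checks the four
# parameters of a single measurement run, then starts it via npm.
# With DRY_RUN=1 the command is only printed, not executed.
start_single_run() {
    if [ "$#" -ne 4 ]; then
        echo "usage: start_single_run browserDir run_var videoDir host" >&2
        return 2
    fi
    browser_dir=$1 run_var=$2 video_dir=$3 host=$4

    # videoDir is expected to point to an MPD manifest, e.g. .../playlist.mpd
    case "$video_dir" in
        *.mpd) ;;
        *) echo "videoDir should end in .mpd: $video_dir" >&2; return 1 ;;
    esac

    # Rough IPv4 check: only digits and dots, no empty groups,
    # exactly four groups, each at most 255.
    case "$host" in
        ''|*[!0-9.]*|*..*|.*|*.)
            echo "host does not look like an IPv4 address: $host" >&2; return 1 ;;
    esac
    old_ifs=$IFS; IFS=.
    set -- $host
    IFS=$old_ifs
    if [ "$#" -ne 4 ]; then
        echo "host does not look like an IPv4 address: $host" >&2; return 1
    fi
    for octet in "$@"; do
        if [ "$octet" -gt 255 ]; then
            echo "host does not look like an IPv4 address: $host" >&2; return 1
        fi
    done

    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "npm start $browser_dir $run_var $video_dir $host"
    else
        npm start "$browser_dir" "$run_var" "$video_dir" "$host"
    fi
}
```

For instance, `DRY_RUN=1 start_single_run /home/vagrant/browserDir test_run CBR_BBB_NA_10/playlist.mpd 192.167.101.13` only prints the resulting `npm start` command; without `DRY_RUN` the run is started.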

Case 2: Performing a set of measurement runs using the automation script:

The script `experiment_startup.sh` in this repository performs 8 measurements in total: it uses the 2 traces from the `test_traces` folder, performs two runs per trace, and streams both the variably segmented and the fixed segmented version of the BBB video (2 traces × 2 runs × 2 videos = 8). If necessary, adapt `experiment_startup.sh` to your needs (more details on the script can be found below). From the `vagrant_files` directory, run the following command on your host machine to start the script:

`bash experiment_startup.sh`
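The total of 8 measurements results from three nested loops over traces, runs, and video versions. The sketch below only enumerates these combinations, so a schedule can be checked before the VMs are busy for hours. The function name `plan_measurements` and the trace names are hypothetical placeholders, the video folder names are taken from the examples in this README, and the loop order is an assumption that may differ from `experiment_startup.sh`.

```sh
#!/bin/sh
# Sketch: enumerate the measurement schedule (trace x run x video).
# Trace names are hypothetical placeholders; the loop order is an
# assumption and may differ from experiment_startup.sh.
plan_measurements() {
    traces=$1   # space-separated trace file names
    runs=$2     # number of runs per trace
    videos=$3   # space-separated video folders on the server
    for trace in $traces; do
        run=1
        while [ "$run" -le "$runs" ]; do
            for video in $videos; do
                echo "trace=$trace run=$run video=$video"
            done
            run=$((run + 1))
        done
    done
}
```

Calling `plan_measurements "trace_a trace_b" 2 "CBR_BBB_VAR_10 CBR_BBB_NA_10"` prints one line per measurement, 8 lines in total.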

Step 3: As soon as a measurement run is finished, its log file can be found in the following directory on the host machine: `DASH-setup/client/logs`.

Step 4: To destroy all VMs for cleanup, run:

`vagrant destroy`

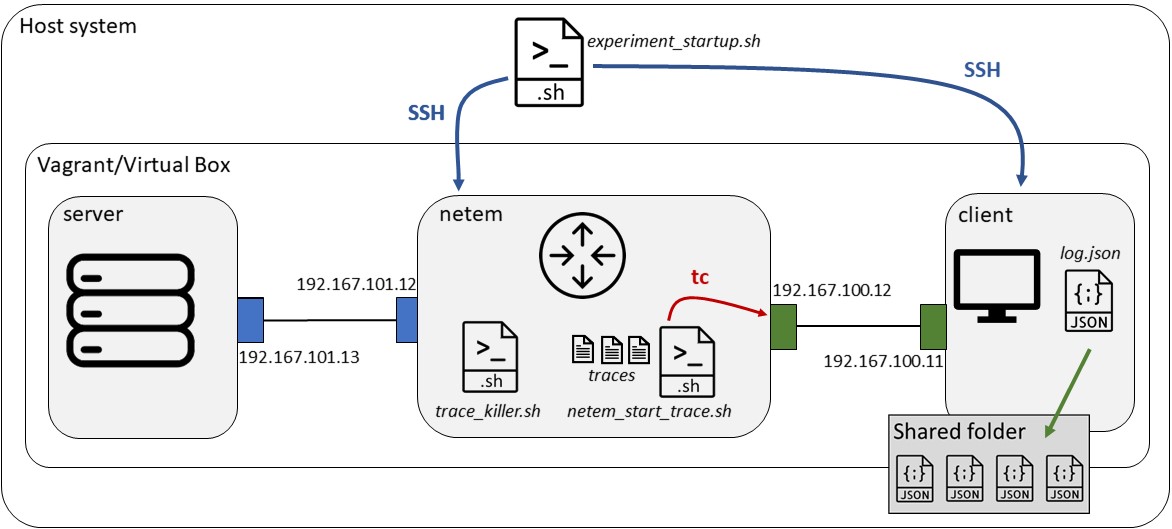

Running `vagrant up` on the host machine automatically provisions the server, netem, and client. The configuration of these machines can be changed in the `Vagrantfile`, as well as in the respective provisioning scripts `setup_server.sh`, `setup_netem.sh`, and `setup_client.sh`. Running `experiment_startup.sh` initiates the automated measurements.


Key lines of `experiment_startup.sh`:

- Line 6: specifies the number of runs to be performed for each trace.

- Line 20: `vagrant ssh netem -- -t bash trace_killer.sh` ensures that no old traces, e.g., from previous measurement runs, are active. The script `trace_killer.sh` is located on the netem; via the SSH command, the host machine triggers the netem to perform the cleanup.

- Line 23: `vagrant ssh netem -- -t timeout 1500s bash netem_start_trace.sh trace_files/$trace_folder/$tr &` Via SSH, the host machine triggers the netem to start limiting the bandwidth according to the values specified in the trace file, using Linux traffic control (`tc`). The trailing `&` runs this command in the background, so the script can continue and start the client while the trace is being replayed; `timeout 1500s` terminates the trace script after at most 1500 seconds.

- Line 26: `vagrant ssh client -- -t "(cd /home/vagrant/DASH-setup-local/client && timeout $vid_timeout npm start $browserDir $run_var $videoDirVAR $host)"` Via SSH, the client is instructed to start the video stream with the specified parameters. The process is started such that it quits after the time specified in `$vid_timeout`. This ensures that the measurement procedure does not get stuck if an error occurs during a run (e.g., a timeout event from DASH.js or a 404 error).

As soon as one video has been streamed completely, the client stores the `.json` log file in a folder that is shared with the host machine (see Step 3).
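The three SSH commands described above make up one measurement iteration. The sketch below strings them together in the same order for a single trace and video; it is a simplified, hypothetical reconstruction (the helper names `run_cmd` and `measure_once` and the `DRY_RUN` switch are illustrative), not the actual script, which additionally loops over traces, runs, and videos and sets `browserDir`, `host`, and `vid_timeout` itself. Here these three values are assumed to come from the environment.

```sh
#!/bin/sh
# Hypothetical, simplified reconstruction of one measurement iteration of
# experiment_startup.sh, run on the host machine from vagrant_files.
# Expects browserDir, host and vid_timeout to be set in the environment.
# With DRY_RUN=1 the commands are printed instead of executed.
run_cmd() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

measure_once() {
    if [ "$#" -ne 4 ]; then
        echo "usage: measure_once trace_folder trace_file videoDir run_var" >&2
        return 2
    fi
    # tr mirrors the $tr variable used in the original script.
    trace_folder=$1 tr=$2 videoDirVAR=$3 run_var=$4
    if [ -z "${browserDir:-}" ] || [ -z "${host:-}" ] || [ -z "${vid_timeout:-}" ]; then
        echo "please set browserDir, host and vid_timeout" >&2
        return 1
    fi
    # 1) Remove traces that may still be active from a previous run.
    run_cmd vagrant ssh netem -- -t bash trace_killer.sh
    # 2) Replay the bandwidth trace on the netem in the background (max. 1500 s).
    run_cmd vagrant ssh netem -- -t timeout 1500s bash netem_start_trace.sh \
        "trace_files/$trace_folder/$tr" &
    # 3) Stream the video on the client; timeout keeps a failed run from blocking.
    run_cmd vagrant ssh client -- -t \
        "(cd /home/vagrant/DASH-setup-local/client && timeout $vid_timeout npm start $browserDir $run_var $videoDirVAR $host)"
}
```

In dry-run mode the line for step 2 may appear after step 3, because it is printed by a background job.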

In the following, we describe how the setup can be customized.

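To adapt the ABR strategy and the buffer thresholds, navigate to `DASH-setup/server/public/javascripts` and open the file `player.js`. The parameters are set in line 20:

`player.updateSettings({'streaming': {stableBufferTime: 30, bufferTimeAtTopQuality: 45, abr:{ABRStrategy: 'abrDynamic'}}});`

Here, `stableBufferTime` (30 s) is the buffer level DASH.js aims for during normal playback, and `bufferTimeAtTopQuality` (45 s) is the buffer target once the highest quality is being played.

DASH.js provides three different ABR strategies:

- `abrDynamic` (default, hybrid solution)
- `abrBola` (buffer-based)
- `abrThroughput` (rate-based)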

Please find more information regarding different buffer threshold settings and other player/ABR settings here.
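When comparing ABR strategies across several experiments, the `ABRStrategy` value in `player.js` can also be switched from a script. The helper below is a hypothetical sketch (`set_abr_strategy` is not part of the repository); it assumes the setting is written exactly as `ABRStrategy: '<name>'` with single quotes, as in the line shown above, and writes to a temporary file instead of using `sed -i`, whose option syntax differs between GNU and BSD/macOS `sed`.

```sh
#!/bin/sh
# Hypothetical helper: replace the ABRStrategy value in player.js.
# Assumes the setting is written as ABRStrategy: '<name>' (single quotes).
set_abr_strategy() {
    file=$1
    strategy=$2
    # Accept only the three strategies named in this README.
    case "$strategy" in
        abrDynamic|abrBola|abrThroughput) ;;
        *) echo "unknown ABR strategy: $strategy" >&2; return 1 ;;
    esac
    if ! grep -q "ABRStrategy: '[A-Za-z]*'" "$file"; then
        echo "no ABRStrategy setting found in $file" >&2
        return 1
    fi
    # Write the edited copy to a temporary file, then replace the original.
    tmp="$file.tmp.$$"
    sed "s/ABRStrategy: '[A-Za-z]*'/ABRStrategy: '$strategy'/" "$file" > "$tmp" &&
        mv "$tmp" "$file"
}
```

Example: `set_abr_strategy DASH-setup/server/public/javascripts/player.js abrBola`. If the player is upgraded to a newer DASH.js release, check its settings reference first, since the structure of `updateSettings` has changed between major versions.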

Traces: The automation script `experiment_startup.sh` performs measurement runs for all available traces in the specified trace folder. Hence, if measurements should be run with a specific set of traces, these traces simply need to be put into the respective directory in `vagrant_files/trace_files`.
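Because every file in the trace folder is used, it can help to check which traces a run will pick up before starting it. A minimal sketch, assuming each trace is a regular file directly inside a folder below `vagrant_files/trace_files` (the helper name `list_traces` is hypothetical, and the exact file selection in `experiment_startup.sh` may differ):

```sh
#!/bin/sh
# Hypothetical helper: print the names of all regular files in a trace
# folder, in the shell's glob (alphabetical) order; subfolders are skipped.
list_traces() {
    dir=$1
    if [ ! -d "$dir" ]; then
        echo "not a directory: $dir" >&2
        return 1
    fi
    for f in "$dir"/*; do
        [ -f "$f" ] && basename "$f"
    done
    return 0
}
```

Example: `list_traces vagrant_files/trace_files/test_traces`.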

Videos: All test videos located in the folder `DASH-setup/public/videos` will be available for streaming at the server. In our setup, we used the following structure for the video sequences:

- `videos/`
  - `CBR_BBB_VAR_10/`
    - `playlist.mpd`
    - `quality_0/`: `quality0.mpd`, `init_stream0.m4s`, `chunk1.m4s`, `chunk2.m4s`, `chunk3.m4s`
    - `quality_1/`: `quality1.mpd`, `init_stream1.m4s`, `chunk1.m4s`, `chunk2.m4s`, `chunk3.m4s`
    - `quality_2/`: `quality2.mpd`, `init_stream2.m4s`, `chunk1.m4s`, `chunk2.m4s`, `chunk3.m4s`
  - `CBR_BBB_NA_10/`
    - `playlist.mpd`
    - `quality_0/`: `quality0.mpd`, `init_stream0.m4s`, `chunk1.m4s`, `chunk2.m4s`, `chunk3.m4s`
    - `quality_1/`: `quality1.mpd`, `init_stream1.m4s`, `chunk1.m4s`, `chunk2.m4s`, `chunk3.m4s`
    - `quality_2/`: `quality2.mpd`, `init_stream2.m4s`, `chunk1.m4s`, `chunk2.m4s`, `chunk3.m4s`
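Before adding a new sequence, its folder can be checked against the layout above. The sketch below is a hypothetical helper (`check_video_dir` is not part of the repository); it assumes that every `quality_N` subfolder must contain `qualityN.mpd`, `init_streamN.m4s`, and at least one `chunk*.m4s` file, which is the pattern of the example structure rather than a documented requirement of the setup.

```sh
#!/bin/sh
# Hypothetical helper: check that a video folder follows the example
# layout: playlist.mpd at the top, and in every quality_N subfolder a
# qualityN.mpd, an init_streamN.m4s and at least one chunk*.m4s file.
# Prints one line per problem and returns non-zero if any was found.
check_video_dir() {
    dir=$1
    status=0
    if [ ! -f "$dir/playlist.mpd" ]; then
        echo "missing: $dir/playlist.mpd"
        status=1
    fi
    found=0
    for q in "$dir"/quality_*; do
        [ -d "$q" ] || continue
        found=1
        n=${q##*/quality_}    # quality index, e.g. 0 for quality_0
        for f in "quality$n.mpd" "init_stream$n.m4s"; do
            if [ ! -f "$q/$f" ]; then
                echo "missing: $q/$f"
                status=1
            fi
        done
        set -- "$q"/chunk*.m4s
        if [ ! -f "$1" ]; then
            echo "no chunk*.m4s files in $q"
            status=1
        fi
    done
    if [ "$found" -eq 0 ]; then
        echo "no quality_* folders in $dir"
        status=1
    fi
    return "$status"
}
```

Example: `check_video_dir DASH-setup/public/videos/CBR_BBB_NA_10`.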

During the measurement runs, the bandwidth is automatically throttled at the netem according to the values specified in the trace files. If the bandwidth should instead be kept fixed, follow these steps:

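Step 1: Log in to the netem:

`vagrant ssh netem`

Step 2: Add a hierarchical token bucket (HTB) queueing discipline (qdisc) as the root qdisc of interface `eth2`; `default 1` directs all unclassified traffic to class `1:1`:

`sudo tc qdisc add dev eth2 root handle 1: htb default 1`

Step 3: Set the bandwidth limit on `eth2` by creating class `1:1` with the desired rate. The rate is given in `tc` units: `1000kbit` means 1000 kbit/s (1 Mbit/s), whereas `kbps` in `tc` syntax denotes kilobytes per second:

`sudo tc class add dev eth2 parent 1: classid 1:1 htb rate 1000kbit`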
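The three steps above can be wrapped into small helpers on the netem, for example to switch between several fixed rates. The sketch below is hypothetical (the function names, the `IFACE` variable, and the `DRY_RUN` switch are illustrative); it reuses the `tc` commands from this section, uses `tc class change` to adjust the rate of the existing class, and `tc qdisc del` to remove the limit again. It assumes no trace is currently being replayed on the netem.

```sh
#!/bin/sh
# Hypothetical helpers for the netem VM: apply, change, or remove a fixed
# bandwidth limit using the tc commands from this section.
# Rates use tc units, e.g. 1000kbit (1 Mbit/s) or 5mbit (5 Mbit/s).
# With DRY_RUN=1 the tc commands are printed instead of executed.
IFACE=${IFACE:-eth2}

tc_run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "sudo tc $*"
    else
        sudo tc "$@"
    fi
}

# Steps 2 and 3: create the HTB root qdisc and the rate-limited class 1:1.
add_fixed_rate() {
    [ -n "${1:-}" ] || { echo "usage: add_fixed_rate RATE" >&2; return 1; }
    tc_run qdisc add dev "$IFACE" root handle 1: htb default 1 &&
        tc_run class add dev "$IFACE" parent 1: classid 1:1 htb rate "$1"
}

# Change the rate of the existing class 1:1 without recreating the qdisc.
change_fixed_rate() {
    [ -n "${1:-}" ] || { echo "usage: change_fixed_rate RATE" >&2; return 1; }
    tc_run class change dev "$IFACE" parent 1: classid 1:1 htb rate "$1"
}

# Remove the limit again; deleting the root qdisc also removes its classes.
clear_rate() {
    tc_run qdisc del dev "$IFACE" root
}
```

For example, `DRY_RUN=1 add_fixed_rate 1000kbit` prints exactly the two commands from Steps 2 and 3; afterwards, `change_fixed_rate 2mbit` raises the limit to 2 Mbit/s and `clear_rate` removes it.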

- The measurements are performed using the DASH reference client implementation DASH.js

- Puppeteer was used to run DASH.js within the Chrome browser in headless mode

- The QoE is evaluated according to the standardized ITU-T P.1203 model

If you experience problems or have any questions on the measurement setup, please contact Susanna Schwarzmann (susanna@inet.tu-berlin.de)