Haoji Zhang*, Yiqin Wang*, Yansong Tang †, Yong Liu, Jiashi Feng, Jifeng Dai, Xiaojie Jin†‡

* Equally contributing first authors, †Correspondence, ‡Project Lead

Work done during an internship at ByteDance.

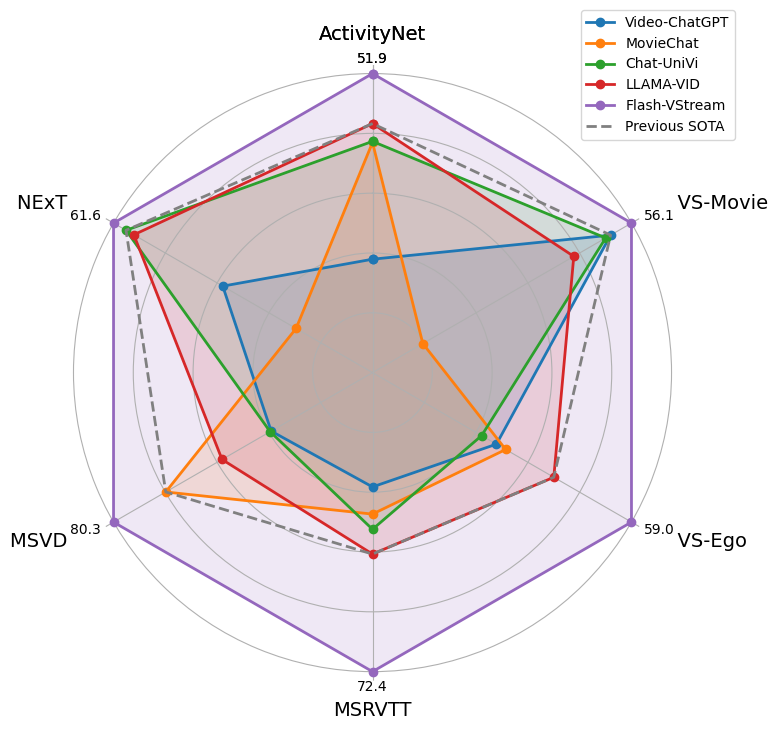

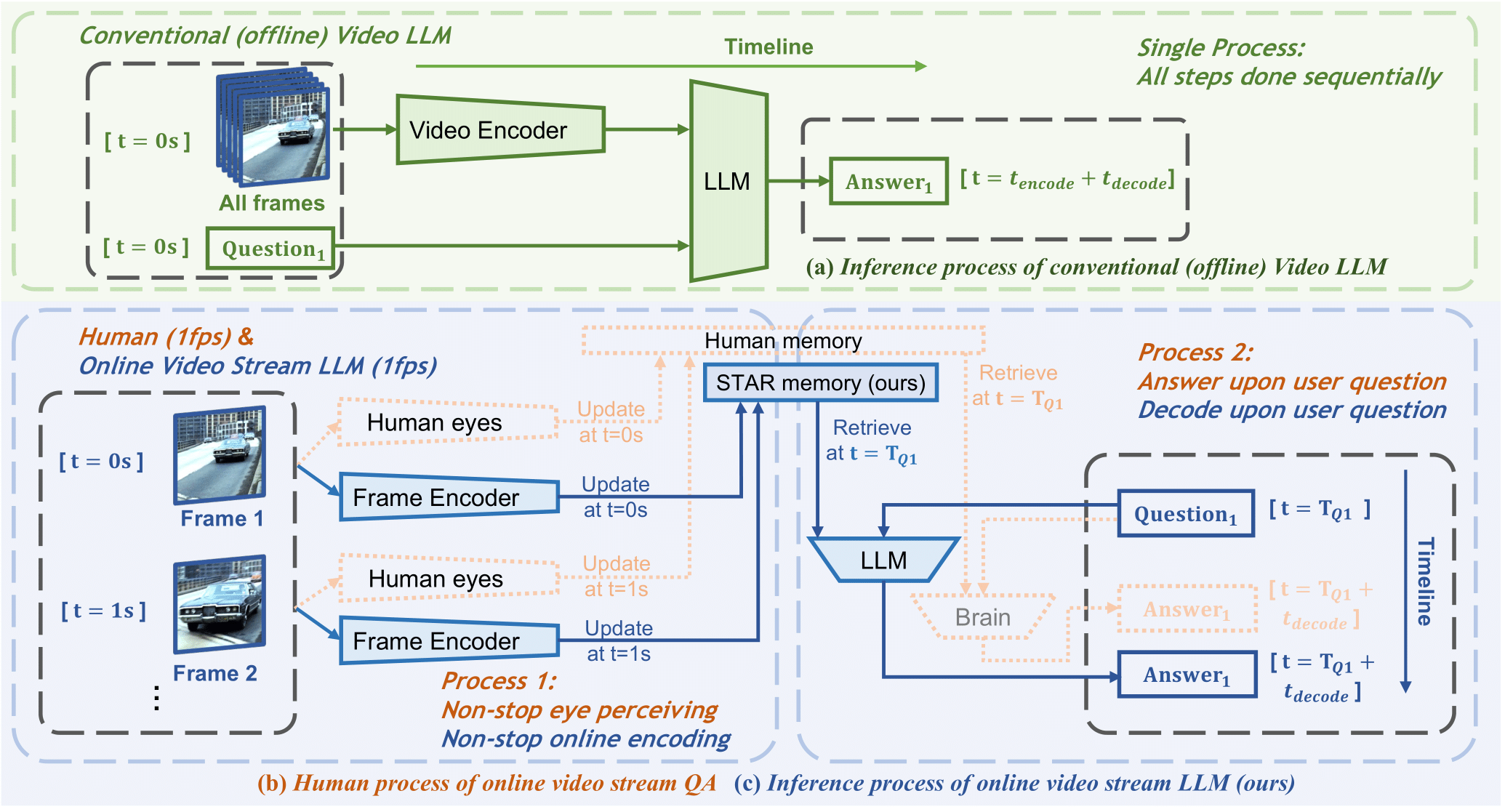

We present Flash-VStream, a novel LMM that can process extremely long video streams in real time while responding to user queries simultaneously.

We also propose VStream-QA, a novel question answering benchmark specifically designed for online video streaming understanding.

- [2024/06/15] 🏅 Our team won 1st place in the Long-Term Video Question Answering Challenge of the LOVEU Workshop@CVPR'24. Here is our certification. We used a Hierarchical Memory model based on Flash-VStream-7b.

- [2024/06/12] 🔥 Flash-VStream is coming! We release the homepage, paper, code, and model for Flash-VStream, as well as the dataset for the VStream-QA benchmark.

- Install

- Model

- Preparation

- Train

- Evaluation

- Real-time CLI Inference

- VStream-QA Benchmark

- Citation

- Acknowledgement

- License

Please follow the instructions below to install the required packages.

- Clone this repository

- Install Package

conda create -n vstream python=3.10 -y

conda activate vstream

cd Flash-VStream

pip install --upgrade pip

pip install -e .

- Install additional packages for training cases

pip install ninja

pip install flash-attn --no-build-isolation

We provide the Flash-VStream model obtained after Stage 1 (pretraining) and Stage 2 (finetuning):

| Model | Weight | Initialized from LLM | Initialized from ViT |
|---|---|---|---|
| Flash-VStream-7b | Flash-VStream-7b | lmsys/vicuna-7b-v1.5 | openai/clip-vit-large-patch14 |

Image VQA Dataset.

Please organize the Image VQA training data following this and the evaluation data following this.

Please put the pretraining data, finetuning data, and evaluation data in the pretrain, finetune, and eval_video folders, respectively, following Structure.

Video VQA Dataset. Please download the 2.5M subset of WebVid and the ActivityNet dataset from their official websites or from Video-ChatGPT.

To run evaluation, please also download the corresponding files of ActivityNet-QA and NExT-QA-OE. MSVD-QA and MSRVTT-QA can be downloaded from LLaMA-VID.

Meta Info. For the meta info of the training data, please download the following files and organize them as shown in Structure.

| Training Stage | Data file name | Size |
|---|---|---|
| Pretrain | llava_558k_with_webvid.json | 254 MB |
| Finetune | llava_v1_5_mix665k_with_video_chatgpt.json | 860 MB |

For the meta info of the evaluation data, please convert each QA list into a JSON file named test_qa.json, placed as shown in Structure, in the following format:

[

{

"video_id": "v_1QIUV7WYKXg",

"question": "is the athlete wearing trousers",

"id": "v_1QIUV7WYKXg_3",

"answer": "no",

"answer_type": 3,

"duration": 9.88

},

{

"video_id": "v_9eniCub7u60",

"question": "does the girl in black clothes have long hair",

"id": "v_9eniCub7u60_2",

"answer": "yes",

"answer_type": 3,

"duration": 19.43

}

]

We recommend downloading the pretrained weights from the following links, Vicuna-7b-v1.5 and clip-vit-large-patch14, and putting them in ckpt following Structure.
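Before evaluation, each test_qa.json can be sanity-checked against the format shown above. The following is a minimal sketch using only the Python standard library. The function names `validate_test_qa` and `validate_file` are hypothetical (not part of the Flash-VStream codebase), and treating every field of the example entries as required, with the types shown there, is an assumption of this sketch.

```python
import json
from typing import Any

# Fields and types taken from the example entries above.
# Assumption: every field is required for every entry.
REQUIRED_FIELDS = {
    "video_id": str,
    "question": str,
    "id": str,
    "answer": str,
    "answer_type": int,
    "duration": (int, float),
}


def validate_test_qa(entries: Any) -> list[str]:
    """Return human-readable problems; an empty list means the data looks OK."""
    if not isinstance(entries, list):
        return ["top level must be a JSON array"]
    problems = []
    seen_ids = set()
    for i, entry in enumerate(entries):
        if not isinstance(entry, dict):
            problems.append(f"entry {i}: not a JSON object")
            continue
        for field, expected in REQUIRED_FIELDS.items():
            if field not in entry:
                problems.append(f"entry {i}: missing '{field}'")
            # bool is a subclass of int in Python, so reject it explicitly.
            elif isinstance(entry[field], bool) or not isinstance(entry[field], expected):
                problems.append(f"entry {i}: '{field}' has type {type(entry[field]).__name__}")
        qa_id = entry.get("id")
        if isinstance(qa_id, str):
            if qa_id in seen_ids:
                problems.append(f"entry {i}: duplicate id '{qa_id}'")
            seen_ids.add(qa_id)
    return problems


def validate_file(path: str) -> list[str]:
    """Load a test_qa.json file and validate it."""
    with open(path, encoding="utf-8") as f:
        return validate_test_qa(json.load(f))
```

For example, `validate_file("data/eval_video/ActivityNet-QA/test_qa.json")` returns an empty list when the file matches the expected format.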

We recommend extracting the ViT features of the training and evaluation data in advance, which greatly speeds up training and evaluation. If you do so, replace .mp4 with .safetensors in each video filename and put the feature files in the image_features and video_features folders. Otherwise, you can ignore the image_features and video_features folders.
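If you pre-extract features, you can check a file's tensor shape without loading PyTorch by reading only its safetensors header. Per the safetensors format specification, a file starts with an 8-byte little-endian unsigned integer N, followed by N bytes of UTF-8 JSON that map each tensor name to its dtype, shape, and data_offsets (plus an optional `__metadata__` entry). The sketch below reads that header and checks the 'feature' tensor against the shapes this README expects: [256, 1024] for an image and [Length, 256, 1024] for a video. The function names are hypothetical and not part of Flash-VStream.

```python
import json
import struct


def read_safetensors_header(path: str) -> dict:
    """Parse only the JSON header of a .safetensors file; tensor data is not read."""
    with open(path, "rb") as f:
        (header_len,) = struct.unpack("<Q", f.read(8))  # little-endian u64 header size
        header = json.loads(f.read(header_len).decode("utf-8"))
    header.pop("__metadata__", None)  # optional free-form metadata, not a tensor
    return header


def check_feature_shape(path: str, is_video: bool) -> list:
    """Return the 'feature' shape, raising ValueError if it differs from the expected one."""
    header = read_safetensors_header(path)
    if "feature" not in header:
        raise ValueError(f"{path}: no 'feature' tensor, found {sorted(header)}")
    shape = header["feature"]["shape"]
    per_frame = [256, 1024]  # tokens x hidden size for one image or one video frame
    if is_video:
        ok = len(shape) == 3 and shape[0] > 0 and shape[1:] == per_frame
    else:
        ok = shape == per_frame
    if not ok:
        kind = "video" if is_video else "image"
        raise ValueError(f"{path}: unexpected {kind} feature shape {shape}")
    return shape
```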

Video features are loaded at fps=1 and arranged in temporal order.
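Loading features at fps=1 means keeping roughly one frame per second of video. One simple way to choose those frames, assuming you know the video's native frame rate and frame count, is to take the frame nearest to each whole second. The helper below is a hypothetical sketch of that nearest-frame idea, not necessarily how Flash-VStream's own extraction code samples frames.

```python
def frame_indices_at_fps(num_frames: int, native_fps: float, target_fps: float = 1.0) -> list[int]:
    """Frame indices to keep when resampling a video to target_fps, in time order."""
    if num_frames <= 0 or native_fps <= 0 or target_fps <= 0:
        return []
    duration = num_frames / native_fps  # video length in seconds
    indices = []
    k = 0
    # Sample timestamps 0, 1/target_fps, 2/target_fps, ... strictly before the end.
    while k / target_fps < duration:
        idx = min(round(k / target_fps * native_fps), num_frames - 1)
        if not indices or idx != indices[-1]:  # avoid duplicates if target_fps > native_fps
            indices.append(idx)
        k += 1
    return indices
```

For a 10-second clip at 30 fps (300 frames), this keeps frames 0, 30, ..., 270, giving Length = 10 in the feature shape.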

Each .safetensors file should contain a dict like this:

{

'feature': torch.Tensor() with shape=[256, 1024] for image and shape=[Length, 256, 1024] for video

}

The folder structure should be organized as follows before training:

Flash-VStream

├── checkpoints-finetune

├── checkpoints-pretrain

├── ckpt

│ ├── clip-vit-large-patch14

│ ├── vicuna-7b-v1.5

├── data

│ ├── pretrain

│ │ ├── llava_558k_with_webvid.json

│ │ ├── image_features

│ │ ├── images

│ │ ├── video_features

│ │ ├── videos

│ ├── finetune

│ │ ├── llava_v1_5_mix665k_with_video_chatgpt.json

│ │ ├── activitynet

│ │ ├── coco

│ │ ├── gqa

│ │ ├── image_features

│ │ │ ├── coco

│ │ │ ├── gqa

│ │ │ ├── ocr_vqa

│ │ │ ├── textvqa

│ │ │ ├── vg

│ │ ├── ocr_vqa

│ │ ├── textvqa

│ │ ├── vg

│ │ ├── video_features

│ │ │ ├── activitynet

│ ├── eval_video

│ │ ├── ActivityNet-QA

│ │ │ ├── video_features

│ │ │ ├── test_qa.json

│ │ ├── MSRVTT-QA

│ │ │ ├── video_features

│ │ │ ├── test_qa.json

│ │ ├── MSVD-QA

│ │ │ ├── video_features

│ │ │ ├── test_qa.json

│ │ ├── nextqa

│ │ │ ├── video_features

│ │ │ ├── test_qa.json

│ │ ├── vstream

│ │ │ ├── video_features

│ │ │ ├── test_qa.json

│ │ ├── vstream-realtime

│ │ │ ├── video_features

│ │ │ ├── test_qa.json

├── flash_vstream

├── scripts
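A quick way to confirm the layout above before launching training is to check that the key paths exist. This is a minimal sketch: `EXPECTED_PATHS` is a hand-picked subset of the tree above (the optional feature folders are left out), and `missing_paths` is a hypothetical helper, so adjust the list to the datasets you actually use.

```python
from pathlib import Path

# A subset of the layout shown above, relative to the Flash-VStream root.
EXPECTED_PATHS = [
    "ckpt/clip-vit-large-patch14",
    "ckpt/vicuna-7b-v1.5",
    "data/pretrain/llava_558k_with_webvid.json",
    "data/finetune/llava_v1_5_mix665k_with_video_chatgpt.json",
    "data/eval_video/ActivityNet-QA/test_qa.json",
    "data/eval_video/MSRVTT-QA/test_qa.json",
    "data/eval_video/MSVD-QA/test_qa.json",
    "data/eval_video/nextqa/test_qa.json",
]


def missing_paths(root: str, expected: list[str] = EXPECTED_PATHS) -> list[str]:
    """Return the expected paths that do not exist under root."""
    base = Path(root)
    return [p for p in expected if not (base / p).exists()]


if __name__ == "__main__":
    for p in missing_paths("."):
        print("missing:", p)
```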

Flash-VStream is trained on 8 A100 GPUs with 80GB of memory each. To train on fewer GPUs, you can reduce per_device_train_batch_size and increase gradient_accumulation_steps accordingly. Always keep the global batch size (per_device_train_batch_size × gradient_accumulation_steps × num_gpus) the same. If your GPUs have less than 80GB of memory, you may try the DeepSpeed ZeRO-2 or ZeRO-3 stage.
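The rule of keeping per_device_train_batch_size × gradient_accumulation_steps × num_gpus constant can be turned into a small helper for choosing gradient_accumulation_steps on a different GPU count. This is a hypothetical sketch; the global batch size of 128 in the example is an illustrative value, not necessarily the one used in Flash-VStream's scripts.

```python
def grad_accum_steps(global_batch_size: int, per_device_batch_size: int, num_gpus: int) -> int:
    """gradient_accumulation_steps that keeps the global batch size unchanged."""
    per_step = per_device_batch_size * num_gpus  # samples processed per forward/backward pass
    if per_step <= 0 or global_batch_size % per_step != 0:
        raise ValueError(
            f"global batch {global_batch_size} is not divisible by "
            f"per_device_batch_size x num_gpus = {per_step}"
        )
    return global_batch_size // per_step


# Illustrative: a global batch of 128 on 2 GPUs with 4 samples per GPU.
print(grad_accum_steps(128, 4, 2))  # → 16
```

Raising on a non-divisible combination, rather than rounding, keeps the effective global batch size exactly equal to the original.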

Please make sure you download and organize the data following Preparation before training.

Like LLaVA, Flash-VStream has two training stages: pretrain and finetune. Their checkpoints are saved in the checkpoints-pretrain and checkpoints-finetune folders, respectively. The two stages take about 15 hours in total on 8 A100 GPUs.

To train Flash-VStream from the pretrained LLM and then evaluate it, run the following command:

bash scripts/train_and_eval.sh

Please make sure you download and organize the data following Preparation before evaluation.

If you want to evaluate a Flash-VStream model, please run the following command:

bash scripts/eval.sh

We provide a real-time CLI inference script that simulates video stream input by reading the frames of a video file at a fixed frame rate. You can ask any question at any timestamp of the video stream and get an answer. Run the following command to try it:

bash scripts/realtime_cli.sh

Please download the VStream-QA benchmark following this repo.

If you find this project useful in your research, please consider citing:

@article{flashvstream,

title={Flash-VStream: Memory-Based Real-Time Understanding for Long Video Streams},

author={Haoji Zhang and Yiqin Wang and Yansong Tang and Yong Liu and Jiashi Feng and Jifeng Dai and Xiaojie Jin},

year={2024},

eprint={2406.08085},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

We would like to thank the following repos for their great work:

- This work is built upon LLaVA.

- This work uses LLMs from Vicuna.

- Some code is borrowed from LLaMA-VID.

- Our video-based evaluation follows Video-ChatGPT.

This project is licensed under the Apache-2.0 License.